Top AI Discoveries That Might Change The World (pt. 2)

Part 2 of exploring the latest AI research papers that are set to redefine the future of Artificial Intelligence.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 178th edition of The AI Edge newsletter. This edition features cutting-edge AI research papers from this quarter that are pushing the boundaries of what's achievable in AI.

Here’s a quick rundown:

🧪 OpenAI’s secret sauce behind DALL-E 3’s accuracy

🔍 LLM hallucination problem will be over with “Woodpecker”

🤗 Hugging Face released Zephyr-7b-beta, an open-access GPT-3.5 alternative

🎥 Microsoft’s New AI Advances Video Understanding with GPT-4V

🤖 Microsoft Research explains why Hallucination is necessary for LLMs!

🔥 Berkeley Research’s real-world humanoid locomotion

🤖 Alter3, a humanoid robot generating spontaneous motion using GPT-4

🚀 Google Research’s new approach to improve performance of LLMs

🎥 Meta’s 3D AI for everyday devices

💻 ByteDance presents DiffPortrait3D for zero-shot portrait view

🚀 Can a SoTA LLM run on a phone without internet?

Let’s go!

OpenAI’s secret sauce behind DALL-E 3’s accuracy

OpenAI published a paper on DALL-E 3, explaining why the new AI image generator follows prompts much more accurately than comparable systems.

Before training DALL-E 3 itself, OpenAI trained its own AI image labeler and used it to relabel the image dataset on which DALL-E 3 was then trained. During the relabeling process, OpenAI paid particular attention to detailed descriptions.

Why does this matter?

Controllability is still a challenge for image generation systems, which often overlook the words, word order, or meaning of a given caption. Improving captions is a new approach to that challenge. However, the image labeling innovation is only part of what's new in DALL-E 3, which has many improvements over DALL-E 2 that OpenAI has not disclosed.

LLM hallucination problem will be over with “Woodpecker”

Researchers from the University of Science and Technology of China and Tencent YouTu Lab have developed a framework called "Woodpecker" to correct hallucinations in multimodal large language models (MLLMs).

Woodpecker uses a training-free method to identify and correct hallucinations in the generated text. The framework goes through five stages: key concept extraction, question formulation, visual knowledge validation, visual claim generation, and hallucination correction.
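
To make the five stages concrete, here is a minimal toy sketch of how such a pipeline could be chained. It is not the authors' code: every function name is hypothetical, the "image" is just a dictionary of object counts standing in for a real visual model, and the final rewrite is a trivial template where the real framework would use an LLM.

```python
def extract_key_concepts(text, vocabulary):
    # Stage 1: find which known objects the generated text mentions.
    words = {w.strip(".,!?").lower() for w in text.split()}
    return sorted(words & vocabulary)


def formulate_questions(concepts):
    # Stage 2: turn each concept into a question about the image.
    return {c: f"Is there a {c} in the image?" for c in concepts}


def validate_visually(questions, scene):
    # Stage 3: answer each question. A real system would call a detector
    # or VQA model; here `scene` is a hypothetical dict of object counts.
    return {c: scene.get(c, 0) > 0 for c in questions}


def generate_claims(answers):
    # Stage 4: convert the answers into explicit visual claims.
    return [f"There is {'a' if ok else 'no'} {c}." for c, ok in answers.items()]


def correct(answers):
    # Stage 5: rewrite the text using only supported concepts (toy template).
    kept = [c for c, ok in answers.items() if ok]
    return "The image shows: " + ", ".join(kept) + "."


def woodpecker_sketch(text, scene, vocabulary):
    concepts = extract_key_concepts(text, vocabulary)
    questions = formulate_questions(concepts)
    answers = validate_visually(questions, scene)
    claims = generate_claims(answers)
    return correct(answers), claims


if __name__ == "__main__":
    corrected, claims = woodpecker_sketch(
        "A dog and a cat sit on a bench.",
        scene={"dog": 1, "bench": 1},
        vocabulary={"dog", "cat", "bench", "frisbee"},
    )
    print(claims)
    print(corrected)
```

Note that nothing here is trained: each stage only queries or transforms, which is the sense in which the approach is training-free.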

The researchers have released the source code and an interactive demo of Woodpecker for further exploration and application. The framework has shown promising results in boosting accuracy and addressing the problem of hallucinations in AI-generated text.

Why does this matter?

As MLLMs continue to evolve and improve, frameworks like this will matter more and more for keeping them accurate and reliable. Its open-source availability also promotes collaboration and development within the AI research community.

Hugging Face released Zephyr-7b-beta, an open-access GPT-3.5 alternative

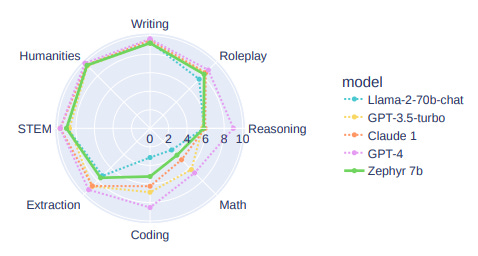

The latest Zephyr-7b-beta by Hugging Face’s H4 team tops all 7B models on chat evals and even beats models 10x larger. It is as good as ChatGPT on AlpacaEval and outperforms Llama2-Chat-70B on MT-Bench.

Zephyr 7B is a series of chat models based on:

Mistral 7B base model

The UltraChat dataset with 1.4M dialogues from ChatGPT

The UltraFeedback dataset with 64k prompts & completions judged by GPT-4

Here's what the process looks like:

Why does this matter?

Notably, unlike other approaches, this one requires no human annotation and no sampling. Moreover, because the base LM is small, the resulting chat model can be trained in hours on 16 A100s (80GB). You can run it locally without the need to quantize.
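
A quick back-of-the-envelope calculation shows why a 7B model is plausible to run locally without quantization. The sketch below counts only the memory for the weights and assumes a nominal 7 billion parameters (the exact count is an assumption); activations and the KV cache add more on top.

```python
def weight_memory_gib(num_params, bytes_per_param):
    """Memory needed to hold the weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30


NOMINAL_PARAMS = 7e9  # assumption: "7B" taken at face value

if __name__ == "__main__":
    for name, nbytes in [("fp32", 4), ("fp16/bf16", 2), ("4-bit", 0.5)]:
        gib = weight_memory_gib(NOMINAL_PARAMS, nbytes)
        print(f"{name:>10}: {gib:.1f} GiB")
```

At 16-bit precision the weights come to roughly 13 GiB, which is within reach of a single high-memory consumer GPU, whereas a 70B model at the same precision would need about ten times as much.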

This is an exciting milestone for developers as it would dramatically reduce concerns over cost/latency while also allowing them to experiment and innovate with GPT alternatives.

Microsoft’s New AI Advances Video Understanding with GPT-4V

A paper by Microsoft Azure AI introduces “MM-VID”, a system that combines GPT-4V with specialized tools in vision, audio, and speech to enhance video understanding. MM-VID addresses challenges in analyzing long-form videos and complex tasks like understanding storylines spanning multiple episodes.

Experimental results show MM-VID's effectiveness across different video genres and lengths. It uses GPT-4V to transcribe multimodal elements into a detailed textual script, enabling advanced capabilities like audio description and character identification.

Why does this matter?

Improved video understanding can make content more enjoyable for all viewers. Also, MM-VID's impact can be seen in inclusive media consumption, interactive gaming experiences, and user-friendly interfaces, making technology more accessible and useful in our daily lives.

Microsoft Research explains why Hallucination is necessary for LLMs!

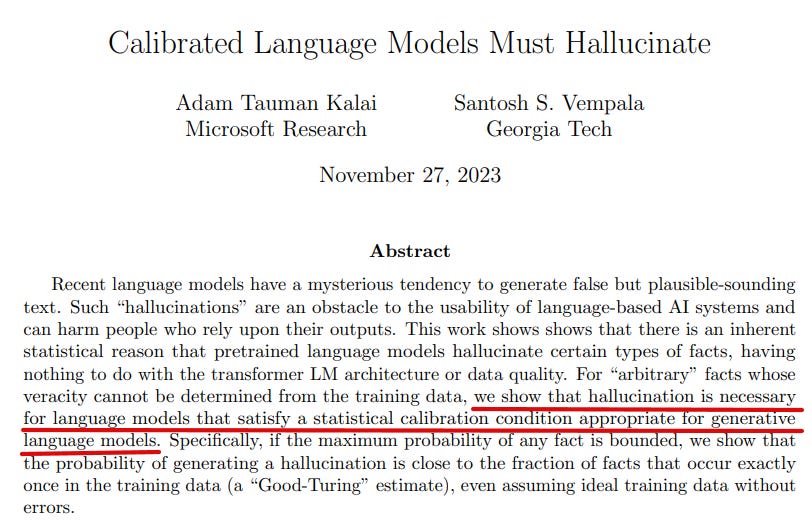

Microsoft Research and four others report that there is a statistical reason behind these hallucinations, unrelated to the model architecture or data quality. For arbitrary facts that cannot be verified from the training data, hallucination is necessary for language models that satisfy a statistical calibration condition.

However, the analysis suggests that pretraining does not lead to hallucinations on facts that appear more than once in the training data or on systematic facts. Different architectures and learning algorithms may help mitigate these types of hallucinations.
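
One way to get a feel for the "appears more than once" distinction is to measure how much of a corpus consists of facts seen exactly once. The sketch below computes that fraction, a Good-Turing-style estimate; it is an illustration under our own assumptions (facts represented as plain strings, hypothetical function name), not the paper's exact bound.

```python
from collections import Counter


def singleton_fraction(observed_facts):
    """Fraction of observations that are facts seen exactly once."""
    if not observed_facts:
        return 0.0
    counts = Counter(observed_facts)
    singles = sum(1 for n in counts.values() if n == 1)
    return singles / len(observed_facts)


if __name__ == "__main__":
    # Hypothetical toy corpus: "a" and "c" repeat, "b" and "d" appear once.
    corpus = ["a", "a", "b", "c", "c", "c", "d"]
    print(singleton_fraction(corpus))
```

The intuition is that a fact seen only once looks, statistically, much like a plausible fact never seen at all, so a calibrated model has little basis for telling the two apart.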

Why does this matter?

This research sheds light on why hallucinations occur. It suggests that some facts cannot be verified from the training data, and that a language model meeting a statistical calibration condition must hallucinate on such facts.

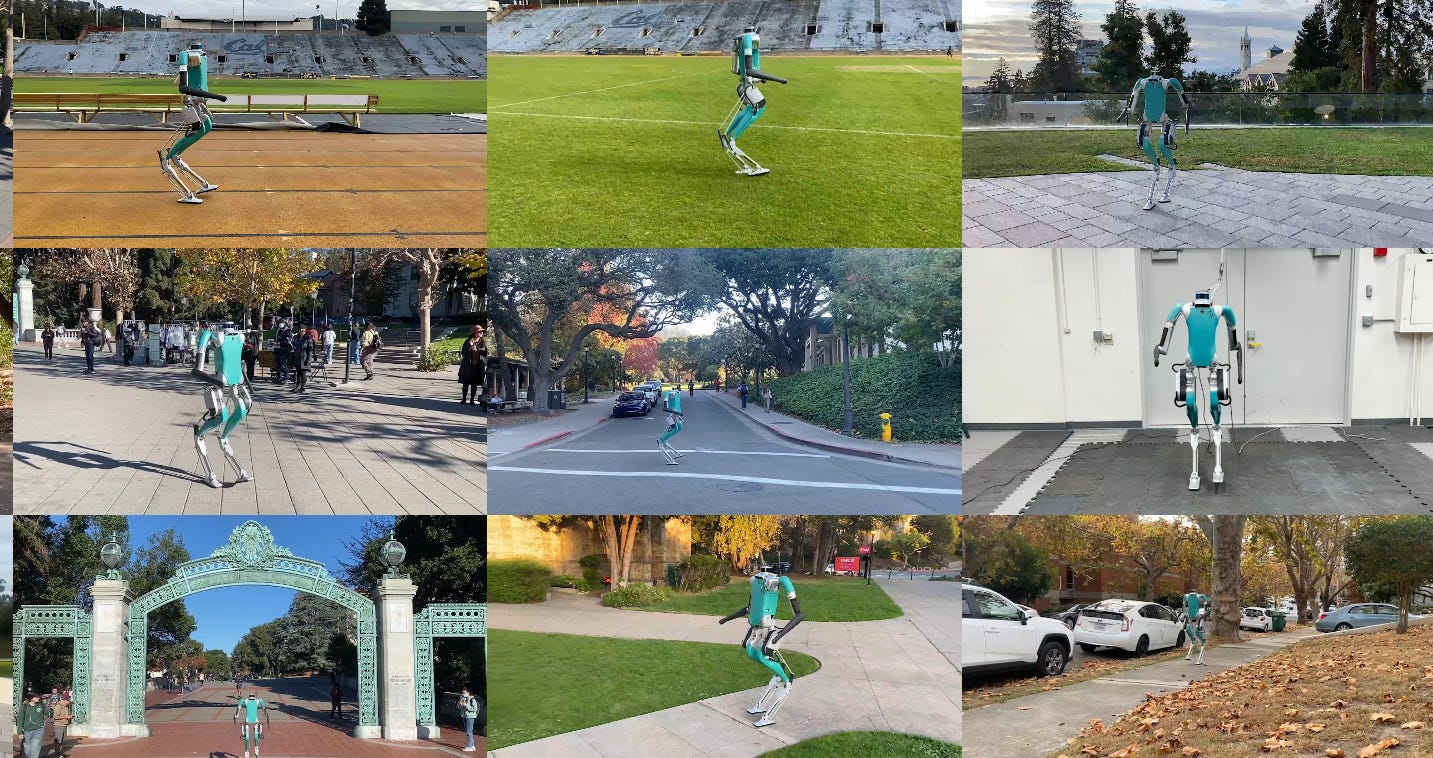

Berkeley Research’s real-world humanoid locomotion

Berkeley Research has released a new paper that discusses a learning-based approach for humanoid locomotion, which has the potential to address labor shortages, assist the elderly, and explore new planets. The controller used is a Transformer model that predicts future actions based on past observations and actions.

The model is trained using large-scale reinforcement learning in simulation, allowing for parallel training across multiple GPUs and thousands of environments.
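
The key idea of conditioning on history can be sketched without any ML library. Below, a fixed-length window of past (observation, action) pairs is fed to a policy at every step. The Transformer is replaced by a trivial stand-in function, and the class name, window length, and gain are all hypothetical choices for illustration.

```python
from collections import deque


class HistoryController:
    """Feeds a sliding window of (observation, previous action) pairs to a policy."""

    def __init__(self, policy, context_len, action_dim):
        self.policy = policy
        self.history = deque(maxlen=context_len)  # oldest entries drop off
        self.prev_action = [0.0] * action_dim

    def step(self, observation):
        # Pair the new observation with the action that preceded it.
        self.history.append((list(observation), list(self.prev_action)))
        action = self.policy(list(self.history))
        self.prev_action = list(action)
        return action


def mean_feedback_policy(window):
    # Stand-in for the Transformer: push against the mean observation.
    dims = len(window[0][0])
    means = [sum(obs[i] for obs, _ in window) / len(window) for i in range(dims)]
    return [-0.5 * m for m in means]


if __name__ == "__main__":
    controller = HistoryController(mean_feedback_policy, context_len=2, action_dim=1)
    for obs in ([2.0], [4.0], [6.0]):
        print(controller.step(obs))
```

In a learned controller the stand-in would be a trained network, and in simulation many copies of this loop would run in parallel, one per environment.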

Why does this matter?

Berkeley Research's novel approach to humanoid locomotion could have vast real-world implications. This innovation holds promise for addressing labor shortages, aiding the elderly, and much more.

We need your help!

We are working on a Gen AI survey and would love your input.

It takes just 2 minutes.

The survey insights will help us both.

And hey, you might also win a $100 Amazon gift card!

Every response counts. Thanks in advance!

Alter3, a humanoid robot generating spontaneous motion using GPT-4

Researchers from Tokyo integrated GPT-4 into their proprietary android, Alter3, thereby effectively grounding the LLM with Alter's bodily movement.

Typically, low-level robot control is hardware-dependent and falls outside the scope of LLM corpora, presenting challenges for direct LLM-based robot control. However, in the case of humanoid robots like Alter3, direct control is feasible by mapping the linguistic expressions of human actions onto the robot's body through program code.

Remarkably, this approach enables Alter3 to adopt various poses, such as a 'selfie' stance or 'pretending to be a ghost,' and generate sequences of actions over time without explicit programming for each body part. This demonstrates the robot's zero-shot learning capabilities. Additionally, verbal feedback can adjust poses, obviating the need for fine-tuning.

Why does this matter?

It signifies a step forward in AI-driven robotics. It can foster the development of more intuitive, responsive, and versatile robotic systems that can understand human instructions and dynamically adapt their actions. Advances in this area could revolutionize diverse fields, from service robotics to manufacturing, healthcare, and beyond.

Google Research’s new approach to improve LLM performance

Google Research released a new approach to improve the performance of LLMs at answering complex natural language questions. The approach combines knowledge retrieval with the LLM and uses a ReAct-style agent that can reason and act upon external knowledge.

The agent is refined through a ReST-like method that iteratively trains on previous trajectories, using reinforcement learning and AI feedback for continuous self-improvement. After just two iterations, a fine-tuned small model is produced that achieves comparable performance to the large model but with significantly fewer parameters.
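
For readers unfamiliar with the pattern, here is a minimal sketch of a ReAct-style loop: the agent alternates between choosing an action and observing the result until it decides to answer. The LLM is replaced by a scripted policy, the knowledge base is a two-entry dictionary, and all names are hypothetical. The recorded trajectory is the kind of data a ReST-like method would later train on.

```python
# Hypothetical toy knowledge base standing in for a retrieval tool.
KNOWLEDGE = {
    "capital of france": "Paris",
    "river through paris": "Seine",
}


def search(query):
    return KNOWLEDGE.get(query.lower(), "no result")


def scripted_policy(question, trajectory):
    # Stand-in for the LLM: pick the next step from what has been observed.
    if not trajectory:
        return ("search", "capital of France")
    if len(trajectory) == 1:
        city = trajectory[0][2]
        return ("search", f"river through {city}")
    return ("finish", trajectory[-1][2])


def react(question, policy, max_steps=5):
    trajectory = []  # list of (action, argument, observation)
    for _ in range(max_steps):
        kind, arg = policy(question, trajectory)
        if kind == "finish":
            return arg, trajectory
        observation = search(arg)
        trajectory.append((kind, arg, observation))
    return None, trajectory  # gave up within the step budget


if __name__ == "__main__":
    answer, steps = react(
        "Which river flows through the capital of France?", scripted_policy
    )
    print(answer)
    for step in steps:
        print(step)
```

The second search depends on the result of the first, which is the multi-step behavior that retrieval alone would not give you.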

Why does this matter?

Having access to relevant external knowledge gives the system greater context for reasoning through multi-step problems. For the AI community, this technique demonstrates how the performance of language models can be improved by focusing on knowledge and reasoning abilities in addition to language mastery.

Meta’s 3D AI for everyday devices

Meta research and Codec Avatars Lab (with MIT) have proposed PlatoNeRF, a method to recover scene geometry from a single view using two-bounce signals captured by a single-photon lidar. It reconstructs lidar measurements with NeRF, which enables physically-accurate 3D geometry to be learned from a single view.

The method outperforms related work in single-view 3D reconstruction, reconstructs scenes with fully occluded objects, and learns metric depth from any view. Lastly, the research demonstrates generalization to varying sensor parameters and scene properties.

Why does this matter?

The research is a promising direction as single-photon lidars become more common and widely available in everyday consumer devices like phones, tablets, and headsets.

ByteDance presents DiffPortrait3D for zero-shot portrait view

ByteDance research presents DiffPortrait3D, a novel conditional diffusion model capable of generating consistent novel portraits from sparse input views.

Given a single portrait as reference, DiffPortrait3D is adept at producing high-fidelity and 3D-consistent novel view synthesis. Notably, without any finetuning, DiffPortrait3D is universally effective across a diverse range of facial portraits, encompassing, but not limited to, faces with exaggerated expressions, wide camera views, and artistic depictions.

Why does this matter?

The framework opens up possibilities for accessible 3D reconstruction and visualization from a single picture.

Can a SoTA LLM run on a phone without internet?

Amidst the rapid evolution of generative AI, on-device LLMs offer solutions to privacy, security, and connectivity challenges inherent in cloud-based models.

New research at Haltia, Inc. explores the feasibility and performance of on-device large language model (LLM) inference on various Apple iPhone models. Leveraging existing literature on running multi-billion parameter LLMs on resource-limited devices, the study examines the thermal effects and interaction speeds of a high-performing LLM across different smartphone generations. It presents real-world performance results, providing insights into on-device inference capabilities.

It finds that newer iPhones can handle LLMs, but achieving sustained performance requires further advancements in power management and system integration.

Why does this matter?

Running LLMs on smartphones or even other edge devices has significant advantages. This research is pivotal for enhancing AI processing on mobile devices and opens avenues for privacy-centric and offline AI applications.

That's all for now!

If you are new to The AI Edge, subscribe now and gain exclusive access to content enjoyed by professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other esteemed organizations.

Thanks for reading, and see you on Monday.😊