The AI Thrills of CES 2024 Day 2

Plus: Microsoft AI's new video-gen model, Meta’s new text-to-audio method.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 186th edition of The AI Edge newsletter. This edition brings you “The AI extravaganza continued on day 2 of CES 2024.”

And a huge shoutout to our incredible readers. We appreciate you 😊

In today’s edition:

✨ AI extravaganza continued on day 2 of CES 2024

📽️ Microsoft AI introduces a new video-gen model

🔊 Meta’s new method for text-to-audio

📚 Knowledge Nugget: Fine-tuning pipeline for open-source LLMs by

Let’s go!

AI extravaganza continued on day 2 of CES 2024

Day 2 of CES 2024 has been filled with innovative AI announcements. Here are some standout highlights from the day.

Shift Robotics unveiled AI-powered strap-on shoes called 'Moonwalkers' that increase walking speed while maintaining a natural gait.

WeHead puts a face to ChatGPT, giving you a taste of what's to come before the showroom officially opens on Jan 9.

Intuition Robotics launched ElliQ 3, which aims to enhance the well-being and independence of older adults, fostering a happier and more connected lifestyle.

Amazon integrated with Character AI to bring conversational AI companions to devices.

L'Oreal revealed an AI chatbot that gives beauty advice based on an uploaded photograph.

Y-Brush is a toothbrush that can brush your teeth in just 10 seconds. It was developed by dentists over three years ago.

Swarovski's $4,799 smart AI-powered binoculars can identify birds and animals for you.

Why does this matter?

The wide range of AI innovations showcased on day 2 of CES 2024 demonstrates how rapidly this technology is evolving and integrating into our daily lives. From AI companions to AI-powered binoculars, these announcements highlight how AI is being applied across industries to enhance products and services in healthcare, beauty, transportation, and more.

Microsoft AI introduces a new video-gen model

Microsoft AI has developed a new model called DragNUWA that aims to enhance video generation by incorporating trajectory-based generation alongside text and image prompts. This allows users to have more control over the production of videos, enabling the manipulation of objects and video frames with specific trajectories.

Each input combination has limitations on its own: text and images may not capture intricate motion details, images and trajectories may not adequately represent objects that appear in later frames, and language can be ambiguous. DragNUWA aims to address these limitations by combining all three, providing highly controllable video generation. The model has been released on Hugging Face and has shown promising results in accurately controlling camera movements and object motions.
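DragNUWA's exact trajectory encoding isn't described here, but one common way to condition a video model on a user's drag is to turn the path into sparse per-frame motion maps. The sketch below assumes that representation; `resample_trajectory` and `trajectory_to_flow_maps` are hypothetical helpers, not DragNUWA's code, and a real model would likely smooth these maps (for example with a Gaussian filter) before encoding them alongside the text and image prompts.

```python
from typing import List, Tuple

Point = Tuple[float, float]                # (x, y) in pixel coordinates
FlowMap = List[List[Tuple[float, float]]]  # height x width grid of (dx, dy)


def resample_trajectory(points: List[Point], num_frames: int) -> List[Point]:
    """Linearly interpolate a drag path so there is exactly one point per frame."""
    if len(points) < 2 or num_frames < 2:
        raise ValueError("need at least two points and two frames")
    last_segment = len(points) - 2
    resampled = []
    for frame in range(num_frames):
        # Map the frame index onto the polyline's index range [0, len(points) - 1].
        t = frame * (len(points) - 1) / (num_frames - 1)
        i = min(int(t), last_segment)
        frac = t - i
        (x0, y0), (x1, y1) = points[i], points[i + 1]
        resampled.append((x0 + frac * (x1 - x0), y0 + frac * (y1 - y0)))
    return resampled


def trajectory_to_flow_maps(points: List[Point], num_frames: int,
                            height: int, width: int) -> List[FlowMap]:
    """Rasterize a drag trajectory into sparse per-frame displacement maps.

    Each map is zero everywhere except at the pixel where the dragged point
    sits in the previous frame, which stores its (dx, dy) motion to this frame.
    """
    path = resample_trajectory(points, num_frames)
    maps = []
    for frame in range(1, num_frames):
        grid = [[(0.0, 0.0) for _ in range(width)] for _ in range(height)]
        (px, py), (cx, cy) = path[frame - 1], path[frame]
        col, row = round(px), round(py)
        if 0 <= row < height and 0 <= col < width:  # skip positions outside the grid
            grid[row][col] = (cx - px, cy - py)
        maps.append(grid)
    return maps


# Drag a point 4 pixels to the right over a 5-frame clip.
maps = trajectory_to_flow_maps([(0, 0), (4, 0)], num_frames=5, height=2, width=5)
print(len(maps), maps[0][0][0], maps[3][0][3])  # 4 (1.0, 0.0) (1.0, 0.0)
```

Keeping the maps sparse means only the dragged point carries a motion signal, so the model is left to infer how the rest of the scene should move around it.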

Why does this matter?

By incorporating trajectories alongside text and images, this technique allows for precise manipulation of objects and frames that language or images alone cannot achieve. The ability to finely tune video outputs gives creators more agency over their AI-generated content. It also enables new applications in fields like computer animation, visualization, and simulation that require intricate control over video dynamics.

Meta’s new method for text-to-audio

Meta launched a new method, ‘MAGNeT’, for generating audio from text; it uses a single-stage, non-autoregressive transformer to predict masked tokens during training and gradually constructs the output sequence during inference. To improve the quality of the generated audio, an external pre-trained model is used to rescore and rank predictions.
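The inference procedure described above (predict every masked token at once, keep the confident ones, and retry the rest) can be sketched as a generic MaskGIT-style decoding loop. This is not Meta's implementation: it masks individual tokens rather than spans, uses a single token stream instead of multiple audio codebooks, skips the external rescoring model, and replaces the transformer with a hypothetical `toy_predict` function. The cosine masking schedule is an assumption, though a common choice for this family of methods.

```python
import math
from typing import Callable, List, Tuple

MASK = -1  # placeholder id for a not-yet-decided token

# A predictor returns (token, confidence) for every position, given the current sequence.
Predictor = Callable[[List[int]], List[Tuple[int, float]]]


def num_still_masked(step: int, total_steps: int, seq_len: int) -> int:
    """Cosine schedule: how many tokens stay masked after `step` (1-indexed)."""
    return math.floor(seq_len * math.cos(math.pi / 2 * step / total_steps))


def iterative_masked_decode(predict: Predictor, seq_len: int, total_steps: int) -> List[int]:
    tokens = [MASK] * seq_len
    for step in range(1, total_steps + 1):
        predictions = predict(tokens)
        masked = [i for i, tok in enumerate(tokens) if tok == MASK]
        # Fill every masked slot in parallel (the non-autoregressive part).
        for i in masked:
            tokens[i] = predictions[i][0]
        # Re-mask the least confident new guesses so they are retried next step;
        # tokens fixed in earlier steps are never re-masked.
        n_remask = num_still_masked(step, total_steps, seq_len)
        masked.sort(key=lambda i: predictions[i][1])
        for i in masked[:n_remask]:
            tokens[i] = MASK
    return tokens


# Toy stand-in for the transformer: always proposes the "right" token for a
# position, with a fixed, position-dependent confidence.
TARGET = [10, 11, 12, 13, 14, 15, 16, 17]


def toy_predict(tokens: List[int]) -> List[Tuple[int, float]]:
    return [(TARGET[i], ((i * 5) % 8) / 8) for i in range(len(tokens))]


print(iterative_masked_decode(toy_predict, seq_len=8, total_steps=4))
# [10, 11, 12, 13, 14, 15, 16, 17]
```

With 8 tokens and 4 steps, the schedule leaves 7, 5, 3, and then 0 tokens masked, so the whole sequence is produced in 4 model calls regardless of how many tokens it contains.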

A hybrid version of MAGNeT combines autoregressive and non-autoregressive models for faster generation. Compared with baselines, the approach is significantly faster while maintaining comparable quality. Ablation studies and analysis highlight the importance of each component and the trade-offs between autoregressive and non-autoregressive modeling.
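A rough count of sequential model calls shows where the speedup comes from. The sketch assumes the hybrid generates a short prefix autoregressively and decodes the rest in parallel; the frame rate, number of codebooks, steps per codebook, and prefix length are all hypothetical placeholders, not MAGNeT's published settings. Pass count is only a proxy: each non-autoregressive pass processes the whole sequence, while autoregressive decoding can reuse cached keys and values, so wall-clock speedups will not simply equal the ratio of pass counts.

```python
from typing import List


def autoregressive_passes(num_frames: int) -> int:
    # One decoder pass per audio frame (assumes all codebooks of a frame are
    # predicted in the same pass and ignores any codebook-offset overhead).
    return num_frames


def masked_passes(steps_per_codebook: List[int]) -> int:
    # A fixed number of parallel refinement passes per codebook,
    # independent of how many frames the clip contains.
    return sum(steps_per_codebook)


def hybrid_passes(prefix_frames: int, steps_per_codebook: List[int]) -> int:
    # Autoregressive prefix first, then masked decoding for the remainder.
    return autoregressive_passes(prefix_frames) + masked_passes(steps_per_codebook)


FRAME_RATE = 50            # hypothetical audio tokens per second
STEPS = [20, 10, 10, 10]   # hypothetical decoding steps for 4 codebooks

clip_frames = 10 * FRAME_RATE  # a 10-second clip
print(autoregressive_passes(clip_frames))     # 500
print(masked_passes(STEPS))                   # 50
print(hybrid_passes(3 * FRAME_RATE, STEPS))   # 200
```

Under these assumptions the autoregressive cost grows with clip length, while the masked decoder's cost depends only on the step schedule; the hybrid sits in between.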

Why does this matter?

MAGNeT enables high-quality text-to-audio generation, covering both music and sound effects, while being much faster than previous autoregressive methods. This combination of speed and quality could make text-to-audio generation practical for more interactive uses, such as generating music or sound effects on demand.


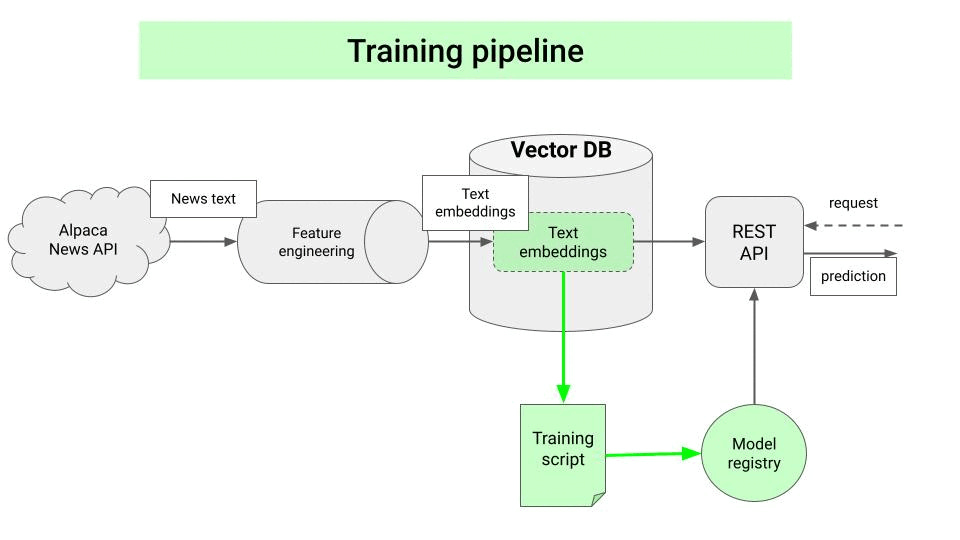

Knowledge Nugget: Fine-tuning pipeline for open-source LLMs

This article by

discusses the process of building a fine-tuning pipeline for open-source LLMs. The pipeline is part of a hands-on tutorial course on building a financial advisor using LLMs and MLOps best practices. Fine-tuning an LLM for a specific task can offer better performance and cost-effectiveness than relying on a generalist LLM. The four steps to fine-tune an open-source LLM for the financial advisor project are: (1) generating a dataset of input-output examples, (2) choosing an open-source base LLM, (3) running the fine-tuning script using the trl library, and (4) logging experiment results and model artifacts. The final model can then be pushed to the model registry for deployment.
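Generating a dataset of input-output examples, the first of the four steps, largely comes down to putting each example into a consistent prompt format and saving it in a file the trainer can read. The sketch below assumes a prompt/completion JSONL layout, which recent versions of the trl library's SFT trainer can consume (check the documentation for the version you use). The `AdvisorExample` fields, the prompt template, and the 80/20 train/validation split are hypothetical choices for illustration, not the article's code.

```python
import json
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class AdvisorExample:
    """One input-output pair; the field names are hypothetical."""
    about_me: str   # short user profile
    context: str    # e.g. a relevant news snippet
    question: str
    response: str   # the answer the fine-tuned model should learn to produce


PROMPT_TEMPLATE = (
    "You are a financial advisor.\n"
    "### About me:\n{about_me}\n"
    "### Context:\n{context}\n"
    "### Question:\n{question}\n"
    "### Response:\n"
)


def to_record(ex: AdvisorExample) -> Dict[str, str]:
    # Prompt/completion layout: the model is conditioned on the prompt
    # and trained to generate the completion.
    prompt = PROMPT_TEMPLATE.format(
        about_me=ex.about_me, context=ex.context, question=ex.question
    )
    return {"prompt": prompt, "completion": ex.response}


def to_jsonl(rows: List[AdvisorExample]) -> str:
    # json.dumps escapes newlines, so each record occupies exactly one line.
    return "\n".join(json.dumps(to_record(r)) for r in rows)


def split_and_serialize(examples: List[AdvisorExample], val_fraction: float = 0.2,
                        seed: int = 42) -> Tuple[str, str]:
    """Shuffle deterministically, split into train/validation, return JSONL strings."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    val, train = shuffled[:n_val], shuffled[n_val:]
    return to_jsonl(train), to_jsonl(val)


examples = [
    AdvisorExample(f"I am {30 + i} years old.", "Rates were unchanged.",
                   "Should I buy bonds?", f"Answer {i}")
    for i in range(5)
]
train_jsonl, val_jsonl = split_and_serialize(examples)
print(len(train_jsonl.splitlines()), len(val_jsonl.splitlines()))  # 4 1
```

Fixing the shuffle seed keeps the split reproducible across runs, which makes the experiment results logged in the final step comparable.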

Why does this matter?

The tutorial demonstrates best practices for fine-tuning open-source LLMs for real-world applications. Fine-tuning improves task-specific performance, while proper MLOps implementation enables reproducibility, automation, and responsible deployment to production. Hands-on experience fine-tuning and deploying LLMs with MLOps is key to building impactful AI systems.

What Else Is Happening❗

🌐 OpenAI has introduced a new subscription plan called ChatGPT Team

The plan offers a dedicated workspace for teams of up to 149 people and admin tools for team management. Users gain access to OpenAI's latest models, including GPT-4, GPT-4 with Vision, and DALL-E 3, and tools for analyzing, editing, and extracting information from uploaded files.

🛍️ OpenAI launches a store for custom AI-powered chatbots

The chatbots are built on OpenAI's text- and image-generation models. The store, accessible through the ChatGPT client, features a range of GPTs developed by OpenAI's partners and the wider developer community. Users must be subscribed to OpenAI's premium ChatGPT plans to access the store.

💼 Nasdaq invests in advanced AI to combat cyber fraud and financial crime

CEO Adena Friedman announced the company's commitment to enhancing its anti-crime capabilities in the face of increasing digital-age challenges. The move comes as cybercrime and financial fraud become more sophisticated. The FBI has also highlighted the challenge of proactive investigations in cybercrime.

🕹️ Valve announced new rules for developers to publish games and use AI

Under the new rules, developers must disclose if their games use AI-generated content and promise that it is not illegal or infringing. Players will be able to see if a game contains AI and report any illegal AI-generated content. The changes aim to increase transparency and allow customers to make informed choices.

📰 OpenAI is in talks with CNN, Fox Corp., and Time to license their content

OpenAI is seeking to enhance its AI products and aims to secure access to news content to improve the accuracy and relevance of its AI chatbot. These negotiations come as OpenAI faces lawsuits accusing it of copyright infringement.

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From ML to ChatGPT to generative AI and LLMs, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊