Hello, Engineering Leaders and AI enthusiasts

Today, we’ll focus on Stability AI. Unlike other AI giants, Stability AI might not be a household name, but it’s definitely on the radar of every AI enthusiast.

Let’s hit rewind on major Stability AI announcements to trace its growth trajectory.

Let’s go!

🚀 Stability AI launches StableLM, an open-source suite of language models

🎬 Stability AI releases a powerful text-to-animation tool

💥 SDXL 0.9, the most advanced development in Stable Diffusion

🖼️ Stable Doodle: Next chapter in AI art

🧨 Stability AI introduces 2 LLMs close to ChatGPT

🆕 Stability AI released SDXL 1.0, featured on Amazon Bedrock

🚀 Stability AI launches LLM code generator

⚡ Stability collabs with NVIDIA to 2x the AI Image gen speed

🚩 Stability AI’s 1st Japanese Vision-Language Model

Stability AI launches StableLM, an open-source suite of language models

The creators of Stable Diffusion, Stability AI, just released a suite of open-source large language models (LLMs) called StableLM. This comes just 5 days after the public release of its text-to-image generative AI model, SDXL.

StableLM is trained on a new experimental dataset built on The Pile, but three times larger, at 1.5 trillion tokens of content. The richness of this dataset gives StableLM surprisingly high performance in conversational and coding tasks, despite its small size of 3 to 7 billion parameters (by comparison, GPT-3 has 175 billion parameters).
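To put those parameter counts in perspective, here is a rough back-of-the-envelope sketch. It uses only the figures quoted above; the 2-bytes-per-parameter value is an assumption (16-bit weights), and the helper names are made up for illustration. It estimates the memory needed for the weights alone, not the full cost of running a model.

```python
# Parameter counts quoted in the text above.
PARAMS = {
    "StableLM 3B": 3e9,
    "StableLM 7B": 7e9,
    "GPT-3": 175e9,
}

# Assumption: weights are stored as 16-bit floats (2 bytes each).
BYTES_PER_PARAM_FP16 = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def size_ratio(larger: str, smaller: str) -> float:
    """How many times more parameters `larger` has than `smaller`."""
    return PARAMS[larger] / PARAMS[smaller]


if __name__ == "__main__":
    for name, count in PARAMS.items():
        print(f"{name}: ~{weight_memory_gb(count):.0f} GB of weights at 16-bit precision")
    print(f"GPT-3 vs. StableLM 7B: {size_ratio('GPT-3', 'StableLM 7B'):.0f}x the parameters")
```

Under these assumptions, the 7B model's weights fit in roughly 14 GB, which is why models of this size are practical on a single high-end GPU.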

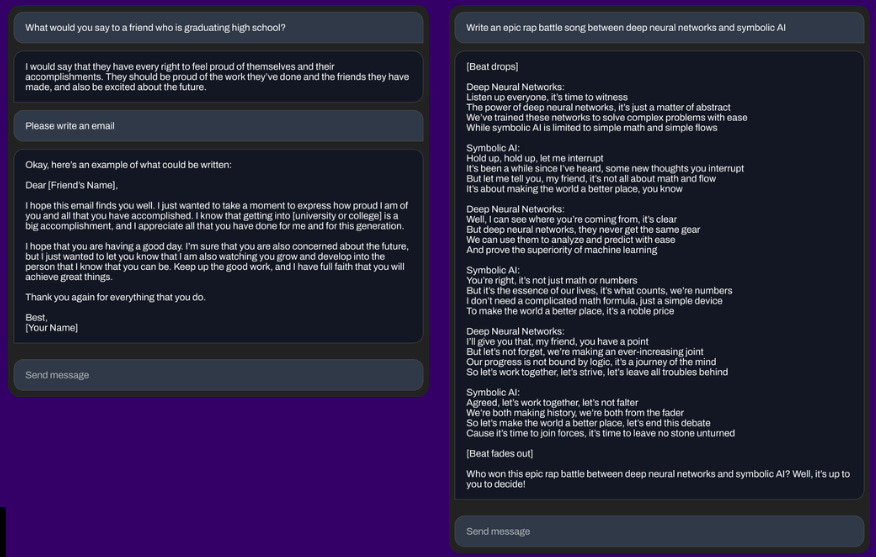

Some examples

Stability AI releases a powerful text-to-animation tool

Stability AI released the Stable Animation SDK, a tool that lets developers and artists use the most advanced Stable Diffusion models to generate stunning animations. It works with all the Stable Diffusion models, including Stable Diffusion 2.0 and Stable Diffusion XL, and offers three ways to create animations:

Text to animation

Initial image input + text input

Input video + text input

The initial image/video inputs act as the starting point for the animation, which is additionally guided by text prompts to arrive at the final output.

SDXL 0.9, the most advanced development in Stable Diffusion

Stability AI announces SDXL 0.9, the most advanced development in the Stable Diffusion text-to-image suite of models. SDXL 0.9 produces massively improved image and composition detail over its predecessor, the SDXL beta. Here’s an example of a prompt tested on both SDXL beta (left) and 0.9 (right).

The key driver of this advancement in composition for SDXL 0.9 is its significant increase in parameter count over the beta version. SDXL 0.9 also runs on two CLIP models, including one of the largest OpenCLIP models trained to date. This beefs up 0.9’s processing power and its ability to create realistic imagery with greater depth and a higher resolution of 1024x1024.

Stable Doodle: Next chapter in AI art

Stability AI, the startup behind Stable Diffusion, has released 'Stable Doodle,' an AI tool that can turn sketches into images. The tool accepts a sketch and a descriptive prompt to guide the image generation process, with the output quality depending on the detail of the initial drawing and the prompt. It utilizes the latest Stable Diffusion model and the T2I-Adapter for conditional control.

Stable Doodle is designed for both professional artists and novices and offers more precise control over image generation. Stability AI aims to quadruple its $1 billion valuation in the next few months.

Stability AI introduces 2 LLMs close to ChatGPT

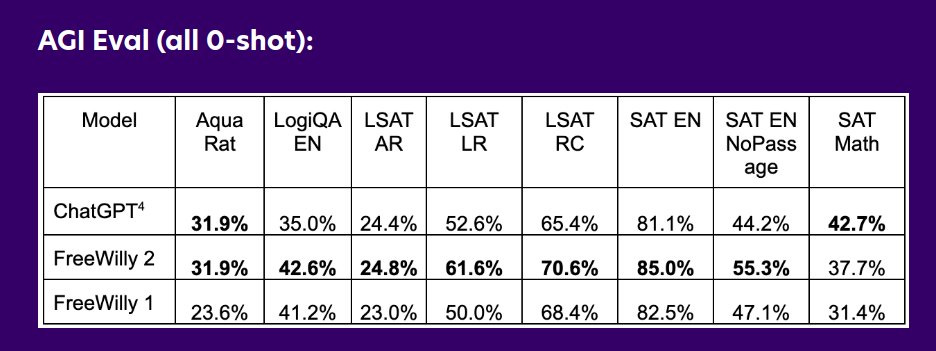

Stability AI and its CarperAI lab unveiled FreeWilly1 and its successor FreeWilly2, two powerful new open-access large language models. These models showcase remarkable reasoning capabilities across diverse benchmarks. FreeWilly1 is built upon the original LLaMA 65B foundation model and fine-tuned on a new synthetically generated dataset using supervised fine-tuning (SFT) in standard Alpaca format. Similarly, FreeWilly2 harnesses the LLaMA 2 70B foundation model and demonstrates competitive performance with GPT-3.5 for specific tasks.

For internal evaluation, they’ve utilized EleutherAI's lm-eval-harness, enhanced with AGIEval integration. Both models serve as research experiments, released to foster open research under a non-commercial license.
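For readers unfamiliar with the "standard Alpaca format" mentioned above, here is a minimal sketch of how one SFT training example is typically laid out. The two templates are the ones published with Stanford Alpaca; whether FreeWilly used exactly this wording is an assumption, and the helper names `build_alpaca_prompt` and `build_sft_example` are hypothetical.

```python
# Prompt templates published with Stanford Alpaca.
# Assumption: the source only says "standard Alpaca format", so the exact
# wording used for FreeWilly's dataset may differ.
ALPACA_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)

ALPACA_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def build_alpaca_prompt(instruction: str, context: str = "") -> str:
    """Fill the template; the Input section is dropped when no context is given."""
    if context:
        return ALPACA_WITH_INPUT.format(instruction=instruction, input=context)
    return ALPACA_NO_INPUT.format(instruction=instruction)


def build_sft_example(instruction: str, response: str, context: str = "") -> dict:
    """One supervised example: the model learns to produce `completion` after `prompt`."""
    return {
        "prompt": build_alpaca_prompt(instruction, context),
        "completion": response,
    }


if __name__ == "__main__":
    example = build_sft_example("Explain what a vision-language model does.", "It ...")
    print(example["prompt"] + example["completion"])
```

During fine-tuning, the model is shown the prompt and trained to generate the completion, so a synthetic dataset only needs instruction/response pairs in this shape.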

Stability AI released SDXL 1.0, featured on Amazon Bedrock

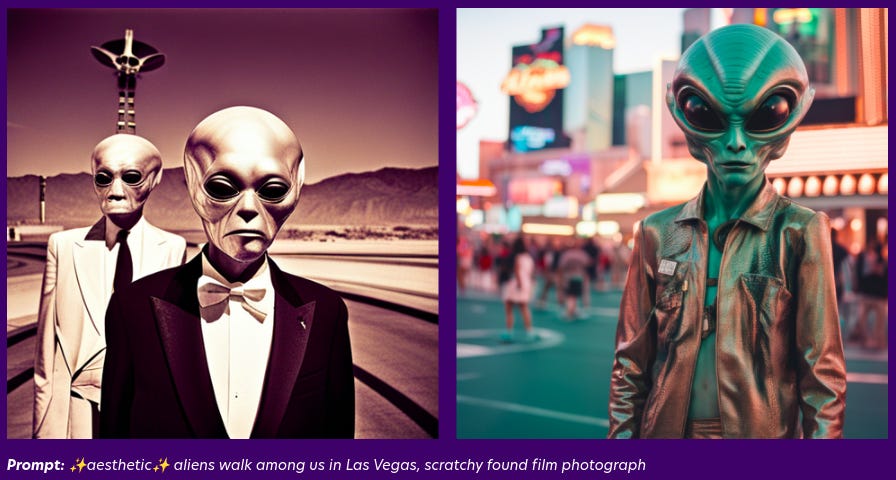

Stability AI has announced the release of Stable Diffusion XL (SDXL) 1.0, its advanced text-to-image model. The model will be featured on Amazon Bedrock, providing access to foundation models from leading AI startups. SDXL 1.0 generates vibrant, accurate images with improved colors, contrast, lighting, and shadows. It is available through Stability AI's API, GitHub page, and consumer applications.

The model is also accessible on Amazon SageMaker JumpStart. A new fine-tuning beta feature on Stability AI's API allows users to specialize generation on specific subjects. SDXL 1.0 has one of the largest parameter counts of any open-access image model and has been widely used by ClipDrop users and Stability AI's Discord community.

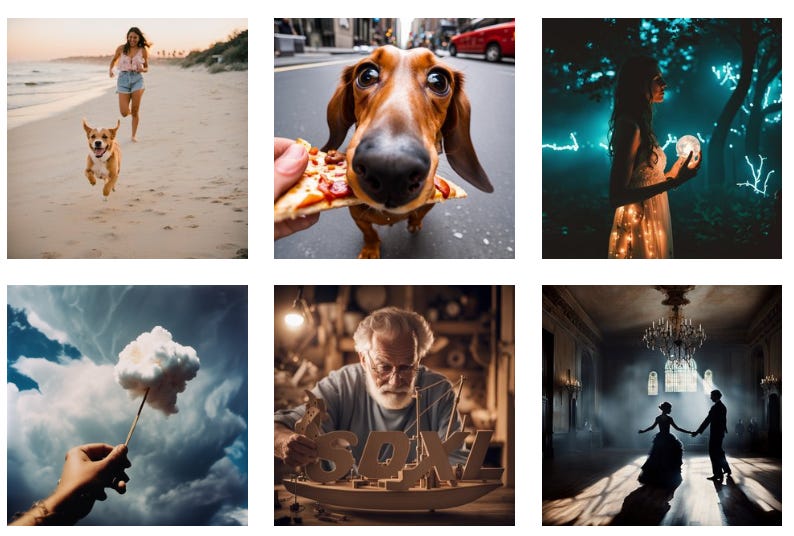

(Images created using Stable Diffusion XL 1.0, featured on Amazon Bedrock)

Stability AI launches LLM code generator

Stability AI has released StableCode, an LLM generative AI product for coding. It aims to assist programmers in their daily work and provide a learning tool for new developers. StableCode uses three different models to enhance coding efficiency. The base model was trained on various programming languages, including Python, Go, Java, and more. It was then further trained on 560B tokens of code.

The instruction model was tuned for specific use cases by training it on 120,000 code instruction/response pairs. StableCode offers a unique solution for developers to improve their coding skills and productivity.

Stability collabs with NVIDIA to 2x the AI Image gen speed

Stability AI has partnered with NVIDIA to enhance the speed of its text-to-image generative AI product, Stable Diffusion XL. By integrating NVIDIA TensorRT, a performance optimization framework, the two companies have significantly improved the speed and efficiency of SDXL.

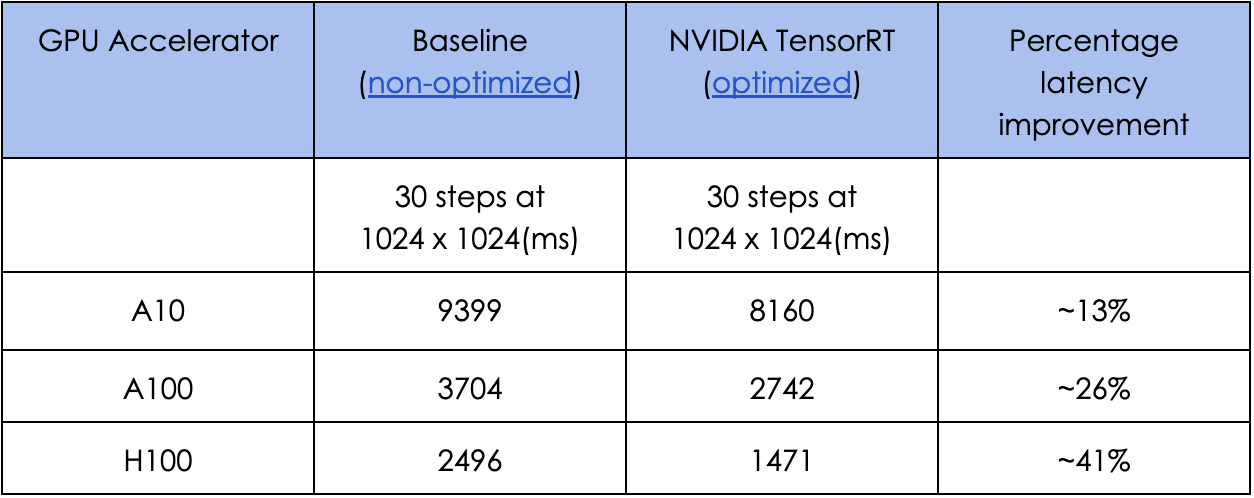

The integration has doubled performance on NVIDIA H100 chips, generating HD images in just 1.47 seconds. The TensorRT-optimized model also beats the non-optimized model on both latency and throughput on A10, A100, and H100 GPU accelerators. This collaboration aims to increase both the speed and accessibility of SDXL.

Latency performance comparison:

Stability AI’s 1st Japanese Vision-Language Model

Stability AI has released Japanese InstructBLIP Alpha, a vision-language model that generates textual descriptions for input images and answers questions about them. It is built upon the Japanese StableLM Instruct Alpha 7B and leverages the InstructBLIP architecture.

(Figure. Output: “Two persons sitting on a bench looking at Mt.Fuji”)

The model can accurately recognize Japan-specific objects and process text input, such as questions. It is available on Hugging Face Hub for inference and additional training, exclusively for research. This model has various applications, including search engine functionality, scene description, and providing textual descriptions for blind individuals.

Stability AI launches text-to-music AI

Stability AI has launched Stable Audio, a music and sound generation product. Stable Audio utilizes generative AI techniques to provide faster and higher-quality music and sound effects through a user-friendly web interface.

The product offers a free version for generating and downloading tracks up to 45 seconds long and a subscription-based 'Pro' version for commercial projects with 90-second downloadable tracks. Stable Audio allows users to input descriptive text prompts and desired audio length to generate customized tracks. The underlying model was trained using music and metadata from AudioSparx, a music library.

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’ specifically designed for Engineering Leaders and AI Enthusiasts.

Thanks for reading, and see you tomorrow. 😊