Rabbit’s AI Device Can Do Things Phones Never Will

Plus: Luma AI introduces Genie 1.0, ByteDance introduces MagicVideo-V2.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 185th edition of The AI Edge newsletter. This edition brings you Rabbit’s AI-powered pocket device that does digital tasks for you.

And a huge shoutout to our amazing readers. We appreciate you 😊

In today’s edition:

📱 Rabbit unveils r1, an AI pocket device to do tasks for you

🚀 Luma AI takes first step towards building multimodal AI

🎥 ByteDance releases MagicVideo-V2 for high-aesthetic video

📚 Knowledge Nugget: An “AI Breakthrough” on Systematic Generalization in Language? by

Let’s go!

Rabbit unveils r1, an AI pocket device to do tasks for you

Tech startup Rabbit unveiled r1, an AI-powered companion device that does digital tasks for you. r1 operates as a standalone device, but the real story is its software: it runs on Rabbit OS and the AI technology underneath. Rather than a ChatGPT-like LLM, this OS is based on a “Large Action Model” (LAM), a sort of universal controller for apps.

Rabbit OS introduces “rabbits”: AI agents that execute a wide range of tasks, from simple queries to intricate errands like travel research or grocery shopping. Because it learns by observing human behavior, the LAM also removes the need for complex integrations like APIs and dedicated apps, enabling seamless task execution across platforms without users having to download multiple applications.

Why does this matter?

If Humane (maker of the AI Pin) can’t do it, Rabbit just might. This could usher in a new era of human-device interaction in which AI doesn’t just understand natural language but also acts on users’ intentions to accomplish tasks. It could transform the online experience by navigating multiple apps efficiently through natural-language commands.

Luma AI takes first step towards building multimodal AI

Luma AI is introducing Genie 1.0, its first step towards building multimodal AI. Genie is a text-to-3D model that can create any 3D object you can dream of in under 10 seconds, complete with materials, quad-mesh retopology, and variable polycount, in all standard formats. You can try it now on the web and in Luma’s iOS app.

Why does this matter?

Luma is launching its 3D-generation model into an increasingly competitive space. Stability AI recently launched a similar tool, and Autodesk, Adobe, and Nvidia are beginning to dip their toes in too. Luma’s results are promising, though, and more capable models may help it pull ahead; time will tell.

ByteDance releases MagicVideo-V2 for high-aesthetic video

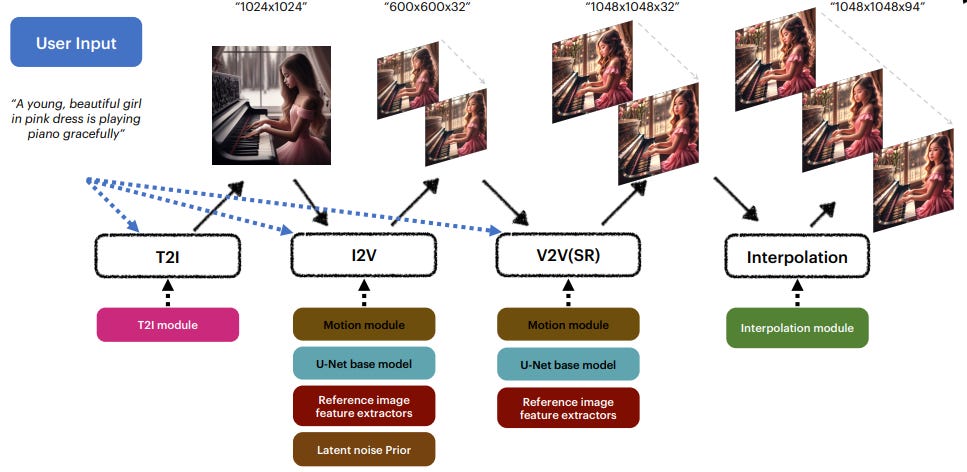

ByteDance Research has introduced MagicVideo-V2, which integrates a text-to-image model, a video motion generator, a reference image embedding module, and a frame interpolation module into an end-to-end video generation pipeline. Thanks to this architecture, MagicVideo-V2 can generate aesthetically pleasing, high-resolution videos with remarkable fidelity and smoothness.

In a large-scale user evaluation, it outperformed leading text-to-video systems such as Runway, Pika 1.0, Morph, Moon Valley, and Stable Video Diffusion.

Why does this matter?

Growing demand for high-fidelity video generated from text descriptions has spurred significant research in text-to-video. MagicVideo-V2 offers a new strategy for generating smooth, highly aesthetic videos.


Knowledge Nugget: An “AI Breakthrough” on Systematic Generalization in Language?

In this interesting article,

discusses recent progress in AI's systematic language understanding. It presents a puzzle illustrating how humans comprehend language through compositionality, systematicity, and productivity. It also highlights a study in which a neural network trained via "meta-learning" solves such puzzles at close to human-level performance. However, it notes that the network needs far more training than humans, who adapt with little practice. The article questions whether the network's generalization is truly human-like and emphasizes the need for further research on AI's broader learning abilities.

Why does this matter?

The work the article discusses challenges prior beliefs about AI's language comprehension abilities. It could be a significant step forward in AI's language understanding, while also highlighting areas that require deeper exploration and scrutiny.

What Else Is Happening❗

🛒Walmart unveils new generative AI-powered capabilities for shoppers and associates.

At CES 2024, Walmart introduced new AI innovations, including generative AI-powered search for shoppers and an assistant app for associates. Built with Walmart's own technology and Microsoft Azure OpenAI Service, the new search experience serves up a curated list of the personalized items a shopper is looking for.

✨Amazon’s Alexa gets new generative AI-powered experiences.

Amazon revealed three developers delivering new generative AI-powered Alexa experiences: AI chatbot platform Character.AI, AI music company Splash, and voice AI game developer Volley. All three experiences are available in the Amazon Alexa Skill Store.

🖼️Getty Images launches a new GenAI service for iStock customers.

Getty Images announced a new service at CES 2024 that uses AI models trained on its iStock stock photography and video libraries to generate new licensable images and artwork. Called Generative AI by iStock and powered partly by Nvidia technology, it aims to guard against generating known products, people, places, or other copyrighted elements.

💻Intel challenges Nvidia and Qualcomm with 'AI PC' chips for cars.

Intel will launch automotive versions of its newest AI-enabled chips, taking on Qualcomm and Nvidia in the market for semiconductors that can power the brains of future cars. Intel aims to stand out by offering chips that automakers can use across their product lines, from lowest-priced to premium vehicles.

🔋New material found by AI could reduce lithium use in batteries.

A brand-new substance that could reduce lithium use in batteries by up to 70% has been discovered using AI and supercomputing. Researchers narrowed 32 million potential inorganic materials down to 18 promising candidates in less than a week, a process that could have taken more than two decades with traditional methods.

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊