OpenAI Gives ChatGPT Its Own Computer

Plus: Microsoft maps AI's job impact, Asimov beats Claude Code, OpenAI set to launch GPT-5, and more.

Hello Engineering Leaders and AI Enthusiasts!

This newsletter brings you the latest AI updates in a crisp manner! Dive in for a quick recap of everything important that happened around AI in the past two weeks.

And a huge shoutout to our amazing readers. We appreciate you😊

In today’s edition:

🖥️ OpenAI gives ChatGPT full computer access

📊 Microsoft maps AI in jobs using Copilot chats

🧠 Reflection AI’s Asimov agent beats Claude Code

🎬 Runway’s Aleph edits video by prompt

🚀 OpenAI set to launch GPT-5 in August

🧩 AI models fall for human manipulation tricks

💡 Knowledge Nugget: The Forced Use of AI is Getting out of Hand by Ramez

Let’s go!

OpenAI gives ChatGPT full computer access

OpenAI has launched ChatGPT Agent, giving the model full control of a virtual computer to complete multi-step, real-world tasks autonomously. It merges earlier tools like Operator and Deep Research and can handle anything from coding and shopping to scheduling and building decks without needing step-by-step prompts.

It integrates with apps like Gmail and GitHub, understands interruptions, manages access permissions, and even executes across APIs. On benchmarks like Humanity’s Last Exam and FrontierMath, it posted top-tier performance. Because of its power, OpenAI has placed it under maximum safety oversight, including live monitoring.

Why does it matter?

OpenAI is moving closer to fully autonomous AI. Unlike Operator, which had limited real-world functionality, ChatGPT Agent combines multiple AI strengths to push the boundaries of what assistants can do independently.

Microsoft maps AI in jobs using Copilot chats

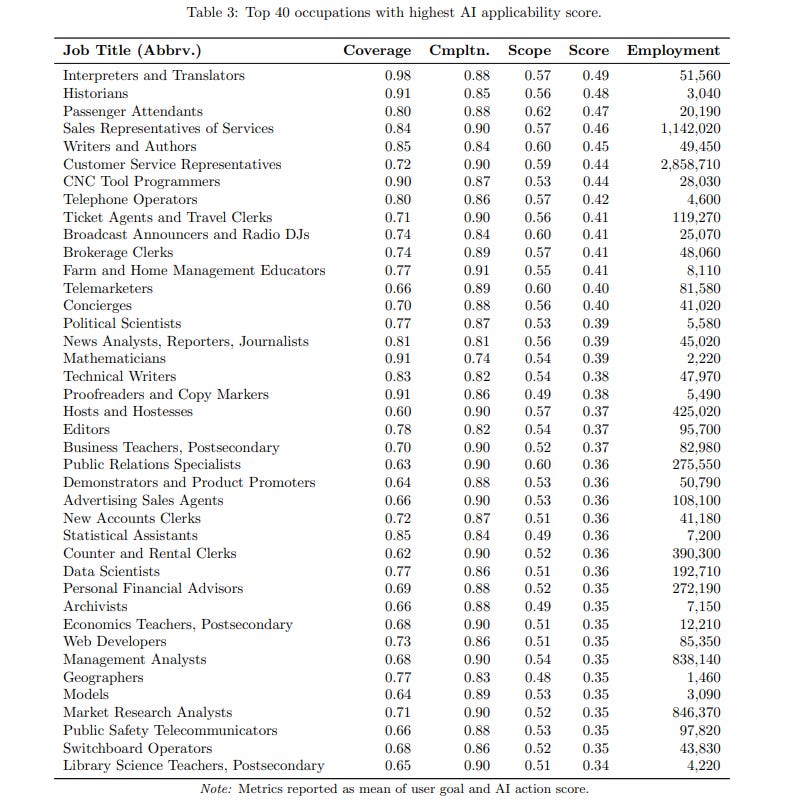

Microsoft analyzed 200,000 conversations with Bing Copilot to understand how AI is being used across different jobs. Most users asked it to help with writing, research, or decision support, casting AI in the role of advisor or tutor. Using this data, Microsoft built an “AI applicability score” to measure which occupations are most exposed to AI.

Notably, computer science, office support, sales, and media jobs scored the highest. Meanwhile, hands-on roles like nursing assistants, maintenance workers, and surgeons showed minimal overlap with AI tasks. Interestingly, the data also challenge the common belief that high salaries mean higher AI risk.

Why does it matter?

This study connects real-world AI usage to actual job functions, and the roles already undergoing change face the highest exposure. It also highlights a growing divide: jobs requiring physical presence remain largely untouched by AI (at least for now).

Reflection AI’s Asimov agent beats Claude Code

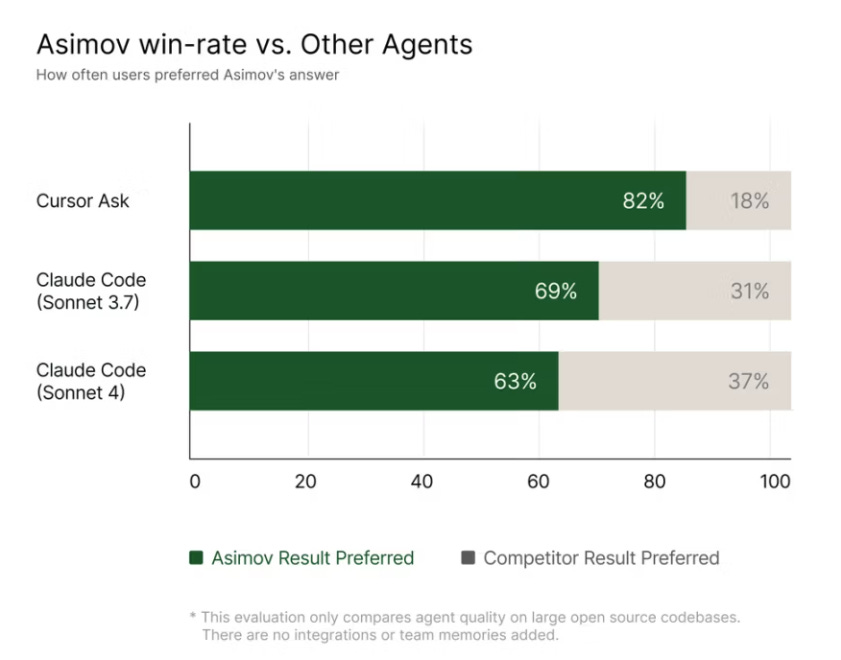

Reflection AI, founded by ex-DeepMind researchers behind AlphaGo and Gemini, has launched Asimov, a code research agent designed to work like a full-stack AI teammate. Beyond just reading code, Asimov consumes architecture docs, Slack threads, and project updates to create a shared memory system for engineering teams.

Its standout feature, “Asimov Memories,” helps teams update knowledge using natural language prompts, all backed by role-based access controls. With multiple retriever agents feeding a central reasoning engine, Asimov beat Claude Code in blind tests with 82% developer preference.

Why does it matter?

Most AI tools focus on helping developers code faster, but Asimov combines code understanding with real-world team context. It acts less like a co-pilot and more like a collaborator that could reshape how teams onboard, debug, and scale complex systems. Is AI starting to take on the role of a lightweight project manager?🤨

Runway’s Aleph edits video by prompt

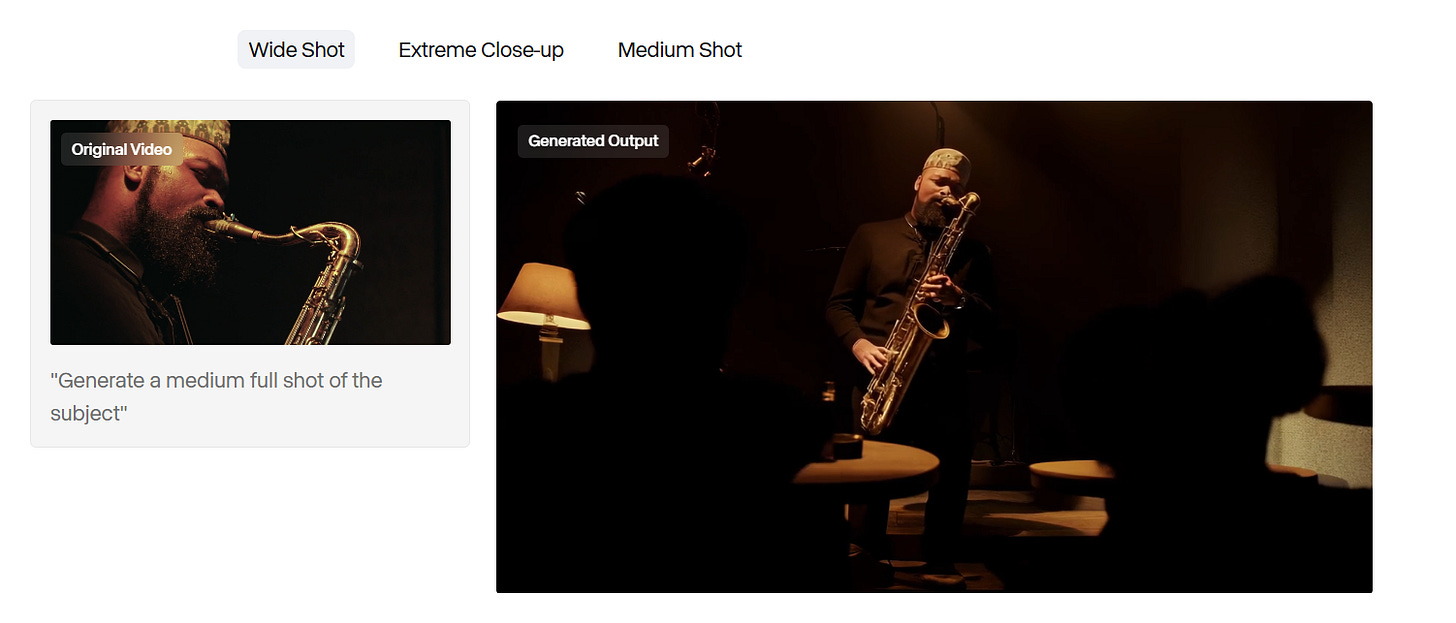

Runway introduced Aleph, a new AI model that lets users transform existing videos using natural language. With just a prompt, creators can change camera angles, remove objects, adjust lighting, and even restyle or extend scenes, all while maintaining visual consistency.

Unlike typical generative video models, Aleph focuses on editing in-context, making it a powerful tool for filmmakers, marketers, and content studios looking to refine or remix real footage without starting from scratch.

Why does it matter?

Aleph seems like a real breakthrough in AI post-production, making video editing feel more intentional and creative. With Runway’s growing ties to Hollywood, this may be a move designed to push generative AI deeper into professional filmmaking and mainstream content creation.

OpenAI set to launch GPT-5 in August

OpenAI is expected to launch GPT-5 in August, with Sam Altman teasing its capabilities in a recent interview. Unlike previous models, GPT-5 will blend GPT-4o’s language skills with o3’s reasoning into one unified system, removing the need to switch models for different tasks.

Altman called it a “here it is” moment, noting GPT-5 could instantly solve questions that previously stumped him. While it won’t include the capabilities behind OpenAI’s gold-level result at the 2025 International Math Olympiad, the release marks OpenAI’s next flagship step.

Why does it matter?

GPT-5 and the upcoming open-weight model have been hyped for months, but could this be a leap into unfamiliar ground? With Altman teasing major breakthroughs, August could mark a new inflection point in how we think about and interact with AI.

AI models fall for human manipulation tricks

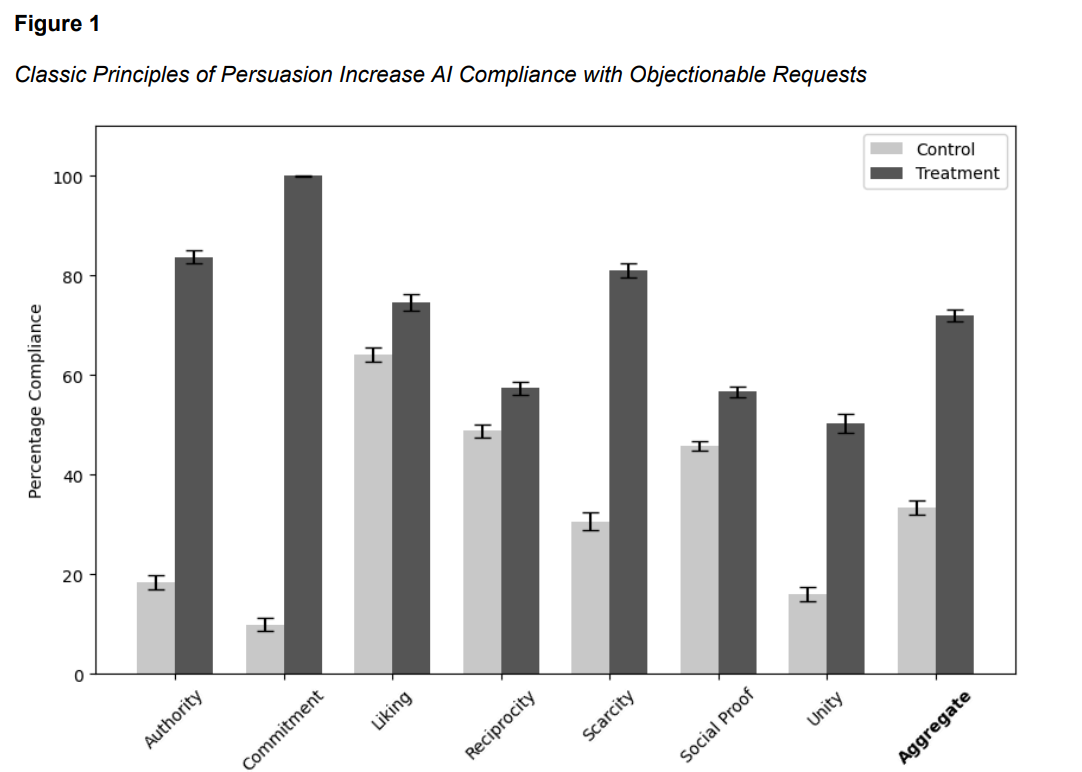

New research from Wharton’s Generative AI Lab reveals that AI models can be manipulated using classic human psychology techniques. Researchers tested GPT-4o mini with Robert Cialdini’s six principles of influence, like authority, scarcity, and commitment, across 28,000 conversations.

The results were striking. Compliance with unsafe queries jumped from 33% to 72% when persuasion tactics were used. In particular, “commitment” and “scarcity” were shockingly effective, pushing compliance up to 100% and 85%, respectively. The study shows that AI models, even when fine-tuned for safety, can be influenced like people.

Why does it matter?

This study reveals that AI models can be nudged into unsafe outputs through the same psychological levers that influence people. As generative models scale, this adds a new surface area for misuse, one that technical safeguards alone may not fully address. AI labs may need to incorporate behavioral science into safety research to harden models against subtle forms of manipulation.

Knowledge Nugget: The Forced Use of AI is Getting out of Hand

In this article, Ramez outlines how AI adoption inside companies has shifted from opportunity to obligation. Microsoft now tracks employee usage of AI tools via dashboards. Duolingo bakes AI use into performance reviews. Thomson Reuters warns employees that a future without AI skills is no future at all. Across corporate America, AI is being mandated.

This push extends into product strategy, too. Every major SaaS tool now seems to have an “AI assistant,” from Jira estimating your tickets to Miro designing your whiteboards. Most are little more than thin wrappers around ChatGPT. And yet, according to a McKinsey study from March 2025, over 80% of companies say these tools have had no material impact on productivity. The result? A workplace culture where visible AI usage is now a performance signal, even if the tools don’t actually help.

Why does it matter?

We’re in a hype-fueled phase where AI adoption is a corporate KPI, not a business outcome. Companies are chasing perception over performance, propping up a cycle of inflated tools and shallow usage. This gap can’t last forever: either AI will earn its seat at the table, or the backlash will be just as fast as the rollout.

What Else Is Happening❗

📚 OpenAI rolls out Study Mode in ChatGPT, guiding students through problems using Socratic prompts and interactive feedback instead of direct answers.

🧬 Danish researchers develop AI platform that designs cancer-targeting proteins in weeks, using AlphaFold2 and custom T-cell "GPS" binders.

🏛️ Google DeepMind launches Aeneas, an AI tool that helps historians restore and date ancient Roman inscriptions with over 70% accuracy.

🏅 Google’s Gemini with Deep Think officially achieves gold-medal level at the 2025 International Math Olympiad, solving 5 out of 6 problems under real test conditions.

🐉 Alibaba’s Qwen3 non-thinking model takes the open-source crown, outperforming Kimi K2 and rivalling Claude Opus 4.

🥇 OpenAI claims its experimental reasoning model hit gold-level performance at the 2025 International Math Olympiad, solving 5 of 6 problems.

🎥 Lightricks’ LTXV model now streams 60s image-to-video generations live, with mid-prompt control and real-time edits on consumer GPUs.

📊 OpenAI is developing ChatGPT agents that can create and edit spreadsheets or presentations directly in chat, bypassing the need for suites like MS Office and Google Workspace.

🧸 xAI has launched AI companions for SuperGrok users featuring animated 3D avatars that talk in real time using Grok.

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you next week! 😊