NVIDIA’s Single-Slot GPUs for 3x Faster GenAI

Plus: Amazon Music launches Maestro, an AI-based playlist generator, and Stanford’s report reflects industry dominance and rising training costs in AI.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 255th edition of The AI Edge newsletter. This edition features “NVIDIA’s Single-Slot GPUs for 3x Faster GenAI.”

And a huge shoutout to our amazing readers. We appreciate you😊

In today’s edition:

🎮 NVIDIA RTX A400 and A1000: Lower-cost single-slot GPUs

🎵 Amazon Music launches Maestro, an AI-based playlist generator

📊 Stanford’s report reflects industry dominance and rising training costs in AI

📚 Knowledge Nugget: Which LLM is the Best? Let's Build a Model Evaluation Framework and Find Out

Let’s go!

NVIDIA RTX A400 and A1000: Lower-cost single-slot GPUs

NVIDIA is expanding its lineup of professional RTX graphics cards with two new desktop GPUs: the RTX A400 and the RTX A1000. These new GPUs are designed to bring enhanced AI and ray-tracing capabilities to workstation-class computers. The RTX A1000 GPU is already available from resellers, while the RTX A400 GPU is expected to launch in May.

NVIDIA RTX A400

With 24 Tensor Cores for AI processing, the A400 enables professionals to run AI apps, such as intelligent chatbots and copilots, directly on their desktops. The GPU also allows creatives to produce vivid, physically accurate 3D renderings. The A400 features four display outputs, making it ideal for high-density display environments such as financial services, command and control, retail, and transportation.

NVIDIA RTX A1000

With 72 Tensor Cores, the A1000 offers 3x faster generative AI processing for tools like Stable Diffusion. The A1000 also excels in video processing, as it can process up to 38% more encoding streams and offers up to 2x faster decoding performance than the previous generation. With their slim single-slot design and power consumption of just 50W, the A400 and A1000 GPUs offer impressive features for compact, energy-efficient workstations.

Why does it matter?

NVIDIA RTX A400 and A1000 GPUs bring AI, graphics, and compute capabilities to compact workstations. Industrial designers, creatives, architects, engineers, healthcare teams, and financial professionals can use them to speed up their workflows and get more accurate results. With a single-slot design and 50W power draw, these GPUs could make desktop AI practical in more industries.

Amazon Music launches Maestro, an AI-based playlist generator

Amazon Music is launching its AI-powered playlist generator, Maestro, following a similar feature introduced by Spotify. Maestro allows users in the U.S. to create playlists by speaking or writing prompts. The AI will then generate a song playlist that matches the user's input. This feature is currently in beta and is being rolled out to a subset of Amazon Music's free, Prime, and Unlimited subscribers on iOS and Android.

As Spotify did with its AI playlist generator, Amazon has built safeguards to block inappropriate prompts. However, the technology is still new, and Amazon warns that Maestro "won't always get it right the first time."

Why does it matter?

Introducing AI-powered playlist generators could profoundly impact how we discover and consume music in the future. These AI tools can revolutionize music curation and personalization by allowing users to create highly tailored playlists simply through prompts. This trend could increase user engagement, drive more paid subscriptions, and spur further innovation in AI-powered music experiences as companies offer more cutting-edge features.

Stanford’s report reflects industry dominance and rising training costs in AI

The AI Index, an independent report by the Stanford Institute for Human-Centered Artificial Intelligence (HAI), provides a comprehensive overview of global AI trends in 2023.

The report states that industry outpaced academia in AI development and deployment. Of the 149 foundation models published in 2023, 108 (72.5%) came from industry, compared with just 28 (18.8%) from academia.

Google (18) leads the way, followed by Meta (11), Microsoft (9), and OpenAI (7).

The United States leads as the top source, with 109 of the 149 foundation models, followed by China (20) and the UK (9). For notable machine learning models, the United States again tops the chart with 61, followed by China (15) and France (8).

As for training costs, Gemini Ultra leads with an estimated training cost of $191 million, followed by GPT-4 at an estimated $78 million.

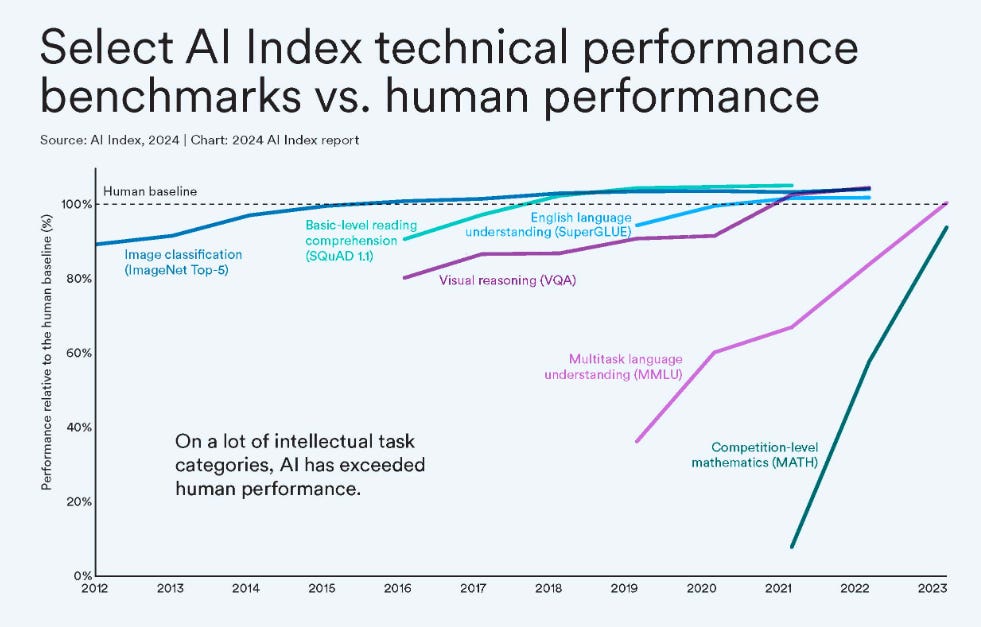

Lastly, in 2023, AI reached human-level performance on many key benchmarks, including reading comprehension, English understanding, visual reasoning, and image classification.

Why does it matter?

Industry dominance in AI research suggests that companies will continue to drive advancements in the field, leading to more advanced and capable AI systems. However, the rising costs of AI training may pose challenges, as they could limit access to cutting-edge AI technology for smaller organizations and researchers.

Enjoying the daily updates?

Refer your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: Which LLM is the Best? Let's Build a Model Evaluation Framework and Find Out

In this article, the author discusses the difficulty of determining the best LLM, as different benchmarks and evaluation methods can produce different results. He argues that no single LLM will be the best for every use case and that factors like cost, inference time, and tuning options matter.

To address this, the author introduces an open-source LLM evaluation framework called BSD_Evals. This framework allows individuals to create an evaluation process tailored to their specific use cases. With BSD_Evals, users can write prompts, provide expected responses, and test various LLM providers and cloud services.

The framework generates an evaluation summary, a matrix showing how each model performed, and a runtime matrix displaying API response times. The author suggests that BSD_Evals can be used to evaluate model performance, test prompt engineering, monitor production applications, and more.
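To make the idea concrete, here is a minimal Python sketch of this kind of evaluation loop. It is not the BSD_Evals API: the names `EvalCase`, `run_evals`, `summarize`, and `make_stub` are hypothetical, and the two "models" are canned stubs rather than real LLM providers, so the example runs without any API keys. Scoring is a simple case-insensitive exact match, which is only one of many possible ways to compare a response against an expected answer.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class EvalCase:
    """One prompt paired with the response we expect (hypothetical type)."""
    name: str
    prompt: str
    expected: str


def run_evals(
    models: Dict[str, Callable[[str], str]],
    cases: List[EvalCase],
) -> Tuple[Dict[str, Dict[str, bool]], Dict[str, Dict[str, float]]]:
    """Run every case against every model.

    Returns a pass/fail matrix and a runtime matrix (seconds),
    both keyed by model name, then by case name.
    """
    passed: Dict[str, Dict[str, bool]] = {}
    runtimes: Dict[str, Dict[str, float]] = {}
    for model_name, ask in models.items():
        passed[model_name] = {}
        runtimes[model_name] = {}
        for case in cases:
            start = time.perf_counter()
            answer = ask(case.prompt)
            runtimes[model_name][case.name] = time.perf_counter() - start
            # Assumption: case-insensitive exact match is good enough here.
            passed[model_name][case.name] = (
                answer.strip().lower() == case.expected.strip().lower()
            )
    return passed, runtimes


def summarize(passed: Dict[str, Dict[str, bool]]) -> Dict[str, float]:
    """Fraction of cases each model passed (0.0 if it ran no cases)."""
    return {
        model: (sum(row.values()) / len(row) if row else 0.0)
        for model, row in passed.items()
    }


def make_stub(answers: Dict[str, str]) -> Callable[[str], str]:
    """Build a fake model that returns canned answers (stand-in for an API call)."""
    return lambda prompt: answers.get(prompt, "")


cases = [
    EvalCase("arithmetic", "What is 2 + 2? Answer with a number only.", "4"),
    EvalCase("capital", "What is the capital of France? One word.", "Paris"),
]

models = {
    "stub_a": make_stub({cases[0].prompt: "4", cases[1].prompt: "paris"}),
    "stub_b": make_stub({cases[0].prompt: "4", cases[1].prompt: "Lyon"}),
}

pass_matrix, runtime_matrix = run_evals(models, cases)
print(summarize(pass_matrix))
```

In a real setup, each stub would be replaced by a function that calls a provider's API, and the runtime matrix would then reflect actual response times.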

Why does it matter?

Claims about the best LLM are often based on benchmarks that may not accurately reflect real-world performance for a particular application. With a customized evaluation framework like BSD_Evals, we could see more objective comparisons of LLMs, better matching of models to applications, and improved monitoring of LLMs. It could also empower individuals to make informed decisions about choosing the right LLM for their needs and applications.

What Else Is Happening❗

💰 OpenAI offers a 50% discount for off-peak GPT usage

Users can now leverage GPT-4's capabilities at a 50% discount during off-peak hours. It makes the GPT-4 language model more accessible than ever. Individual researchers, startups, or small businesses can experiment with GPT-4 at an affordable price. Users can also schedule GPT-4 tasks for off-peak hours to optimize costs, especially for projects with flexible deadlines. (Link)

💻 AMD unveils AI chips for business laptops and desktops

AMD has introduced a new series of semiconductors for AI-enabled business laptops and desktops as the chip designer looks to expand its share of the lucrative "AI PC" market. These chips will be available on HP and Lenovo platforms starting in the second quarter of 2024. These PCs can run LLMs and AI apps directly on the device. (Link)

🧠 Anthropic Claude 3 Opus is now available on Amazon Bedrock

Anthropic’s most advanced LLM, Claude 3 Opus, is now accessible through Amazon Bedrock. Amazon Bedrock is the first managed service to support all three models in Anthropic’s Claude 3 family. Developers can use Claude 3 Opus to build generative AI applications that automate tasks, develop revenue workflows, conduct financial forecasts, and even help with R&D. (Link)

👤 Zendesk launches an AI-powered customer experience platform

Zendesk unveiled an AI-powered customer experience platform that comprises “highly sophisticated” AI agents, an agent copilot, and new capabilities that ensure proper support staffing and enhance the quality of AI responses. All these features constitute the end-to-end solution for brands that want to manage the quantity and quality of customer interactions. (Link)

💼 Intel and The Linux Foundation launch Open Platform for Enterprise AI (OPEA)

Intel and the Linux Foundation announced the launch of the Open Platform for Enterprise AI (OPEA), a project to foster the development of open, multi-provider, and composable generative AI systems. OPEA’s goal is to pave the way for releasing “hardened,” “scalable” generative AI systems that harness the best open-source innovation from across the ecosystem. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊