Nvidia launches offline AI chatbot trainable on local data

Plus: ChatGPT can remember conversations, Cohere launches open-source LLM in 101 languages

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 210th edition of The AI Edge newsletter. This edition brings you Nvidia's new offline AI chatbot, which can be trained on local data.

And a huge shoutout to our amazing readers. We appreciate you😊

In today’s edition:

💻 Nvidia launches offline AI chatbot trainable on local data

🧠 ChatGPT can now remember conversations

🌐 Cohere launches open-source LLM in 101 languages

📚 Knowledge Nugget: Skills for Succeeding in the AI-driven future

Let’s go!

Nvidia launches offline AI chatbot trainable on local data

NVIDIA has released Chat with RTX, a new tool allowing users to create customized AI chatbots powered by their own local data on Windows PCs equipped with GeForce RTX GPUs. Users can rapidly build chatbots that provide quick, relevant answers to queries by connecting the software to files, videos, and other personal content stored locally on their devices.

Features of Chat with RTX include support for multiple data formats (text, PDFs, video, etc.), access to open LLMs such as Mistral, offline operation for privacy, and fast performance on RTX GPUs. Potential applications range from personalized recommendations based on influencer videos to extracting answers from personal notes or archives.

Why does this matter?

OpenAI and its cloud-based approach now face fresh competition from this Nvidia offering as it lets solopreneurs develop more tailored workflows. It shows how AI can become more personalized, controllable, and accessible right on local devices. Instead of relying solely on generic cloud services, businesses can now customize chatbots with confidential data for targeted assistance.

ChatGPT can now remember conversations

OpenAI is testing a memory capability that lets ChatGPT recall details from past conversations to provide more helpful, personalized responses. Users can tell ChatGPT what to remember or forget, either in conversation or through settings. Over time, ChatGPT will offer increasingly relevant suggestions based on users' preferences, so they don't have to repeat them.

The feature is rolling out to a small number of Free and Plus users, and OpenAI says it will share broader plans soon. OpenAI also notes that memory raises new privacy considerations, so ChatGPT is designed not to proactively retain sensitive information without permission.

Why does this matter?

ChatGPT's memory feature allows for more personalized, context-aware interactions. Its ability to recall specifics from earlier conversations brings AI assistants one step closer to feeling like cooperative partners rather than neutral tools. For companies, remembering user preferences increases efficiency, while individuals may find their interactions with AI companions more natural.

Cohere launches open-source LLM in 101 languages

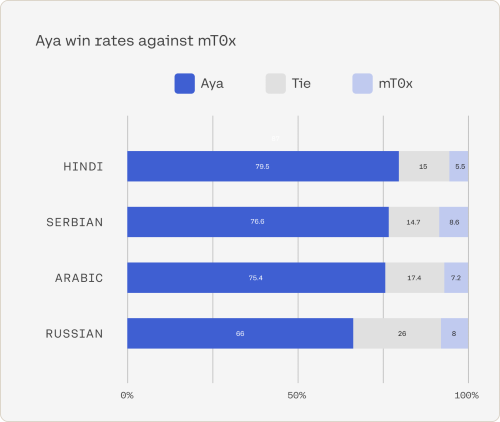

Cohere has launched Aya, a new open-source LLM supporting 101 languages, more than twice as many as existing open-source models support. Backed by a large dataset covering lesser-resourced languages, Aya aims to unlock AI's potential for overlooked cultures. Benchmarks show Aya significantly outperforms other open-source massively multilingual models.

The release tackles the scarcity of non-English training data that limits AI progress. By providing rare non-English fine-tuning demonstrations, it enables customization in 50+ previously unsupported languages. Experts emphasize that Aya represents a crucial step toward preserving linguistic diversity.

Why does this matter?

With over 100 languages supported, more communities globally can benefit from generative models tailored to their cultural contexts. It also signifies an ethical shift: recognizing AI’s real-world impact requires serving people inclusively. Models like Aya, trained on diverse data, inch us toward AI that can help everyone.

Enjoying the daily updates?

Refer your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: Skills for Succeeding in the AI-driven future

In this insightful article,

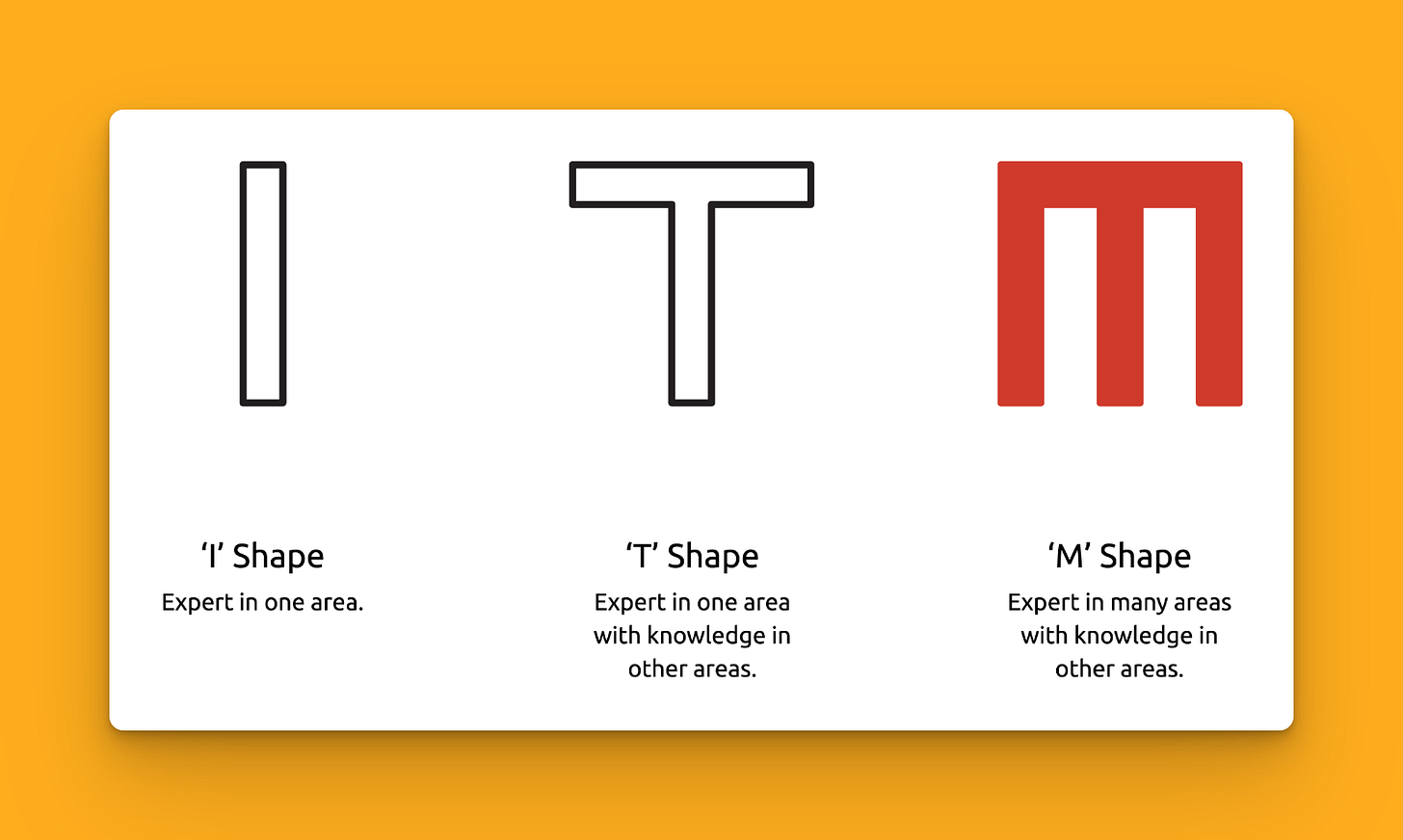

explains that retaining expansive knowledge is becoming unnecessary with AI's instant informational access. We need skills like adapting to new tech, having diverse abilities, and sound judgment in a flood of information.It is equally important to shift our thinking. We don't need to understand AI tech details deeply. We should use higher-level knowledge to handle complexity and guide AI's potential. Now, it's about moving from narrow skills to broad industry know-how. Working well with AI needs skills that cut across different areas. People with updated skills working with AI can bring out incredible innovation.

Why does this matter?

The accelerating pace of AI and automation demands that professionals become adaptable generalists with “M-shaped” skill sets rather than narrow specialists. Companies that restructure roles and operations around these multidimensional workers will unlock more creativity and value. For individuals, continuously learning adaptable skills in this AI environment creates new career options.

What Else Is Happening❗

🆕 Nous Research released 1M-Entry 70B Llama-2 model with advanced steerability

Nous Research has released its largest model yet, Nous Hermes 2 Llama-2 70B, trained on over 1 million entries of primarily synthetic, GPT-4-generated data. The model uses the more structured ChatML prompt format, which is compatible with OpenAI's chat format and enables advanced multi-turn chat dialogues. (Link)
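For readers unfamiliar with ChatML, here is a minimal sketch of how a multi-turn conversation is typically serialized into that format before being sent to a chat model. The `<|im_start|>`/`<|im_end|>` markers follow the commonly published ChatML convention; the helper name `to_chatml` and the sample messages are hypothetical, and the exact template any given model expects should be checked against its model card.

```python
def to_chatml(messages, add_generation_prompt=True):
    """Serialize a list of {"role", "content"} dicts into a ChatML prompt string.

    Hypothetical helper for illustration; not part of any Nous Research library.
    """
    parts = []
    for msg in messages:
        # Each turn is wrapped in start/end markers, with the role on the first line.
        parts.append(f"<|im_start|>{msg['role']}\n{msg['content']}<|im_end|>\n")
    if add_generation_prompt:
        # Open an assistant turn so the model generates its reply from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


# Hypothetical example conversation.
conversation = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Summarize ChatML in one sentence."},
]
print(to_chatml(conversation))
```

Because roles are explicit tokens rather than free-form text, the same structure extends naturally to long multi-turn dialogues.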

💬 Otter launches AI meeting buddy that can catch up on meetings

Otter has introduced a new feature that lets its AI chatbot query past transcripts, in-channel team conversations, and auto-generated overviews. This AI suite aims to outperform and replace paid offerings from competitors such as Microsoft, Zoom, and Google by simplifying recall and boosting productivity for users drawing on Otter's complete meeting data. (Link)

⤴️ OpenAI CEO forecasts smarter multitasking GPT-5

At the World Government Summit, OpenAI CEO Sam Altman remarked that the upcoming GPT-5 model will be smarter, faster, more multimodal, and better at everything across the board due to its generality. There are rumors that GPT-5 could be a multimodal AI called "Gobi" slated for release in spring 2024 after training on a massive dataset. (Link)

🎤 ElevenLabs expands its Speech to Speech tool to 29 languages

ElevenLabs's Speech to Speech is now available in 29 languages. The tool, launched in November, lets users transform their voice into another character with full control over emotion, timing, and delivery. This update makes it more inclusive! (Link)

🧳 Airbnb plans to build ‘most innovative AI interfaces ever’

Airbnb plans to use AI, including technology from its recent acquisition of stealth startup GamePlanner, to evolve its interface into an adaptive "ultimate concierge". Airbnb executives believe generative models themselves are underutilized and want to focus on improving the AI application layer to deliver more personalized, cross-category services. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊