Hello, Engineering Leaders and AI enthusiasts

Today, we’ll focus on NVIDIA, the world leader in AI computing. The company stands at the forefront of AI as an engine of innovation.

Let’s recap NVIDIA’s major announcements to trace its evolution.

Let’s go!

🛡️ NVIDIA launches software that builds AI guardrails

🧠 NVIDIA introduces Real-Time Neural Appearance Models

💥 NVIDIA uses AI to bring NPCs to life

🏙️ Neuralangelo, NVIDIA’s new AI model, turns 2D video into 3D structures

🌟 NVIDIA’s Biggest AI Breakthroughs

🆕 NVIDIA’s tool to curate trillion-token datasets for pretraining LLMs

📈 NVIDIA’s new software boosts LLM performance by 8x

🖌️ Getty Images’s new AI art tool powered by NVIDIA

🤝 NVIDIA's new collab for text-to-3D AI

⚡ NVIDIA brings 4x AI boost with TensorRT-LLM

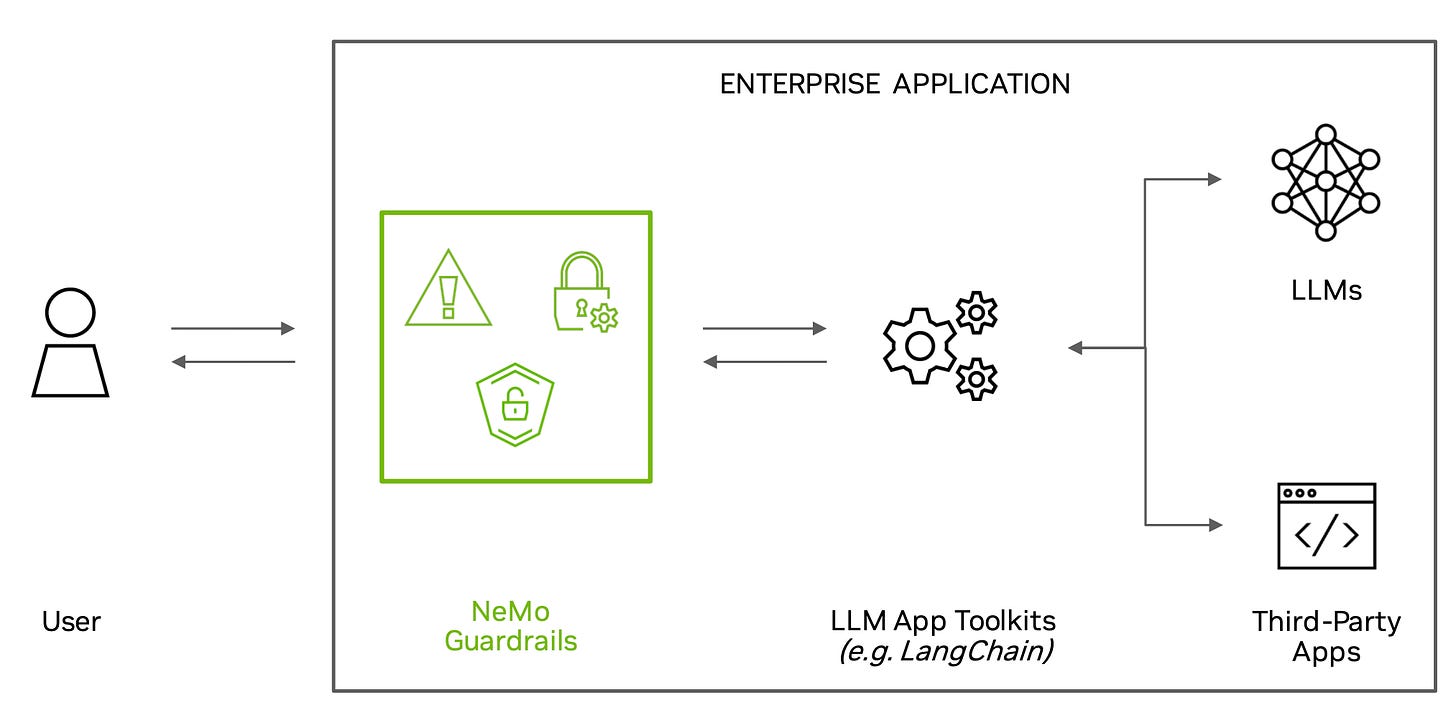

NVIDIA Introduces NeMo Guardrails: Open-Source AI Chatbot Security Solution

NVIDIA unveiled NeMo Guardrails, open-source software designed to help developers ensure that AI chatbots powered by large language models stay accurate, appropriate, secure, and on-topic. NeMo Guardrails lets developers set topical, safety, and security boundaries for AI applications, so that the generated text remains within the desired domain and adheres to the company's safety and security requirements.

The software is compatible with all LLMs, including OpenAI's ChatGPT, and can be easily integrated into popular tools like LangChain and Zapier. The software is part of the NVIDIA NeMo framework, available on GitHub.
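To make the idea of a topical boundary concrete, here is a minimal sketch in plain Python. It is not the NeMo Guardrails API: the allow-list, the `topical_rail` wrapper, and the `fake_llm` stand-in are all hypothetical names invented for this illustration, and a real guardrail would use far more robust topic detection than keyword matching.

```python
from typing import Callable

# Hypothetical allow-list for a support bot; not part of NeMo Guardrails.
ALLOWED_TOPICS = {"billing", "shipping", "returns"}
REFUSAL = "Sorry, I can only help with billing, shipping, or returns."


def topical_rail(llm: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap an LLM callable so off-topic prompts never reach the model."""
    def guarded(prompt: str) -> str:
        # Crude topic check: look for any allowed keyword in the prompt.
        words = {w.strip(".,!?").lower() for w in prompt.split()}
        if words & ALLOWED_TOPICS:
            return llm(prompt)
        return REFUSAL
    return guarded


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (an assumption of this sketch)."""
    return f"[model answer to: {prompt}]"


bot = topical_rail(fake_llm)
print(bot("What is your returns policy?"))  # forwarded to the model
print(bot("Who will win the election?"))    # blocked by the rail
```

The point of the wrapper shape is that the boundary sits outside the model, so the same check can be placed in front of any LLM.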

NVIDIA's Real-Time Neural Appearance Models deliver a level of realism never seen before

NVIDIA Research shared a paper describing a system that renders scenes with complex appearances in real time, a level of quality previously reserved for offline rendering. The result comes from a combination of algorithmic and system-level innovations.

The appearance model uses learned hierarchical textures interpreted through neural decoders that generate reflectance values and importance-sampled directions. The decoders incorporate two graphics priors to support accurate mesoscale reconstruction and efficient importance sampling, facilitating anisotropic sampling and level-of-detail rendering.

NVIDIA uses AI to bring NPCs to life

NVIDIA has announced the NVIDIA Avatar Cloud Engine (ACE) for Games. This cloud-based service provides developers access to various AI models, including NLP models, facial animation models, and motion capture models.

ACE for Games can create NPCs that can have intelligent, unscripted, and dynamic conversations with players, express emotions, and realistically react to their surroundings.

It can help developers in:

Creating more realistic and believable NPCs with more natural and engaging conversations with players.

Saving time and money by providing them access to various AI models.

Neuralangelo, NVIDIA’s new AI model, turns 2D video into 3D structures

NVIDIA Research has introduced a new AI model for 3D reconstruction called Neuralangelo. It uses neural networks to turn 2D video clips from any device, from a cell phone to a drone, into detailed 3D structures, generating lifelike virtual replicas of buildings, sculptures, and other real-world objects.

Neuralangelo’s ability to translate the textures of complex materials (including roof shingles, panes of glass, and smooth marble) from 2D videos to 3D assets significantly surpasses prior methods. This high fidelity makes it easier for developers and creative professionals to rapidly create usable virtual objects for their projects from smartphone footage.

NVIDIA’s Biggest AI Breakthroughs

Reveals a new chip GH200

NVIDIA announced a new chip, the GH200, designed to run AI models. It has the same GPU as the H100, NVIDIA’s current highest-end AI chip, but pairs it with 141 gigabytes of cutting-edge memory and a 72-core ARM central processor. This processor is designed for scaling out the world’s data centers.

The adoption of Universal Scene Description (OpenUSD)

NVIDIA announced new frameworks, resources, and services to accelerate the adoption of Universal Scene Description (USD), also known as OpenUSD. Through its Omniverse platform and a range of technologies and APIs, including ChatUSD and RunUSD, NVIDIA aims to advance the development of OpenUSD, a 3D framework that enables interoperability between software tools and data types for creating virtual worlds.

An AI Workbench

NVIDIA introduced AI Workbench, a developer toolkit that simplifies creating, testing, and customizing pre-trained generative AI models. The toolkit allows developers to scale these models to various platforms, including PCs, workstations, enterprise data centers, public clouds, and NVIDIA DGX Cloud. NVIDIA expects this to speed up the adoption of custom generative AI by enterprises worldwide.

The Partnership between NVIDIA and Hugging Face

NVIDIA and Hugging Face have partnered to bring generative AI supercomputing to developers. Integrating NVIDIA DGX Cloud into the Hugging Face platform will accelerate the training and tuning of large language models (LLMs) and make it easier to customize models for various industries. This partnership aims to connect millions of developers to powerful AI tools, enabling them to build advanced AI applications more efficiently.

NVIDIA’s tool to curate trillion-token datasets for pretraining LLMs

Most tools built to create massive datasets for training LLMs are either not publicly released or not scalable. As a result, LLM developers have to build their own tools to curate large language datasets. To meet this growing need, NVIDIA has developed and released the NeMo Data Curator, a scalable data-curation tool that enables you to curate trillion-token multilingual datasets for pretraining LLMs. It can scale its curation tasks to thousands of compute cores.

The tool curates high-quality data that leads to improved LLM downstream performance and will significantly benefit LLM developers attempting to build pretraining datasets.
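For a feel of what data curation involves, here is a single-machine sketch of two common steps, a heuristic quality filter and exact deduplication. Choosing these two steps is an assumption made for illustration, and the function names and the `min_words` threshold are hypothetical; this is not the NeMo Data Curator API, which distributes such work across many cores.

```python
import hashlib
from typing import Iterable, Iterator


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash the same."""
    return " ".join(text.lower().split())


def curate(docs: Iterable[str], min_words: int = 5) -> Iterator[str]:
    """Yield documents that pass a length filter and are not exact duplicates."""
    seen = set()
    for doc in docs:
        norm = normalize(doc)
        if len(norm.split()) < min_words:  # heuristic quality filter (assumed)
            continue
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:  # exact duplicate after normalization
            continue
        seen.add(digest)
        yield doc


raw = [
    "The quick brown fox jumps over the lazy dog.",
    "the quick  brown fox jumps over the lazy dog.",  # duplicate after normalizing
    "Click here!",                                    # too short to keep
    "Large language models are trained on curated text.",
]
kept = list(curate(raw))
print(kept)
```

Hashing the normalized text keeps memory use proportional to the number of unique documents rather than their total size, which is one reason this pattern is popular for large corpora.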

NVIDIA’s new software boosts LLM performance by 8x

NVIDIA has developed software called TensorRT-LLM to supercharge LLM inference on H100 GPUs. It includes optimized kernels, pre- and post-processing steps, and multi-GPU/multi-node communication primitives for high performance. It allows developers to experiment with new LLMs without deep knowledge of C++ or NVIDIA CUDA. The software also offers an open-source modular Python API for easy customization and extensibility.

(Comparisons between an NVIDIA A100 and NVIDIA H100)

Additionally, it allows users to quantize models to FP8 format for better memory utilization. TensorRT-LLM aims to boost LLM deployment performance and is available in early access, soon to be integrated into the NVIDIA NeMo framework. Users can apply for access through the NVIDIA Developer Program, with a focus on enterprise-grade AI applications.
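The memory benefit of FP8 is easy to estimate with back-of-the-envelope arithmetic: an FP8 value takes one byte, while FP16 takes two. The sketch below assumes a hypothetical 70-billion-parameter model and counts the weights only, ignoring activations, the KV cache, and any quantization scaling factors.

```python
# Bytes needed to store one parameter in each format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


params = 70e9  # hypothetical 70-billion-parameter model
for dtype in ("fp16", "fp8"):
    print(f"{dtype}: {weight_memory_gb(params, dtype):.0f} GB")
# prints:
# fp16: 140 GB
# fp8: 70 GB
```

Halving the weight footprint can leave more GPU memory for larger batches or longer contexts, though the actual gain depends on the model and the runtime.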

Getty Images’s new AI art tool powered by NVIDIA

Getty Images has launched a generative AI art tool called Generative AI by Getty Images, which uses an AI model provided by NVIDIA to render images from text descriptions. The tool is designed to be "commercially safer" than rival solutions, with safeguards to prevent disinformation and copyright infringement.

Getty Images will compensate contributors whose work is used to train the AI generator and share revenues generated from the tool. The tool can be accessed on Getty's website or integrated into apps and websites through an API, with pricing based on prompt volume.

NVIDIA's new collab for text-to-3D AI

NVIDIA and Masterpiece Studio have launched a new text-to-3D AI playground called Masterpiece X - Generate. The tool aims to make 3D art more accessible by using generative AI to create 3D models based on text prompts. It is browser-based and requires no prior knowledge or skills.

Users simply type in what they want to see, and the program generates the 3D model. While it may not be suitable for high-fidelity or AAA game assets, it is great for quickly iterating and exploring ideas.

The resulting assets are compatible with popular 3D software. The tool is available on mobile and works on a credit basis. By creating an account, you'll get 250 credits and will be able to use Generate freely.

NVIDIA brings 4x AI boost with TensorRT-LLM

NVIDIA is bringing TensorRT-LLM, its library for accelerating LLM inference, to Windows, providing up to a 4x boost on consumer PCs running GeForce RTX and RTX Pro GPUs. The update includes a new scheduler called in-flight batching, which lets smaller queries be processed dynamically alongside larger, compute-intensive tasks.
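To see why this kind of scheduling helps, here is a toy simulation that compares it with static batching. It is a sketch under simplifying assumptions, not TensorRT-LLM's actual scheduler: each request is reduced to a number of decode iterations, prefill cost is ignored, and the request lengths and slot count are made up.

```python
from collections import deque
from typing import List


def static_batching_steps(lengths: List[int], slots: int) -> int:
    """Static batching: each batch runs until its longest request finishes."""
    total = 0
    for i in range(0, len(lengths), slots):
        total += max(lengths[i:i + slots])
    return total


def in_flight_batching_steps(lengths: List[int], slots: int) -> int:
    """In-flight batching: a finished request frees its slot immediately,
    and a waiting request takes that slot on the next iteration."""
    queue = deque(lengths)
    active: List[int] = []
    steps = 0
    while queue or active:
        # Fill any free slots from the waiting queue.
        while queue and len(active) < slots:
            active.append(queue.popleft())
        # Run one decode iteration for every active request.
        active = [remaining - 1 for remaining in active]
        # Evict finished requests so their slots can be reused.
        active = [remaining for remaining in active if remaining > 0]
        steps += 1
    return steps


# Hypothetical workload: two long requests mixed with many short ones.
requests = [8, 1, 1, 1, 8, 1, 1, 1]
static_steps = static_batching_steps(requests, slots=2)
in_flight_steps = in_flight_batching_steps(requests, slots=2)
print(static_steps, in_flight_steps)  # prints: 18 11
```

With this workload, static batching leaves a slot idle while each long request finishes, whereas the in-flight version keeps both slots busy and completes the same work in fewer iterations.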

Optimized open-source models are now available for download, and their speedups grow with larger batch sizes. TensorRT-LLM can speed up everyday productivity tasks such as chat, document summarization, email drafting, data analysis, and content generation. It addresses outdated or incomplete model knowledge by pairing the LLM with a local library of specific datasets that it can draw on when answering. TensorRT acceleration is also now available for Stable Diffusion, speeding up generative AI diffusion models by up to 2x.

The company has also released RTX Video Super Resolution version 1.5, which improves the quality of upscaled video.

That's all for now!

Join the prestigious readership of The AI Edge alongside professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other top organizations.

Thanks for reading, and see you tomorrow. 😊

![[video-to-gif output image] [video-to-gif output image]](https://substackcdn.com/image/fetch/$s_!5VdE!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F7c1d1eb5-af47-46af-8dc8-b56261abcf1c_600x338.gif)