NVIDIA Advances Its Open-Model Strategy with Nemotron 3

Plus: OpenAI introduces GPT-5.2-Codex, Gemini 3 Flash gets faster and cheaper, and Z.ai’s GLM-4.7 tops open-source coding AI.

Hello Engineering Leaders and AI Enthusiasts!

This newsletter brings you the latest AI updates in just 4 minutes! Dive in for a quick summary of everything important that happened in AI over the last week.

And a huge shoutout to our amazing readers. We appreciate you! 😊

In today’s edition:

🚀 NVIDIA debuts Nemotron 3 family of open models

🔒 OpenAI launches GPT-5.2-Codex for secure coding

⚡ Google bets on speed with Gemini 3 Flash

🏆 Z.ai’s GLM-4.7 tops open-source coding AI

🧠 Zoom’s AI beats Gemini on tough reasoning test

💡 Knowledge Nugget: AI Is Causing Layoffs, Just Not in the Way You Think by Eric Lamb

Let’s go!

NVIDIA debuts Nemotron 3 family of open models

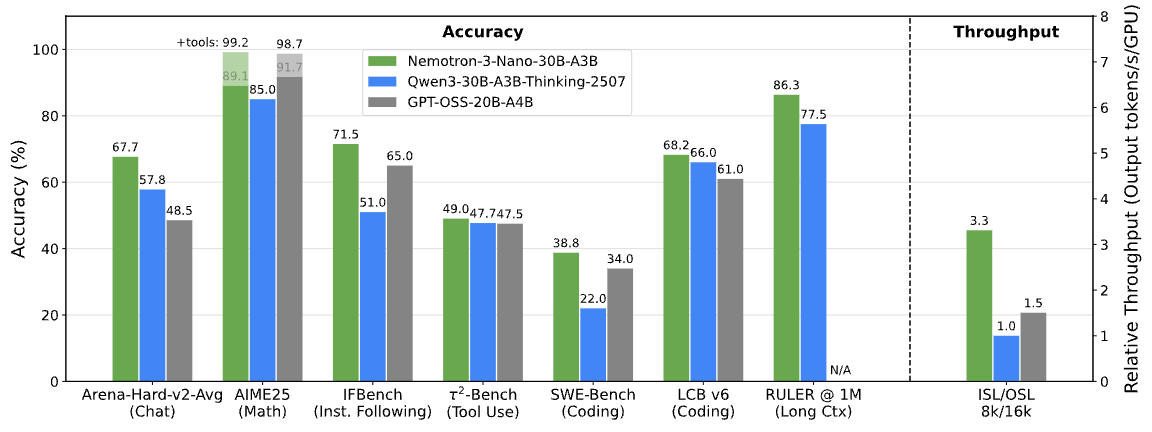

NVIDIA introduced Nemotron 3, a new family of open models built specifically for multi-agent systems, marking its most serious move yet into large-scale model development. The lineup includes Nano (30B), Super (100B), and Ultra (500B), with Nano already available and larger versions expected in 2026. NVIDIA says Nano beats similar-sized models on coding and instruction-following while running more than three times faster.

NVIDIA is also publishing training data, fine-tuning tools, and reinforcement learning environments alongside the models. Companies like Cursor, Perplexity, ServiceNow, and CrowdStrike are already using Nemotron 3 across coding, search, enterprise automation, and cybersecurity.

Why does it matter?

With many Western AI labs doubling down on closed ecosystems and in-house chips, open model momentum has drifted outside the U.S. NVIDIA reshapes that balance, giving developers a credible open option while keeping advanced AI workloads closely tied to NVIDIA’s platform.

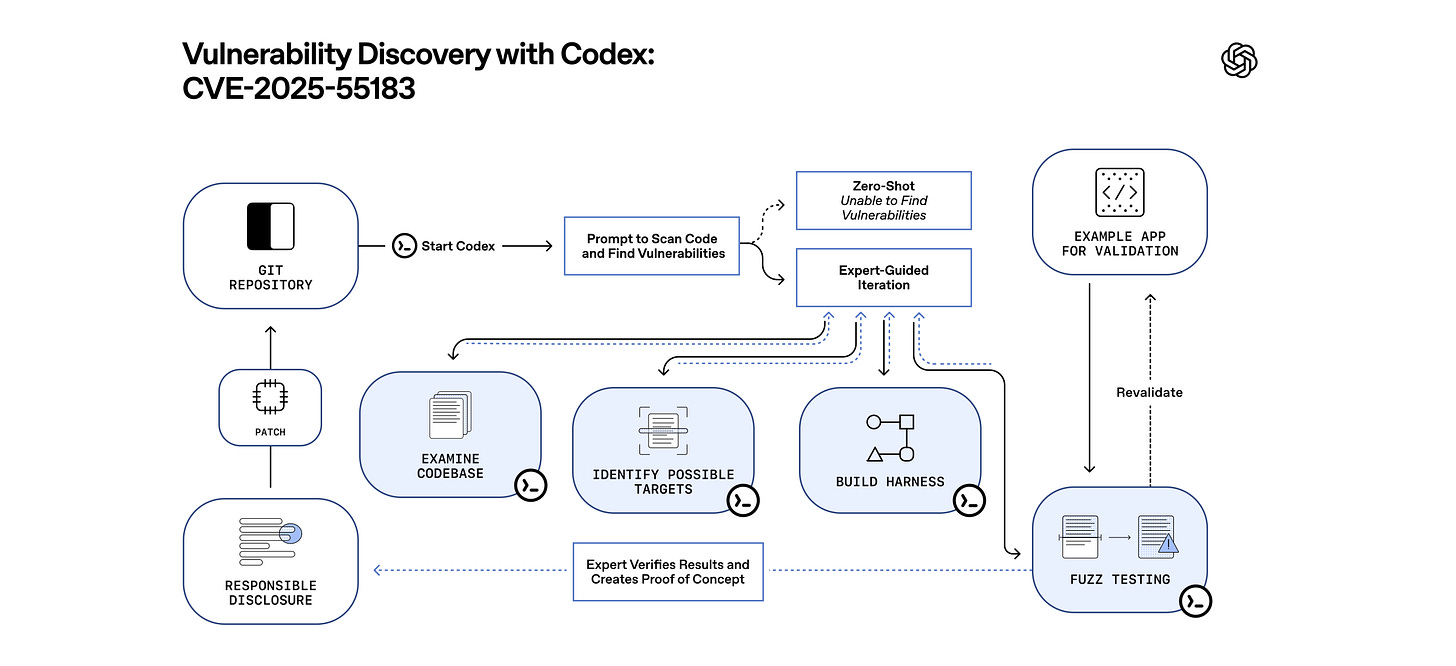

OpenAI launches GPT-5.2-Codex for secure coding

OpenAI introduced GPT-5.2-Codex, a coding-focused version of GPT-5.2 designed for real-world software engineering and cybersecurity work. The new model is tuned for long-running tasks like refactors, migrations, and debugging across large repositories, using native context compaction to hold onto critical details over hours of autonomous work.

OpenAI says GPT-5.2-Codex posts state-of-the-art results on developer benchmarks like SWE-Bench Pro and Terminal-Bench, with improvements aimed at practical reliability rather than flashy demos.

Why does it matter?

This is OpenAI quietly admitting that chatbots were the warm-up act. The real payoff is coding agents that can work for hours without losing context. If this holds up in practice, it shifts AI value from clever demos to systems that actually replace chunks of day-to-day engineering work.

Google’s Gemini 3 Flash gets faster and cheaper

Google rolled out Gemini 3 Flash, a speed-focused version of its flagship model that still holds up on frontier benchmarks. Flash runs three times faster than Gemini 3 Pro, costs a quarter as much, and matches or beats Pro across several reasoning and coding tests.

Google has already made Flash the default model across the Gemini app and Search’s AI Mode. On Humanity’s Last Exam, Flash scored 33.7%, nearly on par with GPT-5.2, signaling that faster, cheaper models are closing the gap with top-tier reasoning systems.

Why does it matter?

Somehow, the faster model ends up being the more important release. By pairing strong reasoning with low latency and aggressive pricing, Gemini 3 Flash makes high-end AI far easier to use at scale and gives Google another lever to steadily chip away at OpenAI’s lead in everyday AI workflows.

Z.ai’s GLM-4.7 tops open-source coding AI

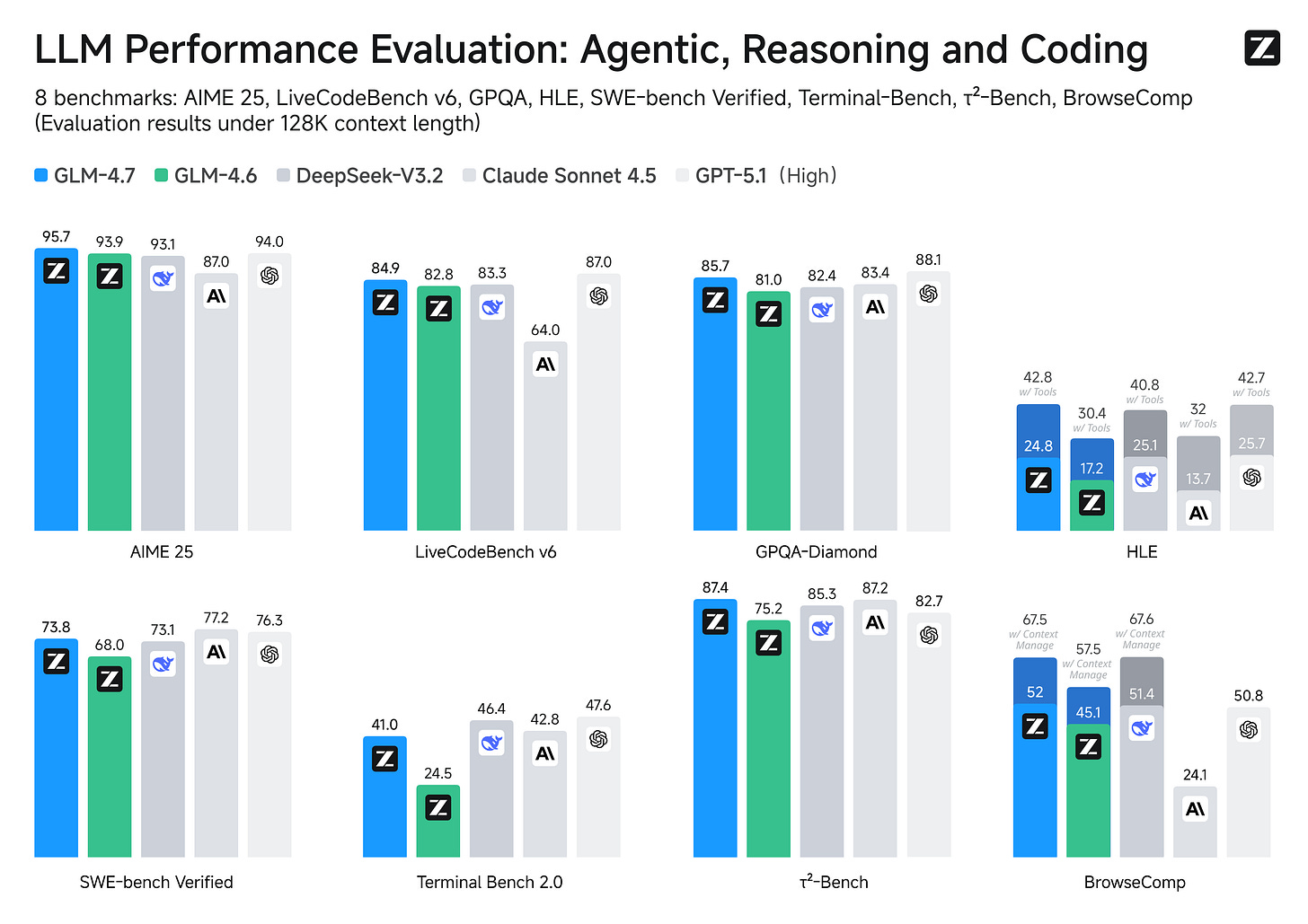

Z.ai, a Chinese AI startup, released GLM-4.7, an open-source coding model that scored 73.8% on SWE-Bench, setting a new high for open systems. That result puts it ahead of rivals like DeepSeek-V3.2 and Kimi K2, and within striking distance of top Western coding models.

Z.ai open-sourced the weights just days before its anticipated Hong Kong IPO, signaling confidence in both its technology and open-model strategy.

Why does it matter?

Chinese labs are shipping strong open models at a relentless cadence. With fresh capital and improving access to high-end compute, the gap to Western frontier systems is shrinking faster than many expected, and 2026 could be a real inflection point.

Zoom’s AI beats Gemini on tough reasoning test

Zoom says its federated AI system scored 48.1% on Humanity’s Last Exam, edging past Google’s Gemini 3 Pro on one of the toughest reasoning benchmarks in AI. Instead of relying on a single model, Zoom orchestrates leading models from OpenAI, Anthropic, and Google alongside its own smaller models, using a routing layer called Z-scorer to pick the best model per task.

The system will power AI Companion 3.0, promising stronger summaries, reasoning, and task automation across Zoom’s platform.

Why does it matter?

Zoom isn’t exactly known as an AI research heavyweight, which makes this result stand out, even if it still needs independent confirmation. The bigger takeaway is that stitching together leading models can be a faster, more realistic path for enterprises to reach high-end AI performance than trying to build a frontier model on their own.

Enjoying the latest AI updates?

Refer your pals to subscribe to our newsletter and get exclusive access to 400+ game-changing AI tools.

Knowledge Nugget: AI Is Causing Layoffs, Just Not in the Way You Think

In this piece, Eric Lamb argues that AI is being linked to layoffs in headlines, but not for the reason most people think. While 2025 saw the highest layoffs since 2020, fewer than 5% of job cuts explicitly cited AI as the cause. Economic pressure, restructuring, and market conditions dominated instead. Reports from Goldman Sachs and the Brookings Institution have found no meaningful correlation between AI exposure and job losses, wages, or hiring trends so far. In short, the data doesn’t support the idea that AI is already replacing knowledge workers at scale.

What is changing is the narrative. AI labs benefit from the urgency to justify massive investment. Media outlets benefit from attention-grabbing storylines. And corporate executives benefit from framing layoffs as future-facing “AI-driven efficiency,” even when cuts reflect older business decisions. Over time, this creates a feedback loop where AI is invoked as justification long before it delivers clear productivity gains.

Why does it matter?

AI is changing how layoffs are justified, not who gets replaced, at least for now. Treating hype as capability can push leaders into premature decisions before AI delivers measurable workforce impact.

What Else Is Happening❗

📺 Kapwing’s research finds over 20% of YouTube videos shown to new users are low-quality AI-generated content, with top channels pulling billions of views and millions in ad revenue.

🔊 Alibaba released Qwen3-TTS, a new text-to-speech model with 49 voices across 10 languages and Chinese dialects, claiming lower error rates than MiniMax and ElevenLabs with near-human naturalness.

🧩 OpenAI expanded ChatGPT’s app directory, opening submissions to third-party developers and giving users a browsable hub to discover and use integrated services directly in chats.

🔮 Stanford HAI predicts 2026 will bring a healthcare “ChatGPT moment” and a shift from AI hype to hard evaluation of real-world impact, productivity, and risk.

📊 Six leading AI models now pass all three CFA exams, with Gemini 3 Pro scoring a record 97.6% on Level I, highlighting rapid gains in financial reasoning.

🔍 A Perplexity–Harvard study finds Comet browser users rely on AI agents mainly for research and cognitive work, with usage shifting from casual tasks to deeper knowledge workflows over time.

🎧 Google rolled out Gemini-powered live speech translation to any Android headphones, supporting 70+ languages while preserving tone and context in real time.

🧪 Meta FAIR introduced Self-play SWE-RL, a coding training method where a single model creates and fixes its own bugs, boosting SWE-bench scores without human data.

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you next week! 😊