More Agents = More Performance: Tencent Research

Plus: Google DeepMind’s MC-ViT understands long-context video, and ElevenLabs lets you turn your voice into passive income.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 209th edition of The AI Edge newsletter. This edition brings you “More Agents = More Performance: Tencent Research.”

And a huge shoutout to our incredible readers. We appreciate you 😊

In today’s edition:

🔍 More Agents = More Performance: Tencent Research

🎥 Google DeepMind’s MC-ViT understands long-context video

🎙 ElevenLabs lets you turn your voice into passive income

💡 Knowledge Nugget: The myth of AI omniscience: AI's epistemological limits by @Chris Walker

Let’s go!

“More agents = more performance” - The Tencent Research Team

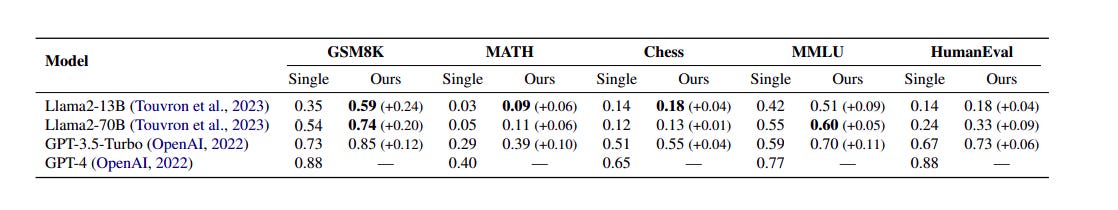

The Tencent Research Team has released a paper claiming that the performance of large language models (LLMs) can be significantly improved simply by increasing the number of agents. The researchers use a “sampling-and-voting” method: the same input task is fed to a language model multiple times, with each run acting as a separate agent that produces its own answer. Majority voting is then applied to these answers to determine the final answer.
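The sampling-and-voting loop can be sketched in a few lines of Python. This is a minimal sketch under our own assumptions, not the Tencent team's code: `query_model` is a hypothetical stand-in for whatever LLM call you use (sampled with nonzero temperature so that runs differ), and votes are counted by exact string match, which suits short answers such as numbers or multiple-choice letters.

```python
from collections import Counter
from typing import Callable, Tuple


def sample_and_vote(query_model: Callable[[str], str], prompt: str, num_agents: int) -> Tuple[str, float]:
    """Query the same model `num_agents` times and return the majority answer.

    Returns the winning answer and the fraction of agents that voted for it.
    Ties go to the answer seen first (Counter.most_common keeps first-seen order).
    `query_model` is a hypothetical callable, not a real library API.
    """
    if num_agents < 1:
        raise ValueError("num_agents must be at least 1")
    # Sampling phase: each "agent" is an independent call with the same prompt.
    answers = [query_model(prompt) for _ in range(num_agents)]
    # Voting phase: plurality vote over exact-match answers.
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / num_agents


if __name__ == "__main__":
    import random

    rng = random.Random(0)

    def noisy_model(prompt: str) -> str:
        # Hypothetical stand-in for an LLM call: right 60% of the time.
        return "42" if rng.random() < 0.6 else rng.choice(["41", "43", "44"])

    # Larger ensembles should agree on "42" more often than a single call does.
    for n in (1, 5, 15):
        print(n, sample_and_vote(noisy_model, "What is 6 * 7?", n))
```

Exact-match voting is the simplest choice; free-form outputs such as code or prose would need some measure of answer similarity before votes can be pooled.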

The researchers validate the method across different datasets and tasks, showing that performance increases with the size of the ensemble, i.e., with the number of agents (results below). They also find that smaller LLMs can match or even outperform their larger counterparts simply by scaling up the number of agents (example below).

Why does it matter?

Using multiple agents to boost LLM performance is a fresh tactic for tackling the inherent limitations and biases of single models. It delivers these gains without relying on more elaborate techniques such as chain-of-thought prompting. It is not a silver bullet, but it can be combined with those existing techniques to push performance even further.

Google DeepMind’s MC-ViT understands long-context video

Researchers from Google DeepMind and Cornell University have developed a method that helps AI systems understand longer videos. Most current models can only handle short clips, because the complexity and compute required grow quickly as videos get longer.

That’s where MC-ViT (memory-consolidated vision transformer) aims to make a difference: it stores a compressed “memory” of past video segments, letting the model refer back to earlier events efficiently. The approach is inspired by theories of human memory consolidation from neuroscience and psychology. MC-ViT achieves state-of-the-art results in action recognition and question answering on long videos while using fewer resources.
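To make the memory idea concrete, here is a toy Python sketch of streaming a video segment by segment while keeping a fixed-size memory. It is an illustration under stated assumptions, not DeepMind's implementation: token embeddings are plain lists of floats, the attention step is only marked by a comment, and consolidation uses simple k-means clustering to squeeze old and new tokens into `budget` memory vectors. `consolidate` and `stream_video` are hypothetical names.

```python
import random
from typing import List, Tuple

Vector = List[float]


def _sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two token embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _mean(vectors: List[Vector]) -> Vector:
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def consolidate(tokens: List[Vector], budget: int, iters: int = 10, seed: int = 0) -> List[Vector]:
    """Compress `tokens` into at most `budget` vectors with plain k-means.

    Assumption for illustration: k-means is one simple consolidation rule;
    this is not DeepMind's code.
    """
    if budget < 1:
        raise ValueError("budget must be at least 1")
    if len(tokens) <= budget:
        return [list(t) for t in tokens]
    rng = random.Random(seed)
    # Initialise centroids from randomly chosen tokens.
    centroids = [list(c) for c in rng.sample(tokens, budget)]
    for _ in range(iters):
        clusters: List[List[Vector]] = [[] for _ in range(budget)]
        # Assign every token to its nearest centroid.
        for t in tokens:
            nearest = min(range(budget), key=lambda i: _sq_dist(t, centroids[i]))
            clusters[nearest].append(t)
        # Move each centroid to its cluster mean (keep it if the cluster is empty).
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = _mean(members)
    return centroids


def stream_video(segments: List[List[Vector]], budget: int) -> Tuple[List[Vector], List[int]]:
    """Process a video segment by segment, keeping a bounded memory of past tokens."""
    memory: List[Vector] = []
    memory_sizes: List[int] = []
    for segment in segments:
        # A real model would let this segment's tokens attend to `memory` here,
        # so the cost per segment depends on len(segment) + budget, not video length.
        memory = consolidate(memory + segment, budget)
        memory_sizes.append(len(memory))
    return memory, memory_sizes


if __name__ == "__main__":
    rng = random.Random(1)
    # 5 segments, 8 tokens each, 4-dimensional embeddings (all synthetic).
    video = [[[rng.random() for _ in range(4)] for _ in range(8)] for _ in range(5)]
    memory, sizes = stream_video(video, budget=6)
    print(sizes)  # [6, 6, 6, 6, 6]
```

The point of the design is that the memory never grows past `budget`, so the per-segment cost stays flat however long the video runs.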

Why does it matter?

Transformer-based video encoders struggle with long sequences because the cost of self-attention grows quadratically with the number of tokens. Efforts to work around this often add complexity and slow things down. MC-ViT offers a simpler way to handle longer videos without major architectural changes.

ElevenLabs lets you turn your voice into passive income

ElevenLabs has developed an AI voice cloning model that lets you turn your voice into passive income. To take part, users must sign up for its “Voice Actor Payouts” program.

After creating an account, upload 30 minutes of audio of your voice. The model then creates a professional voice clone that closely resembles your original voice. You can share the clone in the Voice Library to make it available to ElevenLabs' growing community.

From then on, whenever someone uses your professional voice clone, you earn a reward in cash or in characters, depending on your preference. You can also choose how much to charge for your voice, either by opting into the standard royalty program or by setting a custom rate.

Why does it matter?

By leveraging ElevenLabs' AI voice cloning, users can potentially monetize their voices in various ways, such as providing narration for audiobooks, voicing virtual assistants, or even lending their voices to advertising campaigns. This innovation democratizes the field of voice acting, making it accessible to a broader audience beyond professional actors and voiceover artists. Additionally, it reflects the growing influence of AI in reshaping traditional industries.

Enjoying the daily updates?

Refer your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get credit for any new subscribers. All you need to do is send the link via text or email, or share it on social media with friends.

Knowledge Nugget: The myth of AI omniscience: AI's epistemological limits

In this article, Chris Walker challenges the idea that AI knows everything. He argues that while AI systems can excel at specific tasks, they are limited by the way they acquire knowledge. Walker points to problems such as biased data and algorithmic limitations, and notes that real-life situations are hard for AI to handle. AI doesn't think like humans; it just looks for patterns in data. Walker wants people to understand these limits and avoid becoming fully dependent on AI.

Why does it matter?

It is worth considering that this mythical image of AI may serve only to inspire awe and fear, distracting from the AI issues that really matter, such as model bias, deepfakes, misinformation, and job displacement. To seize the very real opportunities AI offers, we must dispel the myths and commit to a rational dialogue about its capabilities.

What Else Is Happening❗

🤖 NVIDIA CEO Jensen Huang advocates for each country’s sovereign AI

Speaking at the World Governments Summit in Dubai, the NVIDIA CEO made a strong case for sovereign AI. He said, “Every country needs to own the production of their own intelligence.” He further added, “It codifies your culture, your society’s intelligence, your common sense, your history – you own your own data.” (Link)

💰 Google to invest €25 million in Europe to uplift AI skills

Google has pledged 25 million euros to help people in Europe learn how to use AI. As part of this funding, Google is opening applications for social enterprises and nonprofits that can help deliver AI training. The tech giant also plans to run “growth academies” to help companies use AI to scale, and has expanded its free online AI training courses to 18 languages. (Link)

💼 NVIDIA surpasses Amazon in market value

NVIDIA Corp. briefly surpassed Amazon.com Inc. in market value on Monday but slipped back by the close: NVIDIA rose almost 0.2% to finish with a market value of about $1.78 trillion, while Amazon fell 1.2% yet still ended at $1.79 trillion. During that brief lead, NVIDIA became the 4th most valuable US-listed company, behind Alphabet, Microsoft, and Apple. (Link)

🪟 Microsoft might develop an AI upscaling feature for Windows 11

Microsoft may release an AI upscaling feature for PC gaming on Windows 11, similar to NVIDIA's Deep Learning Super Sampling (DLSS) technology. The "Automatic Super Resolution" feature, which an X user spotted in the latest test version of Windows 11, uses AI to improve frame rates and image detail in supported games. Microsoft has yet to announce the feature or any hardware requirements. (Link)

📚 Fandom rolls out controversial generative AI features

Fandom hosts wikis for many fandoms and has rolled out several generative AI features. However, some of them, like “Quick Answers,” have sparked controversy. Quick Answers generates a Q&A-style dropdown that distills information into a bite-sized sentence. Wiki creators have complained that it answers fan questions inaccurately, undermining user trust. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From ML to ChatGPT to generative AI and LLMs, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊