Microsoft’s new research with 1B tokens 💥

Plus: OpenAI’s next big goal revealed. AI can now detect wildfires.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 57th edition of The AI Edge newsletter. This edition brings you “Microsoft’s LongNet scaling transformers to 1B tokens”.

And a huge shoutout to our amazing readers. We appreciate you!😊

In today’s edition:

🤖 Microsoft’s LongNet scales transformers to 1B tokens.

⚡️ OpenAI’s Superalignment - The next big goal!

🔥 AI can now detect wildfires.

📚 Knowledge Nugget: Navigating the Complexities of LLM Quantization

Let’s go!

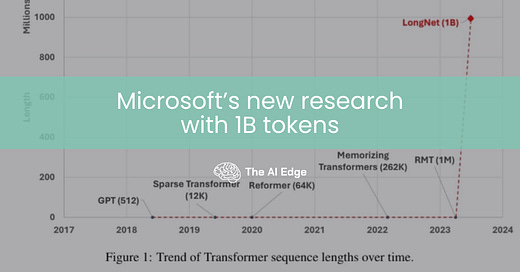

Microsoft’s LongNet scales transformers to 1B tokens

Microsoft Research’s recently introduced LongNet lets language models scale to a context window of over 1 billion tokens without sacrificing performance on shorter sequences.

LongNet achieves this through dilated attention, which expands the model's attentive field exponentially as the distance between tokens grows.

This breakthrough offers significant advantages:

1) It has linear computational complexity and a logarithmic dependency between tokens;

2) It can be used as a distributed trainer for extremely long sequences;

3) Its dilated attention can seamlessly replace standard attention in existing Transformer models.
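To make the linear-cost claim more concrete, here is a minimal sketch of the index pattern behind dilated attention. It assumes the sequence is split into fixed-length segments and only every r-th token in each segment is kept before attention is computed. The function names and the (segment length, dilation) schedule are hypothetical, chosen for illustration. This is a simplification and not Microsoft's implementation: it only counts query-key pairs and ignores causal masking and per-head offsets.

```python
def dilated_indices(seq_len, segment_len, dilation):
    """Return, for each segment, the token positions kept after dilation."""
    segments = []
    for start in range(0, seq_len, segment_len):
        end = min(start + segment_len, seq_len)
        # Keep every `dilation`-th token inside this segment.
        segments.append(list(range(start, end, dilation)))
    return segments


def attention_pairs(seq_len, configs):
    """Count query-key pairs summed over all (segment_len, dilation) configs."""
    total = 0
    for segment_len, dilation in configs:
        for kept in dilated_indices(seq_len, segment_len, dilation):
            # Tokens kept in a segment attend only to each other.
            total += len(kept) ** 2
    return total


# Hypothetical geometric schedule: segment length and dilation grow together,
# so longer-range segments are sampled more sparsely.
CONFIGS = [(4, 1), (8, 2), (16, 4)]

if __name__ == "__main__":
    for n in (16, 32, 64):
        print(n, attention_pairs(n, CONFIGS), n * n)
```

With this schedule, the dilated pair count doubles each time the sequence length doubles (112, 224, 448), while dense attention grows fourfold (256, 1024, 4096).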

Why does this matter?

LongNet’s ability to scale sequence length matters for processing and understanding long pieces of text. Existing methods struggle with either computational complexity or model expressivity, which limits the maximum sequence length. Handling sequences of over 1B tokens allows for more comprehensive analysis and modeling of textual data, enabling advancements in NLP.

OpenAI’s Superalignment - The next big goal!

OpenAI has launched Superalignment, a project dedicated to addressing the challenge of aligning artificial superintelligence with human intent. Over the next four years, 20% of OpenAI's computing power will be allocated to this endeavor. The project aims to develop scientific and technical breakthroughs by creating an AI-assisted automated alignment researcher.

This automated researcher will evaluate AI systems, automate searches for problematic behavior, and test alignment pipelines. The Superalignment team will bring together leading machine learning researchers and engineers, and it is open to collaborating with talented individuals interested in solving the problem of aligning superintelligence.

Why does this matter?

By dedicating resources and expertise to Superalignment, OpenAI aims to develop scientific and technical advancements to control AI systems effectively. This effort is crucial in safeguarding the development and deployment of AI technologies, ensuring they remain beneficial and aligned with human values as they surpass human capabilities.

AI can now detect and prevent wildfires

Cal Fire, the California Department of Forestry and Fire Protection, is using AI to detect wildfires more effectively. Advanced cameras equipped with autonomous smoke detection are reducing the reliance on human eyes to spot potential fire outbreaks.

Detecting wildfires is challenging because they often start in remote areas with limited human presence and spread unpredictably, driven by environmental factors. Addressing these challenges calls for innovative solutions and increased vigilance so that wildfires can be identified and responded to in a timely manner.

Why does this matter?

Integrating AI into wildfire detection can make a notable difference in saving lives and protecting communities. AI can help detect fires faster, support a more effective response, and enable proactive measures to keep wildfires from spreading, ensuring the safety and well-being of Californians in the face of this ongoing threat.

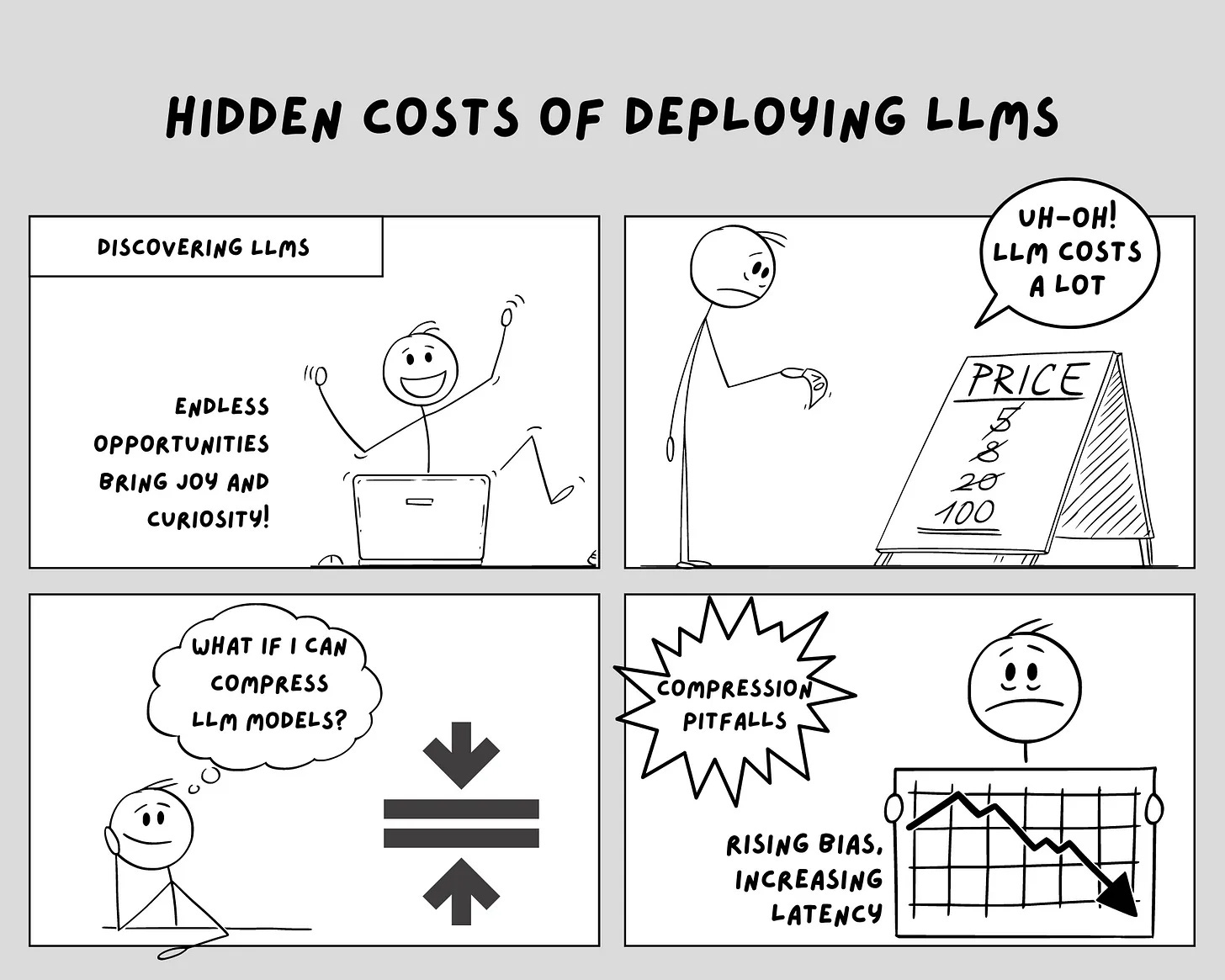

Knowledge Nugget: Navigating the Complexities of LLM Quantization: Techniques, Trade-offs, and Real-World Implications

In this article, the author discusses the current state of LLM compression. Here are the key points:

Compression can potentially shrink neural networks by about 4x with only a minimal drop in perplexity and other quality metrics.

However, compression may not deliver the expected reduction in latency.

LLMs with 10B or more parameters tend to experience a decline in quality when quantizing activations.

The impact of LLM compression on bias is currently unknown. If findings from previous studies on smaller models hold for larger LLMs, compression may have adverse effects.

Alternatively, utilizing a smaller, fine-tuned LLM for a specific task might prove more effective than compressing a significantly larger LLM.
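As a rough illustration of where a 4x size reduction can come from, here is a minimal sketch that assumes weights stored as 32-bit floats are quantized to 8-bit integers with a single per-tensor scale. The article may have a different scheme in mind. The helper names and sample weights are hypothetical, and real LLM quantization methods are considerably more involved.

```python
from array import array


def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> int8 values plus one scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [v * scale for v in q]


# Hypothetical weights, stored as 32-bit floats (4 bytes each).
weights = array("f", [0.12, -0.5, 0.33, 0.9, -0.77, 0.01, 0.45, -0.2])
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

fp32_bytes = len(weights) * weights.itemsize  # 4 bytes per weight
int8_bytes = len(q) * q.itemsize              # 1 byte per weight
max_error = max(abs(a - b) for a, b in zip(weights, restored))

if __name__ == "__main__":
    print("size ratio:", fp32_bytes / int8_bytes)
    print("max round-trip error:", max_error)
```

The storage ratio is 4 because each weight drops from four bytes to one, and the round-trip error per weight is bounded by about half a quantization step. Smaller storage does not by itself make inference faster, which is in line with the latency caveat above.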

Why does this matter?

The discussion on LLM compression's implications helps inform decision-making regarding model size reduction, latency optimization, quality assessment, bias exploration, and the selection of appropriate LLM deployment strategies. It contributes to advancing the field of language modeling and ensuring efficient and effective utilization of these powerful models in practical applications.

What Else Is Happening❗

🎥 ControlNet’s AI lets you create a FREE promo video! Isn’t it amazing? (Link)

🔍 Flacuna provides valuable insights into the performance of LLMs. (Link)

📊 Gartner survey: 79% of strategists see AI and analytics as critical to their success. (Link)

💰 Spotify CEO’s Neko Health raises $65M for full-body scan preventative healthcare. (Link)

🔬 VA researchers working on AI that can predict prostate cancer! (Link)

🚁 US to acquire 1k AI-controlled armed drones soon! (Link)

🛠️ Trending Tools

Ask Klëm: AI wardrobe stylist demystifies personal style, offering real-time answers and recommendations.

Opnbx AI: Generative AI understands prospects better than average cold emails ever could.

Automaited: World's first general process AI automating any business process with ease. Chat and automate tasks with a click.

Afforai: AI framework for document interaction. Summarization, translation, and Q&A at 5x better quality, 10x more coverage.

NeuralCam: AI camera that automatically edits photos by removing people and capturing backgrounds.

Artius: Transform existing images into professional-looking AI headshots for corporate profiles or team bios.

Solan AI: 10x more efficient content creation with ChatGPT. Tap into limitless AI potential.

Entry Point AI: The no-code platform for custom AI models. Manage data, generate examples, estimate costs, and optimize models.

That's all for now!

Subscribe to The AI Edge and join the impressive list of readers that includes professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other reputable organizations.

Thanks for reading, and see you tomorrow. 😊