Microsoft's First SoTA SLM to be Shipped with Windows

Plus: Google unveils new AI tools for branding and product marketing, Adobe introduces Firefly AI-powered Generative Remove to Lightroom.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 280th edition of The AI Edge newsletter. This edition features “Microsoft's First SoTA SLM to be Shipped with Windows.”

And a huge shoutout to our amazing readers. We appreciate you😊

In today’s edition:

🧠 Microsoft's first SoTA SLM to be shipped with Windows

📈 Google unveils new AI tools for branding and product marketing

🎨 Adobe introduces Firefly AI-powered Generative Remove to Lightroom

📚 Knowledge Nugget: RAG RAG on the Wall

Let’s go!

Microsoft's first SoTA SLM to be shipped with Windows

Microsoft announced a new small language model called Phi Silica. It has 3.3 billion parameters, which makes it the smallest model in Microsoft's Phi family. Phi Silica is designed specifically for the Neural Processing Units (NPUs) in Microsoft's new Copilot+ PCs. According to Microsoft, it processes prompt tokens at 650 tokens per second while drawing only about 1.5 watts of power. Because that work runs on the NPU, the PC's CPU and GPU stay free for other tasks.

Developers can access Phi Silica through the Windows App SDK, alongside other AI-powered features such as OCR, Studio Effects, Live Captions, and the Recall User Activity APIs. Microsoft plans to release additional APIs, including Vector Embedding, RAG, and Text Summarization. Copilot+ PCs have dedicated AI chips for running LLMs and other AI workloads.

Why does it matter?

As Microsoft continues to invest in small language models and to integrate AI into Windows, Phi Silica represents a significant step toward making advanced AI capabilities more accessible to developers and end users. With major PC manufacturers planning to introduce AI-powered laptops this summer, Microsoft may lead the way with Copilot+ PCs and now Phi Silica.

Google unveils new AI tools for branding and product marketing

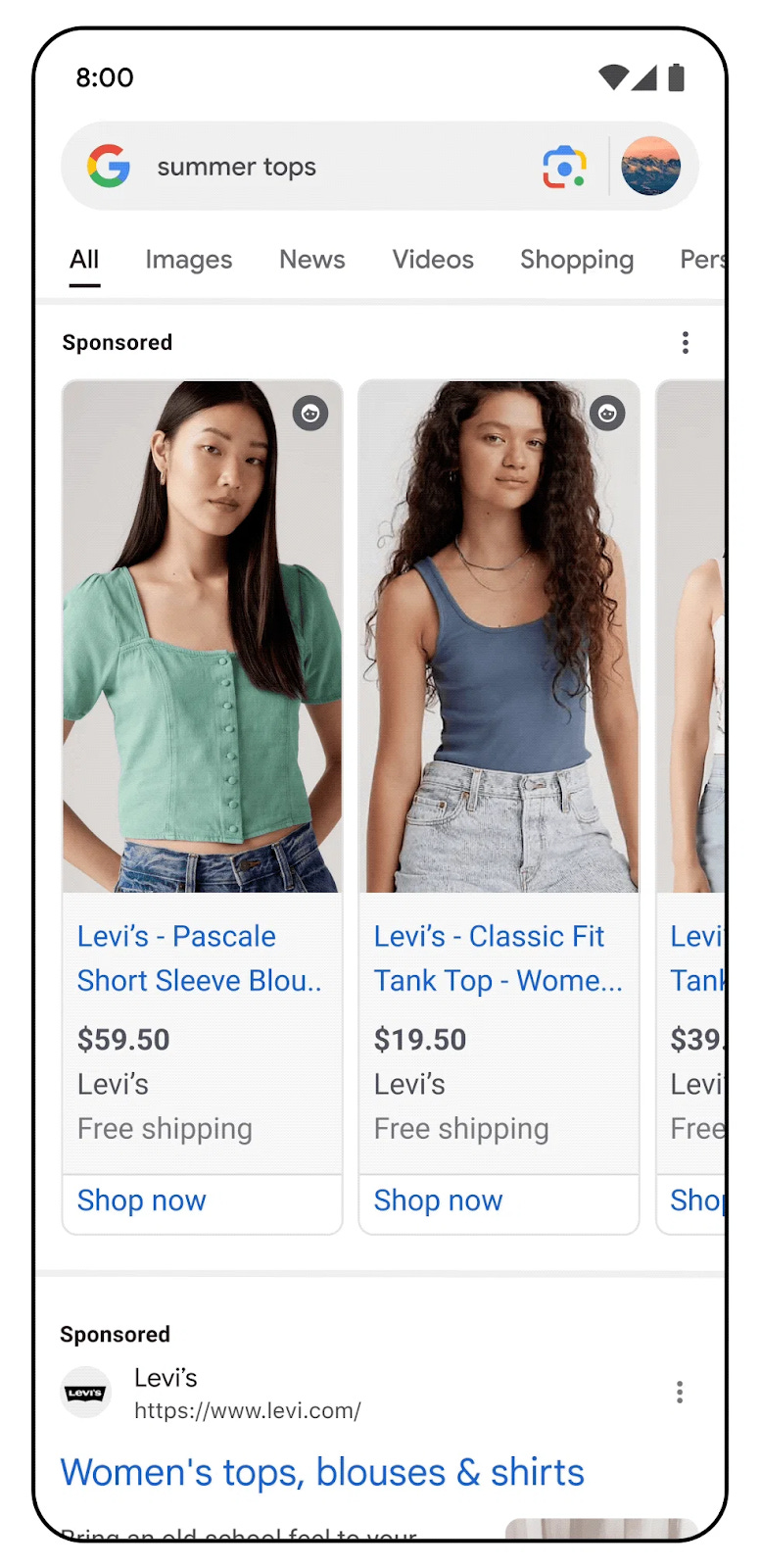

Google has introduced several new AI-powered features to help retailers and brands better connect with shoppers. First, Google has created a new visual brand profile that will appear in Google Search results. This profile uses information from Google Merchant Center and Google's Shopping Graph to showcase a brand's identity, products, and offerings.

Additionally, Google is expanding its AI-powered tools to help brands create more engaging content and ads. This includes new features in Google's Product Studio, allowing brands to generate images matching their unique style.

Google is also launching immersive ad formats powered by generative AI, such as the ability to include short product videos, virtual try-on experiences, and 3D product views directly in search ads. These new AI-driven tools aim to help brands forge stronger, more personalized connections with consumers throughout the shopping journey.

Why does it matter?

As AI continues advancing, such tools could shape the future of marketing by delivering more personalized, hyper-relevant, and visually compelling experiences that better connect consumers with brands and products.

Adobe introduces Firefly AI-powered Generative Remove to Lightroom

Adobe has added a new AI-powered feature called Generative Remove to its Lightroom photo editing software. Generative Remove uses Adobe's Firefly generative AI model to let users seamlessly remove objects from photos, even against complex backgrounds. The feature can remove stains, wrinkles, reflections, and more from images.

Adobe has been integrating Firefly's capabilities across its Creative Cloud apps to generate images, apply styles, and fill areas; Generative Remove now brings object removal to Lightroom. Adobe says it is working closely with photographers to continue improving and expanding this object-removal capability. The company also announced a new Lens Blur effect that uses AI to add realistic depth-of-field blur to photos.

Why does it matter?

The Generative Remove feature will make it easier for photographers, designers, and other creatives to edit their images, saving time and effort. Looking ahead, we can expect Adobe and other creative software companies to explore new ways to harness Gen AI to automate tedious tasks, provide intelligent assistance, and enable entirely new creative possibilities.


Knowledge Nugget: RAG RAG on the Wall

This article discusses an advanced technique called "Small-to-Big" for improving retrieval-augmented generation (RAG) systems. Traditional RAG systems embed and retrieve large chunks of text to generate responses, which can lead to suboptimal results. The "Small-to-Big" approach aims to address this by decoupling the data used for retrieval (small, semantically precise chunks) from the data used for synthesis (larger chunks with more context). The approach has two main phases.

Ingestion phase: Documents are processed to separate the small passage chunks used for embedding and retrieval from the larger context chunks used for synthesis.

Retrieval phase: The user query is transformed into multiple search queries, which are embedded and used to retrieve the most relevant small passages. A reranker then selects the top passages, and their associated larger context chunks are used to build the final context for the language model to generate a response.
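To make the two phases concrete, here is a minimal Python sketch of the idea, not the article's actual implementation. Everything in it is an assumption for illustration: the bag-of-words `embed` function stands in for a real embedding model, `expand_query` is a hypothetical placeholder for an LLM-based query rewriter, and a simple sort by similarity stands in for a real reranker.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class SmallChunk:
    text: str
    parent_id: int  # index of the larger context chunk this passage came from


def embed(text):
    """Toy embedding (assumption): bag-of-words counts instead of a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def ingest(document):
    """Ingestion: paragraphs become context chunks, sentences become small chunks."""
    parents = [p.strip() for p in document.split("\n\n") if p.strip()]
    small = []
    for pid, para in enumerate(parents):
        for sentence in re.split(r"(?<=[.!?])\s+", para):
            if sentence:
                small.append(SmallChunk(sentence, pid))
    return parents, small


def expand_query(query):
    """Hypothetical query transformation; a real system might ask an LLM for rewrites."""
    keywords = " ".join(w for w in query.split() if len(w) > 3)
    return [query, keywords]


def retrieve(query, parents, small, top_k=2):
    """Retrieval: search over small chunks, then return their larger parents."""
    queries = [embed(q) for q in expand_query(query)]
    # Score each small chunk by its best match across all search queries.
    scored = [(max(cosine(q, embed(c.text)) for q in queries), c) for c in small]
    # Stand-in for a reranker (assumption): keep the top_k highest-scoring passages.
    top = sorted(scored, key=lambda pair: pair[0], reverse=True)[:top_k]
    # Swap each passage for its parent chunk, dropping duplicates but keeping order.
    parent_ids = list(dict.fromkeys(c.parent_id for _, c in top))
    return "\n\n".join(parents[i] for i in parent_ids)
```

Calling `ingest` on a document and then `retrieve` with a question returns the full paragraphs that contain the best-matching sentences, which is the text you would hand to the language model. The key design choice is that similarity is computed only on the small chunks, while the model only ever sees the larger ones.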

Why does it matter?

The "Small-to-Big" method could help RAG systems give better answers by letting them search small pieces of information more precisely while still supplying the model with the fuller context of larger texts. It aims to improve both retrieval precision and contextual richness, potentially leading to more effective and efficient AI systems.

What Else Is Happening❗

🤖 Elon Musk’s xAI plans to make Grok multimodal

According to public developer documents, Elon Musk’s AI company, xAI, is making progress on adding multimodal inputs to the Grok chatbot. This means soon, users may be able to upload photos to Grok and receive text-based answers. This was first teased in a blog post last month from xAI, which said Grok-1.5V will offer “multimodal models in a number of domains.” (Link)

👨‍💻 Microsoft’s new Copilot AI agents to work like virtual employees

Microsoft will soon allow businesses and developers to build AI-powered Copilots that can work like virtual employees and perform tasks automatically. Instead of Copilot sitting idle waiting for queries, it will be able to monitor email inboxes and automate tasks or data entry that employees normally have to do manually. (Link)

🌍 Microsoft Edge introduces real-time AI translation and dubbing for YouTube

Microsoft Edge is set to introduce real-time translation and dubbing for videos on platforms like YouTube, LinkedIn, and Coursera. This new AI-powered feature will translate spoken content live, offering dubbing and subtitles. Currently, the feature supports translation from Spanish to English and from English to German, Hindi, Italian, Russian, and Spanish. (Link)

🛡️ WitnessAI builds guardrails for Gen AI models

WitnessAI is developing tools to make Gen AI models safer for businesses. The company’s platform monitors employee interactions and custom AI models, applying policies to reduce risks like data leaks and biased outputs. The platform also offers modules to enforce usage rules and protect sensitive information. Lastly, it encrypts and isolates data for each customer. (Link)

💻 Microsoft’s Azure AI Studio supports GPT-4o

Microsoft has announced that Azure AI Studio is now generally available and supports OpenAI’s GPT-4o model, which joins over 1,600 other models from providers including Mistral, Meta, and Nvidia. Developers can use this multimodal foundation model to incorporate text, image, and audio processing into their apps to provide generative and conversational AI experiences. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊