Microsoft's Annual Report Predicts AI's Impact on Jobs

Plus: Amazon’s new AI for virtual try-ons, and Cambridge develops braille-reading robots.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 200th edition of The AI Edge newsletter. This edition brings you Microsoft's annual report, “Future of Work 2023”, which predicts AI's impact on jobs.

And a huge shoutout to our incredible readers. We appreciate you 😊

In today’s edition:

📊 Microsoft's annual report predicts AI's impact on jobs

✨ Amazon’s new AI helps enhance virtual try-on experiences

🤖 Cambridge researchers develop braille-reading robots 2x faster than humans

💡 Knowledge Nugget: Why Custom GPTs are better than plugins for creators and users!

Let’s go!

Microsoft's annual report predicts AI's impact on jobs

Microsoft released its annual ‘Future of Work 2023’ report with a focus on AI. It highlights two major shifts in how work has been done over the past three years: the spread of remote and hybrid work technologies, and the advancement of generative AI. The report aims to provide research-based insights and guidance on reimagining work for the better.

This year's edition focuses on integrating LLMs into work and highlights the areas that most deserve attention. The report emphasizes the role of research in shaping the future of work and aims to help colleagues worldwide make progress toward better ways of working.

Why does this matter?

Microsoft's annual report provides valuable insights into how AI and remote-work technologies are transforming the workplace. It helps organizations adapt to the future of work in an ethical, human-centric way, with the goal of enhancing productivity, collaboration, well-being, and inclusion.

Amazon’s new AI helps enhance virtual try-on experiences

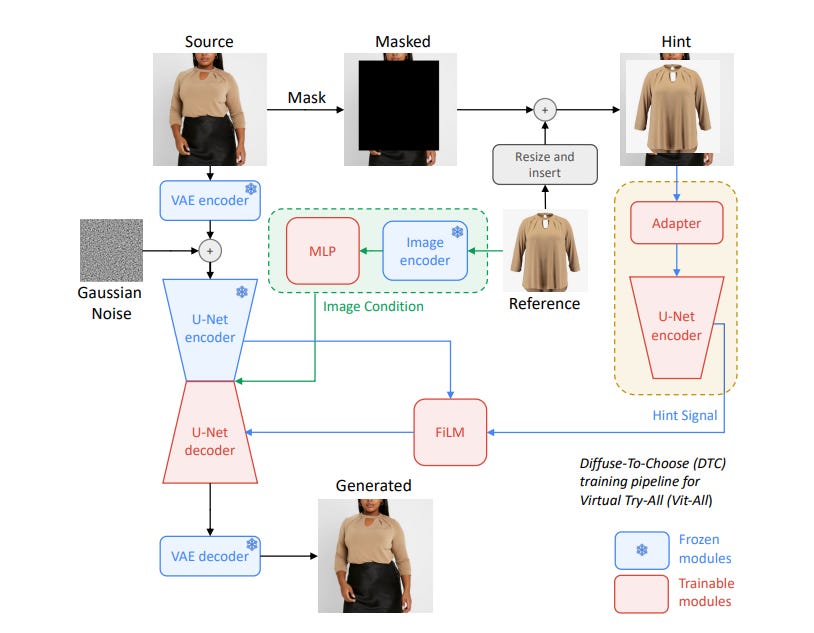

Amazon researchers have developed “Diffuse to Choose”, a new image inpainting model that combines the strengths of diffusion models and personalization-driven models. It lets customers virtually place products from online stores into photos of their homes to visualize fit and appearance in real time.

The model incorporates fine-grained features from a reference image into the main diffusion model, preserving the reference item's details. Amazon plans to release the code and a demo of Diffuse to Choose soon.

Why does this matter?

Extensive testing shows that Diffuse to Choose outperforms existing zero-shot diffusion inpainting methods and few-shot diffusion personalization algorithms. This model has the potential to greatly improve the virtual visualization of products in online shopping, making it easier for buyers to make informed decisions.

Cambridge researchers develop braille-reading robots 2x faster than humans

Cambridge researchers have developed a robotic sensor that reads braille roughly twice as fast as humans. The sensor, which incorporates AI techniques, read braille at 315 words per minute with 90% accuracy.

Although the robot was not developed as an assistive technology, the high sensitivity required to read braille makes it an ideal benchmark for developing robot hands or prosthetics with sensitivity comparable to human fingertips. The researchers used ML algorithms to train the robotic sensor to slide quickly over lines of braille text and accurately recognize the letters.

Why does this matter?

This new AI-powered braille reader demonstrates how robotics and AI can be combined to surpass human capabilities and open up new possibilities for assisting the visually impaired. By reading braille at roughly double the speed of humans, this technology could greatly accelerate access to written materials for blind individuals. It's a great step toward independence and empowerment for millions of blind and visually impaired people worldwide.

Enjoying the daily updates?

Invite your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: Why Custom GPTs are better than plugins for creators and users!

In this article, the author explains that Custom GPTs are better than plugins because they come with pre-configured actions and specific knowledge for the task at hand. Users can easily switch conversations depending on the task, which makes the experience quicker and more intuitive. Custom GPTs are open to everyone and can be listed on the "GPT Store" or shared via a direct URL, making them more widely available. Adding knowledge to GPTs is simple, as users can upload files in various formats. GPTs also have built-in capabilities like web browsing and image generation. Conversation starters and the ability to mention other GPTs within conversations are additional advantages. No coding or servers are required for knowledge-driven GPTs.

Why does this matter?

By pre-configuring GPTs with specialized knowledge and skills for different tasks, they can provide faster, more relevant assistance. The open ecosystem where anyone can build, share, and access custom GPTs also democratizes AI, making it more accessible. By putting intuitive, customizable AI in the hands of all users, this technology has the potential to greatly augment human capabilities and positively transform fields like education, healthcare and scientific research.

What Else Is Happening❗

💡 OpenAI brings GPTs into conversations: type @ and select a GPT

Paid users can now type "@", select a GPT from a list, and have it join the conversation with an understanding of the full context. This move aims to make GPTs more discoverable and useful for different use cases. OpenAI is also planning to introduce monetization for developers. (Link)

🖼️ Midjourney Niji v6 is out

Midjourney Niji v6 is specifically tuned for anime-style art. It offers advanced capabilities in interpreting complex prompts and blending text with imagery, allowing for more nuanced and expressive creations. (Link)

📱 Yelp uses AI to summarize reviews in its iOS app

Each summary highlights a location's atmosphere, best dishes, prices, and more. The feature uses LLMs to analyze user feedback and showcase what a business is known for based on first-hand reviews. However, it does not include the pros and cons mentioned by users. AI summaries will appear only when there is a sufficient number of recent reviews recommended by Yelp's software. (Link)

📰 The New York Times is creating a team to explore the use of AI in its newsroom

The team will focus on experimenting with Gen AI and other ML techniques to enhance reporting and how the Times is presented to readers. The publication plans to hire an ML engineer, a software engineer, a designer, and a couple of editors for the AI newsroom initiative. (Link)

🔄 Semron aims to replace chip transistors with 'memcapacitors'

The startup aims to enable advanced AI capabilities on consumer electronics devices at an affordable price. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From ML to ChatGPT to generative AI and LLMs, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊