Microsoft Challenges AI Processing Status Quo

Plus: LivePortrait animates images from video with precision, Groq’s LLM engine surpasses Nvidia GPU processing.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 313th edition of The AI Edge newsletter. This edition features Microsoft’s attempt to change the status quo in AI processing.

And a huge shoutout to our amazing readers. We appreciate you😊

In today’s edition:

🖼️ LivePortrait animates images from video with precision

⏱️ Microsoft’s ‘MInference’ slashes LLM processing time by 90%

🚀 Groq’s LLM engine surpasses Nvidia GPU processing

🧠 Knowledge Nugget: Adapting to AI: The Future Role of Engineers in Software Development by

Let’s go!

LivePortrait animates images from video with precision

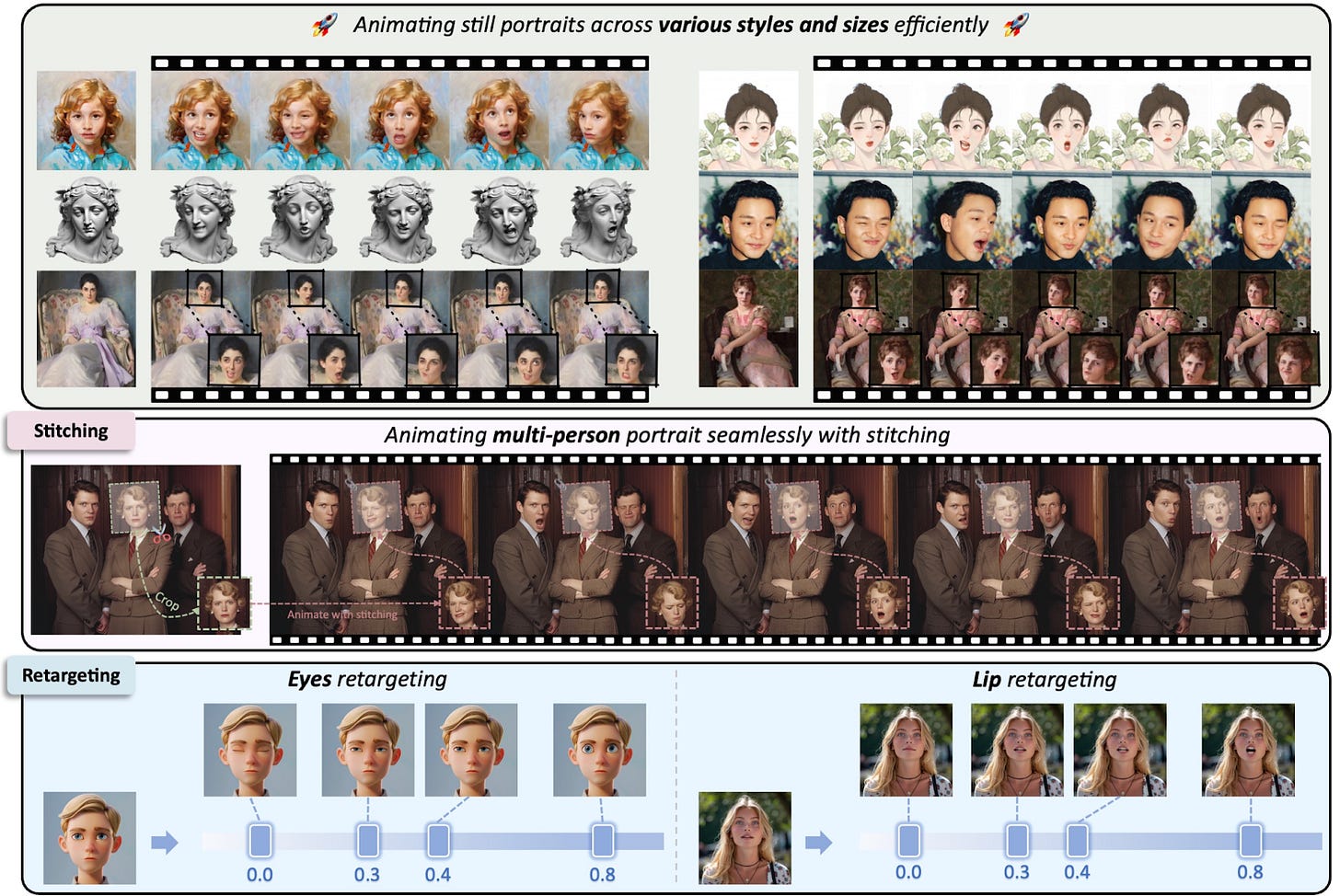

LivePortrait is a new method for animating still portraits using video. Instead of using expensive diffusion models, LivePortrait builds on an efficient "implicit keypoint" approach. This allows it to generate high-quality animations quickly and with precise control.

The key innovations in LivePortrait are:

1) Scaling up the training data to 69 million frames, using a mix of video and images, to improve generalization.

2) Designing new motion transformation and optimization techniques to get better facial expressions and details like eye movements.

3) Adding new "stitching" and "retargeting" modules that allow the user to precisely control aspects of the animation, like the eyes and lips.

4) Together, these changes let the method animate portraits across diverse realistic and artistic styles while maintaining high computational efficiency.

5) LivePortrait can generate a 512x512 portrait animation frame in just 12.8 ms on an RTX 4090 GPU.
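The implicit-keypoint idea can be sketched in a few lines. This sketch assumes the face-vid2vid-style formulation that LivePortrait builds on, which is not spelled out above. Under that formulation, each driving keypoint is a canonical keypoint x_c that is rotated by a head-pose matrix R and shifted by an expression offset δ. The result is then scaled by s and translated by t: x_d = s · (x_c R + δ) + t. The function names are hypothetical. The final line converts the quoted 12.8 ms per frame into frames per second.

```python
import math


def rotation_z(theta: float) -> list[list[float]]:
    """3x3 rotation about the z-axis (a simplified stand-in for full head pose)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def transform_keypoints(canonical, rotation, expression, scale, translation):
    """Apply x_d = scale * (x_c @ R + delta) + t to every keypoint.

    canonical, expression: lists of [x, y, z], one expression offset per keypoint
    rotation: 3x3 matrix; scale: float; translation: [tx, ty, tz]
    """
    driven = []
    for kp, delta in zip(canonical, expression):
        # Row vector times matrix: (x_c R)_j = sum_i x_c[i] * R[i][j]
        rotated = [sum(kp[i] * rotation[i][j] for i in range(3)) for j in range(3)]
        driven.append([scale * (rotated[j] + delta[j]) + translation[j] for j in range(3)])
    return driven


def frames_per_second(ms_per_frame: float) -> float:
    """Convert per-frame latency in milliseconds into throughput in frames per second."""
    return 1000.0 / ms_per_frame


canonical = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
no_expression = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
driven = transform_keypoints(canonical, rotation_z(math.pi / 2), no_expression, 2.0, [0.0, 0.0, 1.0])
print(frames_per_second(12.8))  # about 78 frames per second
```

Representing motion as a handful of 3D keypoints, instead of denoising whole images as diffusion models do, is what keeps this style of approach cheap to run.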

Why does it matter?

LivePortrait's advances in generalization, quality, and controllability could open up new possibilities, such as personalized avatar animation, virtual try-on, and augmented reality experiences on a range of devices.

Microsoft’s ‘MInference’ slashes LLM processing time by 90%

Microsoft has unveiled a new method called MInference that can reduce LLM processing time by up to 90% for inputs of one million tokens (equivalent to about 700 pages of text) while maintaining accuracy. MInference is designed to accelerate the "pre-filling" stage of LLM processing, which typically becomes a bottleneck when dealing with long text inputs.
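Two pieces of arithmetic help explain these figures. First, a 90% cut in processing time is the same as a 10x speedup, because 1 − 1/10 = 0.9. Second, pre-filling is expensive because causal attention over n tokens scores roughly n(n+1)/2 query-key pairs, and that count grows quadratically with input length. The paragraph above does not describe MInference's exact sparse patterns. This sketch therefore assumes a simple "vertical-slash" mask, in which each query sees a few always-attended key columns plus a few diagonals of recent tokens. The mask is only meant to show how skipping most pairs shrinks the work. All function names are hypothetical.

```python
def dense_causal_pairs(n: int) -> int:
    """Query-key pairs scored by full causal attention over n tokens."""
    return n * (n + 1) // 2


def vertical_slash_pairs(n: int, verticals: set[int], slashes: set[int]) -> int:
    """Count query-key pairs kept by a sparse 'vertical-slash' style mask.

    Query i attends to key j (with j <= i) only if j is a 'vertical' column
    (e.g. the first few tokens) or i - j is a 'slash' offset (e.g. recent tokens).
    """
    kept = 0
    for i in range(n):
        keys = {j for j in verticals if j <= i}
        keys |= {i - d for d in slashes if i - d >= 0}
        kept += len(keys)
    return kept


def time_reduction(speedup: float) -> float:
    """Fraction of processing time saved for a given speedup factor."""
    return 1.0 - 1.0 / speedup


n = 8_192
dense = dense_causal_pairs(n)
sparse = vertical_slash_pairs(n, verticals=set(range(64)), slashes=set(range(256)))
print(f"sparse mask keeps {sparse / dense:.1%} of attention pairs")
print(f"a 10x speedup saves {time_reduction(10):.0%} of the time")  # 90%
```

The mask sizes here (64 columns, 256 diagonals) are arbitrary illustrative choices. A real system would have to choose its patterns so that accuracy is preserved.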

Microsoft has released an interactive demo of MInference on the Hugging Face AI platform, allowing developers and researchers to test the technology directly in their web browsers. This hands-on approach aims to get the broader AI community involved in validating and refining the technology.

Why does it matter?

By making lengthy text processing faster and more efficient, MInference could enable wider adoption of LLMs across various domains. It could also reduce computational costs and energy usage, putting Microsoft at the forefront of efforts to improve LLM efficiency.

Groq’s LLM engine surpasses Nvidia GPU processing

Groq, a company that promises faster and more efficient AI processing, has unveiled a lightning-fast LLM engine. The engine can handle queries at over 1,250 tokens per second, much faster than GPU chips from companies like Nvidia typically manage. This speed lets Groq's engine respond to user queries and tasks almost instantly.
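To put 1,250 tokens per second in perspective, the sketch below converts throughput into per-token latency and total generation time. The 500-token response length and the 100-tokens-per-second comparison figure are illustrative assumptions. They are not benchmarks from Groq or Nvidia.

```python
def ms_per_token(tokens_per_second: float) -> float:
    """Average time to emit one token, in milliseconds."""
    return 1000.0 / tokens_per_second


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a steady rate (ignores time to first token)."""
    return num_tokens / tokens_per_second


GROQ_TPS = 1_250        # throughput figure quoted above
BASELINE_TPS = 100      # hypothetical comparison point, not a measured GPU number
RESPONSE_TOKENS = 500   # hypothetical response length

print(ms_per_token(GROQ_TPS))                             # 0.8
print(generation_seconds(RESPONSE_TOKENS, GROQ_TPS))      # 0.4
print(generation_seconds(RESPONSE_TOKENS, BASELINE_TPS))  # 5.0
```

At the quoted rate, a 500-token answer streams in under half a second. This is the kind of latency that makes responses feel instant.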

Groq's LLM engine has gained massive adoption, with its developer base rocketing past 280,000 in just four months. The company offers the engine for free, and developers can easily switch apps built on OpenAI's models over to Groq's more efficient platform. Groq claims its technology uses about a third of the power of a GPU, making it a more energy-efficient option.
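Switching an app from OpenAI to Groq mostly means sending the same request format to a different endpoint. The sketch below uses only the Python standard library to build an OpenAI-style chat-completions request without sending it. It assumes Groq exposes an OpenAI-compatible base URL at `https://api.groq.com/openai/v1`. The helper function and the model names are hypothetical placeholders, so check each provider's documentation for current values.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"
GROQ_BASE_URL = "https://api.groq.com/openai/v1"  # assumed OpenAI-compatible endpoint


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions POST request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


# Same payload shape for both providers: only the base URL, key, and model change.
# Model names below are placeholders, not recommendations.
openai_req = build_chat_request(OPENAI_BASE_URL, "YOUR_OPENAI_API_KEY", "gpt-4o", "Summarize this ticket.")
groq_req = build_chat_request(GROQ_BASE_URL, "YOUR_GROQ_API_KEY", "llama3-8b-8192", "Summarize this ticket.")
print(groq_req.full_url)  # https://api.groq.com/openai/v1/chat/completions
# Sending it would be urllib.request.urlopen(groq_req) with a real API key.
```

Keeping the base URL in one constant is what makes this kind of provider swap a configuration change rather than a rewrite.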

Why does it matter?

Groq’s lightning-fast LLM engine allows for near-instantaneous responses, enabling new use cases like on-the-fly generation and editing. As large companies look to integrate generative AI into their enterprise apps, this could transform how AI models are deployed and used.

Enjoying the daily updates?

Refer your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: Adapting to AI: The Future Role of Engineers in Software Development

In this article,

discusses the rapid advances in AI and the impact of LLMs on software engineering. LLMs can generate nearly 50% of the new code in Google's codebase, and tools like GitHub Copilot have become vital for code development.

The author's point is that AI will increasingly handle routine coding tasks, freeing engineers to focus on higher-level work like planning, risk assessment, and design. Engineers should delegate tasks to the AI and step in only when needed. When the AI falls short, the engineer's job shifts to providing feedback so the AI can fix the problem itself.
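The delegate-then-give-feedback workflow described above can be sketched as a small loop. This is an illustration, not the author's method. Here, `generate` stands in for any AI coding assistant and `check` stands in for a test suite or reviewer; both are hypothetical callables. The loop feeds failure messages back to the generator and hands control back to the engineer only after a fixed number of rounds.

```python
from typing import Any, Callable, Optional

GenerateFn = Callable[[str, list[str]], Any]  # (task, feedback) -> candidate solution
CheckFn = Callable[[Any], list[str]]          # candidate -> failure messages ([] means pass)


def delegate_with_feedback(task: str, generate: GenerateFn, check: CheckFn,
                           max_rounds: int = 3) -> tuple[Optional[Any], list[str]]:
    """Delegate a task to an AI, feeding check failures back until it passes.

    Returns (accepted_candidate, last_feedback). A None candidate means the
    loop gave up and an engineer needs to step in.
    """
    feedback: list[str] = []
    for _ in range(max_rounds):
        candidate = generate(task, feedback)
        feedback = check(candidate)
        if not feedback:
            return candidate, []
    return None, feedback


def fake_generate(task: str, feedback: list[str]):
    # Pretend assistant: the first attempt is buggy; it "fixes" the bug after feedback.
    if feedback:
        return lambda a, b: a + b
    return lambda a, b: a - b


def fake_check(candidate) -> list[str]:
    # Pretend test suite: a single assertion reported as a feedback message.
    return [] if candidate(2, 3) == 5 else ["add(2, 3) should return 5"]


result, notes = delegate_with_feedback("implement add", fake_generate, fake_check)
print(result(2, 3))  # 5
```

The `max_rounds` cap encodes the "only step in when needed" idea: the engineer is pulled in only when feedback alone does not converge.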

Why does it matter?

This shift necessitates a change in the skill set of all engineers, requiring them to become proficient at delegating tasks to AI. The ability to adapt, guide AI, and learn from feedback will be vital for future software engineers.

What Else Is Happening❗

🛡️ Japan’s Defense Ministry introduces basic policy on using AI

This comes as the Japanese Self-Defense Forces grapple with challenges such as manpower shortages and the need to harness new technologies. The ministry believes AI has the potential to overcome these challenges in the face of Japan's declining population. (Link)

🩺 Thrive AI Health democratizes access to expert-level health coaching

Thrive AI Health, a new company funded by OpenAI and Thrive Global, uses AI to provide personalized health coaching. The AI assistant can use an individual's data to make recommendations on sleep, diet, exercise, stress management, and social connections. (Link)

🖥️ Qualcomm and Microsoft rely on AI wave to revive the PC market

Qualcomm and Microsoft are embarking on a marketing blitz to promote a new generation of "AI PCs," aiming to revive the declining PC market. The push covers only a small share of PCs sold this year, as major software vendors haven’t yet embraced the AI PC trend. (Link)

🤖 Poe’s Previews let you see and interact with web apps directly within chats

This feature works especially well with advanced AI models like Claude 3.5 Sonnet, GPT-4o, and Gemini 1.5 Pro. Previews enable users to create custom interactive experiences like games, animations, and data visualizations without needing programming knowledge. (Link)

🎥 Real-time AI video generation less than a year away: Luma Labs chief scientist

Luma’s recently released video model, Dream Machine, was trained on an enormous amount of video data, equivalent to hundreds of trillions of words. According to Luma's chief scientist, Jiaming Song, this allows Dream Machine to reason about the world in new ways. He predicts that realistic AI-generated video will be possible in real time within a year. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊