Meta’s Largest Llama Drops Next Week

Plus: Mistral AI adds 2 new models to its growing family of LLMs, and FlashAttention-3 enhances computation power of NVIDIA GPUs.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 319th edition of The AI Edge newsletter. This edition features Meta’s release of Llama 3 400B and more.

And a huge shoutout to our amazing readers. We appreciate you😊

In today’s edition:

🤖 Meta’s Llama 3 400B drops next week

🚀 Mistral AI adds 2 new models to its growing family of LLMs

⚡ FlashAttention-3 enhances computation power of NVIDIA GPUs

📚 Knowledge Nugget: Recursion in AI is scary. But let’s talk solutions.

Let’s go!

Meta’s Llama 3 400B drops next week

Meta plans to release the largest version of its open-source Llama 3 model on July 23, 2024. It boasts over 400 billion parameters and multimodal capabilities.

It is particularly exciting because it reportedly performs on par with OpenAI's GPT-4o model on the MMLU benchmark despite reportedly using fewer than half as many parameters. Another compelling aspect is its open license for research and commercial use.

Why does it matter?

With its open availability and impressive performance, the model could democratize access to cutting-edge AI capabilities, allowing researchers and developers to leverage it without relying on expensive proprietary APIs.

Mistral AI adds 2 new models to its growing family of LLMs

Mistral launched Mathstral 7B, an AI model designed specifically for math-related reasoning and scientific discovery. It has a 32k context window and is published under the Apache 2.0 license.

(Source)

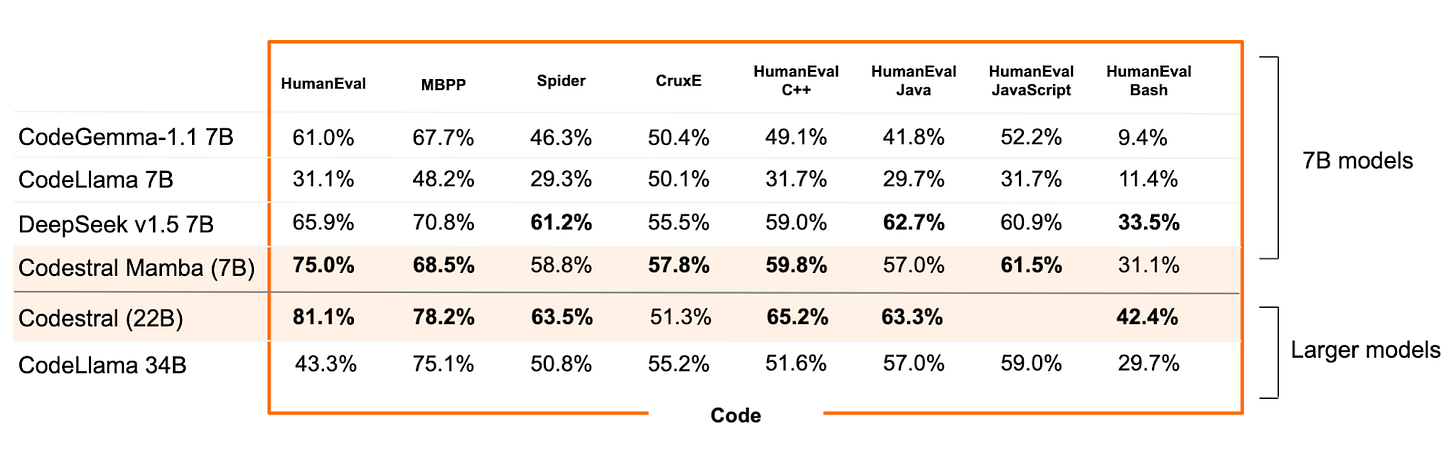

Mistral also launched Codestral Mamba, a Mamba2 language model specialized in code generation, available under an Apache 2.0 license. Mistral AI expects it to be a great local code assistant after testing it on in-context retrieval capabilities up to 256k tokens.

(Source)

Why does it matter?

Mistral is already known for its powerful open-source, general-purpose AI models. These new entries show the performance/speed tradeoffs that become possible when a model is built for a specific purpose.

FlashAttention-3 enhances computation power of NVIDIA GPUs

Researchers from Colfax Research, Meta, Nvidia, Georgia Tech, Princeton University, and Together AI have introduced FlashAttention-3, a new technique that significantly speeds up attention computation on Nvidia Hopper GPUs (H100 and H800).

Attention is a core component of the transformer architecture used in LLMs. But as LLMs grow larger and handle longer input sequences, the computational cost of attention becomes a bottleneck.
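To make the bottleneck concrete, here is a minimal sketch of naive single-head scaled dot-product attention in plain Python. It is an illustration only, not how FlashAttention-3 or any production library implements attention, and the function names `attention` and `score_matrix_entries` are hypothetical. The point to notice is that every query is scored against every key, so the number of scores grows with the square of the sequence length.

```python
import math

def attention(queries, keys, values):
    """Naive single-head scaled dot-product attention on lists of vectors."""
    d = len(queries[0])
    outputs = []
    for q in queries:
        # One score per key: nesting this inside the loop over queries
        # is what makes the total work grow as seq_len * seq_len.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        # Numerically stable softmax over the scores.
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Output is the softmax-weighted sum of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs

def score_matrix_entries(seq_len):
    """Number of query-key scores computed for one attention head."""
    return seq_len * seq_len

# Doubling the sequence length quadruples the number of scores.
print(score_matrix_entries(1024), score_matrix_entries(2048))
```

Under this simple model, going from a 1,024-token input to a 2,048-token input means four times as many scores to compute, which is why long contexts are expensive.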

FlashAttention-3 takes advantage of new features in Nvidia Hopper GPUs to maximize performance. It achieves up to 75% utilization of the H100 GPU’s theoretical maximum throughput.
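As a rough sanity check on the 75% figure, the arithmetic can be sketched as follows. Both throughput numbers are assumptions that do not appear in this newsletter: roughly 989 TFLOPS as the H100 SXM's dense FP16 tensor-core peak, and roughly 740 TFLOPS as the best FP16 throughput reported for FlashAttention-3. The helper name `utilization` is hypothetical.

```python
# Assumed figures, not stated in this newsletter.
H100_FP16_PEAK_TFLOPS = 989.0  # approximate dense FP16 peak of an H100 SXM
ACHIEVED_TFLOPS = 740.0        # approximate best FP16 throughput reported for FlashAttention-3

def utilization(achieved_tflops, peak_tflops):
    """Fraction of the hardware's theoretical peak that is actually achieved."""
    return achieved_tflops / peak_tflops

print(f"{utilization(ACHIEVED_TFLOPS, H100_FP16_PEAK_TFLOPS):.0%}")
```

If those assumed figures hold, the ratio works out to about three quarters of the theoretical peak.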

Why does it matter?

The faster attention computation offered by FlashAttention-3 has several implications for LLM development and applications. It can: 1) significantly reduce the time to train LLMs, enabling experiments with larger models and datasets; 2) extend the context window of LLMs, unlocking new applications; and 3) slash the cost of running models in production.


Knowledge Nugget: Recursion in AI is scary. But let’s talk solutions.

In this insightful article, the author discusses the concept of exponential growth in AI capabilities, comparing it to Moore's Law in computing. He raises concerns about two main issues: the potential for incredibly rapid AI advancement leading to unpredictable outcomes, and the problem of "wireheading," where an AI might learn to manipulate its own reward signals. The author proposes a baseline solution to address these concerns and presents these ideas as a starting point for discussion and further refinement by the AI research community.

Why does it matter?

The article contributes to the ongoing debate about AI safety and alignment, offering potential approaches to mitigate risks associated with rapidly advancing AI technology.

What Else Is Happening❗

📊Microsoft unveiled an AI model to understand and work with spreadsheets

Microsoft researchers introduced SpreadsheetLLM, a new approach for encoding spreadsheet contents into a format that LLMs can use. It is designed to let LLMs apply their understanding and reasoning capabilities to spreadsheets. (Link)

📱Anthropic releases Claude app for Android, bringing its AI chatbot to more users

The Claude Android app will work just like the iOS version released in May. It includes free access to Anthropic’s best AI model, Claude 3.5 Sonnet, and upgraded plans through Pro and Team subscriptions. (Link)

🚀Vectara announces Mockingbird, a purpose-built LLM for RAG

Mockingbird has been optimized specifically for RAG (Retrieval-Augmented Generation) workflows. Vectara claims it delivers leading RAG output quality and hallucination mitigation, and positions it for enterprise RAG and autonomous agent use cases. (Link)

🔍Apple, Nvidia, Anthropic used thousands of YouTube videos to train AI

A new investigation claims that tech companies used subtitles from YouTube channels to train their AI, even though YouTube prohibits harvesting its platform content without permission. The dataset, called YouTube Subtitles and included in the larger compilation known as The Pile, contains subtitles from 173,536 YouTube videos, including content from Harvard, NPR, MrBeast, and 'The Late Show With Stephen Colbert.' (Link)

🕵️‍♂️Microsoft faces UK antitrust investigation over hiring of Inflection AI staff

UK regulators are formally investigating Microsoft’s hiring of Inflection AI staff. The UK’s Competition and Markets Authority (CMA) has opened a phase 1 merger investigation into the partnership. Progression to phase 2 could hinder Microsoft’s AI ambitions. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊