Meta's AI for Real-Time Brain Image Decoding

Plus: Fuyu-8B, a superfast multimodal model for AI agents, and Set-of-Mark to boost GPT-4V's capabilities.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 129th edition of The AI Edge newsletter. This edition brings you Meta’s new AI for real-time decoding of images from brain activity.

And a huge shoutout to our amazing readers! We appreciate you😊

In today’s edition:

🧠 Meta’s new AI for real-time decoding of images from brain activity

🚀 Fuyu-8B: A simple, superfast multimodal model for AI agents

🔥 GPT-4V got even better with Set-of-Mark (SoM)

📚 Knowledge Nugget: AI-enabled SaaS vs Moatless AI

Let’s go!

Meta’s new AI for real-time decoding of images from brain activity

New Meta research has showcased an AI system that can be deployed in real time to reconstruct, from brain activity, the images perceived and processed by the brain at each instant.

Using magnetoencephalography (MEG), this AI system can decode the unfolding of visual representations in the brain with an unprecedented temporal resolution.

The results:

While the generated images remain imperfect, overall results show that MEG can be used to decipher, with millisecond precision, the rise of complex representations generated in the brain.

Why does this matter?

Only a few days ago, researchers from Meta discovered how to turn brain waves into speech using non-invasive methods like EEG and MEG. With every research initiative, Meta seems to be getting closer to developing AI systems designed to learn and reason like humans.

Fuyu-8B: A simple, superfast multimodal model for AI agents

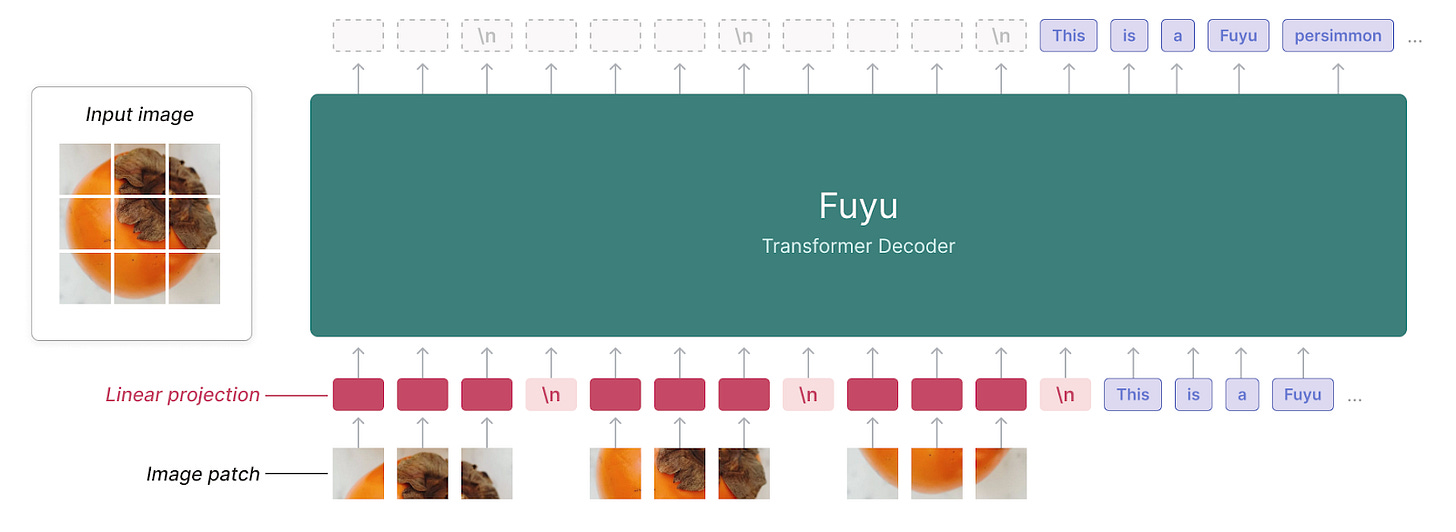

Adept is releasing Fuyu-8B, a small version of the multimodal model that powers its product. The model is available on Hugging Face. What sets Fuyu-8B apart is:

Its extremely simple architecture doesn’t have an image encoder. This allows easy interleaving of text and images, handling arbitrary image resolutions, and dramatically simplifies both training and inference.

It is super fast for copilot use cases where latency really matters. You can get responses for large images in less than 100 milliseconds.

Despite being optimized for Adept’s use case, it performs well at standard image understanding benchmarks such as visual question-answering and natural-image-captioning.
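To make the "no image encoder" idea concrete, here is a minimal sketch, not Adept's actual code. It assumes the image is cut into fixed-size patches that are flattened and placed directly in the same sequence as the text tokens, with a marker after each row of patches so that any image size works. The function names, the `<image-newline>` marker string, and the zero-padding at the edges are all illustrative assumptions.

```python
from typing import List, Sequence

Image = List[List[float]]  # grayscale image as rows of pixel values


def image_to_patch_rows(image: Image, patch: int) -> List[List[List[float]]]:
    """Split an image into rows of flattened patch vectors.

    Edge patches are zero-padded (an assumption of this sketch),
    so any height and width can be handled.
    """
    height, width = len(image), len(image[0])
    rows = []
    for top in range(0, height, patch):
        row = []
        for left in range(0, width, patch):
            vec = []
            for y in range(top, top + patch):
                for x in range(left, left + patch):
                    inside = y < height and x < width
                    vec.append(image[y][x] if inside else 0.0)
            row.append(vec)
        rows.append(row)
    return rows


def interleave(text_tokens: Sequence[str],
               patch_rows: List[List[List[float]]],
               newline: str = "<image-newline>") -> list:
    """Build one flat sequence: patches row by row, a marker after each row, then text."""
    seq: list = []
    for row in patch_rows:
        seq.extend(row)
        seq.append(newline)  # hypothetical marker telling the model a patch row ended
    seq.extend(text_tokens)
    return seq


if __name__ == "__main__":
    img = [[1, 2, 3, 4, 5], [6, 7, 8, 9, 10], [11, 12, 13, 14, 15]]
    rows = image_to_patch_rows(img, patch=2)
    print(len(rows), "patch rows,", len(rows[0]), "patches per row")
    print(len(interleave(["What", "is", "this", "?"], rows)), "sequence items")
```

In a real model each patch vector would still need to be mapped to the model's embedding size before entering the transformer; that step is left out here.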

Why does this matter?

Fuyu’s simple architecture makes it easier to understand, scale, and deploy than other multimodal models. Since it is open-source and fast, it is ideal for building useful AI agents that require fast foundation models that can see the visual world.

GPT-4V got even better with Set-of-Mark (SoM)

New research has introduced Set-of-Mark (SoM), a new visual prompting method, to unleash extraordinary visual grounding abilities in large multimodal models (LMMs), such as GPT-4V.

The researchers employed off-the-shelf interactive segmentation models, such as SAM, to partition an image into regions at different levels of granularity and overlay these regions with a set of marks, e.g., alphanumerics, masks, boxes.
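Here is a rough sketch of the marking step, under some loud assumptions. It takes a region mask that a segmentation model is assumed to have already produced (0 for background, positive integers for regions) and picks a spot for each numeric mark. Placing the mark at the region pixel nearest the centroid is a simplification chosen for this example, not the placement method from the research, and `place_marks` and `build_prompt` are hypothetical helper names.

```python
from typing import Dict, List, Tuple

Point = Tuple[int, int]  # (row, col)


def place_marks(label_mask: List[List[int]]) -> Dict[int, Point]:
    """Map each region ID (> 0) to the pixel where its numeric mark would be drawn.

    The mark goes on the region pixel closest to the region's centroid,
    so it always lands inside the region it labels.
    """
    pixels: Dict[int, List[Point]] = {}
    for r, row in enumerate(label_mask):
        for c, region in enumerate(row):
            if region > 0:
                pixels.setdefault(region, []).append((r, c))

    marks: Dict[int, Point] = {}
    for region, pts in pixels.items():
        center_r = sum(p[0] for p in pts) / len(pts)
        center_c = sum(p[1] for p in pts) / len(pts)
        marks[region] = min(
            pts, key=lambda p: (p[0] - center_r) ** 2 + (p[1] - center_c) ** 2
        )
    return marks


def build_prompt(marks: Dict[int, Point], question: str) -> str:
    """Compose a text prompt that refers to the marks overlaid on the image."""
    ids = ", ".join(str(i) for i in sorted(marks))
    return (
        f"The image is annotated with numbered marks: {ids}. "
        f"{question} Answer with mark numbers."
    )


if __name__ == "__main__":
    mask = [
        [1, 1, 1, 0],
        [1, 1, 1, 0],
        [1, 1, 1, 2],
    ]
    marks = place_marks(mask)
    print(marks)
    print(build_prompt(marks, "Which region is the cat?"))
```

Drawing the marks onto the image and sending it to the model are left out; the point is only that each region gets a visible ID the model can refer to in its answer.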

The experiments show that SoM significantly improves GPT-4V’s performance on complex visual tasks that require grounding.

Why does this matter?

In the past, a number of works attempted to enhance the abilities of LLMs by refining the way they are prompted or instructed. Prompting LMMs, by contrast, has rarely been explored in academia so far. SoM represents a pioneering move in the domain and can help pave the road towards more capable LMMs.

Knowledge Nugget: AI-enabled SaaS vs Moatless AI

Several large enterprise SaaS companies have announced and/or launched their generative AI products: Slack, Salesforce, Dropbox, Microsoft, and Google, to name a few. This is a direct threat to generative AI startups that are building useful productivity applications for enterprise customers but have limited sustainable competitive advantage (i.e., they are moatless).

So how can startups build moats in this environment?

The author answers this with five non-exhaustive approaches to building a moat around enterprise AI products. He also recaps in detail the AI value chain and recent AI features from enterprise SaaS companies.

Why does this matter?

The article highlights strategies for startups to succeed in the competitive landscape of generative AI. It also outlines the AI value chain, emphasizing that the primary value from AI will be generated at the application layer.

What Else Is Happening❗

🌐IBM expands relationship with AWS to deepen generative AI capabilities and expertise.

The two organizations plan to deliver joint solutions and services upgraded with generative AI capabilities designed to help clients across critical use cases. IBM Consulting also aims to deepen and expand its generative AI expertise on AWS by training 10,000 consultants. (Link)

🤖Nvidia brings generative AI compatibility to robotics platforms.

The company is integrating generative AI into its systems, including the Nvidia Isaac ROS 2.0 and Nvidia Isaac Sim 2023 platforms, with the goal of accelerating its adoption among roboticists. Some 1.2 million developers have also interfaced with the Nvidia AI and Jetson platforms, including AWS, Cisco and John Deere. (Link)

🤝PwC partners with OpenAI for AI-powered consultation on complex problems.

The accounting firm will use AI to consult on complex matters in tax, legal and human resources, such as carrying out due diligence on companies, identifying compliance issues and even recommending whether to authorize business deals. (Link)

🚀Infosys, Google Cloud expand alliance to help firms transform into AI-first organisations.

It will help enterprises build AI-powered experiences leveraging Infosys Topaz offerings and Google Cloud’s generative AI solutions. Infosys will also create the new global Generative AI Labs to develop industry-specific AI solutions and platforms. (Link)

🎶Universal Music Group and BandLab announce first-of-its-kind strategic AI collaboration.

The alliance will advance the companies’ shared commitment to ethical use of AI and the protection of artist and songwriter rights. They will also pioneer market-led solutions with pro-creator standards to ensure new technologies serve the creator community effectively and ethically. (Link)

That's all for now!

Subscribe to The AI Edge and join the impressive list of readers that includes professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other reputable organizations.

Thanks for reading, and see you tomorrow. 😊