Meta's All-in-One Generative Speech AI

Plus: OpenLLaMA, a permissively licensed open reproduction of Meta's LLaMA

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 44th edition of The AI Edge newsletter. This edition brings you Meta’s Voicebox, the first generative AI model for speech to generalize across tasks with state-of-the-art performance.

And a huge shoutout to our amazing readers. We appreciate you!😊

In today’s edition:

🤖 Meta’s all-in-one generative speech model

🚀 OpenLLaMA: An open reproduction of Meta’s LLaMA

📚 Knowledge Nugget: The secret sauce behind 100K context window in LLMs

Let’s go!

Meta’s all-in-one generative speech AI model

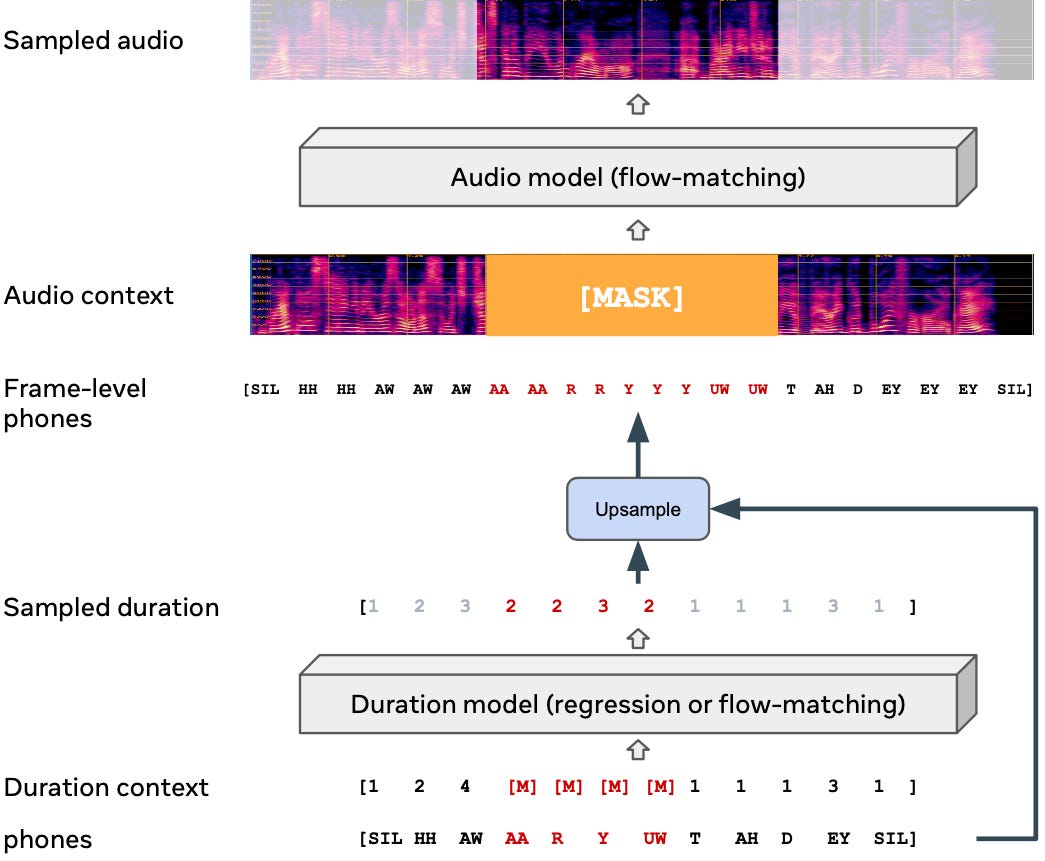

Meta has introduced Voicebox, which it describes as the first generative AI model that can perform speech-generation tasks it was not specifically trained for, with state-of-the-art (SoTA) performance. Its capabilities include:

Text-to-speech synthesis in 6 languages

Noise removal

Content editing

Cross-lingual style transfer

Diverse sample generation

A key limitation of existing speech synthesizers is that each one can only be trained on data prepared specifically for its task. Voicebox is built on Flow Matching, Meta’s latest advance in non-autoregressive generative models, which lets it learn the highly non-deterministic mapping between text and speech.
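To make "Flow Matching" a little more concrete, here is a minimal NumPy sketch of the conditional flow-matching objective with the straight-line (optimal-transport) path from the original Flow Matching paper. It is not Voicebox's code: Voicebox also conditions its network on text and surrounding audio, which this toy omits, and the names `ot_path`, `cfm_loss`, `euler_sample` and the value of `SIGMA_MIN` are illustrative assumptions. Training regresses a network onto a velocity target; generation then integrates that velocity field from noise (t = 0) to data (t = 1) in a fixed number of steps, instead of producing output one token at a time as an autoregressive model would.

```python
import numpy as np

# Assumed small noise floor at t = 1; not taken from the Voicebox release.
SIGMA_MIN = 1e-4


def ot_path(x0, x1, t, sigma_min=SIGMA_MIN):
    """Point at time t on the straight path from noise x0 (t=0) toward data x1 (t=1)."""
    return (1.0 - (1.0 - sigma_min) * t) * x0 + t * x1


def target_velocity(x0, x1, sigma_min=SIGMA_MIN):
    """Time derivative of ot_path; constant in t for this straight path."""
    return x1 - (1.0 - sigma_min) * x0


def cfm_loss(model, x1, rng, sigma_min=SIGMA_MIN):
    """Conditional flow-matching loss for a data batch x1 of shape (batch, dim)."""
    x0 = rng.standard_normal(x1.shape)          # Gaussian noise sample
    t = rng.uniform(size=(x1.shape[0], 1))      # one random time per example
    xt = ot_path(x0, x1, t, sigma_min)
    pred = model(xt, t)                         # hypothetical vector-field network
    return float(np.mean((pred - target_velocity(x0, x1, sigma_min)) ** 2))


def euler_sample(field, x0, steps=32):
    """Generate by integrating dx/dt = field(x, t) from t=0 to t=1 with Euler steps."""
    x, h = x0.copy(), 1.0 / steps
    for k in range(steps):
        x = x + h * field(x, k * h)
    return x


# Toy check: with the exact conditional field for one known target x1, Euler
# follows the straight path (up to rounding) and ends at x1 + SIGMA_MIN * x0.
rng = np.random.default_rng(0)
x1 = np.array([[1.0, -2.0, 0.5]])
x0 = rng.standard_normal(x1.shape)


def exact_field(x, t):
    return (x1 - (1.0 - SIGMA_MIN) * x) / (1.0 - (1.0 - SIGMA_MIN) * t)


sample = euler_sample(exact_field, x0)
zero_model_loss = cfm_loss(lambda xt, t: np.zeros_like(xt), x1, rng)
```

In a real model, `exact_field` is replaced by a trained network, since the target `x1` is exactly what generation is trying to produce.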

Given an audio sample just two seconds long, Voicebox can match the sample’s style and use it for text-to-speech generation.

Why does this matter?

As the first versatile speech model to generalize successfully across tasks, Voicebox could usher in a new era of generative AI for speech. Scalable generative models that generalize across tasks have already sparked excitement about applications in text, image, and video generation; Voicebox could have a similar impact on speech and encourage further research.

OpenLLaMA: An open reproduction of Meta’s LLaMA

OpenLLaMA is a permissively licensed, open-source reproduction of Meta AI's LLaMA, trained on the RedPajama dataset. The release includes three models (3B, 7B, and 13B), all trained on 1T tokens, along with PyTorch and JAX weights for the pre-trained models, evaluation results, and a comparison with the original LLaMA models.

Why does this matter?

OpenLLaMA aims to provide Apache-licensed “drop-in” replacements for Meta’s LLaMA models. Given how many popular models are built on LLaMA-13B, the 13B release should be especially useful. Because the weights are Apache-licensed, developers can use them in commercial projects, which the original LLaMA license did not allow, making capable language models more accessible to the open-source community.

Knowledge Nugget: The secret sauce behind 100K context window in LLMs

This thought-provoking article covers several techniques for speeding up LLM training and inference while making effective use of a context window of up to 100,000 input tokens: ALiBi positional embeddings, sparse attention, FlashAttention, multi-query attention, conditional computation, and powerful 80GB A100 GPUs.
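Of these techniques, ALiBi is the easiest to show in a few lines. Instead of adding position embeddings to the inputs, ALiBi (from the paper "Train Short, Test Long") adds a fixed penalty to each attention score that grows linearly with the distance between query and key, using a different slope per head; since no position information is learned, a model can be run on sequences longer than those it was trained on. The NumPy sketch below assumes causal (decoder-only) attention and a power-of-two number of heads, the case covered by the paper's basic slope formula. The function names are illustrative, not from any library.

```python
import numpy as np


def alibi_slopes(num_heads):
    """Per-head slopes 2^(-8/n), 2^(-16/n), ..., 2^(-8); assumes num_heads is a power of two."""
    start = 2.0 ** (-8.0 / num_heads)
    return start ** np.arange(1, num_heads + 1)


def alibi_bias(num_heads, seq_len):
    """Bias of shape (heads, queries, keys): -slope * (i - j) for past keys, -inf for future keys."""
    slopes = alibi_slopes(num_heads)
    pos = np.arange(seq_len)
    distance = pos[None, :] - pos[:, None]            # j - i; zero on the diagonal
    bias = slopes[:, None, None] * distance[None, :, :]
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    return np.where(future[None, :, :], -np.inf, bias)


def attention_with_alibi(q, k, v):
    """Causal scaled dot-product attention with ALiBi; q, k, v have shape (heads, seq, head_dim)."""
    heads, seq_len, head_dim = q.shape
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim) + alibi_bias(heads, seq_len)
    scores = scores - scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((8, 5, 16)) for _ in range(3))
out = attention_with_alibi(q, k, v)   # shape (8, 5, 16)
```

The bias depends only on relative distance, so the same function works unchanged for any sequence length at inference time.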

Moreover, the article discusses:

Why context length matters and why it can be a game changer

The main limitations of the original Transformer architecture when working with long contexts

The computational complexity of the Transformer architecture
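A quick back-of-the-envelope calculation shows why naive attention struggles at this scale. Standard attention materializes an n × n score matrix per head per layer, so both that memory and the QKᵀ compute grow quadratically with context length n. The key/value cache used during generation grows linearly with n and with the number of key/value heads; multi-query attention shares a single key/value head across all query heads. The sketch assumes 16-bit (2-byte) values and a LLaMA-7B-like shape (32 layers, 32 heads of dimension 128). These figures are illustrative and not taken from the article.

```python
BYTES_FP16 = 2  # assumed 16-bit values


def attention_scores_bytes(seq_len, num_heads=1, bytes_per_value=BYTES_FP16):
    """Memory to materialize the full seq_len x seq_len score matrix in one layer."""
    return seq_len * seq_len * num_heads * bytes_per_value


def qk_flops(seq_len, head_dim, num_heads=1):
    """FLOPs for Q @ K^T in one layer (multiply-add counted as 2): quadratic in seq_len."""
    return 2 * seq_len * seq_len * head_dim * num_heads


def kv_cache_bytes(seq_len, num_layers, num_kv_heads, head_dim, bytes_per_value=BYTES_FP16):
    """Keys plus values cached for every layer during generation: linear in seq_len."""
    return 2 * num_layers * seq_len * num_kv_heads * head_dim * bytes_per_value


GB = 1e9
# One head, one layer: 2K-token context vs 100K-token context.
short_ctx = attention_scores_bytes(2_048)      # 8,388,608 bytes (~8.4 MB)
long_ctx = attention_scores_bytes(100_000)     # 20,000,000,000 bytes (20 GB)
flops = qk_flops(100_000, head_dim=128)        # 2.56e12 FLOPs per head per layer
print(f"score matrix, 1 head: {short_ctx / GB:.4f} GB -> {long_ctx / GB:.0f} GB")

# KV cache at 100K tokens for the assumed 7B-like shape.
mha = kv_cache_bytes(100_000, num_layers=32, num_kv_heads=32, head_dim=128)  # ~52.4 GB
mqa = kv_cache_bytes(100_000, num_layers=32, num_kv_heads=1, head_dim=128)   # ~1.64 GB
print(f"KV cache: multi-head {mha / GB:.1f} GB, multi-query {mqa / GB:.2f} GB")
```

Under these assumptions, a single head in a single layer would need a quarter of an 80GB A100 just for its 100K-token score matrix. FlashAttention avoids storing that matrix by computing attention in tiles, and multi-query attention cuts the KV cache by the number of heads.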

Why does this matter?

Efficient training and inference are crucial to making LLMs faster and cheaper to run. These techniques speed up training and make inference more efficient, which lets LLMs handle much longer contexts. That not only reduces costs but can also improve language understanding and generation.

What Else Is Happening

🔥 This amazing shader auto-converts 2D images to 3D in 5 minutes!

🌟 AI reconstructs 3D scenes from reflections in the human eye

📱 Microsoft releases a Bing widget for iOS for direct access to its AI chatbot

🚀 Google's Project Tailwind to get a new name and early access soon!

🚘 JLR partners with Everstream Analytics to tackle supply chain issues with AI

Trending Tools

Brief AI: Generate article summaries instantly with BRIEF.AI - Simplify reading.

Gated: Share your focus online with Gated - See what matters, skip the rest.

ChatFlow: Answer visitor questions instantly with your AI chatbot - ChatGPT for any website.

Intellecs AI: Retrieve information efficiently - Upload PDFs, get instant answers.

Tended AI: Impress customers with AI-generated responses - Complete tenders in minutes.

Sonic Link: Chat and interact with your AI replica - Connect on a whole new level.

Classpoint: Transform presentations with whiteboard tools, polls, and AI-generated questions.

Olvy: Organize and analyze feedback effortlessly - Integrated AI for user insights.

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’, designed specifically for Engineering Leaders and AI enthusiasts.

Thanks for reading, and see you tomorrow.