Meta Introduces 'Prompt Engineering with Llama 2'

Plus: NVIDIA releases AI RTX Video HDR, Google introduces AutoRT.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 196th edition of The AI Edge newsletter. This edition brings you Meta's new guide, ‘Prompt Engineering with Llama 2’.

And a huge shoutout to our incredible readers. We appreciate you 😊

In today’s edition:

📖 Meta introduces guide on ‘Prompt Engineering with Llama 2’

🎬 NVIDIA’s AI RTX Video HDR transforms video to HDR quality

🤖 Google introduces a model for orchestrating robotic agents

📚 Knowledge Nugget: AI is not just Gen AI

Let’s go!

Meta releases guide on ‘Prompt Engineering with Llama 2’

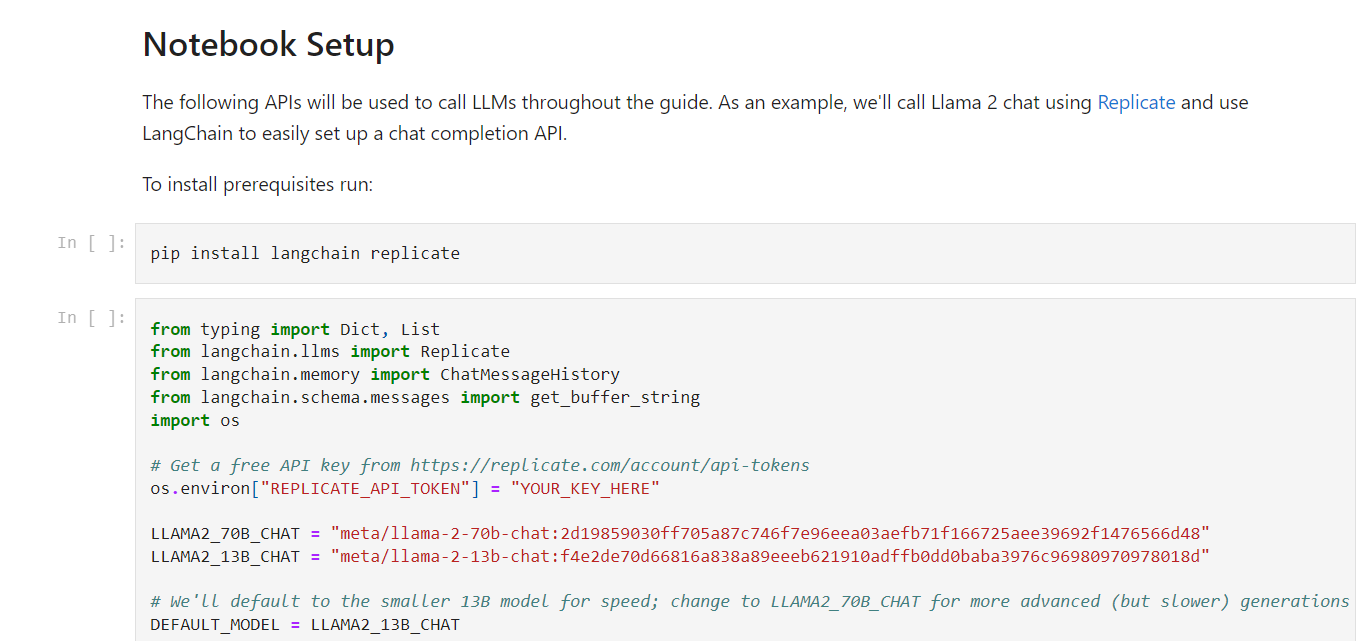

Meta has introduced ‘Prompt Engineering with Llama 2’, an interactive guide created by research teams at Meta. It covers prompt engineering and best practices for developers, researchers, and enthusiasts working with LLMs to produce stronger outputs, and it is a new resource for the Llama community.

Access the Jupyter Notebook in the llama-recipes repo ➡️ https://bit.ly/3vLzWRL
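
To give a flavor of what prompt engineering with Llama 2 involves, here is a minimal sketch that wraps a system prompt and a user message in the chat template used by the Llama 2 chat models. It is not taken from Meta's notebook: the function name `build_llama2_chat_prompt` and the example strings are our own, and we assume the tokenizer adds the beginning-of-sequence token for you.

```python
def build_llama2_chat_prompt(user_message: str, system_prompt: str = "") -> str:
    """Format a single-turn prompt in the Llama 2 chat template.

    Hypothetical helper for illustration only; it is not part of any
    Meta library. The BOS token is assumed to be added by the tokenizer.
    """
    user_message = user_message.strip()
    if system_prompt:
        # The system prompt sits inside <<SYS>> tags within the first [INST] block.
        return (
            f"[INST] <<SYS>>\n{system_prompt.strip()}\n<</SYS>>\n\n"
            f"{user_message} [/INST]"
        )
    # Without a system prompt, only the user turn is wrapped.
    return f"[INST] {user_message} [/INST]"


if __name__ == "__main__":
    prompt = build_llama2_chat_prompt(
        "Summarize the benefits of HDR video in two sentences.",
        system_prompt="You are a concise technical assistant.",
    )
    print(prompt)
```

A clear system prompt plus a specific, bounded request (for example, "in two sentences") is the kind of small change that tends to produce more useful responses.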

Why does this matter?

Having these resources helps the LLM community learn how to craft better prompts that lead to more useful model responses. Overall, it enables people to get more value from LLMs like Llama.

NVIDIA introduces AI RTX Video HDR, which transforms video to HDR quality

NVIDIA released AI RTX Video HDR, which uses AI to convert standard video to HDR quality. It works with RTX Video Super Resolution and requires an HDR10-compliant monitor.

RTX Video HDR is available in Chromium-based browsers, including Google Chrome and Microsoft Edge. To enable the feature, users must download and install the January Studio driver, enable Windows HDR capabilities, and enable HDR in the NVIDIA Control Panel under "RTX Video Enhancement."

Why does this matter?

AI RTX Video HDR gives people a new way to enhance the video viewing experience. Using AI to transform standard video into HDR quality makes content look more vivid and realistic, and it lets users experience cinematic-quality video in commonly used web browsers.

Google introduces a model for orchestrating robotic agents

Google introduces AutoRT, a system for orchestrating large-scale robotic agents. It uses existing foundation models to deploy robots in new scenarios with minimal human supervision. AutoRT leverages vision-language models for scene understanding and grounding, and LLMs for proposing instructions to a fleet of robots.

By tapping into the knowledge of foundation models, AutoRT can reason about autonomy and safety while scaling up data collection for robot learning. The system successfully collects diverse data from over 20 robots in multiple buildings, demonstrating its ability to align with human preferences.
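
The flow described above (scene understanding, then instruction proposals, then a safety check before dispatch) can be sketched roughly as follows. This is a toy illustration, not AutoRT's code: the vision-language model and the LLM are replaced by simple stub functions, and every name here (`Robot`, `describe_scene`, `propose_instructions`, `is_safe`, `orchestrate`, the `UNSAFE_WORDS` rule, and the fallback message) is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Robot:
    name: str
    visible_objects: List[str]  # stand-in for a real camera image


def describe_scene(robot: Robot) -> List[str]:
    """Stub for a vision-language model: returns labels of objects in view."""
    return list(robot.visible_objects)


def propose_instructions(objects: List[str]) -> List[str]:
    """Stub for an LLM: proposes one candidate task per visible object."""
    return [f"pick up the {obj}" for obj in objects]


# Hypothetical safety rule: reject tasks that mention these words.
UNSAFE_WORDS = ("knife", "person")


def is_safe(instruction: str) -> bool:
    return not any(word in instruction for word in UNSAFE_WORDS)


def orchestrate(fleet: List[Robot]) -> Dict[str, str]:
    """Assign each robot its first safe proposed task, or defer to a human."""
    plan = {}
    for robot in fleet:
        objects = describe_scene(robot)
        candidates = [t for t in propose_instructions(objects) if is_safe(t)]
        plan[robot.name] = candidates[0] if candidates else "ask a human for help"
    return plan


if __name__ == "__main__":
    fleet = [Robot("robot-1", ["knife", "sponge"]), Robot("robot-2", ["knife"])]
    for name, task in orchestrate(fleet).items():
        print(f"{name}: {task}")
```

In a real system the stubs would be calls to actual foundation models and the safety check would be far richer, but the loop shows why such a design can scale to many robots with little human supervision.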

Why does this matter?

This allows for large-scale data collection and training of robotic systems while also reasoning about key factors like safety and human preferences. AutoRT represents a scalable approach to real-world robot learning that taps into the knowledge within foundation models. This could enable faster deployment of capable and safe robots across many industries.

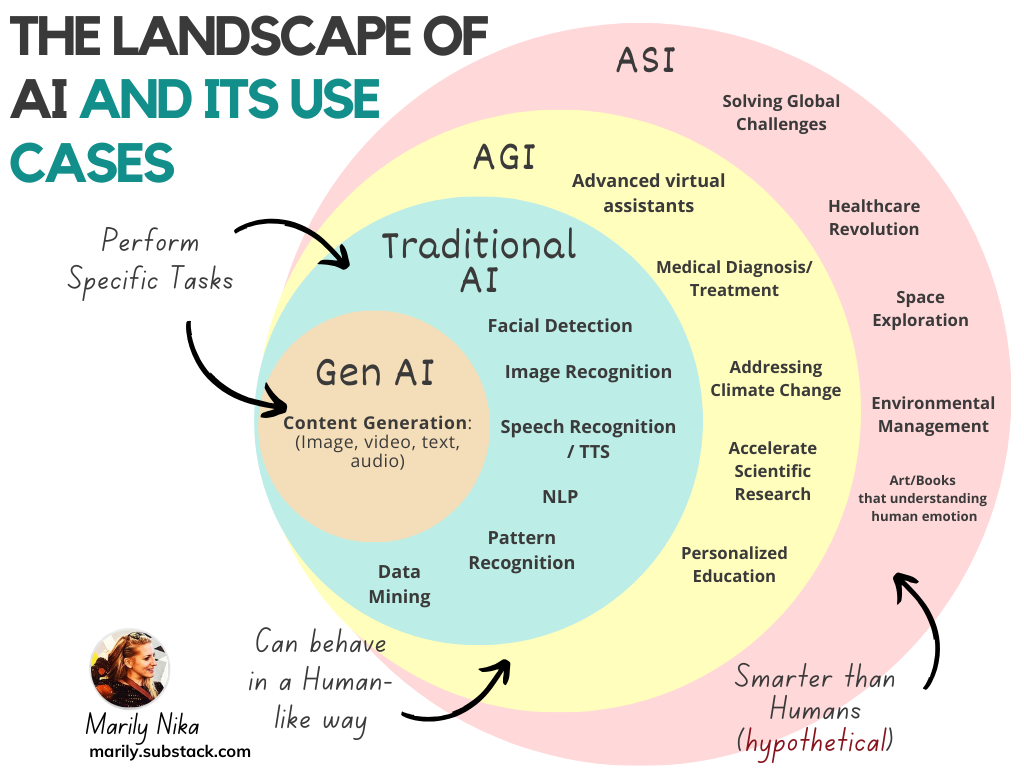

Knowledge Nugget: AI is not just Gen AI.

AI is not just limited to Generative AI. There are various subsets and use cases of AI. Traditional AI includes computer vision, speech recognition, NLP, robotics, and data analytics. Gen AI, on the other hand, focuses on generating images, videos, music, and text and assisting in design and game development.

Looking ahead, there is the potential for Artificial General Intelligence (AGI) and Artificial Superintelligence (ASI) to tackle complex problems, accelerate scientific research, revolutionize healthcare, and even provide solutions to global challenges. AI is a vast and evolving field with diverse applications.

Why does this matter?

This broader view of AI highlights the vast potential of artificial intelligence beyond just generative models. While tools like DALL-E are impressively creative, AI has so many other critical use cases across industries, science, and society. Considering the full scope of AI applications underscores how transformative the technology can be in areas like healthcare, transportation, energy, and more.

What Else Is Happening❗

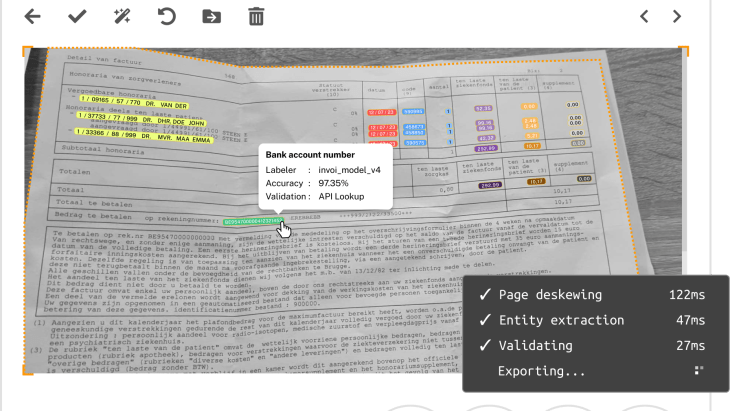

🤑 Google's Gradient invests $2.4M in Send AI for enterprise data extraction

Dutch startup Send AI has secured €2.2M ($2.4M) in funding from Google's Gradient Ventures and Keen Venture Partners to develop its document processing platform. The company uses small, open-source AI models to help enterprises extract data from complex documents, such as PDFs and paper files.

🎨 Google Arts & Culture has launched Art Selfie 2

The feature uses Gen AI to create stylized images around users' selfies. With over 25 styles, users can see themselves as an explorer, a muse, or a medieval knight. It also provides topical facts and lets users explore related stories and artifacts.

🤖 Google announced new AI features for education at the Bett ed-tech event in the UK

These features include AI suggestions for questions at different timestamps in YouTube videos and the ability to turn a Google Form into a practice set with AI-generated answers and hints. Google is also introducing the Duet AI tool to assist teachers in creating lesson plans.

🎁 Etsy has launched a new AI feature, "Gift Mode"

Gift Mode generates over 200 gift guides based on specific preferences. Users can take an online quiz to provide information about who they are shopping for, the occasion, and the recipient's interests. The feature then generates personalized gift guides from the millions of items listed on the platform, using machine learning and OpenAI's GPT-4.

💔 Three Google DeepMind researchers have left the company to start their own AI startup named ‘Uncharted Labs’

The team, consisting of David Ding, Charlie Nash, and Yaroslav Ganin, previously worked on Gen AI systems for images and music at Google. They have already raised $8.5M of their $10M goal.

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From ML to ChatGPT to generative AI and LLMs, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊