Jamba Triples AI Throughput on Lengthy Text

Plus: Google DeepMind launches SAFE, X’s Grok gets a major upgrade

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 242nd edition of The AI Edge newsletter. This edition covers AI21 Labs’ Jamba, which delivers 3x the throughput on long contexts.

And a huge shoutout to our incredible readers. We appreciate you😊

In today’s edition:

🤖AI21 Labs’ Jamba triples AI throughput

📃Google DeepMind's AI fact-checker outperforms humans

💻X’s Grok gets a major upgrade

💡 Knowledge Nugget: Everything you need to know about identifying hallucinations by LLMs

Let’s go!

AI21 Labs’ Jamba triples AI throughput

AI21 Labs has released Jamba, the first production-grade AI model based on the Mamba architecture. Jamba combines the strengths of traditional Transformer models with those of the Mamba structured state space model (SSM), resulting in a model that is both powerful and efficient. It offers a large context window of 256K tokens while still fitting on a single GPU.

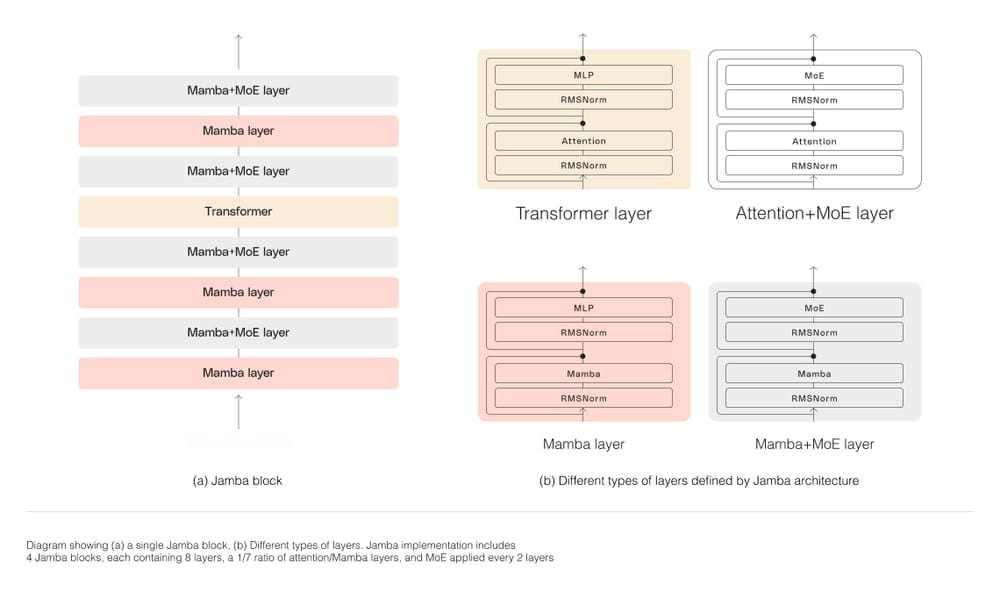

Jamba's hybrid architecture, composed of Transformer, Mamba, and mixture-of-experts (MoE) layers, optimizes for memory, throughput, and performance simultaneously.
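One rough way to see why swapping most attention layers for Mamba layers saves memory at long context: a Transformer's key-value (KV) cache grows with both the number of attention layers and the sequence length. The sketch below is a back-of-the-envelope estimate, not AI21's published configuration. The layer counts, head count, head size, and 16-bit values are all hypothetical, and the estimate ignores the fixed-size state that Mamba layers keep.

```python
def kv_cache_bytes(attn_layers, kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Estimate KV-cache size for one sequence.

    Each attention layer stores one key tensor and one value tensor
    (hence the factor of 2), each of shape [seq_len, kv_heads, head_dim].
    """
    return 2 * attn_layers * kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical settings, chosen only for illustration.
SEQ_LEN = 256_000   # roughly the 256K-token window mentioned above
KV_HEADS = 8        # assumed number of key/value heads
HEAD_DIM = 128      # assumed size of each head

# A stack where all 32 layers use attention (hypothetical baseline).
full_attention = kv_cache_bytes(32, KV_HEADS, HEAD_DIM, SEQ_LEN)

# A hybrid stack where only 4 of the 32 layers use attention (hypothetical).
hybrid = kv_cache_bytes(4, KV_HEADS, HEAD_DIM, SEQ_LEN)

GIB = 1024 ** 3
print(f"all-attention stack: {full_attention / GIB:.2f} GiB")
print(f"hybrid stack:        {hybrid / GIB:.2f} GiB")
print(f"reduction factor:    {full_attention / hybrid:.0f}x")
```

Under these assumptions the cache shrinks in direct proportion to the number of attention layers removed, which is the kind of saving that lets a long context fit in a single GPU's memory.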

The model has demonstrated strong results on various benchmarks, matching or outperforming state-of-the-art models in its size class. Jamba is being released with open weights under the Apache 2.0 license and will be accessible from the NVIDIA API catalog.

Why does this matter?

Jamba’s hybrid architecture makes it the only model in its size class that can fit up to 140K tokens of context on a single GPU. This could make AI tasks like machine translation and document analysis much faster and cheaper, without requiring extensive computing resources.

Google DeepMind's AI fact-checker outperforms humans

Google DeepMind has developed an AI system called Search-Augmented Factuality Evaluator (SAFE) that can evaluate the accuracy of information generated by large language models more effectively than human fact-checkers. In a study, SAFE matched human ratings 72% of the time and was correct in 76% of disagreements with humans.

While some experts question the use of "superhuman" to describe SAFE's performance, arguing for benchmarking against expert fact-checkers, the system's cost-effectiveness is undeniable, being 20 times cheaper than human fact-checkers.
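The two figures reported above are an agreement rate and a "who was right when they disagreed" rate. A minimal sketch of how such numbers could be computed is shown below. The function names, label strings, and the five toy ratings are hypothetical and are not data from the DeepMind study; the sketch only illustrates the arithmetic.

```python
def agreement_rate(model_labels, human_labels):
    """Fraction of items where the model and the human gave the same label."""
    if len(model_labels) != len(human_labels):
        raise ValueError("label lists must have the same length")
    matches = sum(m == h for m, h in zip(model_labels, human_labels))
    return matches / len(model_labels)


def disagreement_win_rate(model_labels, human_labels, adjudicated_labels):
    """Among items where model and human disagree, the fraction where the
    model's label matches an independent adjudicated label."""
    disagreements = [
        (m, a)
        for m, h, a in zip(model_labels, human_labels, adjudicated_labels)
        if m != h
    ]
    if not disagreements:
        return None  # no disagreements, so the rate is undefined
    wins = sum(m == a for m, a in disagreements)
    return wins / len(disagreements)


# Toy ratings for five facts (hypothetical, for illustration only).
model = ["supported", "supported", "not_supported", "supported", "not_supported"]
human = ["supported", "not_supported", "not_supported", "supported", "supported"]
truth = ["supported", "supported", "not_supported", "supported", "supported"]

print(agreement_rate(model, human))
print(disagreement_win_rate(model, human, truth))
```

Note that the second rate depends on who adjudicates the disagreements, which is why critics ask for comparison against expert fact-checkers.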

Why does this matter?

As language models become more powerful and widely used, SAFE could combat misinformation and ensure the accuracy of AI-generated content. SAFE’s efficiency could be a game-changer for consumers relying on AI for tasks like research and content creation.

X’s Grok gets a major upgrade

xAI, Elon Musk's AI startup, has introduced Grok-1.5, an upgraded AI model for its Grok chatbot. The new version improves reasoning, especially in coding and math tasks, and handles longer and more complex inputs with a 128,000-token context window.

Grok chatbots are known for their ability to discuss controversial topics with a rebellious touch. The improved model will first be tested by early users on X, with plans for wider availability later. This release follows the open-sourcing of Grok-1 and the inclusion of the chatbot in X's $8-per-month Premium plan.

Why does this matter?

Grok-1.5 is a step forward for AI assistants. Its larger context window and real-time data access could mean better help with complex tasks and a better grasp of user intent, which could make chatbots more helpful and reliable.

Enjoying the daily updates?

Refer your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: Everything you need to know about identifying hallucinations by LLMs

In her article, the author discusses a paper that introduces ExHalder, a framework for detecting hallucinations in news headlines generated by large language models (LLMs). The paper's authors address the challenge of limited labeled data for training hallucination detectors by creating a system that identifies misleading headlines and provides natural language explanations for its decisions.

ExHalder consists of a reasoning classifier, a hinted classifier, and an explainer, which work together during training and inference to improve the accuracy of hallucination detection by exploring the problem from different angles.

Why does this matter?

ExHalder's ability to detect and explain hallucinations in LLM-generated news headlines is crucial for maintaining media integrity and preventing misinformation. Its potential extends beyond journalism, with applications in finance and healthcare, making it a valuable tool in the fight against misleading information across various domains.

Source

What Else Is Happening❗

🛡️Microsoft tackles Gen AI risks with new Azure AI tools

Microsoft has launched new Azure AI tools to address the safety and reliability risks associated with generative AI. The tools, currently in preview, aim to prevent prompt injection attacks, hallucinations, and the generation of personal or harmful content. The offerings include Prompt Shields, prebuilt templates for safety-centric system messages, and Groundedness Detection. (Link)

🤝Lightning AI partners with Nvidia to launch Thunder AI compiler

Lightning AI, in collaboration with Nvidia, has launched Thunder, an open-source compiler for PyTorch, to speed up AI model training by optimizing GPU usage. The company claims that Thunder can achieve up to a 40% speed-up for training large language models compared to unoptimized code. (Link)

🥊SambaNova's new AI model beats Databricks' DBRX

SambaNova Systems' Samba-CoE v0.2 Large Language Model outperforms competitors like Databricks' DBRX, MistralAI's Mixtral-8x7B, and xAI's Grok-1. With 330 tokens per second using only 8 sockets, Samba-CoE v0.2 demonstrates remarkable speed and efficiency without sacrificing precision. (Link)

🌍Google.org launches Accelerator to empower nonprofits with Gen AI

Google.org has announced a six-month accelerator program to support 21 nonprofits in leveraging generative AI for social impact. The program provides funding, mentorship, and technical training to help organizations develop AI-powered tools in areas such as climate, health, education, and economic opportunity, aiming to make AI more accessible and impactful. (Link)

📱Pixel 8 to get on-device AI features powered by Gemini Nano

Google is set to introduce on-device AI features like recording summaries and smart replies on the Pixel 8, powered by its small-sized Gemini Nano model. The features will be available as a developer preview in the next Pixel feature drop, marking a shift from Google's primarily cloud-based AI approach. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From ML to ChatGPT to generative AI and LLMs, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊