Inflection 2.5: A New Era of Personal AI is Here!

Plus: Google announces LLMs on device with MediaPipe, and GaLore: A new method for memory-efficient LLM training

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 227th edition of The AI Edge newsletter. This edition brings you Inflection 2.5: A new era of personal AI is here.

And a huge shoutout to our amazing readers. We appreciate you😊

In today’s edition:

🗣️Inflection 2.5: A new era of personal AI is here!

and

🔍Google announces LLMs on device with MediaPipe

🤖GaLore: A new method for memory-efficient LLM training

📚 Knowledge Nugget: Comparison of fusion mechanisms in multimodal AI models

Let’s go!

Inflection 2.5: A new era of personal AI is here!

Inflection.ai, the company behind the personal AI app Pi, has recently introduced Inflection-2.5, an upgraded large language model (LLM) that competes with top LLMs like GPT-4 and Gemini. The in-house upgrade offers enhanced capabilities and improved performance, combining raw intelligence with the company's signature personality and empathetic fine-tuning.

This upgrade has made significant progress in coding and mathematics, keeping Pi at the forefront of technological innovation. With Inflection-2.5, Pi has world-class real-time web search capabilities, providing users with high-quality breaking news and up-to-date information. This empowers Pi users with a more intelligent and empathetic AI experience.

Why does it matter?

Inflection-2.5 challenges leading language models like GPT-4 and Gemini with its raw capability, signature personality, and empathetic fine-tuning. This gives startups and enterprises a new alternative for building personalized applications with generative AI capabilities.

Google announces LLMs on device with MediaPipe

Google’s new experimental release, the MediaPipe LLM Inference API, allows LLMs to run fully on-device across platforms. This is a significant development considering LLMs' memory and computing demands, which are over a hundred times larger than those of traditional on-device models.

The MediaPipe LLM Inference API is designed to streamline on-device LLM integration for web developers and supports Web, Android, and iOS platforms. It offers several key features and optimizations that enable on-device AI, including new operations, quantization, caching, and weight sharing. Developers can now run LLMs on devices like laptops and phones using the MediaPipe LLM Inference API.
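To make the quantization idea concrete, here is a minimal sketch of symmetric 8-bit weight quantization in plain Python. It is an illustration of the general technique only, not MediaPipe's actual scheme or API; the function names `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127].

    Illustrative only; real on-device runtimes may use per-channel scales,
    4-bit formats, or other schemes.
    """
    max_abs = max(abs(w) for w in weights)
    # One shared scale for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]  # hypothetical weight values
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
print(quantized, restored)
```

Each weight is stored in one byte instead of four (for float32), at the cost of a rounding error of at most half the scale per weight.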

Why does it matter?

Running LLMs on devices using MediaPipe and TensorFlow Lite allows for direct deployment, reducing dependence on cloud services. On-device LLM operation ensures faster and more efficient inference, which is crucial for real-time applications like chatbots or voice assistants. This innovation helps with rapid prototyping of LLMs and offers streamlined platform integration.

GaLore: A new method for memory-efficient LLM training

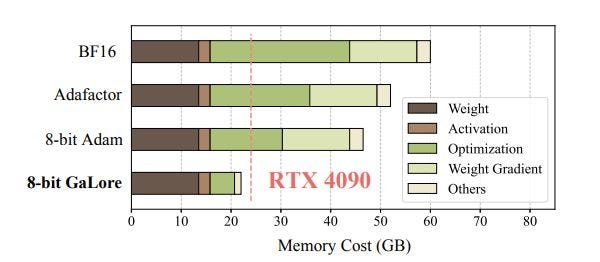

Researchers have developed a new technique called Gradient Low-Rank Projection (GaLore) to significantly reduce memory usage while training large language models. Tests have shown that GaLore achieves results similar to full-rank training while reducing optimizer state memory usage by up to 65.5% when pre-training large models like LLaMA.

It also allows pre-training a 7 billion parameter model from scratch on a single 24GB consumer GPU without needing extra techniques. This approach works well for fine-tuning and outperforms low-rank methods like LoRA on GLUE benchmarks while using less memory. GaLore is optimizer-independent and can be used with other techniques like 8-bit optimizers to save additional memory.
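For readers who want a feel for the mechanics, here is a minimal sketch of a gradient low-rank projection step in plain Python. It rests on several assumptions that are not from the paper's code: plain momentum stands in for Adam, subspace iteration stands in for an SVD, the gradient is assumed to have rank at least `rank`, and all function names are hypothetical.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def orthonormalize(q):
    """Modified Gram-Schmidt on the columns of q (m x r)."""
    cols = []
    for col in transpose(q):
        for prev in cols:
            dot = sum(x * y for x, y in zip(col, prev))
            col = [x - dot * y for x, y in zip(col, prev)]
        norm = math.sqrt(sum(x * x for x in col))
        cols.append([x / norm for x in col])
    return transpose(cols)


def top_subspace(grad, rank, iters=50, seed=0):
    """Approximate the top `rank` left singular vectors of grad (m x n)."""
    rng = random.Random(seed)
    q = orthonormalize([[rng.gauss(0, 1) for _ in range(rank)] for _ in grad])
    grad_t = transpose(grad)
    for _ in range(iters):
        # Power step on grad @ grad^T, then re-orthonormalize the columns.
        q = orthonormalize(matmul(grad, matmul(grad_t, q)))
    return q


def projected_step(weight, grad, proj, momentum, lr=0.1, beta=0.9):
    """One update where the optimizer state lives in the r x n subspace."""
    low_rank_grad = matmul(transpose(proj), grad)  # r x n instead of m x n
    momentum = [[beta * m_old + g for m_old, g in zip(m_row, g_row)]
                for m_row, g_row in zip(momentum, low_rank_grad)]
    update = matmul(proj, momentum)  # project back to m x n
    weight = [[w - lr * u for w, u in zip(w_row, u_row)]
              for w_row, u_row in zip(weight, update)]
    return weight, momentum
```

The point of the design is that `momentum` has shape r x n rather than m x n, so the optimizer state shrinks with the rank while the weights themselves stay full-rank.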

Why does it matter?

GaLore exploits the low-rank structure of the gradient matrix to minimize the memory cost of storing gradient statistics for adaptive optimization algorithms. It enables training large models like LLaMA with reduced memory consumption, making training more accessible and efficient for researchers.
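A quick back-of-the-envelope count shows where the savings come from. The sketch below assumes an Adam-style optimizer that keeps two moment buffers per weight, and a projected variant that keeps one m x r projection matrix plus two r x n buffers. The layer shape and rank are hypothetical, and this per-matrix count is illustrative only; it is not meant to reproduce the whole-model figure reported above.

```python
def adam_state_elems(m, n):
    """Full-rank Adam keeps first and second moments for every weight."""
    return 2 * m * n


def projected_state_elems(m, n, r):
    """Projected variant: an m x r projection plus two r x n moment buffers."""
    return m * r + 2 * r * n


m, n, r = 4096, 4096, 128  # hypothetical layer shape and rank
full = adam_state_elems(m, n)
low = projected_state_elems(m, n, r)
print(f"full-rank: {full:,} elements, projected: {low:,} elements")
print(f"per-matrix saving: {1 - low / full:.1%}")
```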


Knowledge Nugget: Comparison of fusion mechanisms in multimodal AI models

In this article, the authors compare different multimodal AI models, conducting experiments to understand their efficacy. Here are several critical experiments and their results:

The penguin test was conducted on Gemini, GPT-4, and LLaVA, with each model integrating modalities in its own distinct way.

“Which Way Is The Man Facing?” is an experiment that drew different responses from the models, highlighting the nuances in their fusion mechanisms and how they combine their capabilities.

The Bias Introduction Test illustrates how the models' construction and fusion processes lead to varied results.

Gemini's sequential processing involves feeding tokens to the LLM, which compounds misrepresentation.

GPT-4 and LLaVA use parallel processing to detect discrepancies more effectively.

Further, the article discusses challenges in multimodal systems and the impact of providing the current visual state to the Copilot engine to improve intuition for operations.

Why does it matter?

Comparing the fusion mechanisms in multimodal AI models is crucial for advancing AI systems' understanding, performance, and ethical considerations. This ultimately contributes to the responsible application of AI technologies in the real world.

What Else Is Happening❗

📱Adobe makes creating social content on mobile easier

Adobe has launched an updated version of Adobe Express, a mobile app that now includes Firefly AI models. The app offers features such as a "Text to Image" generator, a "Generative Fill" feature, and a "Text Effects" feature, which can be utilized by small businesses and creative professionals to enhance their social media content. Creative Cloud members can also access and work on creative assets from Photoshop and Illustrator directly within Adobe Express. (Link)

🛡️OpenAI now allows users to add MFA to user accounts

To add extra security to OpenAI accounts, users can now enable Multi-Factor Authentication (MFA). To set up MFA, users can follow the instructions in the OpenAI Help Center article "Enabling Multi-Factor Authentication (MFA) with OpenAI." With MFA enabled, users must enter a verification code along with their password when logging in, adding an extra layer of protection against unauthorized access. (Link)

🏅US Army is building generative AI chatbots in war games

The US Army is experimenting with AI chatbots for war games. OpenAI's technology is used to train the chatbots to provide battle advice. The AI bots act as military commanders' assistants, offering proposals and responding within seconds. Although the potential of AI is acknowledged, experts have raised concerns about the risks involved in high-stakes situations. (Link)

🧑‍🎨 Claude 3 builds a painting app in 2 minutes and 48 seconds

Claude 3, the latest AI model by Anthropic, created a multiplayer drawing app in just 2 minutes and 48 seconds. The app lets multiple users draw collaboratively in real time, with user authentication and database integration. The AI community praised the app, highlighting the transformative potential of AI in software development. Claude 3 could speed up development cycles and make software creation more accessible. (Link)

🧪Cognizant launches AI lab in San Francisco to drive innovation

Cognizant has opened an AI lab in San Francisco to accelerate AI adoption in businesses. The lab, staffed with top researchers and developers, will focus on innovation, research, and developing cutting-edge AI solutions. Cognizant's investment in AI research positions it as a thought leader in the AI space, offering advanced solutions to meet the modernization needs of global enterprises. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊