IBM’s AI Chip Mimics Human Brain

Plus: NVIDIA’s trillion-token Data Curator, a guide to developing trustworthy LLMs.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 84th edition of The AI Edge newsletter. This edition brings you IBM’s brain-like chip for greener, more efficient AI.

And a huge shoutout to our incredible readers. We appreciate you!😊

In today’s edition:

🧠 IBM’s AI chip mimics human brain

🚀 NVIDIA’s tool to curate trillion-token datasets for pretraining LLMs

🔍 Trustworthy LLMs: A survey and guideline for evaluating LLMs' alignment

📚 Knowledge Nugget: Generative AI’s New Clothes by Atin Gupta

Let’s go!

IBM’s AI chip mimics the human brain

The human brain achieves remarkable performance while consuming little power. IBM’s new prototype chip works in a way similar to the connections in human brains, so it could make AI more energy efficient and less draining on the batteries of devices like smartphones.

The chip is primarily analogue but also has digital elements, which makes it easier to put into existing AI systems.

It addresses the concerns raised about emissions from warehouses full of computers powering AI systems. It could also cut the water needed to cool power-hungry data centers.

Why does this matter?

The advancements suggest that brain-like chips could emerge in the near future. That would mean larger and more complex AI workloads could run in low-power or battery-constrained environments such as cars, mobile phones, and cameras. It promises new and better AI applications at reduced cost.

NVIDIA’s tool to curate trillion-token datasets for pretraining LLMs

Most software and tools built to create massive datasets for training LLMs are either not publicly released or not scalable. This forces LLM developers to build their own tools to curate large language datasets. To meet this growing need, NVIDIA has developed and released the NeMo Data Curator, a scalable data-curation tool that enables you to curate trillion-token multilingual datasets for pretraining LLMs. It can scale its curation tasks to thousands of compute cores.

The tool curates high-quality data that leads to improved LLM downstream performance and will significantly benefit LLM developers attempting to build pretraining datasets.

Why does this matter?

Apart from improving downstream model performance with high-quality data, applying the tool’s modules to your datasets reduces the burden of combing through unstructured data sources. It could also greatly reduce pretraining costs, meaning faster and cheaper development of AI applications.

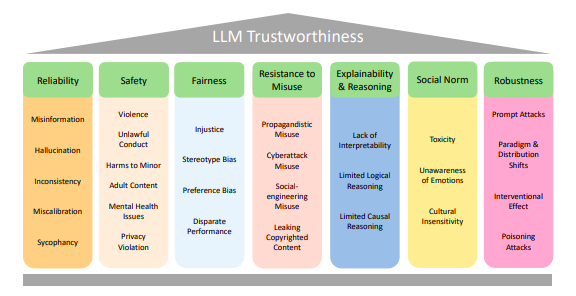

Trustworthy LLMs: A survey and guideline for evaluating LLMs' alignment

Ensuring alignment, which means making models behave in accordance with human intentions, has become a critical task before deploying LLMs in real-world applications. This new research proposes a more fine-grained taxonomy of LLM alignment requirements. It not only helps practitioners unpack and understand the dimensions of alignment but also provides actionable guidelines for the data collection efforts needed to develop desirable alignment processes.

It also thoroughly surveys the categories of LLM trustworthiness that are likely to be crucial to improve, and shows how to build evaluation datasets for alignment accordingly.

Why does this matter?

The proposed framework facilitates a transparent, multi-objective evaluation of LLM trustworthiness, and it enables systematic iteration and deployment of LLMs. For instance, OpenAI devoted six months to iteratively aligning GPT-4 before release. With clear and comprehensive guidance, such a framework could shorten time to market for AI applications that are safe, reliable, and aligned with human values.

Knowledge Nugget: Generative AI’s New Clothes

Generative AI has seen unprecedented interest and usage by consumers. But changing user behavior presents significant challenges to its adoption.

This intriguing article by Atin Gupta discusses the complexity of building AI products. To begin with, companies creating AI software for enterprises face three core, interrelated challenges:

Data - fragmentation, quality, sufficiency, and context

Systems fragmentation

Workflow fragmentation

So what to do? Among other ideas, the author argues that AI adoption should be integrated into the user experience, allowing users to start small with manageable wins.

Why does this matter?

Understanding how AI products are adopted and embraced by users is vital for companies developing these technologies, and it involves more than just the technical aspects. The article helps readers understand how to shape AI products that align with the needs of both sponsors and end users.

What Else Is Happening❗

🚀Google appears to be readying new AI-powered tools for ChromeOS (Link)

👁️🗨️Zoom rewrites policies to make clear user videos aren’t used to train AI (Link)

💰Anthropic raises $100M in funding from Korean telco giant SK Telecom (Link)

📈Modular, AI startup challenging Nvidia, discusses funding at $600M valuation (Link)

🌲California turns to AI to spot wildfires, feeding on video from 1,000+ cameras (Link)

🔍FEC to regulate AI deepfakes in political ads ahead of 2024 election (Link)

🛠️ Trending Tools

User Persona Generator: Get a detailed marketing persona in 10 seconds by describing your business and target audience.

Kyligence Copilot: AI assistant that generates business summaries, identifies high-risk tasks, and guides you to find the root cause.

AI Keywording: Get accurate keywords and compelling descriptions for your images in seconds with our AI-powered tool.

PitchPower: Generate powerful proposals tailored to your clients in seconds by entering some information about your services.

Tweetify It: Turn long-form content into short, engaging posts for any social media platform. Save time every day!

Gling AI: Automatically identify and eliminate silences and unwanted takes from your videos with cutting-edge AI technology.

Chatwith: Create a support agent trained on your website content and API to handle visitor questions and boost customer service.

Backtrack 2.0: Record meetings with a 5-hour window of audio/video that overwrites throughout the day. Turn it into AI notes/summaries.

🧐Monday Musings: AI and the Frontier Paradox

We have been calling many different technologies AI for more than half a century. (Have we?!)

For a long time, detecting objects in images or videos was cutting-edge AI. Now it’s just one of many technologies that allow you to order an autonomous vehicle ride from Waymo in San Francisco. We no longer call it AI. Soon we’ll just call it a car. Similarly, image recognition on ImageNet was a major deep learning breakthrough in 2012 and is now on every smartphone. No longer AI.

This “AI effect” is actually part of a larger human phenomenon we call the frontier paradox.

This interesting read examines the phenomenon in detail and suggests that what we think of as AI is going to change again. And again.

Check it out and let us know what you think!

(Source)

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’ specifically designed for Engineering Leaders and AI Enthusiasts.

Thanks for reading, and see you tomorrow. 😊