Zephyr: Open-source GPT-3.5 Alternative by Hugging Face

Plus: An AI model that understands video, and OpenAI's huge ChatGPT updates.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 135th edition of The AI Edge newsletter. This edition brings you Zephyr-7b-beta, an open-access GPT-3.5 alternative.

And a huge shoutout to our incredible readers. We appreciate you😊

In today’s edition:

🤗 Hugging Face released Zephyr-7b-beta, an open-access GPT-3.5 alternative

🎥 Twelve Labs introduces an AI model that understands video

🚀 OpenAI has rolled out huge ChatGPT updates

📚 Knowledge Nugget: How LLMs are changing search

Let’s go!

Hugging Face released Zephyr-7b-beta, an open-access GPT-3.5 alternative

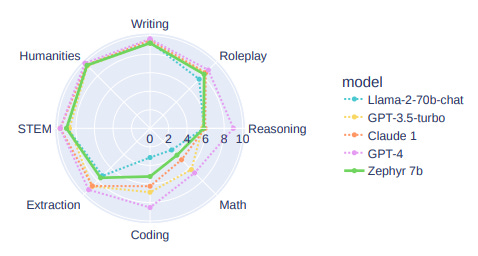

Zephyr-7b-beta, the latest model from Hugging Face’s H4 team, tops all 7B models on chat evals and even beats some models 10x its size. It is as good as ChatGPT on AlpacaEval and outperforms Llama2-Chat-70B on MT-Bench.

Zephyr 7B is a series of chat models based on:

Mistral 7B base model

The UltraChat dataset with 1.4M dialogues from ChatGPT

The UltraFeedback dataset with 64k prompts & completions judged by GPT-4

Here's what the process looks like:
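In outline, the recipe has two stages: supervised fine-tuning on the UltraChat dialogues, then preference alignment on the UltraFeedback comparisons. The Zephyr authors describe the second stage as Direct Preference Optimization (DPO). The sketch below shows the standard DPO loss for a single preference pair in plain Python. It illustrates the objective only and is not the H4 team's training code; the function name, argument names, and the `beta` value of 0.1 are assumptions made for this example.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) completion pair.

    Each argument is the summed log-probability of a completion under
    either the model being trained (policy) or the frozen reference model.
    beta=0.1 is an illustrative default, not a value from the newsletter.
    """
    # How much more the policy likes each completion than the reference does.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)

    # loss = -log(sigmoid(margin)), written in a numerically stable form.
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Policy identical to the reference: the margin is 0, so the loss is log(2).
baseline = dpo_loss(-10.0, -12.0, -10.0, -12.0)

# Policy has shifted probability toward the chosen answer: the loss drops.
improved = dpo_loss(-8.0, -14.0, -10.0, -12.0)
```

The loss only needs log-probabilities of existing completions under two models, which is why this kind of alignment needs no extra sampling from the model during training.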

Why does this matter?

Notably, unlike other approaches, this one requires no human annotation and no sampling. Moreover, because the base LM is small, the resulting chat model can be trained in hours on 16 A100s (80GB). You can run it locally without the need to quantize.
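A rough back-of-the-envelope estimate shows why a 7B model can run without quantization. The sketch below computes the memory needed for the weights alone at a few precisions. The 7e9 parameter count is a nominal round figure, and the estimate ignores activations, the KV cache, and framework overhead, so real usage will be somewhat higher.

```python
def weights_memory_gb(num_params, bytes_per_param):
    """Memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


NOMINAL_7B_PARAMS = 7e9  # assumption: round figure for a "7B" model

# Bytes per parameter for common storage formats.
PRECISIONS = {"fp32": 4, "fp16/bf16": 2, "int8": 1}

estimates = {
    name: weights_memory_gb(NOMINAL_7B_PARAMS, nbytes)
    for name, nbytes in PRECISIONS.items()
}

for name, gigabytes in estimates.items():
    print(f"{name}: about {gigabytes:.0f} GB for weights")
```

At 16-bit precision the weights come to roughly 14 GB, which fits on a single 24 GB GPU, whereas a 70B model at the same precision would need about ten times as much.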

This is an exciting milestone for developers: it could dramatically reduce concerns over cost and latency while letting them experiment and innovate with GPT alternatives.

Twelve Labs introduces an AI model that understands video

Twelve Labs has announced Pegasus-1, its latest video-language foundation model, along with a new suite of Video-to-Text APIs. The company adopts a “Video First” strategy, focusing its model, data, and systems solely on processing and understanding video data. The strategy rests on four core principles:

Efficient Long-form Video Processing

Multimodal Understanding

Video-native Embeddings

Deep Alignment between Video and Language Embeddings

Pegasus-1 shows a massive performance improvement over previous SoTA video-language models and other approaches to video summarization.

Why does this matter?

This may be one of the most important foundational multimodal AI models for video. We already have models that understand text, PDFs, images, and more, but video understanding paves the way for a completely new realm of applications.

OpenAI has rolled out huge ChatGPT updates

You can now chat with PDFs and data files. With new beta features, ChatGPT Plus users can now have it summarize PDFs, answer questions about them, or generate data visualizations based on prompts.

You can now use features without manually switching. ChatGPT Plus users no longer have to select modes like Browse with Bing or DALL-E from the GPT-4 dropdown. Instead, ChatGPT will guess what they want based on context.

Why does this matter?

OpenAI is gradually rolling out new features, retaining ChatGPT as the number one LLM. While it sparked a wave of game-changing tools before, its new innovations will challenge startups to compete better. Either way, OpenAI seems pivotal in driving innovation and advancements in the AI landscape.

Knowledge Nugget: How LLMs are changing search

Google is on “code red” due to the threat of LLMs.

LLMs can rapidly analyze a large number of search results to generate answers for users. And they are leveling the playing field once again. What does this mean for Google going forward?

This short, interesting article explains how LLMs are disrupting traditional search engines. It also discusses how LLMs must improve their ability to use context effectively and the cost challenges of implementing LLM-based search. But with OpenAI’s inference costs dropping and smaller, cheaper models like Llama and Mistral 7B demonstrating progress, the timeline might be shorter than you think.

Why does this matter?

The article addresses the significant shift in the landscape of online search brought on by LLMs and the potential implications for dominant search engines like Google. This transformation may have far-reaching consequences for users and businesses, as it impacts the way we retrieve information.

What Else Is Happening❗

💰Google commits to invest $2 billion in OpenAI rival Anthropic.

Google has already invested $500 million upfront in Anthropic and has agreed to add $1.5 billion more over time. The move follows Amazon’s commitment last month to invest $4 billion in Anthropic. (Link)

💬WhatsApp is working on a new AI support chatbot feature for faster servicing.

The new capability will streamline in-app issue resolution without the need for email. WhatsApp will respond in a chat with AI-generated messages, and users will also be able to reach manual chat support in a few taps. The feature will also resolve common issues and answer questions about WhatsApp features. (Link)

🧠Perplexity announced 2 new in-house models, pplx-7b-chat and pplx-70b-chat.

Both models are built on top of open-source LLMs and are available as an alpha release, via Labs and pplx-api. The AI startup claims the models prioritize intelligence, usefulness, and versatility on an array of tasks, without imposing moral judgments or limitations. (Link)

🆒Google Bard now responds in real time, and you can cut off its response.

Bard previously sent a response only when it was complete, but now you can view a response as it’s being generated. You can switch between “Respond in real time” and “Respond when complete”. Like ChatGPT, you can also cut off the bot mid-response. (Link)

🚀Citibank is planning to grant the majority of its 40,000+ coders access to GenAI.

As part of a small pilot program, the Wall Street giant has quietly allowed about 250 of its developers to experiment with generative AI. Now, it’s planning to expand that program to the majority of its coders next year. (Link)

That's all for now!

If you are new to The AI Edge newsletter, subscribe to get daily AI updates and news directly sent to your inbox for free!

Thanks for reading, and see you tomorrow. 😊