Groq’s New AI Chip Outperforms ChatGPT

Plus: BABILong: The new benchmark to assess LLMs for long docs, Stanford’s AI model identifies sex from brain scans with 90% accuracy.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 214th edition of The AI Edge newsletter. This edition brings you “Groq’s New AI Chip Outperforms ChatGPT.”

And a huge shoutout to our incredible readers. We appreciate you 😊

In today’s edition:

🚀 Groq’s New AI Chip Outperforms ChatGPT

📊 BABILong: The new benchmark to assess LLMs for long docs

👥 Stanford’s AI model identifies sex from brain scans with 90% accuracy

💡 Knowledge Nugget: Superhuman: What can AI do in 30 minutes?

Let’s go!

Groq’s new AI chip turbocharges LLMs, outperforms ChatGPT

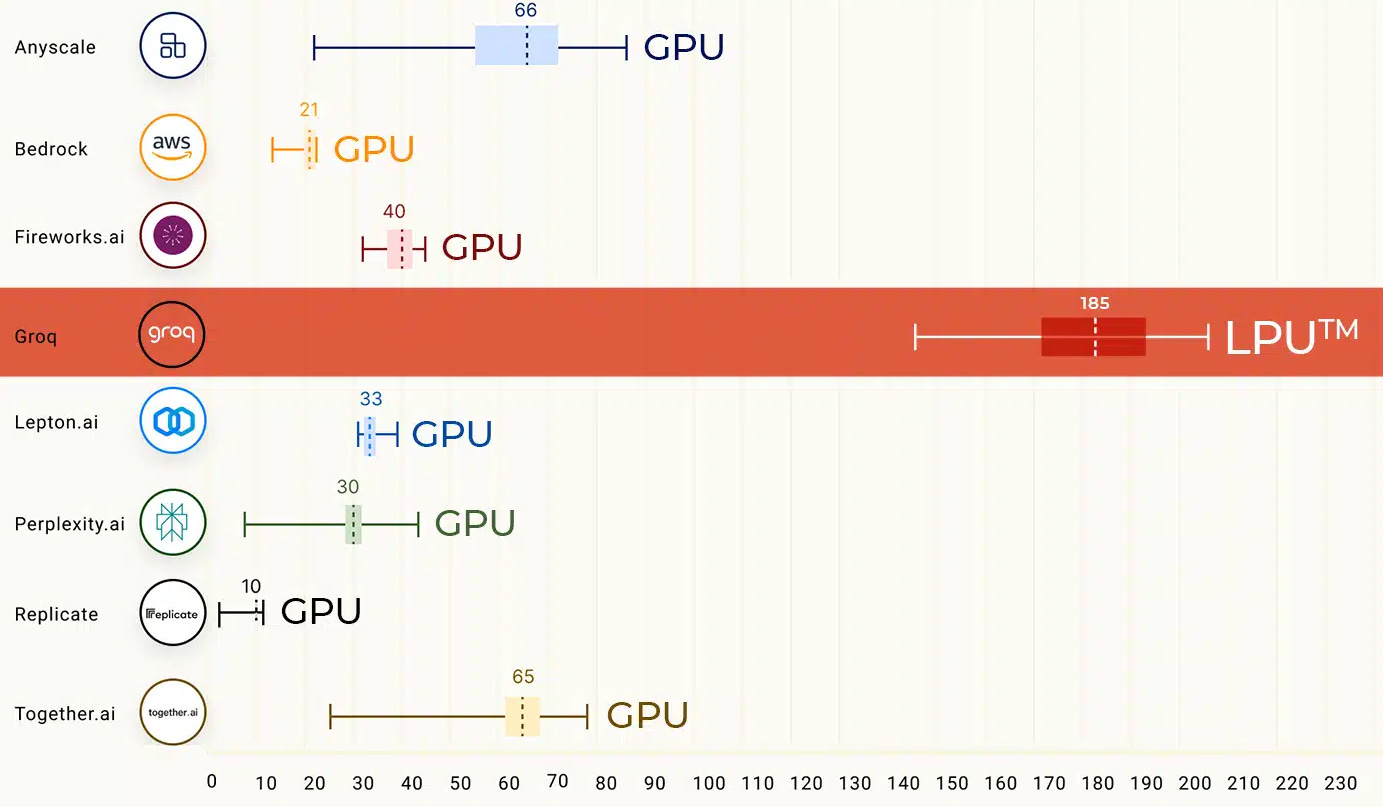

Groq has developed specialized AI hardware, which it calls the first-ever Language Processing Unit (LPU), to speed up AI models that normally run on GPUs. These LPUs can generate up to 500 tokens/second, far more than Gemini Pro and ChatGPT (GPT-3.5), which manage only 30 to 50 tokens/second.
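To make the throughput figures above concrete, here is a minimal sketch that converts tokens/second into waiting time for a single response. The 500-token response length and the assumption of a steady generation rate are ours, chosen only for illustration; real-world latency also depends on factors this ignores.

```python
def generation_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time needed to generate num_tokens at a steady rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Hypothetical response length, picked for illustration only.
RESPONSE_TOKENS = 500

# Rates quoted in the story: up to 500 tokens/s vs. 30 to 50 tokens/s.
rates = {
    "LPU (up to 500 tokens/s)": 500,
    "GPU-served model, low end (30 tokens/s)": 30,
    "GPU-served model, high end (50 tokens/s)": 50,
}

for label, rate in rates.items():
    seconds = generation_time_seconds(RESPONSE_TOKENS, rate)
    print(f"{label}: {seconds:.1f} s")
# LPU (up to 500 tokens/s): 1.0 s
# GPU-served model, low end (30 tokens/s): 16.7 s
# GPU-served model, high end (50 tokens/s): 10.0 s
```

At these rates, the quoted gap works out to roughly a 10x to 17x difference in waiting time for the same response.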

The company has designed its first LPU-based AI chip, named “GroqChip,” which uses a "tensor streaming architecture" that is less complex than that of traditional GPUs, enabling lower latency and higher throughput. This makes the chip a suitable candidate for real-time AI applications such as live-streaming sports or gaming.

Why does it matter?

Groq’s AI chip is the first of its kind in the LPU category. The LPUs developed by Groq can improve the deployment of AI applications and could present an alternative to Nvidia's A100 and H100 chips, which are in high demand and short supply. It also signals progress in hardware tailored specifically for AI tasks, and it could stimulate further research and investment in AI chip design.

BABILong: The new benchmark to assess LLMs for long docs

The research paper examines the limitations of current generative transformer models like GPT-4 when processing lengthy documents. It finds that both GPT-4 and RAG rely heavily on the first 25% of the input, which leaves room for improvement. To address this, the authors propose augmenting the transformer with recurrent memory.

Using a new benchmark called BABILong (Benchmark for Artificial Intelligence for Long-context evaluation), the study evaluates GPT-4, RAG, and RMT (Recurrent Memory Transformer). Results show that conventional methods are effective only for sequences up to 10^4 elements, while fine-tuning GPT-2 with recurrent memory augmentations enables it to handle tasks involving up to 10^7 elements, a thousandfold increase.
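The idea of recurrent memory can be pictured with a toy sketch: a long input is read one segment at a time, and a small memory is carried from each segment to the next. Everything below is a hypothetical illustration, not the paper's model. In particular, `process_segment` is a hand-written stand-in for a model pass, and the memory is a plain dictionary rather than anything learned.

```python
from typing import Dict, List

Memory = Dict[str, str]  # toy memory: person -> last known location


def split_into_segments(sentences: List[str], segment_size: int) -> List[List[str]]:
    """Cut a long input into fixed-size chunks."""
    return [sentences[i:i + segment_size]
            for i in range(0, len(sentences), segment_size)]


def process_segment(memory: Memory, segment: List[str]) -> Memory:
    """Stand-in for one model pass: read memory plus one segment, return new memory."""
    updated = dict(memory)
    for sentence in segment:
        words = sentence.rstrip(".").split()
        # Only sentences shaped like "<person> went to the <place>" update memory.
        if len(words) == 5 and words[1:4] == ["went", "to", "the"]:
            updated[words[0]] = words[4]
    return updated


def answer_where_is(person: str, sentences: List[str], segment_size: int = 4) -> str:
    """Read the input segment by segment, carrying memory forward between segments."""
    memory: Memory = {}
    for segment in split_into_segments(sentences, segment_size):
        memory = process_segment(memory, segment)
    return memory.get(person, "unknown")


# Two relevant facts buried in thousands of irrelevant sentences.
filler = ["The weather was mild that day."] * 1000
story = (filler + ["Mary went to the kitchen."]
         + filler + ["Mary went to the garden."] + filler)

print(answer_where_is("Mary", story))  # garden
```

The point of the sketch is that no single step ever sees more than one segment plus the memory, so the total input length can grow without the per-step workload growing with it.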

Why does it matter?

Recurrent memory gives AI researchers and enthusiasts a way to work around the context-length limitations of current LLMs and RAG systems. The BABILong benchmark should also help future studies, encouraging innovation in how models handle lengthy sequences.

Stanford’s AI model identifies sex from brain scans with 90% accuracy

Stanford medical researchers have developed an AI model that determines the sex of individuals from brain scans with over 90% accuracy. The model analyzes dynamic MRI scans and identifies specific brain networks (the default mode network, striatum, and limbic network) as critical in distinguishing male from female brains.

Why does it matter?

For years, medicine and neuroscience have debated whether sex differences in brain organization exist. This result adds strong evidence that they do, though it may not settle the debate on its own. The researchers note that understanding these differences is vital for developing targeted treatments for neuropsychiatric conditions, paving the way for a personalized medicine approach.

Knowledge Nugget: Superhuman: What can AI do in 30 minutes?

In this article, the author tries to show that AI is the new superhuman. He runs a 30-minute experiment in which he tries to do as much of a product manager's launch work as possible with the help of AI. The product is an educational game that had to be launched soon.

After running the experiment with Bing, Midjourney, and Elevenlabs, the author finds that AI makes it possible to complete market research, a positioning document, email campaigns, a website, logo design and “hero shot” graphics, social media campaigns for multiple platforms, and video scripting and creation within just 30 minutes, which he takes as proof that it is superhuman.

Why does it matter?

AI's capability to swiftly tackle tasks typically handled by humans signifies a transformative potential, marking a shift in project management approaches for businesses and industries. By harnessing AI, processes can be streamlined, decision-making accelerated, and efficiency gains achieved. This highlights the evolving role of humans in the workforce, emphasizing the necessity to adapt and leverage AI to augment capabilities and attain remarkable performance.

What Else Is Happening❗

💼 Microsoft to invest $2.1 billion for AI infrastructure expansion in Spain.

Microsoft Vice Chair and President Brad Smith announced on X that the company will expand its AI and cloud computing infrastructure in Spain with a $2.1 billion investment over the next two years. The announcement follows a $3.45 billion investment in AI infrastructure in Germany, underscoring how high a priority AI is for the tech giant. (Link)

🔄 Graphcore explores sale talks with OpenAI, Softbank, and Arm.

The British AI chipmaker and NVIDIA competitor Graphcore is struggling to raise funding from investors and is seeking a $500 million deal with potential purchasers like OpenAI, Softbank, and Arm. This move comes despite the company having raised $700 million from investors including Microsoft and Sequoia and having been valued at $2.8 billion as of late 2020. (Link)

💼 OpenAI’s Sora can craft impressive video collages

OpenAI employee Bill Peebles demonstrated that Sora, OpenAI’s new text-to-video generator, can generate multiple videos simultaneously. In a post on X, he showcased five different angles of the same video and showed how Sora stitched them together into an impressive video collage while keeping quality intact. (Link)

🚫 US FTC proposes a rule prohibiting AI impersonation

The US Federal Trade Commission (FTC) proposed a rule prohibiting AI impersonation of individuals. The rule was already in place for US government and businesses. Now it is being extended to individuals to protect their privacy and reduce technology-enabled fraud, such as the AI-generated deepfakes that have recently emerged. (Link)

📚 Meizu bids farewell to the smartphone market, shifts focus to AI

Meizu, a China-based consumer electronics brand, has decided to exit the smartphone manufacturing market after 17 years in the industry. The move comes after the company shifted its focus to AI with the ‘All-in-AI’ campaign. Meizu is working on an AI-based operating system, which will be released later this year, and a hardware terminal for all LLMs. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From ML to ChatGPT to generative AI and LLMs, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊