GPT-4V vs. Gemini Pro: Who Wins the Visual Face-Off?

Plus: DeepMind researchers introduce Mobile ALOHA, and 32 techniques to mitigate hallucination in LLMs.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 181st edition of The AI Edge newsletter. This edition brings you research examining the visual capabilities of GPT-4V and Gemini Pro.

And a huge shoutout to our amazing readers. We appreciate you 😊

In today’s edition:

🎥 OpenAI's GPT-4V and Google's Gemini Pro compete in visual capabilities

🌍 Google DeepMind researchers introduce Mobile ALOHA

💼 32 techniques to mitigate hallucination in LLMs: A systematic overview

📚 Knowledge Nugget: The Post A.I. Creative Class

Let’s go!

OpenAI's GPT-4V and Google's Gemini Pro compete in visual capabilities

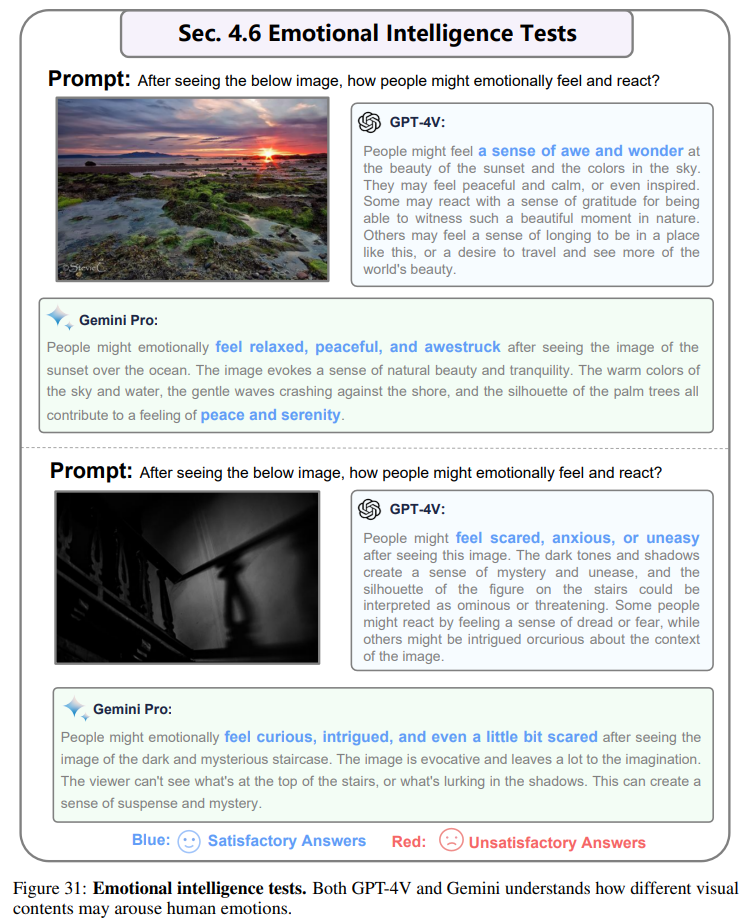

Two new papers from Tencent Youtu Lab, the University of Hong Kong, and numerous other universities and institutes comprehensively compare the visual capabilities of Gemini Pro and GPT-4V, currently the most capable multimodal large language models (MLLMs).

The two models perform on par on some tasks, with GPT-4V rated slightly more powerful overall. The models were tested in areas such as image recognition, text recognition in images, image and text understanding, object localization, and multilingual capabilities.

Why does this matter?

While both are impressive models, they have room for improvement in visual comprehension, logical reasoning, and robustness to prompt variations. The road to multimodal general-purpose AI is still a long one, the paper concludes.

Google DeepMind researchers introduce Mobile ALOHA

Student researchers at DeepMind introduce ALOHA: A Low-cost Open-source HArdware System for Bimanual Teleoperation. With 50 demos, the robot can autonomously complete complex mobile manipulation tasks:

Cook and serve shrimp

Call and take an elevator

Store a 3 lb pot in a two-door cabinet

And more.

ALOHA is open-source and built to be maximally user-friendly for researchers: it is simple, dependable, and performant. The whole system costs less than $20k, yet it is more capable than setups costing 5-10x as much.

Why does this matter?

Imitation learning from human-provided demos is a promising tool for developing generalist robots, but there are still some challenges to wider adoption. This research seeks to tackle the challenges of applying imitation learning to bimanual mobile manipulation.

32 techniques to mitigate hallucination in LLMs: A systematic overview

A new paper from Amazon AI, Stanford University, and others presents a comprehensive survey of over 32 techniques developed to mitigate hallucination in LLMs. Notable among these are Retrieval Augmented Generation, Knowledge Retrieval, CoNLI, and CoVe.

Furthermore, it introduces a detailed taxonomy categorizing these methods based on various parameters, such as dataset utilization, common tasks, feedback mechanisms, and retriever types. This classification helps distinguish the diverse approaches specifically designed to tackle hallucination issues in LLMs. It also analyzes the challenges and limitations inherent in these techniques.

Why does this matter?

Hallucinations are a critical issue as language generation is used in sensitive applications such as summarizing medical records and financial analysis reports. This paper serves as a valuable resource for researchers and practitioners seeking a comprehensive understanding of the current landscape of hallucination in LLMs and the strategies employed to address this pressing issue.

Enjoying the daily updates?

Refer your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: The Post A.I. Creative Class

As Large Language Models become more adept at reasoning like humans, who will have leverage in the new world, and how?

This article explores the concept of a "creative class" and speculates about the future role of these individuals in an economy significantly influenced by AI. The article suggests that individuals in this creative class, armed with deep domain knowledge and creative capital, may play a pivotal role in creating new jobs and shaping the future workforce. Additionally, it discusses the evolving skill sets necessary for leveraging AI, emphasizing the importance of critical thinking, domain expertise, and interdisciplinary skills.

Why does this matter?

The article offers insights into the transformative potential of AI, the evolving nature of work, and the skills required to navigate and thrive in an AI-centric world.

What Else Is Happening❗

🖥️ Intel spins out a new enterprise-focused GenAI software company.

Called Articul8 AI, the new entity builds off a proof-of-concept from an Intel collaboration with Boston Consulting Group (BCG) early last May. Using its hardware and a combination of open-source and internally sourced software, Intel created a GenAI system that can read text and images, running inside BCG’s data centers to address BCG’s security requirements.

🌐 Microsoft Edge is now an ‘AI browser,’ apparently.

Microsoft has been adding more and more AI features to its Edge browser over the past year, and now the company is branding it the “AI browser.” The naming now appears on iOS and Android versions of Edge, and if you search for Edge in Apple’s App Store you’ll likely see an ad that highlights all the AI-powered features.

📱 Samsung to announce new phones ‘powered by AI’ on January 17.

Samsung recently announced it will host a news conference in San Jose, California, on January 17, where it will unveil its newest Galaxy phones. The devices will offer an all-new mobile experience powered by AI.

🌊 Researchers map human activity at sea with more precision than before using AI.

Advances in AI and satellite imagery allowed researchers to create the clearest picture yet of human activity at sea, revealing clandestine fishing activity and a boom in offshore energy development. The research is led by Google-backed nonprofit Global Fishing Watch.

🚸 Researchers use AI to identify suicide risk factors in adolescents.

Norwegian and Danish scientists have used machine learning to identify risk factors for suicide attempts among young people. With a sensitivity and specificity of 90.1 percent, their model performed significantly better than other algorithms and is the most accurate of its kind to date.

That's all for now!

Subscribe to The AI Edge and join the impressive list of readers that includes professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other reputable organizations.

Thanks for reading, and see you tomorrow. 😊