Google’s ScreenAI Can ‘See’ Graphics Like Humans Do!

Plus: How AI ‘worms’ pose security threats in connected systems, New benchmarking method challenges LLMs’ reasoning abilities

Hello, Engineering Leaders and AI Enthusiasts!

Welcome to the 223rd edition of The AI Edge newsletter. This edition brings you details on ScreenAI, Google’s vision-language model.

And a huge shoutout to our amazing readers. We appreciate you😊

In today’s edition:

👀Google’s ScreenAI can ‘see’ graphics like humans do

🐛 How AI ‘worms’ pose security threats in connected systems

🧠 New benchmarking method challenges LLMs' reasoning abilities

📚 Knowledge Nugget: GPT-4's hidden cost: Is your language pricing you out of AI innovation? by

Let’s go!

Google’s ScreenAI can ‘see’ graphics like humans do

Google Research has introduced ScreenAI, a Vision-Language Model that can perform question-answering on digital graphical content like infographics, illustrations, and maps while also annotating, summarizing, and navigating UIs. The model combines computer vision (PaLI architecture) with text representations of images to handle these multimodal tasks.

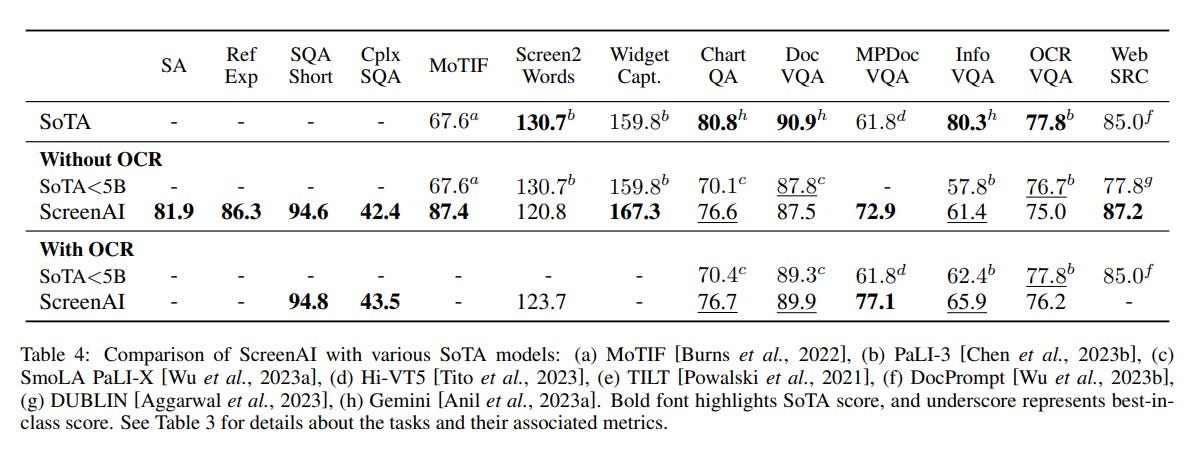

Despite having just 4.6 billion parameters, ScreenAI achieves new state-of-the-art results on UI- and infographics-based tasks and new best-in-class performance on others, compared to models of similar size.

While ScreenAI is best-in-class on some tasks, further research is needed to match models like GPT-4 and Gemini, which are significantly larger. Google Research has released a dataset with ScreenAI's unified representation and two other datasets to help the community experiment with more comprehensive benchmarking on screen-related tasks.

Why does this matter?

ScreenAI's breakthrough in unified visual and language understanding bridges the disconnect between how humans and machines interpret ideas across text, images, charts, etc. Companies can now leverage these multimodal capabilities to build assistants that summarize reports packed with graphics, analysts that generate insights from dashboard visualizations, and agents that manipulate UIs to control workflows.

How AI ‘worms’ pose security threats in connected systems

Security researchers have created an AI "worm" called Morris II to showcase vulnerabilities in AI ecosystems where different AI agents are linked together to complete tasks autonomously.

The researchers tested the worm in a simulated email system using ChatGPT, Gemini, and other popular AI tools. The worm can exploit these AI systems to steal confidential data from emails or forward spam/propaganda without human approval. It works by injecting adversarial prompts that make the AI systems behave maliciously.

While this attack was simulated, the research highlights risks if AI agents are given too much unchecked freedom to operate.

Why does it matter?

This AI "worm" attack reveals that generative models like ChatGPT have reached capabilities that require heightened security to prevent misuse. Researchers and developers must prioritize safety by baking in controls and risk monitoring before commercial release. Without industry-wide commitments to responsible AI, regulation may be needed to enforce acceptable safeguards across critical domains as systems gain more autonomy.

New benchmarking method challenges LLMs’ reasoning abilities

Researchers at Consequent AI have identified a "reasoning gap" in large language models like GPT-3.5 and GPT-4. They introduced a new benchmarking approach called "functional variants," which tests a model's ability to reason instead of just memorize. This involves translating reasoning tasks like math problems into code that can generate unique questions requiring the same logic to solve.

When evaluating several state-of-the-art models, the researchers found a significant gap between performance on known problems from benchmarks versus new problems the models had to reason through. The gap was 58-80%, indicating the models do not truly understand complex problems but likely just store training examples. The models performed better on simpler math but still demonstrated limitations in reasoning ability.

Why does this matter?

This research reveals that reasoning still eludes our most advanced AIs. We risk being misled by claims of progress made by the Big Tech if their benchmarks reward superficial tricks over actual critical thinking. Moving forward, model creators will have to prioritize generalization and logic over memorization if they want to make meaningful progress towards general intelligence.

Enjoying the daily updates?

Refer your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: GPT-4's Hidden Cost: Is Your Language Pricing You Out of AI Innovation?

In his thought-provoking piece,

argues that GPT-4's token-based pricing model puts non-English languages at a significant cost disadvantage. He shares an analysis showing that the overhead cost when processing an equivalent number of characters in non-English languages can be over 7 times higher than in English. For example, Armenian and Burmese users would pay over 700% more to process the same amount of text compared to English users.To tackle this, Urbański suggests OpenAI consider moving to a character-based pricing model for more equitable access across languages. He also advises diversifying training data to improve GPT-4's performance and lower token counts for non-English texts.

Why does this matter?

Token-based pricing in LLMs exposes a critical blindspot in AI development - equitable access. True progress in any field depends on inclusive innovation. If significant segments across the globe cannot participate in LLM development due to cost barriers, it’s not only unfair but it also limits the global advancement of AI.

What Else Is Happening❗

💊 AI may enable personalized prostate cancer treatment

Researchers used AI to analyze prostate cancer DNA and found two distinct subtypes called "evotypes." Identifying these subtypes could allow for better prediction of prognosis and personalized treatments. (Link)

🎥 Vimeo debuts AI-powered video hub for business collaboration

Vimeo has launched a new product called Vimeo Central, an AI-powered video hub to help companies improve internal video communications, collaboration, and analytics. Key capabilities include a centralized video library, AI-generated video summaries and highlights, enhanced screen recording and video editing tools, and robust analytics. (Link)

📱 Motorola revving up for AI-powered Moto X50 Ultra launch

Motorola is building hype for its upcoming Moto X50 Ultra phone with a Formula 1-themed teaser video highlighting the device's powerful AI capabilities. The phone will initially launch in China on April 21 before potentially getting a global release under the Motorola Edge branding. (Link)

📂 Copilot will soon fetch and parse your OneDrive files

Microsoft is soon to launch Copilot for OneDrive, an AI assistant that will summarize documents, extract information, answer questions, and follow commands related to files stored in OneDrive. Copilot can generate outlines, tables, and lists based on documents, as well as tailored summaries and responses. (Link)

⚡ Huawei's new AI chip threatens Nvidia's dominance in China

Huawei has developed a new AI chip, the Ascend 910B, which matches the performance of Nvidia's A100 GPU based on assessments by SemiAnalysis. The Ascend 910B is already being used by major Chinese companies like Baidu and iFlytek and could take market share from Nvidia in China due to US export restrictions on Nvidia's latest AI chips. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊