Google Presents Brain-to-Music AI

Plus: ChatGPT's custom instructions feature, Meta-Transformer to process 12 modalities.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 68th edition of The AI Edge newsletter. This edition brings you Google’s new research: Brain2Music.

A huge shoutout to our incredible readers. We appreciate you! 😊

In today’s edition:

🧠 Google presents brain-to-music AI

🤖 ChatGPT will now remember who you are & what you want

🎯 Meta-Transformer lets AI models process 12 modalities

💡 Knowledge Nugget: LLMs store data using Vector DB. Why and how?

Let’s go!

Google presents brain-to-music AI

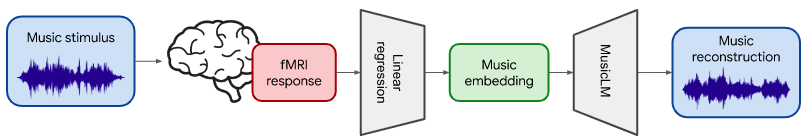

New research called Brain2Music, by Google and institutions in Japan, introduces a method for reconstructing music from brain activity captured with functional magnetic resonance imaging (fMRI). The generated music resembles the musical stimuli that human subjects heard in semantic properties such as genre, instrumentation, and mood.

The paper also explores the relationship between Google’s MusicLM text-to-music model and the brain activity observed when human subjects listen to music.

Why does this matter?

Although text-to-music models are developing rapidly, their internal processes are still poorly understood. According to the authors, this study is the first to provide a quantitative interpretation of such models from a biological perspective. An exciting next step would be to attempt to reconstruct music or musical attributes from a subject’s imagination, which would bring the work much closer to genuine mind-reading AI.

ChatGPT will now remember who you are & what you want

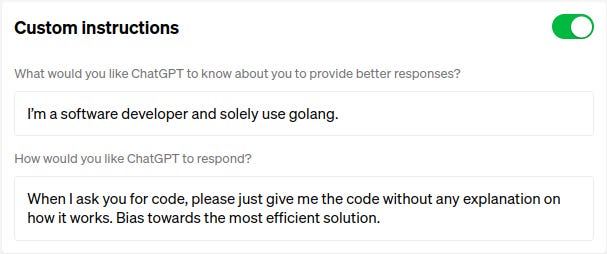

OpenAI is rolling out custom instructions to give you more control over how ChatGPT responds. It allows you to add preferences or requirements that you’d like ChatGPT to consider when generating its responses.

ChatGPT will remember and consider these instructions every time it responds, so you won’t have to repeat your preferences or information. The feature is currently in beta for Plus subscribers and will expand to all users in the coming weeks.
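OpenAI has not described how custom instructions work internally. One plausible way to get the "remember every time" behavior, sketched below purely as an assumption, is to store the preferences once and prepend them as a system-style message to every request. The `CustomInstructions` and `Conversation` classes are hypothetical names; the role/content dictionaries only mirror the general shape of chat-style message lists, and no API is called.

```python
from dataclasses import dataclass, field


@dataclass
class CustomInstructions:
    """Hypothetical container for a user's saved preferences."""
    about_user: str
    response_style: str

    def as_system_message(self) -> dict:
        # Merge both saved fields into one system-style message.
        text = (
            f"About the user: {self.about_user}\n"
            f"How to respond: {self.response_style}"
        )
        return {"role": "system", "content": text}


@dataclass
class Conversation:
    """Hypothetical chat session that carries the saved instructions."""
    instructions: CustomInstructions
    history: list = field(default_factory=list)

    def build_request(self, user_message: str) -> list:
        # Record the new user turn, then prepend the saved instructions so
        # every request includes them without the user retyping anything.
        self.history.append({"role": "user", "content": user_message})
        return [self.instructions.as_system_message(), *self.history]


prefs = CustomInstructions(
    about_user="I am a backend engineer who writes Go.",
    response_style="Be concise and show code first.",
)
chat = Conversation(prefs)
first = chat.build_request("How do I read a file?")
second = chat.build_request("And write one?")
print(first[0]["role"], len(first), len(second))
```

The design choice is simply that the preferences live outside the conversation history, so they are injected fresh on each turn instead of being repeated by the user.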

Why does this matter?

This feature sets a new standard for user interactions with AI and is a critical step in shaping the future of language models. It also emphasizes steerability, meaning the ability to control or influence a model’s behavior based on a user’s specific instructions or preferences, so that AI models can be adapted to better serve a global user base.

Meta-Transformer lets AI models process 12 modalities

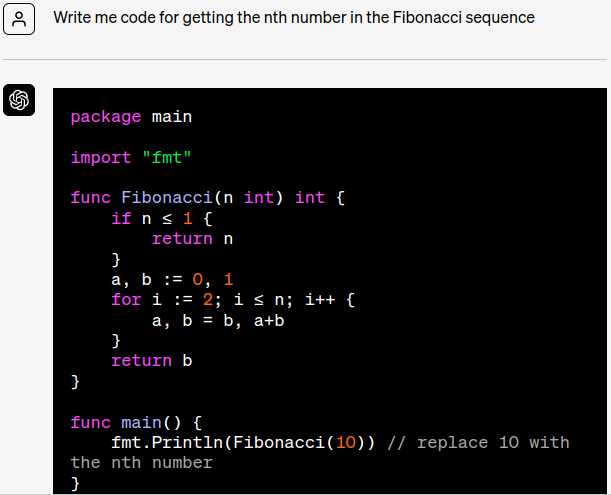

New research has proposed Meta-Transformer, a novel unified framework for multimodal learning. It is the first framework to perform unified learning across 12 modalities, and it leverages a frozen encoder to perform multimodal perception without any paired multimodal training data.

In experiments, Meta-Transformer achieves strong performance on datasets spanning all 12 modalities, which suggests further potential for unified multimodal learning.
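The newsletter only says that Meta-Transformer keeps a shared encoder frozen. As a rough, hypothetical illustration of what that implies, the toy Python sketch below routes two modalities through their own input mappings (`text_tokenizer`, `audio_tokenizer`) into one shared `FrozenEncoder`, and trains only small per-modality `Head` objects. Every name is invented for illustration: the "encoder" is a fixed random matrix rather than a pretrained transformer, and the tokenizers are hand-written functions for brevity. It is not the paper's architecture or code.

```python
import random

DIM = 4  # width of the shared token space (arbitrary, for illustration)


def matvec(weights: list[list[float]], vec: list[float]) -> list[float]:
    # Plain-Python matrix-vector product.
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


# Hypothetical modality-specific tokenizers: each maps raw input of its own
# type into a DIM-sized vector in the shared space.
def text_tokenizer(text: str) -> list[float]:
    vec = [0.0] * DIM
    for i, ch in enumerate(text):
        vec[i % DIM] += ord(ch) / 1000
    return vec


def audio_tokenizer(samples: list[float]) -> list[float]:
    # Average the waveform into DIM equal-width buckets.
    width = max(1, len(samples) // DIM)
    return [sum(samples[i * width:(i + 1) * width]) / width for i in range(DIM)]


class FrozenEncoder:
    """Shared encoder whose weights are fixed after construction."""

    def __init__(self, seed: int = 0):
        rng = random.Random(seed)
        self.weights = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]

    def __call__(self, tokens: list[float]) -> list[float]:
        return matvec(self.weights, tokens)


class Head:
    """Small trainable linear head, one per modality."""

    def __init__(self):
        self.w = [0.0] * DIM

    def predict(self, features: list[float]) -> float:
        return sum(w * f for w, f in zip(self.w, features))

    def train_step(self, features: list[float], target: float, lr: float = 0.5) -> float:
        error = self.predict(features) - target
        # Gradient of 0.5 * error**2 with respect to w is error * features;
        # only the head's weights change, never the encoder's.
        self.w = [w - lr * error * f for w, f in zip(self.w, features)]
        return error


encoder = FrozenEncoder()
tokenizers = {"text": text_tokenizer, "audio": audio_tokenizer}
heads = {"text": Head(), "audio": Head()}
snapshot = [row[:] for row in encoder.weights]

examples = [
    ("text", "hello", 1.0),
    ("audio", [0.1, 0.5, -0.2, 0.3, 0.0, 0.4, 0.2, -0.1], 0.5),
]
for _ in range(200):
    for modality, raw, target in examples:
        features = encoder(tokenizers[modality](raw))
        heads[modality].train_step(features, target)

print(encoder.weights == snapshot)  # the shared encoder was never updated
```

Because the encoder is shared and untouched, adding a new modality in this toy only means writing another tokenizer and training another small head.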

Why does this matter?

Each data modality presents unique data patterns, making it difficult to adapt AI models trained on one modality to another. This research helps address the challenges associated with unifying them into a single framework. It also shows a promising trend toward developing unified multimodal AI with a transformer backbone.

Knowledge Nugget: LLMs store data using Vector DB. Why and how?

Traditionally, computing has been deterministic, in the sense that the output strictly follows the programmed logic. LLM-based systems, however, lean on similarity search, which returns the closest matches in a vector space rather than exact results.

This short but insightful article explains how LLMs use vector databases and similarity search to work with textual data, enabling more nuanced information processing. It also walks through an example of how a sentence is transformed into a vector, and points to OpenAI's embeddings documentation and a video for further information.

Why does this matter?

The article helps readers understand the inner workings of LLMs, vector representations, and the role of similarity-based (rather than exact-match) search in advancing AI capabilities. That understanding can inform the development of more impactful AI applications.
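To make the idea of similarity search concrete, here is a minimal sketch of an in-memory "vector store", assuming plain bag-of-words counts as a stand-in for the learned embeddings (such as those from OpenAI's embedding models) that the article discusses. `ToyVectorStore` and its methods are hypothetical names; real vector databases store dense learned vectors and use approximate nearest-neighbor indexes rather than the brute-force scan shown here.

```python
import math
import re


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphabetic words only.
    return re.findall(r"[a-z]+", text.lower())


class ToyVectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self, documents: list[str]):
        self.documents = documents
        # The vocabulary of the stored documents fixes the vector layout.
        self.vocab = sorted({w for d in documents for w in tokenize(d)})
        self.index = {w: i for i, w in enumerate(self.vocab)}
        self.vectors = [self.embed(d) for d in documents]

    def embed(self, text: str) -> list[float]:
        # Bag-of-words counts; a real system would call a learned embedding model.
        vec = [0.0] * len(self.vocab)
        for w in tokenize(text):
            if w in self.index:
                vec[self.index[w]] += 1.0
        return vec

    @staticmethod
    def cosine(a: list[float], b: list[float]) -> float:
        # Cosine similarity; 0.0 when either vector is all zeros.
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def search(self, query: str, k: int = 2) -> list[tuple[str, float]]:
        # Score every stored document against the query, best first.
        q = self.embed(query)
        scored = [(doc, self.cosine(q, v)) for doc, v in zip(self.documents, self.vectors)]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


docs = [
    "The cat sat on the mat",
    "Dogs chase cats in the park",
    "Stock prices fell sharply today",
]
store = ToyVectorStore(docs)
results = store.search("where did the cat sit", k=2)
for doc, score in results:
    print(f"{score:.3f}  {doc}")
```

The query shares no exact sentence with any document, yet the cat sentence still ranks first because its vector points in the most similar direction. A learned embedding model would go further and also relate "sit" to "sat", which raw word counts cannot.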

What Else Is Happening❗

🎬 Now you can have better quality and control over text-driven video editing

🚀 GitHub’s Copilot Chat AI feature is now available in public beta

🛡️ OpenAI and other AI giants reinforce AI safety, security, and trustworthiness with voluntary commitments

👥 Google introduces its AI Red Team, the ethical hackers making AI safer

🧠 Research to merge human brain cells with AI secures national defence funding

🔧 Google DeepMind is using AI to design specialized AI chips faster

🛠️ Trending Tools

Onboard AI: Turns any GitHub repo link into a subject matter expert. Ask the AI chat questions about the repo.

Hawkflow AI: AI-powered monitoring tool that helps data scientists uncover new insights about their models and data.

Alphy: An AI assistant to search, learn from, and interact with audiovisual content, and to ask follow-up questions as you would with ChatGPT.

WordWand AI: Uses ChatGPT to give Zendesk agents tools to speed up customer support with personalized responses.

Paragrapho: Distills news into 100-word summaries using AI, providing brief yet comprehensive insights.

Deadale: Leverages AI to create varied versions of a video by altering voice and visuals for different target audiences.

Review Insights Pro: AI-powered app that analyzes customer reviews to help small businesses understand their customers.

Storyboard AI: Leverages AI to reduce the time needed to create concepts, scripts, and full storyboards for video agencies and creators.

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’, specifically designed for Engineering Leaders and AI enthusiasts.

Thanks for reading, and see you Monday. 😊