Ex-OpenAI Co-founder Launches Safe Superintelligence Inc.

Plus: Microsoft drops a vision foundation model, and research reveals an AI model that can predict anxiety levels.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 301st edition of The AI Edge newsletter. This edition features Microsoft’s new vision foundation model, which can perform diverse tasks.

And a huge shoutout to our amazing readers. We appreciate you😊

In today’s edition:

🖥️ Microsoft debuts a vision foundation model for diverse tasks

👨‍💼 Ex-OpenAI co-founder launches own AI company

🤖 Can AI read minds? New model can predict anxiety levels

🧠 Knowledge Nugget: Code Intelligence before Artificial Intelligence by

Let’s go!

Microsoft debuts a vision foundation model for diverse tasks

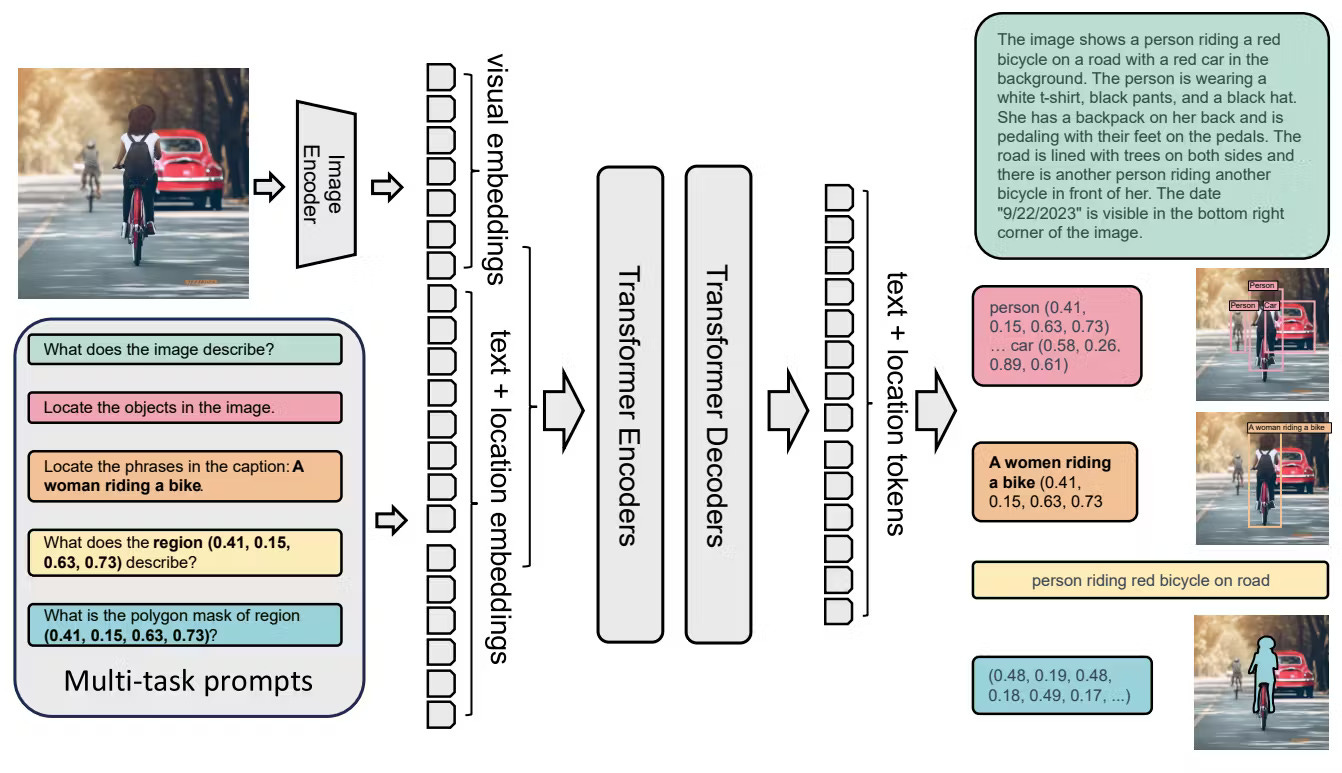

Florence-2, Microsoft’s vision AI model, handles diverse tasks driven by image and text prompts, including captioning, object detection, visual grounding, segmentation, and visual question answering, and it performs well across them.

The model comes in 232M- and 771M-parameter sizes and uses a sequence-to-sequence architecture, so it can perform multiple vision tasks without task-specific architectural changes.

When fine-tuned on publicly available human-annotated data, Florence-2 delivered impressive results, competing with much larger vision models such as Flamingo despite its compact size.

Why does it matter?

The model could give enterprises a single, standard approach to a range of vision applications, saving the resources otherwise spent fine-tuning separate task-specific models. It may also help developers, since one compact model could replace several smaller task-specific ones and significantly cut compute costs.

Ex-OpenAI co-founder launches own AI company

Just a month after leaving OpenAI, co-founder Ilya Sutskever has launched his own AI company, Safe Superintelligence Inc. (SSI), with former Y Combinator partner Daniel Gross and former OpenAI engineer Daniel Levy as co-founders.

According to SSI’s launch statement on X, the company will prioritize safety, progress, and security. Sutskever also emphasizes that the company’s “singular focus” on advancing safety and capabilities together will keep it from being distracted by management overhead or product cycles, unlike companies such as OpenAI or Google.

(Source)

Why does it matter?

SSI’s launch marks the arrival of a new key player in the race to build safe, powerful AI. Its mission statement emphasizes safety alongside the potential for groundbreaking work that may shape the future of AI research and development. It will be interesting to see whether the startup lives up to that mission in the months ahead.

Can AI read minds? New model can predict anxiety levels

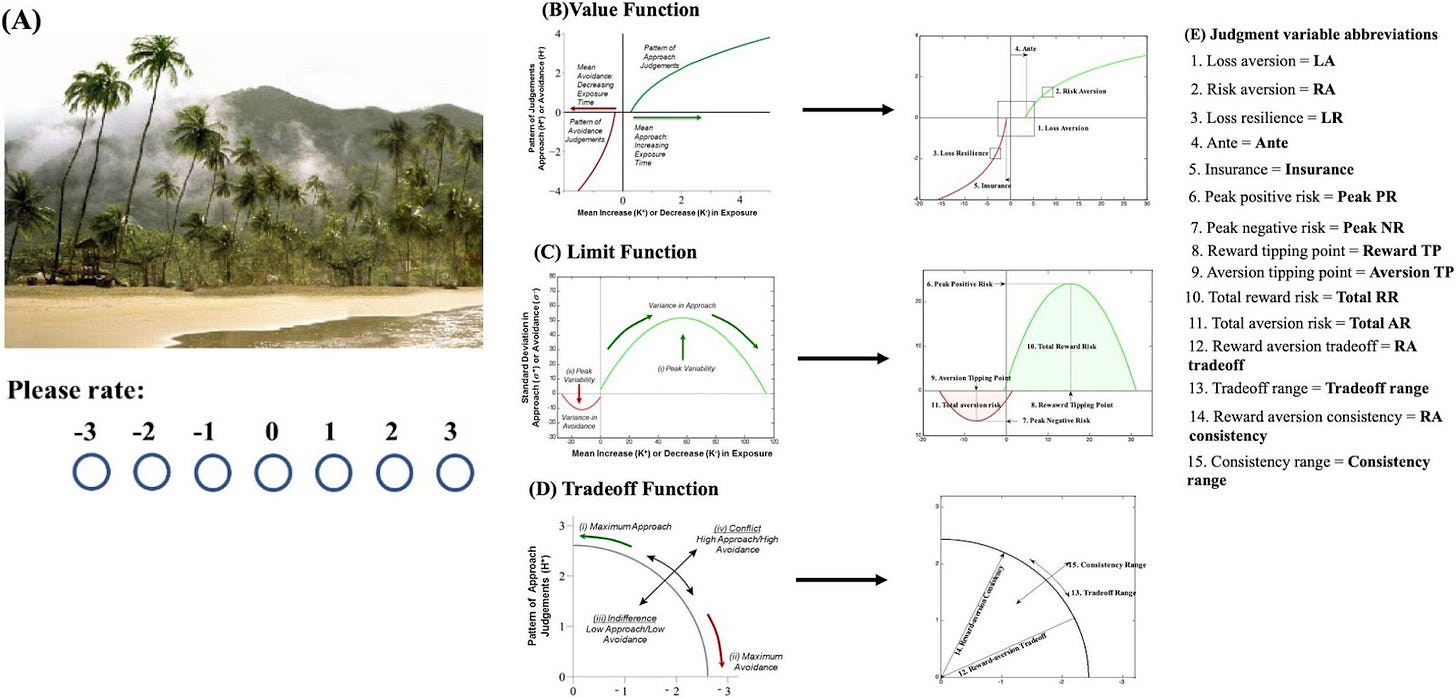

Researchers at the University of Cincinnati have developed an AI model that can identify people at urgent risk of anxiety. The model needs minimal computational resources, a short picture-rating task, and a small set of variables to make its prediction. The approach, named “Comp Cog AI,” integrates computational cognition and AI.

Participants rated 48 pictures with mildly emotional subject matter by how much they liked or disliked each one. Their responses were used to quantify mathematical features of their judgments, and ML algorithms then used these features to predict their anxiety levels.

Because the task doesn’t depend on any particular language, it can be used to assess anxiety across a wider audience and in more diverse settings.

Why does it matter?

The picture-rating task can give medical professionals relatively unbiased data on a person’s mental health without asking direct questions that may trigger negative emotions. With a reported 81% accuracy rate, the tool could become a useful app for identifying individuals at high risk of anxiety.

Enjoying the daily updates?

Refer your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: Code Intelligence before Artificial Intelligence

Whether you want to test a product feature or detect a bug, you need effective code. In this article,

discusses how generative AI tools such as code completion and chatbots help developers save coding time and become more efficient and productive. At the same time, understanding an existing codebase remains challenging. A codebase-aware approach can help developers tackle these challenges and navigate complex codebases: by supplying relevant code context to generative AI tools, they reduce manual effort and shorten the product development lifecycle.

Why does it matter?

Generative AI is now common in coding. As its use grows, new cases of AI hallucination keep emerging, which makes a more context-aware approach to AI-assisted coding necessary.

What Else Is Happening❗

🤝 Deloitte, HPE, and NVIDIA partner up! The alliance combines Deloitte’s deep industry expertise and AI capabilities with the newly released HPE NVIDIA AI Compute solutions suite. The collaboration also aims to advance industry-specific gen AI applications and help clients across sectors modernize their data strategies. (Link)

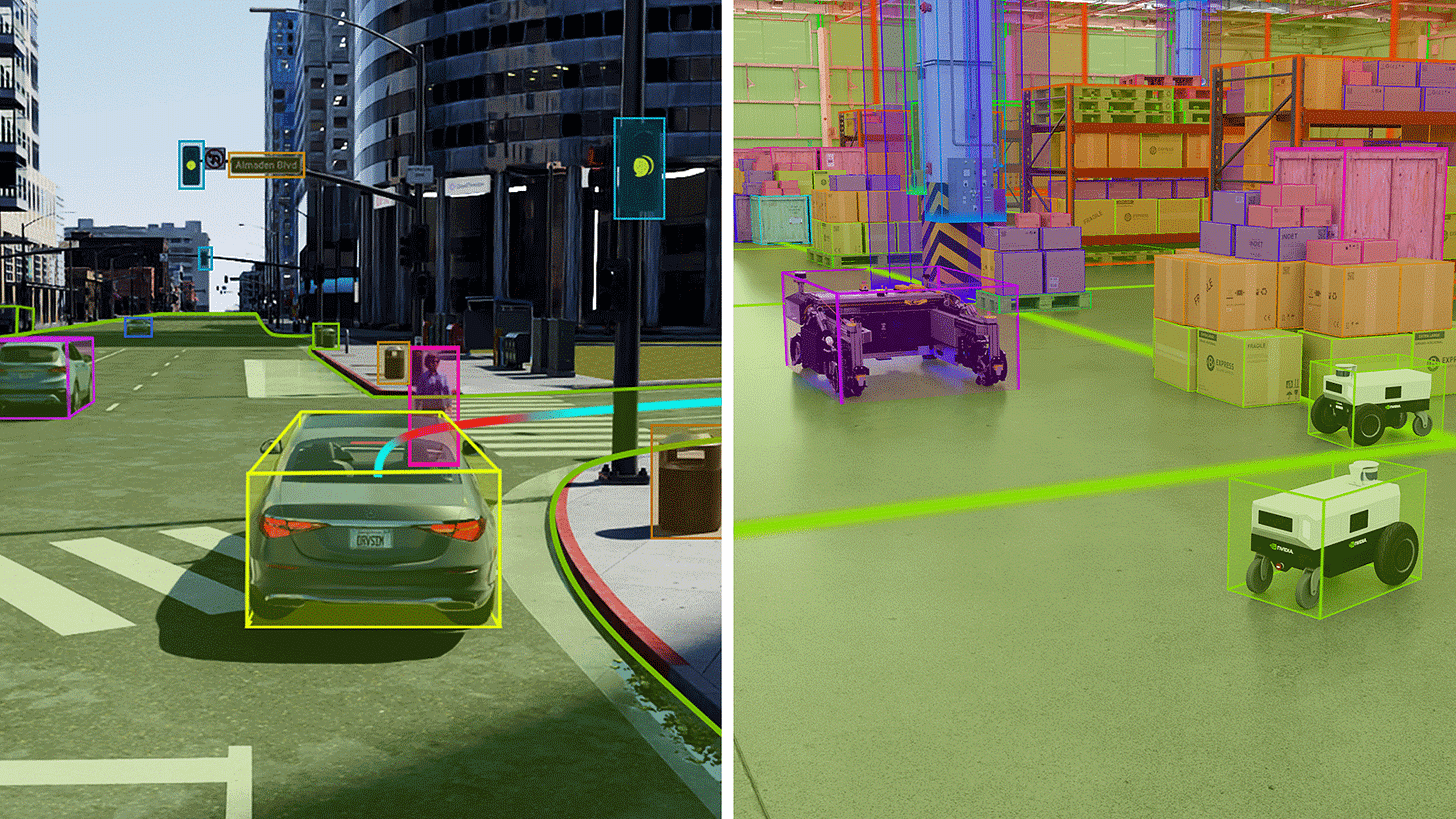

🚗 NVIDIA’s AI can turbocharge deployment of self-driving cars: NVIDIA has unveiled Omniverse Cloud Sensor RTX, new AI software that will likely accelerate the development of self-driving cars and robots.

The software combines real-world data with synthetic data, making it easy to test sensor perception in realistic virtual environments before real-world deployment. (Link)

🎶 YouTube trials AI-powered “Ask for music” feature: The feature aims to make searching for music more conversational, letting users find music with voice commands and verbal prompts. It is speculated that the feature may join the AI-generated playlist covers already available on YouTube. (Link)

🎥 Luma adds an “extend video” feature to Dream Machine: Dream Machine previously limited videos to five seconds. The new “extend video” feature lets users extend videos based on prompts, with the AI model taking the new context into account. The upgrade also lets Standard, Pro, and Premier users remove watermarks. (Link)

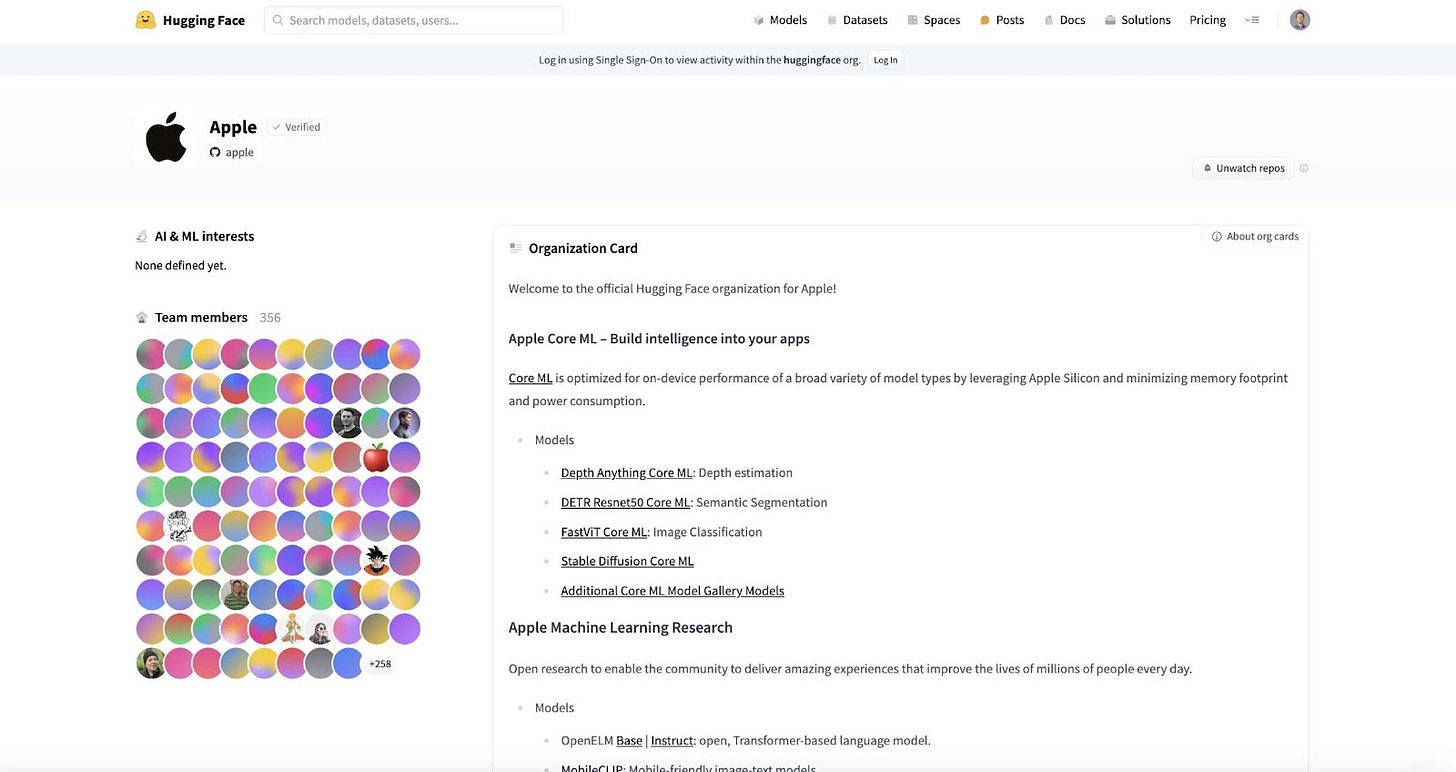

🛠️ Apple releases 20 Core ML models on Hugging Face: The release includes 20 Core ML models and 4 major datasets as part of Apple’s effort to equip developers with advanced on-device AI capabilities.

These Core ML models are optimized to run entirely on users’ devices and can be used for applications such as image classification, depth estimation, and semantic segmentation. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊