Devin: The First AI Software Engineer

Plus: Deepgram’s Aura empowers AI agents with authentic voices, Meta introduces two 24K GPU clusters to train Llama 3.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 230th edition of The AI Edge newsletter. This edition brings you “Devin: The First AI Software Engineer.”

And a huge shoutout to our incredible readers. We appreciate you😊

In today’s edition:

🤖 Devin: The first AI software engineer redefines coding

🗣️ Deepgram’s Aura empowers AI agents with authentic voices

🖥️ Meta introduces two 24K GPU clusters to train Llama 3

💡 Knowledge Nugget: 10 Prompt Engineering Hacks to get 10x Better Results

Let’s go!

Devin: The first AI software engineer redefines coding

In a groundbreaking development, US-based startup Cognition AI has unveiled Devin, the world’s first AI software engineer. It is an autonomous agent that solves engineering tasks using its own shell (command prompt), code editor, and web browser. Devin can also plan, code, debug, and deploy projects autonomously.

When evaluated on the SWE-Bench benchmark, which asks an AI to resolve GitHub issues found in real-world open-source projects, Devin correctly resolves 13.86% of the issues unassisted, far exceeding the previous state-of-the-art model performance of 1.96% unassisted and 4.80% assisted. It has successfully passed practical engineering interviews with leading AI companies and even completed real Upwork jobs.

Why does it matter?

There’s already a heated debate over whether Devin will replace software engineers. However, most production-grade software is too complex, unique, or domain-specific to be fully automated at this point. Devin could perhaps start by handling more entry-level development tasks. Beyond that, it can help developers quickly prototype, bootstrap, and autonomously launch MVPs for smaller apps and websites, at least for now.

Deepgram’s Aura empowers AI agents with authentic voices

Deepgram, a top voice recognition startup, just released Aura, its new real-time text-to-speech model. It's the first text-to-speech model built for responsive, conversational AI agents and applications. Companies can use these agents for customer service in call centers and other customer-facing roles.

Aura includes a dozen natural, human-like voices with lower latency than any comparable voice AI alternative and is already being used in production by several customers. Aura works hand in hand with Deepgram's Nova-2 speech-to-text API. Nova-2 is known for its top-notch accuracy and speed in transcribing audio streams.

Why does it matter?

Deepgram’s Aura is a one-stop shop for speech recognition and voice generation APIs that enable the fastest response times and most natural-sounding conversational flow. Its human-like voice models render extremely fast (typically in well under half a second) and at an affordable price ($0.015 per 1,000 characters). Lastly, Deepgram’s transcription is more accurate and faster than other solutions as well.
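To put the quoted price in perspective, here is a rough back-of-the-envelope sketch. It assumes billing scales linearly with character count at the $0.015 per 1,000 characters mentioned above, with no minimum charge or rounding; the function name is purely illustrative and is not part of any Deepgram SDK.

```python
# Price quoted in this edition: $0.015 per 1,000 characters.
PRICE_PER_1K_CHARS_USD = 0.015


def estimate_tts_cost(num_chars: int) -> float:
    """Estimate text-to-speech cost in USD for a given character count.

    Assumption: cost is strictly proportional to characters, with no
    minimum fee, tiering, or rounding (actual billing may differ).
    """
    if num_chars < 0:
        raise ValueError("num_chars must be non-negative")
    return num_chars / 1000 * PRICE_PER_1K_CHARS_USD


# Example: one million characters of agent replies.
monthly_chars = 1_000_000
print(f"Estimated cost: ${estimate_tts_cost(monthly_chars):.2f}")
```

Under these assumptions, a million characters of spoken replies would come to roughly $15.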

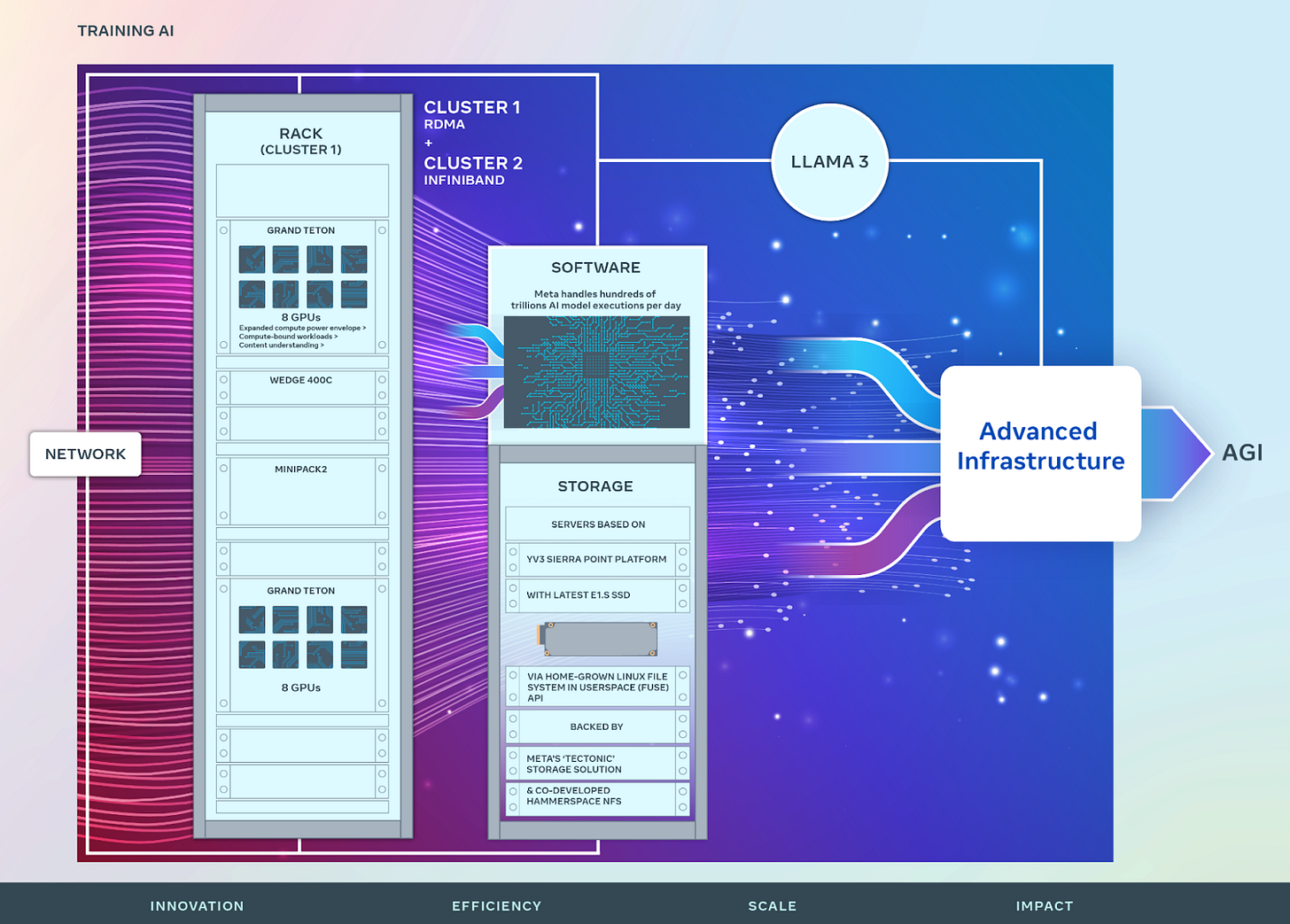

Meta introduces two 24K GPU clusters to train Llama 3

Meta has invested significantly in its AI infrastructure by introducing two 24K GPU clusters. These clusters, built on top of Grand Teton, OpenRack, and PyTorch, are designed to support various AI workloads, including the training of Llama 3.

Meta aims to expand its infrastructure build-out by the end of 2024. It plans to include 350,000 NVIDIA H100 GPUs, providing compute power equivalent to nearly 600,000 H100s. The clusters are built with a focus on researcher and developer experience.

This ties into Meta’s long-term vision of building open and responsibly developed artificial general intelligence (AGI). These clusters enable the development of advanced AI models and power applications such as computer vision, NLP, speech recognition, and image generation.

Why does it matter?

Meta is committed to open compute and open source, driving innovation in the AI software and hardware industry. Introducing two new GPU clusters to train Llama 3 furthers that commitment. As a founding member of Open Hardware Innovation (OHI) and the Open Innovation AI Research Community, Meta wants to make AI transparent and trustworthy.

Enjoying the daily updates?

Refer your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: 10 Prompt Engineering Hacks to get 10x Better Results

In today’s world, when tackling real-world challenges or crafting content pieces, there’s a need for creativity and innovation. However, according to research by the Wharton School, when you use AI assistants to brainstorm and search for new ideas, they sometimes provide very generic or repetitive solutions. The ideas may be high-quality, but sometimes, they lack diversity and limit the novelty factor.

That’s where the author argues that we should use Chain-of-Thought (CoT) prompting. This approach involves breaking down the brainstorming task into micro-tasks. It makes AI’s ideas as diverse as those from human groups and leads to more unique ideas. The author also presents 10 practical strategies, or prompt engineering hacks, that make your LLM prompts work 10x better. They help ensure that your prompt yields the most creative, diverse, and valuable responses.

Be specific in your request

Include context or background

Ask for examples or explanation

Use open-ended questions for broader insights

Request step-by-step instructions

Specify the desired format of your answer

Incorporate keywords for clarity

Limit your scope

Clarify the purpose

Incorporate a creative angle
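As a rough illustration of how several of these hacks can be combined in a single prompt, here is a minimal Python sketch. The `PromptSpec` class and `build_prompt` function are hypothetical names made up for this example; they are not from the author's article or from any library, and the wording of each prompt line is just one possible phrasing.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container mapping fields to the hacks listed above."""
    task: str                    # be specific in your request
    context: str = ""            # include context or background
    purpose: str = ""            # clarify the purpose
    scope: str = ""              # limit your scope
    output_format: str = ""      # specify the desired format
    creative_angle: str = ""     # incorporate a creative angle
    keywords: list = field(default_factory=list)  # keywords for clarity
    want_examples: bool = False  # ask for examples or explanation
    step_by_step: bool = False   # request step-by-step instructions


def build_prompt(spec: PromptSpec) -> str:
    """Assemble a plain-text prompt, skipping any hack left unset."""
    parts = [f"Task: {spec.task}"]
    if spec.context:
        parts.append(f"Context: {spec.context}")
    if spec.purpose:
        parts.append(f"Purpose: {spec.purpose}")
    if spec.scope:
        parts.append(f"Scope: {spec.scope}")
    if spec.keywords:
        parts.append("Keywords: " + ", ".join(spec.keywords))
    if spec.creative_angle:
        parts.append(f"Creative angle: {spec.creative_angle}")
    if spec.want_examples:
        parts.append("Include a concrete example with each idea.")
    if spec.step_by_step:
        parts.append("Work through the task step by step.")
    if spec.output_format:
        parts.append(f"Format: {spec.output_format}")
    return "\n".join(parts)


if __name__ == "__main__":
    spec = PromptSpec(
        task="Suggest five product ideas for college students under $50",
        context="We are a small team with no hardware experience",
        keywords=["dorm life", "budget"],
        step_by_step=True,
        output_format="Numbered list, one sentence per idea",
    )
    print(build_prompt(spec))
```

The resulting string can be pasted into any chat assistant. Keeping each hack as a separate field makes it easy to switch one on or off and compare how the responses change.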

Why does it matter?

Applying research-driven strategies for prompt engineering can significantly enhance the effectiveness of your interactions with AI assistants such as ChatGPT, Claude, and open-source LLMs. It can help you turn them into more powerful tools for generating ideas, solving problems, and gaining knowledge.

What Else Is Happening❗

🎮 Google Play to display AI-powered FAQs and recent YouTube videos for games

At the Google for Games Developer Summit held in San Francisco, Google announced several new features for ‘Google Play listing for games’. These include AI-powered FAQs, displaying the latest YouTube videos, new immersive ad formats, and support for native PC game publishing. These new features will allow developers to display promotions and the latest YouTube videos directly in their listing and show them to users in the Games tab of the Play Store. (Link)

🛡️ DoorDash’s new AI-powered tool automatically curbs verbal abuse

DoorDash has introduced a new AI-powered tool named ‘SafeChat+’ to review in-app conversations and determine if a customer or Dasher is being harassed. There will be an option to report the incident and either contact DoorDash’s support team if you’re a customer or quickly cancel the order if you’re a delivery person. With this feature, DoorDash aims to reduce verbally abusive and inappropriate interactions between consumers and delivery people. (Link)

🔍 Perplexity has decided to bring Yelp data to its chatbot

Perplexity CEO Aravind Srinivas told the media that many people use chatbots like search engines. He added that it makes sense to offer information on things they look for, like restaurants, directly from the source. That’s why the company has decided to integrate Yelp’s maps, reviews, and other details into responses when people ask for restaurant or cafe recommendations. (Link)

👗 Pinterest’s ‘body type ranges’ tool delivers more inclusive search results

Pinterest has introduced a new tool named body type ranges, which lets users self-select from four body type ranges using visual cues. The selection is used to deliver more personalized and refined search results for women’s fashion and wedding inspiration. This tool aims to create a more inclusive place online to search, save, and shop. The company also plans to launch a similar feature for men’s fashion later this year. (Link)

🚀 OpenAI’s GPT-4.5 Turbo is all set to be launched in June 2024

According to leaked search engine results from Bing and DuckDuckGo, which indexed the OpenAI GPT-4.5 Turbo product page before an official announcement, OpenAI is all set to launch the new version of its LLM by June 2024. There is discussion in the AI community that this could be OpenAI’s fastest, most accurate, and most scalable model to date. The details of GPT-4.5 Turbo appear to have been leaked by OpenAI’s web team; the indexed page now leads to a 404. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From ML to ChatGPT to generative AI and LLMs, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊
