Anthropic’s Claude Instant 1.2: Faster and Safer LLM

Plus: Google explores if LLMs generalize or memorize, IBM to host Llama 2 on watsonx.ai.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 82nd edition of The AI Edge newsletter. This edition brings you Anthropic’s latest version of Claude Instant.

And a huge shoutout to our incredible readers. We appreciate you!😊

In today’s edition:

🛡️ Anthropic’s Claude Instant 1.2: Faster and safer LLM

🤔Google attempts to answer if LLMs generalize or memorize

🔮 IBM plans to make Meta’s Llama 2 available on watsonx.ai

📚 Knowledge Nugget: What "GPT" actually means

Let’s go!

Anthropic’s Claude Instant 1.2: Faster and safer LLM

Anthropic has released an updated version of Claude Instant, its faster, lower-priced, yet very capable model, which can handle a range of tasks including casual dialogue, text analysis, summarization, and document comprehension.

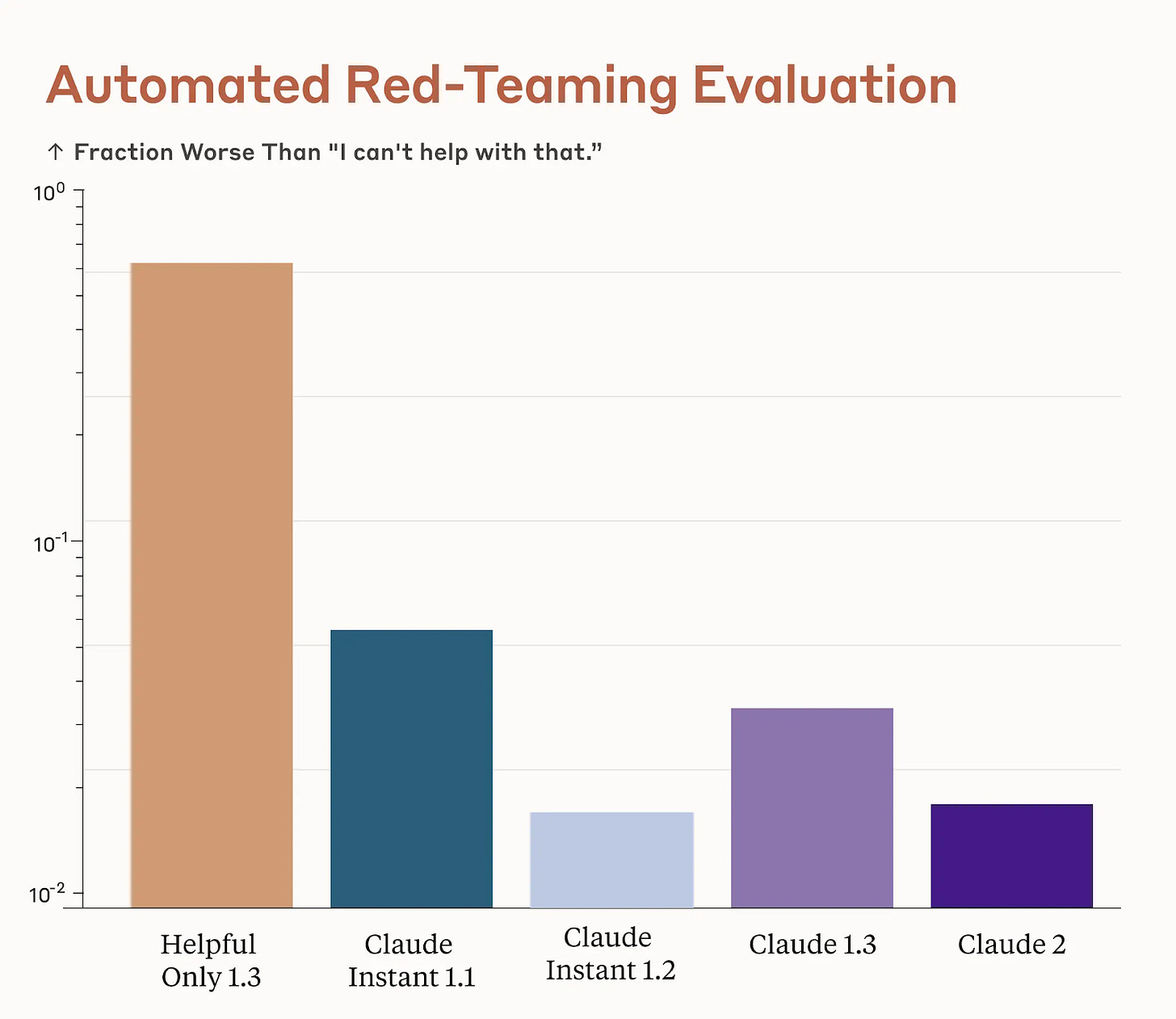

Claude Instant 1.2 incorporates the strengths of Claude 2 in real-world use cases and shows significant gains in key areas like math, coding, and reasoning. It generates longer, more structured responses and follows formatting instructions better. It is also safer: it hallucinates less and is more resistant to jailbreaks.

Why does this matter?

Claude Instant 1.2 appears to be Anthropic’s safest AI model so far. It remains an entry-level model, intended to compete with similar offerings from OpenAI as well as startups such as Cohere. But with enhanced safety, stronger skills, and the same context length as Claude 2 (100K tokens), it may bring Anthropic a step closer to challenging ChatGPT's supremacy.

Google attempts to answer if LLMs generalize or memorize

LLMs can certainly seem like they have a rich understanding of the world, but they might just be regurgitating memorized bits of the enormous amount of text they’ve been trained on. How can we tell if they’re generalizing or memorizing?

In this research, Google examines the training dynamics of a tiny model and reverse engineers the solution it finds – and in the process provides an illustration of the exciting emerging field of mechanistic interpretability. In these small models, training first memorizes the data and only later, after much longer training, switches to generalizing (a phenomenon known as grokking). Whether the same dynamics hold for LLMs remains an open question.

Why does this matter?

While there is no definitive conclusion from the research, it highlights the somewhat mysterious behavior of deep learning models, especially around the balance between memorization and generalization. It is also one step closer to understanding the exact dynamics of when and why certain models transition between these (and possibly back again).

IBM plans to make Meta’s Llama 2 available on watsonx.ai

IBM will host the Llama 2-chat 70B model in the watsonx.ai studio, with early access available to select clients and partners. This will build on IBM's collaboration with Meta on open innovation for AI, including work with open-source projects developed by Meta. It will also support IBM's strategy of offering both third-party and its own AI models.

Why does this matter?

Currently, AI builders can leverage models from IBM and the Hugging Face community. The future availability of Llama 2 in watsonx.ai will enable developers and researchers to test, share feedback, and collaborate on AI technologies from both companies to drive further innovation.

Knowledge Nugget: What "GPT" actually means

Is the current state of AI a floor or a ceiling, and what types of use cases can we expect over the next 5-10 years?

This intriguing article provides a concise overview of the evolution of AI, highlighting key concepts, advancements, and considerations for its future development and application. It also draws an interesting analogy between AI's progress and the iPhone's early days, emphasizing the ongoing exploration of innovative use cases.

Why does this matter?

The article provides an understanding of where AI is going by explaining how we got to the current point in AI. It also gives a highly simplified view that is helpful in understanding the innovation that led to today’s state of AI.

What Else Is Happening❗

🛍️Amazon is testing a tool that uses AI to help sellers write descriptions for listings

🏛️White House launches AI-based contest to secure government systems from hacks

📜OpenAI is making custom instructions available to ChatGPT users on the free plan

🤝Microsoft partners with Aptos blockchain to marry AI and web3

🎨Google’s redesigned Arts & Culture app includes an AI-generated postcards feature, Play tab, and more

🛠️ Trending Tools

Gitya: AI-powered GitHub assistant for bug fixes and minor requests. Focus on high-impact engineering.

AI Reviews: AI-powered assistance to obtain feedback from clients, friends, and colleagues effortlessly.

MNDXT: AI companion for chats, texts, and images. Advanced AI-chat, captivating content, tailor-made images.

Logo Diffusion AI: Craft a distinctive logo effortlessly. AI generates shapes tailored to your brand’s essence.

AvidX: Break through the intermediate English plateau with an AI-powered language practice app.

Raay: Streamline form and survey creation with AI. Interactive, insightful summaries and seamless integrations.

SofaBrain: Powerful AI Interior Design Tool. Explore 20+ interior and exterior styles in stunning color palettes.

InterviewSage: Level up your interview game with a self-hostable AI chatbot. Tailored questions and feedback.

📈 Thursday Trajectory

In the spotlight today: Tenstorrent

The AI chip maker raised a jaw-dropping $100M in a strategic financing up-round led by Hyundai and Samsung, with participation from Fidelity Ventures, Eclipse Ventures, Epiq Capital, Maverick Capital, and more.

Tenstorrent makes its own AI chips and sells its intellectual property and other technology to help customers build their own AI chips. It is also working on other uses for AI chips, such as in smart TVs.

The funding will be used to accelerate product development, the design and development of AI chiplets, and its ML software roadmap.

The Canadian startup is led by former Tesla employee Jim Keller and is currently valued at around $1 billion.

Maybe it can rival Nvidia's dominance in supplying chips for AI products?

That's all for now!

Join the prestigious readership of The AI Edge, alongside professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other top organizations.

Thanks for reading, and see you tomorrow. 😊