Anthropic’s Claude 3 Beats OpenAI’s GPT-4

Plus: TripsoSR: 3D object generation from a single image in <1s, Cloudflare's Firewall for AI protects LLMs from abuses.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 224th edition of The AI Edge newsletter. This edition brings you “Anthropic’s Claude 3 Beats OpenAI’s GPT-4.”

And a huge shoutout to our incredible readers. We appreciate you😊

In today’s edition:

🏆Anthropic’s Claude 3 Beats OpenAI’s GPT-4

🖼️ TripsoSR: 3D object generation from a single image in <1s

🔒 Cloudflare's Firewall for AI protects LLMs from abuses

💡 Knowledge Nugget: Decoder-only transformers: The workhorse of generative LLMs by

Let’s go!

Anthropic’s Claude 3 beats OpenAI’s GPT-4

Anthropic has launched Claude 3, a new family of models that has set new industry benchmarks across a wide range of cognitive tasks. The family comprises three state-of-the-art models in ascending order of cognitive ability: Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus. Each model provides an increasing level of performance, and you can choose the one according to your intelligence, speed, and cost requirements.

Opus and Sonnet are now available via claude.ai and the Claude API in 159 countries, and Haiku will join that list soon.

Claude 3 has set a new standard of intelligence among its peers on most of the common evaluation benchmarks for AI systems, including undergraduate-level expert knowledge (MMLU), graduate-level expert reasoning (GPQA), basic mathematics (GSM8K), and more.

In addition, Claude 3 displays solid visual processing capabilities and can process a wide range of visual formats, including photos, charts, graphs, and technical diagrams. Lastly, compared to Claude 2.1, Claude 3 exhibits 2x accuracy and precision for responses and correct answers.

Why does it matter?

In 2024, Gemini and ChatGPT caught the spotlight, but now Claude 3 has emerged as the leader in AI benchmarks. While benchmarks matter, only the practical usefulness of Claude 3 will tell if it is truly superior. This might also prompt OpenAI to release a new ChatGPT upgrade. However, with AI models becoming more common and diverse, it's unlikely that one single model will emerge as the ultimate winner.

TripsoSR: 3D object generation from a single image in <1s

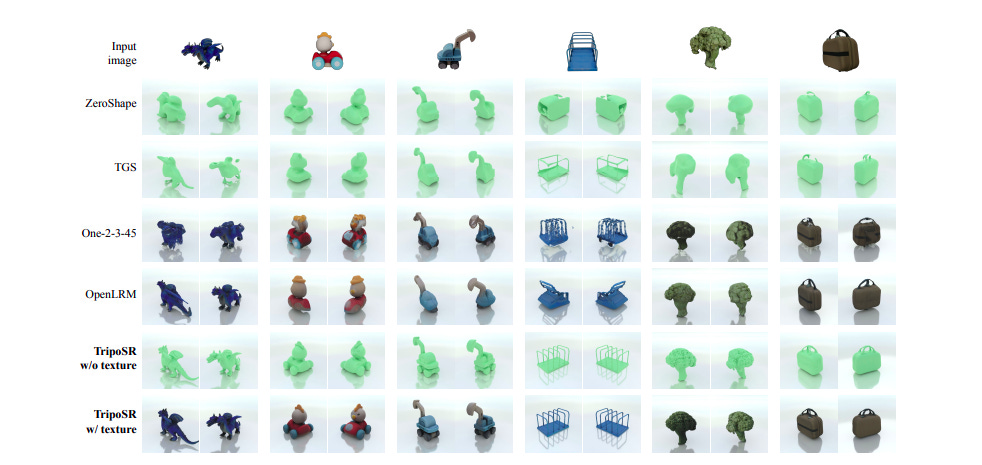

Stability AI has introduced a new AI model named TripsoSR in partnership with Trip AI. The model enables high-quality 3D object generation or rest from a single in less than a second. It runs under low inference budgets (even without a GPU) and is accessible to many users.

As far as performance, TripoSR can create detailed 3D models in a fraction of the time of other models. When tested on an Nvidia A100, it generates draft-quality 3D outputs (textured meshes) in around 0.5 seconds, outperforming other open image-to-3D models such as OpenLRM.

Why does it matter?

TripoSR caters to the growing demands of various industries, including entertainment, gaming, industrial design, and architecture. The availability of the model weights and source code for download further promotes commercialized, personal, and research use, making it a valuable asset for developers, designers, and creators.

Cloudflare's Firewall for AI protects LLMs from abuses

Cloudflare has released a Firewall for AI, a protection layer that you can deploy in front of Large Language Models (LLMs) to identify abuses before they reach the models. While the traditional web and API vulnerabilities also apply to the LLM world, Firewall for AI is an advanced-level Web Application Firewall (WAF) designed explicitly for LLM protection and placed in front of applications to detect vulnerabilities and provide visibility to model owners.

Cloudflare Firewall for AI is deployed like a traditional WAF, where every API request with an LLM prompt is scanned for patterns and signatures of possible attacks. You can deploy it in front of models hosted on the Cloudflare Workers AI platform or any other third-party infrastructure. You can use it alongside Cloudflare AI Gateway and control/set up a Firewall for AI using the WAF control plane.

Why does it matter?

As the use of LLMs becomes more widespread, there is an increased risk of vulnerabilities and attacks that malicious actors can exploit. Cloudflare is one of the first security providers to launch tools to secure AI applications. Using a Firewall for AI, you can control what prompts and requests reach their language models, reducing the risk of abuses and data exfiltration. It also aims to provide early detection and protection for both users and LLM models, enhancing the security of AI applications.

Enjoying the daily updates?

Refer your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: Decoder-only transformers: The workhorse of generative LLMs

Today, impactful research is constantly being released in the domain of LLMs, including anything from new foundation models to exotic topics like model merging. Despite these rapid advancements, however, one component of LLMs has remained constant– the decoder-only transformer architecture.

Shockingly, the architecture used by most modern LLMs is nearly identical to that of the original GPT model. We make the model much larger, modify it slightly, and use a more extensive training (and alignment) process. Thus, the decoder-only transformer architecture is one of the most fundamental ideas in AI research.

This article by

comprehensively explains this architecture, implementing its components from scratch, and explores how it has evolved in recent research. It mainly discusses:The Self-Attention Operation in LLMs

The Decoder-Only Transformer Block

The full decoder-only transformer architecture

Why does it matter?

The decoder-only transformer architecture is the backbone of most modern LLMs. So, understanding this architecture and its components will enable researchers to delve deeper into the nuances of language generation and push the boundaries of AI-driven natural language processing.

What Else Is Happening❗

🤖 ChatGPT can now read your responses out loud

ChatGPT has introduced a feature named ‘Read Aloud’ through which it can now read your responses. The feature has been rolled out to both web and mobile versions. On iOS or Android, users can tap and hold the message and then tap ‘Read Aloud;’ On the web, users can click the ‘Read Aloud’ button below the message. The feature is available on GPT-3.5 and GPT-4, providing support for over 37 languages. (Link)

💻 Wix’s new AI chatbot can build websites in a flash

Wix has introduced an AI website builder to help you build a website, images, and more using only prompts. To create a site, users must click the “Create with AI” button and answer a few of the chatbot’s questions, like the site name, what it’s about, and their goals. Users can create a website for free but will have to opt for premium plans if they want to accept payments or do not want to be limited to using a Wix domain name. (Link)

🛒 Amazon adds Claude 3 models to Bedrock

Anthropic launched a new LLM named Claude 3, the most powerful LLM to date, surpassing GPT-4. The major investor and partner of Anthropic, Amazon, has announced that it is adding the Claude 3 model to Bedrock, the AWS platform for building and running AI services in the cloud. Users of Amazon Bedrock can now have access to the middle-tier model, Claude 3 Sonnet, starting today, with Opus and Haiku “coming soon.” (Link)

🫀 AI tool detects kidney failure 6x faster compared to human experts

Kidney doctors at Sheffield Teaching Hospitals are using AI to detect kidney failure in their patients. Professor Albert Ong, consultant nephrologist and clinical lead for genetics at Sheffield Teaching Hospitals, said the AI tool is six times faster than manual processes, provides super-fast analysis of future kidney lifespan, and could be used by kidney clinics worldwide. (Link)

🚀 Groq launches GroqCloud, a developer playground to access Groq LPU

Groq has launched GroqCloud, a developer playground, to provide developer access to Groq's LPU Inference Engine, which has gained attention for its fast and efficient performance in running AI models. The product provides integrated documentation, code samples, and self-serve access, aiming to make AI development more accessible and affordable. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From ML to ChatGPT to generative AI and LLMs, We break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊