Amazon & Google Make Big Bets on Robots 💰

Amazon's 2 new robots, DeepMind's open-source robotics tool, and a new Google AI feature that lets you practice speaking.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 130th edition of The AI Edge newsletter. In this edition, tech titans Amazon and Google enter the robotics race.

And a huge shoutout to our incredible readers. We appreciate you 😊

In today’s edition:

🤖 Amazon’s 2 new-gen robots

🔧 Google DeepMind’s open-source tool for robotics

🎙️ Google AI’s new feature will let you practice speaking

📚 Knowledge Nugget: What is multi-modal AI? And why is the internet losing their mind about it? by Devansh.

Let’s go!

Amazon’s 2 new-gen robots

Amazon has announced two new robotic solutions, Sequoia and Digit, to assist employees and improve delivery for customers. Sequoia, operating at a fulfillment center in Houston, Texas, helps store and manage inventory up to 75% faster, allowing for quicker listing of items on Amazon.com and faster order processing. It integrates multiple robot systems to containerize inventory and features an ergonomic workstation to reduce the risk of injuries.

Sparrow, a robotic arm, consolidates inventory in totes. Amazon is also testing mobile manipulator solutions and the bipedal robot Digit to further enhance collaboration between robots and employees.

Why does this matter?

The new robots are intended to improve efficiency and reduce the risk of employee injuries, and they signal Amazon's continued commitment to robotics innovation.

Google DeepMind’s updated open-source tool for robotics

Google DeepMind has released MuJoCo 3.0, an updated version of its open-source tool for robotics research. The new release offers improved simulation capabilities, including better representation of various objects such as clothes, screws, gears, and donuts.

Additionally, MuJoCo 3.0 now supports GPU and TPU acceleration through JAX, enabling faster and more powerful computations.

Why does this matter?

Google DeepMind aims to enhance the capabilities of researchers working in the field of robotics and contribute to the development of more advanced and diverse robotic systems. Researchers can explore complex robotic tasks with enhanced precision, pushing the boundaries of what robots can achieve.

Google AI’s new feature will let you practice speaking

Google Search is introducing a new feature that allows English learners to practice speaking and improve their language skills. Android users in select countries can engage in interactive speaking practice sessions, receiving personalized feedback and daily reminders to keep practicing.

The feature is designed to supplement existing learning tools and was created in collaboration with linguists, teachers, and language experts. It includes contextual translation, personalized real-time feedback, and semantic analysis to help learners communicate effectively. The technology behind the feature, including a deep learning model called Deep Aligner, has led to significant improvements in alignment quality and translation accuracy.

Why does this matter?

Google Search's new feature makes English speaking practice more widely accessible. It offers practical exercises developed with language experts and uses deep learning models to give feedback geared toward real-world communication.

Enjoying the daily updates?

Refer your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: What is multi-modal AI? And why is the internet losing their mind about it?

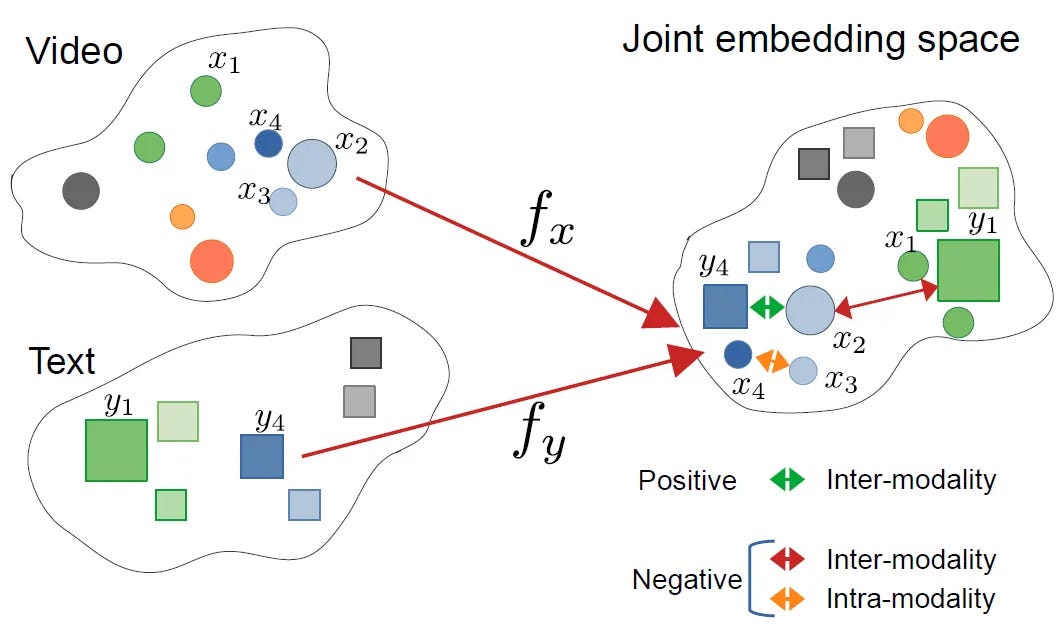

In this article, Devansh discusses the hype around multi-modal AI on the internet. So let’s see what it actually is! Multi-modal AI refers to AI that integrates multiple types of data, such as language, sound, and tabular data, in the same training process.

This allows the model to sample from a larger search space, increasing its capabilities. While multi-modality is a powerful development, it doesn't address the fundamental issues with AI models like GPT, such as unreliability and fragility.

However, multi-modal embeddings, which create vector representations of data, hold more utility in developing better models. Overall, integrating multi-modal capabilities into AI models can be beneficial, but it's important not to overlook the fundamentals.
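To make the idea of multi-modal embeddings more concrete, here is a minimal sketch in Python. It assumes that text and images have already been mapped into the same vector space. The three-dimensional vectors, the file names, and the `best_caption` helper are all hypothetical and hand-written for illustration; a real system would get its vectors from a trained encoder, which is not shown here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, hand-written for illustration only.
# A real multi-modal encoder would produce these from raw text and images.
text_embeddings = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a car": [0.0, 0.2, 0.9],
}
image_embeddings = {
    "dog.png": [0.8, 0.2, 0.1],
    "car.png": [0.1, 0.1, 0.95],
}


def best_caption(image_name):
    """Return the caption whose vector is closest to the image's vector."""
    image_vec = image_embeddings[image_name]
    return max(
        text_embeddings,
        key=lambda caption: cosine_similarity(text_embeddings[caption], image_vec),
    )


for name in image_embeddings:
    print(name, "->", best_caption(name))
```

Because both kinds of data live in one vector space, comparing an image with a sentence comes down to comparing two lists of numbers, which is part of why such embeddings are useful as building blocks.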

Why does this matter?

Multi-modal AI integrates various data types in AI training to broaden capabilities, but it doesn't solve fundamental issues like unreliability and fragility in models like GPT. Multi-modal embeddings offer utility for improving models, making multi-modality beneficial, but it's crucial not to ignore the core problems.

What Else Is Happening❗

🕐 OpenAI’s DALL·E 3 is now available in ChatGPT Plus and Enterprise

Users can now describe their vision in a conversation with ChatGPT, and the model will generate a selection of visuals for them to refine and iterate upon. DALL·E 3 is capable of generating visually striking and detailed images, including text, hands, and faces. It responds well to extensive prompts and supports landscape and portrait aspect ratios. (Link)

📍 Instagram co-founders’ Artifact app enables users to explore recommended places

Users can now share their favorite restaurants, bars, shops, and other locations with friends through the app. The app also recently added generative AI tools to incorporate images into posts, making them more visually appealing to users. (Link)

🤝 Amazon teams up with Israeli startup UVeye to automate AI inspections of its delivery vehicles

The partnership will involve installing UVeye's automated, AI-powered vehicle scanning system in hundreds of Amazon warehouses in the U.S., Canada, Germany, and the U.K. This technology will help ensure the safety and efficiency of Amazon's delivery fleet, which currently consists of over 100,000 vehicles. (Link)

🤖 Walmart announced its Responsible AI Pledge

The pledge aims to set the standard for ethical AI by focusing on transparency, security, privacy, fairness, accountability, and customer-centricity. The company believes AI is integral to its operations, from personalizing customer experiences to managing the supply chain. (Link)

📈 Jasper launches a new AI copilot that aims to improve marketing outcomes

The copilot offers features such as performance analytics, a company intelligence hub, and campaign tools. These features will be rolled out in beta in November, with more capabilities planned for Q1 2024. (Link)

That's all for now!

If you are new to The AI Edge newsletter, subscribe to get daily AI updates and news sent directly to your inbox for free!

Thanks for reading, and see you tomorrow. 😊