Amazon Debuts AI Image Generator, Rivals Stable Diffusion, DALL·E, Adobe

Plus: AWS re:Invent updates, Perplexity's new online models, DeepMind's GNoME.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 158th edition of The AI Edge newsletter. This edition brings you Amazon’s AI image generator and major new updates from re:Invent.

And a huge shoutout to our amazing readers. We appreciate you😊

In today’s edition:

🚀 Amazon’s AI image generator, and other announcements from AWS re:Invent

💡 Perplexity introduces PPLX online LLMs

💎 DeepMind’s AI tool finds 2.2M new crystals to advance technology

📚 Knowledge Nugget: Practical Tips for Finetuning LLMs Using LoRA by Sebastian Raschka

Let’s go!

Amazon’s AI image generator, and other announcements from AWS re:Invent (Nov 29)

Titan Image Generator: Titan isn’t a standalone app or website but a tool that developers can build on to make their own image generators powered by the model. To use it, developers will need access to Amazon Bedrock. It’s aimed squarely at an enterprise audience, rather than the more consumer-oriented focus of well-known existing image generators like OpenAI’s DALL-E. (Source)
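Because Titan is exposed only through Amazon Bedrock, a developer's first call might look roughly like the Python sketch below. It assumes boto3's `bedrock-runtime` client, the model ID `amazon.titan-image-generator-v1`, and the `TEXT_IMAGE` request schema AWS documented at launch; verify all three against the current Bedrock documentation before relying on them. The helper names `build_titan_image_request` and `generate_image`, and their default sizes and settings, are hypothetical.

```python
import base64
import json


def build_titan_image_request(prompt: str, width: int = 512, height: int = 512,
                              num_images: int = 1, cfg_scale: float = 8.0) -> str:
    """Build the JSON body for a Titan text-to-image call (schema assumed from AWS docs)."""
    body = {
        "taskType": "TEXT_IMAGE",
        "textToImageParams": {"text": prompt},
        "imageGenerationConfig": {
            "numberOfImages": num_images,
            "width": width,
            "height": height,
            "cfgScale": cfg_scale,
        },
    }
    return json.dumps(body)


def generate_image(prompt: str, region: str = "us-east-1") -> bytes:
    """Send the request to Bedrock and return the first image as raw bytes.

    Requires boto3, AWS credentials, and Bedrock access to the Titan model.
    """
    import boto3  # imported lazily so the request builder works without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId="amazon.titan-image-generator-v1",  # assumed model ID
        body=build_titan_image_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # Assumption: images come back base64-encoded under the "images" key.
    return base64.b64decode(payload["images"][0])
```

Calling `generate_image("a lighthouse at dusk")` needs live AWS access; keeping the request builder separate lets you inspect the payload without touching the network.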

Amazon SageMaker HyperPod: AWS introduced Amazon SageMaker HyperPod, which helps reduce time to train foundation models (FMs) by providing a purpose-built infrastructure for distributed training at scale. (Source)

Clean Rooms ML: An offshoot of AWS’ existing Clean Rooms product, the service removes the need for AWS customers to share proprietary data with their outside partners to build, train and deploy AI models. You can train a private lookalike model across your collective data. (Source)

Amazon Neptune Analytics: It combines the best of both worlds, graph and vector databases, in a single service. AI circles have debated which of the two is more important for finding truthful information in generative AI applications. (Source)

Why does this matter?

It is evident by now that AI is the focus of AWS’s biggest yearly cloud event. Slowly but surely, AWS is moving to defend its longstanding lead as the top cloud provider, showing it can compete with AI offerings from rivals Microsoft and Google.

Two things stand out: the wide range of models offered through its Bedrock service, and better, more seamless data management tools for enterprises building and deploying their own generative AI applications.

Perplexity introduces PPLX online LLMs

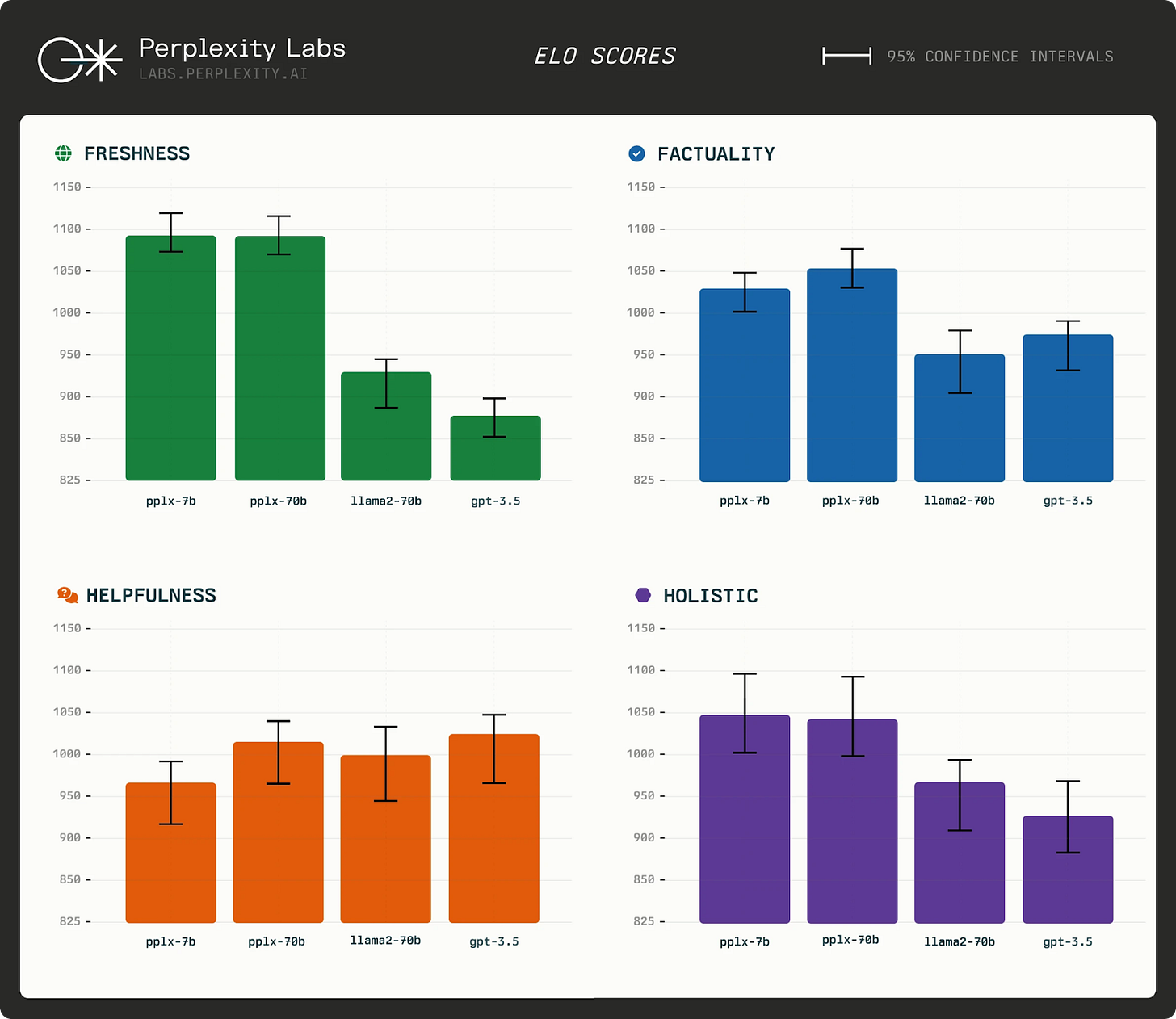

Perplexity AI shared two new PPLX models: pplx-7b-online and pplx-70b-online. The online models focus on delivering helpful, up-to-date, and factual responses. They are publicly available via pplx-api, which Perplexity calls a first-of-its-kind API, and through Perplexity Labs, its LLM playground.

The models aim to address two limitations of today’s LLMs: freshness and hallucinations. The PPLX models build on top of the mistral-7b and llama2-70b base models.
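For developers, a pplx-api call might look like the following standard-library sketch. It assumes the API exposes an OpenAI-style chat-completions endpoint at `https://api.perplexity.ai/chat/completions` with bearer-token authentication; check Perplexity's API documentation for the current endpoint and model names, since the `pplx-*-online` names are simply the ones from this announcement. `build_pplx_request` and `ask` are hypothetical helpers.

```python
import json
import urllib.request

PPLX_URL = "https://api.perplexity.ai/chat/completions"  # assumed endpoint


def build_pplx_request(question, api_key, model="pplx-7b-online"):
    """Build (but do not send) a chat-completions POST request for an online PPLX model."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        PPLX_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


def ask(question, api_key, model="pplx-7b-online"):
    """Send the request and return the answer text (needs network access and a valid key)."""
    with urllib.request.urlopen(build_pplx_request(question, api_key, model)) as resp:
        reply = json.load(resp)
    # Assumption: OpenAI-style responses put the text at choices[0].message.content.
    return reply["choices"][0]["message"]["content"]
```

Switching between the 7B and 70B variants is then just a matter of passing a different `model` string.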

Why does this matter?

Finally, there’s a model that can answer questions like “What was the Warriors game score last night?” while matching or even surpassing gpt-3.5 and llama2-70b on Perplexity-related use cases, particularly in providing accurate and up-to-date responses.

DeepMind’s AI tool finds 2.2M new crystals to advance technology

AI tool GNoME finds 2.2 million new crystals (equivalent to nearly 800 years’ worth of knowledge), including 380,000 stable materials that could power future technologies.

Modern technologies, from computer chips and batteries to solar panels, rely on inorganic crystals. Discovering each new stable crystal can take months of painstaking experimentation, and unstable crystals can decompose, so they cannot enable new technologies.

Google DeepMind introduced Graph Networks for Materials Exploration (GNoME), a new deep learning tool that dramatically increases the speed and efficiency of discovery by predicting the stability of new materials, and it can do so at an unprecedented scale.

A-Lab, a facility at Berkeley Lab, is also using AI to guide robots in making new materials.

Why does this matter?

Should we say AI just propelled us 800 years into the future? Tools like GNoME could transform the discovery, experimentation, and synthesis of materials while driving costs down. That could enable greener technologies and more efficient computing (presumably for AI, too). AI is sparking a transformative era for many fields.


Knowledge Nugget: Practical Tips for Finetuning LLMs Using LoRA (Low-Rank Adaptation)

LoRA is among the most widely used and effective techniques for efficiently training custom LLMs. For those interested in open-source LLMs, it's an essential technique worth familiarizing oneself with.

In this insightful article, Sebastian Raschka discusses the main lessons from his experiments and answers some frequently asked questions on the topic. If you are interested in finetuning custom LLMs, these insights will save you some time in "the long run" (no pun intended).

Why does this matter?

The article shares practical tips and lessons from Raschka's hundreds of experiments. It highlights LoRA’s role in cost-effective training, which matters for anyone developing language models with limited compute.
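To make the core idea concrete, here is a minimal pure-Python sketch of a LoRA-adapted linear layer. It is not Raschka's code or any library's API. The pretrained weight W stays frozen; only two small matrices, A (r × d_in) and B (d_out × r), would be trained. Following the original LoRA paper, the low-rank update is scaled by alpha / r, A starts as small random Gaussian values, and B starts at zero, so the adapted layer initially behaves exactly like the original. `LoRALinear`, `lora_params`, and `full_params` are hypothetical names, and no training loop is shown.

```python
import random


def matmul_vec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


class LoRALinear:
    """Frozen linear layer W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight, rank=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        self.weight = weight  # frozen pretrained weights, shape (d_out, d_in)
        d_out, d_in = len(weight), len(weight[0])
        self.scaling = alpha / rank
        # A: small random values; B: zeros, so B @ A == 0 at initialization.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matmul_vec(self.weight, x)                 # W x (frozen path)
        update = matmul_vec(self.B, matmul_vec(self.A, x))  # B (A x) (trainable path)
        return [b + self.scaling * u for b, u in zip(base, update)]


def lora_params(d_out, d_in, rank):
    """Trainable parameters in A and B."""
    return rank * (d_out + d_in)


def full_params(d_out, d_in):
    """Trainable parameters when finetuning W directly."""
    return d_out * d_in


W = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
layer = LoRALinear(W, rank=1, alpha=2.0)
print(layer.forward([1.0, 0.0, -1.0]))  # matches W x because B is still zero
```

For a 4096 × 4096 projection with rank 8, `lora_params(4096, 4096, 8)` gives 65,536 trainable weights versus 16,777,216 from `full_params(4096, 4096)`, which is where LoRA's cost savings come from.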

What Else Is Happening❗

👥Microsoft to join OpenAI’s board as Sam Altman officially returns as CEO.

Sam Altman is officially back at OpenAI as CEO, and Mira Murati will return to her role as CTO. The new initial board will consist of Bret Taylor (Chair), Larry Summers, and Adam D’Angelo, while Microsoft is getting a non-voting observer seat on the nonprofit board. (Link)

🔍AI researchers talked ChatGPT into coughing up some of its training data.

Since long before the CEO/boardroom drama, OpenAI has been ducking questions about the training data used for ChatGPT. But AI researchers (including several from Google’s DeepMind team) spent $200 and were able to pull “several megabytes” of training data just by asking ChatGPT to “Repeat the word ‘poem’ forever.” Their attack has been patched, but they warn that other vulnerabilities may still exist. (Link)

💡A new startup from ex-Apple employees to focus on pushing OSs forward with GenAI.

After selling Workflow to Apple in 2017, its co-founders are back with a new startup, Software Applications Incorporated, that wants to use generative AI to reimagine how desktop computers work. They are prototyping with a variety of LLMs, including OpenAI’s GPT and Meta’s Llama 2. (Link)

🚀Krea AI introduces new features Upscale & Enhance, now live.

With this new AI tool, you can easily boost the quality and resolution of your images. It is available for free to all Krea users at krea.ai.

🏖️AI turns beach lifeguard at Santa Cruz.

As the winter swell approaches, UC Santa Cruz researchers are developing potentially lifesaving AI technology. They are working on algorithms that can monitor shoreline change, identify rip currents, and alert lifeguards to potential hazards, hoping to improve beach safety and ultimately save lives. (Link)

That's all for now!

Subscribe to The AI Edge and join the impressive list of readers that includes professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other reputable organizations.

Thanks for reading, and see you tomorrow. 😊