Alibaba's EMO Makes Photos Come Alive (And Lip-Sync!)

Plus: Microsoft introduces 1-bit LLM, Ideogram launches text-to-image model version 1.0

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 221st edition of The AI Edge newsletter. This edition brings you Alibaba's EMO that makes photos come alive (and lip-sync!).

And a huge shoutout to our amazing readers. We appreciate you 😊

In today’s edition:

📸 Alibaba's EMO makes photos come alive (and lip-sync!)

💻 Microsoft introduces 1-bit LLM

🖼️ Ideogram launches text-to-image model version 1.0

📚 Knowledge Nugget: Scaling ChatGPT: Five Real-World Engineering Challenges by

Let’s go!

Alibaba's EMO makes photos come alive (and lip-sync!)

Researchers at Alibaba have introduced an AI system called “EMO” (Emote Portrait Alive) that can generate realistic videos of you talking and singing from a single photo and an audio clip. It captures subtle facial nuances without relying on 3D models.

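EMO uses a two-stage deep learning approach. First, a reference network encodes features from the input photo. Second, a diffusion model generates the video frames, guided by features from a pretrained audio encoder through reference-attention and audio-attention mechanisms.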
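EMO's exact layers aren't reproduced here. As a hedged illustration of what an "audio attention" layer does in general, here is plain scaled dot-product cross-attention: each video-latent token (the query) takes a softmax-weighted average of audio features (the keys and values). The toy sizes, random features, and the omission of learned projection matrices are all simplifying assumptions; reference-attention works the same way, with features from the reference photo in place of audio.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product cross-attention (no learned projections).

    queries: video-latent tokens, one d-dim vector each
    keys, values: conditioning features (here: audio frames)
    Returns one output vector per query: a softmax-weighted mix of the values.
    """
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    outputs = []
    for q in queries:
        # How strongly this latent token matches each audio frame
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        # Blend the audio value vectors using those weights
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs


random.seed(0)
d = 8  # hypothetical feature width
video_tokens = [[random.gauss(0, 1) for _ in range(d)] for _ in range(4)]  # 4 latent tokens
audio_frames = [[random.gauss(0, 1) for _ in range(d)] for _ in range(6)]  # 6 audio frames

# "Audio-attention": the video latents query the audio features
attended = cross_attention(video_tokens, audio_frames, audio_frames)
# Residual connection: add the audio context back onto each latent token
updated = [[x + a for x, a in zip(tok, att)] for tok, att in zip(video_tokens, attended)]
print(len(updated), len(updated[0]))  # 4 8
```

Because the attention weights sum to 1, every output is a blend of the audio frames, which is how the audio can steer lip and facial motion frame by frame.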

Experiments show that the system significantly outperforms existing methods in terms of video quality and expressiveness.

Why does this matter?

By combining EMO with OpenAI’s Sora, we could synthesize personalized video content from photos or bring photos from any era to life. This could profoundly expand human expression. We may soon see automated TikTok-like videos.

Microsoft introduces 1-bit LLM

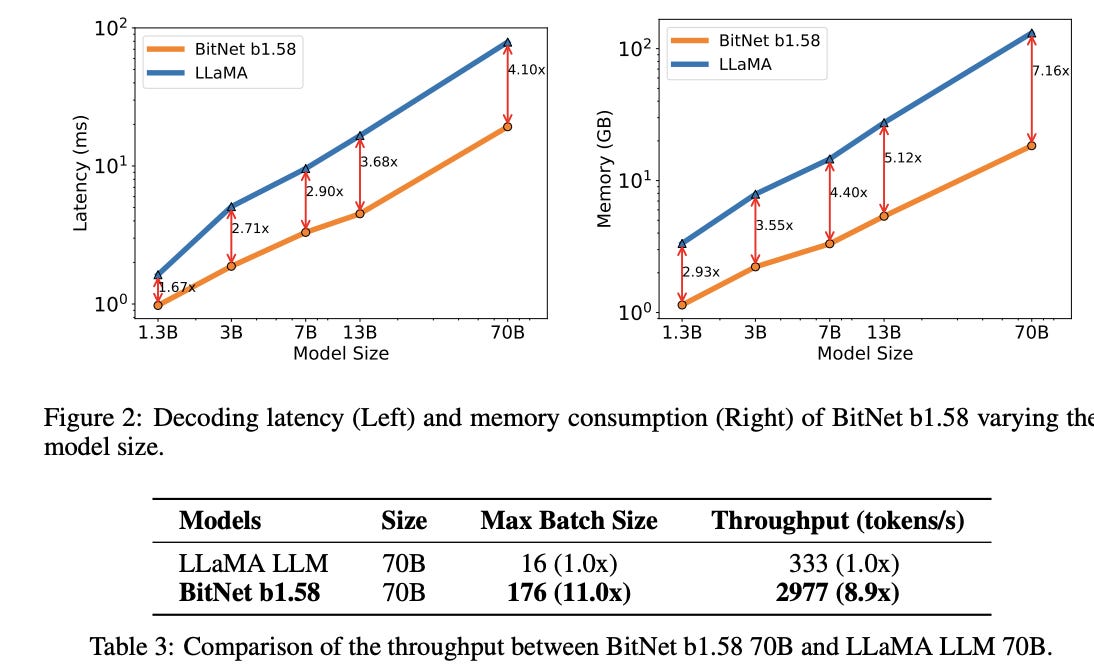

Microsoft researchers have introduced BitNet b1.58, a radically efficient 1-bit LLM variant in which every weight is ternary (-1, 0, or +1). That works out to about 1.58 bits per parameter instead of the typical 16, yet it performs on par with full-precision models of the same size and training data at understanding and generating text.
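Why 1.58 bits? A weight that can take one of three values carries log2(3) ≈ 1.58 bits of information. The BitNet b1.58 paper quantizes weights with an "absmean" rule: divide the matrix by its mean absolute weight γ, then round each entry and clip it to [-1, 1], i.e. W̃ = RoundClip(W / (γ + ε), -1, 1). Below is a minimal pure-Python sketch of that rule, assuming a tiny made-up 2×3 weight matrix; a real implementation applies it to tensors during training.

```python
import math


def round_clip(x, lo=-1, hi=1):
    """RoundClip(x, a, b) = max(a, min(b, round(x)))."""
    # Note: Python's round() sends exact .5 ties to the nearest even integer
    return max(lo, min(hi, round(x)))


def absmean_ternary(weights, eps=1e-5):
    """Quantize a weight matrix to {-1, 0, +1} using absmean scaling.

    Returns the ternary matrix and the scale gamma, so that
    gamma * q approximates the original weights.
    """
    flat = [w for row in weights for w in row]
    gamma = sum(abs(w) for w in flat) / len(flat)  # mean absolute weight
    q = [[round_clip(w / (gamma + eps)) for w in row] for row in weights]
    return q, gamma


# Hypothetical full-precision weights (a real layer has millions)
W = [[0.9, -0.05, 0.4],
     [-1.2, 0.02, 0.6]]
q, gamma = absmean_ternary(W)
print(q)                           # [[1, 0, 1], [-1, 0, 1]]
print(f"{math.log2(3):.2f} bits")  # 1.58 bits: information content of a 3-valued weight
```

Small weights collapse to 0 and large ones saturate at ±1, so the single scale γ carries the magnitude information that the ternary entries throw away.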

Building on Microsoft's earlier BitNet research, this drastic reduction in bits per parameter makes the model significantly more cost-effective in latency, memory, throughput, and energy consumption. Despite storing each weight in a fraction of the bits, it maintains accuracy.
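Part of the latency and energy savings comes from the arithmetic itself: with weights restricted to -1, 0, and +1, a matrix-vector product needs no weight multiplications, only additions and subtractions. A minimal sketch, assuming a hypothetical ternary matrix and scale; activations stay in floating point here (the paper also quantizes activations to 8 bits), and the packed storage and specialized kernels that deliver real speedups are not modeled.

```python
def ternary_matvec(q, gamma, x):
    """Compute y = gamma * (q @ x) for a ternary matrix q with entries in {-1, 0, +1}.

    The inner loop never multiplies by a weight: each entry either adds x[j],
    subtracts it, or skips it. Only one multiply (by gamma) per output remains.
    """
    y = []
    for row in q:
        acc = 0.0
        for w, xj in zip(row, x):
            if w == 1:
                acc += xj
            elif w == -1:
                acc -= xj
            # w == 0: nothing to do, the weight contributes nothing
        y.append(gamma * acc)
    return y


# Hypothetical ternary weights and scale (e.g. the output of an absmean quantizer)
q = [[1, 0, 1],
     [-1, 0, 1]]
gamma = 0.5
x = [2.0, 3.0, 4.0]
print(ternary_matvec(q, gamma, x))  # [3.0, 1.0]

# Same result as an ordinary multiply-accumulate matrix-vector product
dense = [gamma * sum(w * xj for w, xj in zip(row, x)) for row in q]
print(dense == ternary_matvec(q, gamma, x))  # True
```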

Why does this matter?

Traditional LLMs often require extensive resources and are expensive to run, and their ever-growing size and power consumption give them massive carbon footprints.

This new 1-bit technique points toward much greener AI models that retain high performance without overusing resources. It also opens the door to specialized hardware and model designs optimized for 1-bit LLMs, which could drastically improve efficiency, cut computing costs, and put high-performing AI directly onto consumer devices.

Ideogram launches text-to-image model version 1.0

Ideogram has launched Ideogram 1.0, its most advanced text-to-image model yet. Dubbed a "creative helper," it generates highly realistic images from text prompts with minimal errors. A built-in "Magic Prompt" feature effortlessly expands basic prompts into detailed scenes.

The Details:

Ideogram 1.0 reportedly cuts text-rendering errors roughly in half compared to other models. Users can also choose custom image sizes and styles, so it can handle memes, logos, old-timey portraits, and just about anything else.

Magic Prompt takes basic prompts like "vegetables orbiting the sun" and expands them into full scenes with backstories, the kind of detailed description that could take a person hours to write out word for word.

Tests show that Ideogram 1.0 beats DALL-E 3 and Midjourney V6 on prompt alignment, image coherence, photorealism, and text rendering.

Why does this matter?

This advancement in AI image generation hints at a future where generative models commonly assist or even substitute human creators across personalized gift items, digital content, art, and more.


Knowledge Nugget: Scaling ChatGPT: Five Real-World Engineering Challenges

This insightful article by

features OpenAI engineering lead Evan Morikawa pulling back the curtain on the intricacies behind ChatGPT's wild ride to 100 million+ weekly users. Here, Evan emphasizes the critical role of GPU RAM and caching mechanisms in improving efficiency. According to him, the core scaling issue lay in ChatGPT's reliance on scarce GPUs for computation.
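To see why GPU RAM and caching are so tightly linked, assume the caching in question is the standard transformer KV cache: while generating text, the model keeps every token's attention keys and values in GPU memory so it doesn't recompute them. The back-of-envelope sketch below estimates that cache's size for a hypothetical 7B-class configuration (32 layers, 32 heads of dimension 128, FP16 values); these numbers are illustrative, not ChatGPT's.

```python
GIB = 1024 ** 3


def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch_size, bytes_per_elem=2):
    """Approximate KV-cache size for a decoder-only transformer.

    Each layer stores one key and one value vector per head per token,
    hence the leading factor of 2. bytes_per_elem=2 assumes FP16/BF16.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


# Hypothetical 7B-class configuration (not ChatGPT's actual model)
config = dict(n_layers=32, n_kv_heads=32, head_dim=128, seq_len=4096)

one_seq = kv_cache_bytes(**config, batch_size=1)
batch_16 = kv_cache_bytes(**config, batch_size=16)
print(one_seq / GIB)   # 2.0
print(batch_16 / GIB)  # 32.0
```

The cache grows linearly with both context length and the number of concurrent requests, and it competes for the same GPU RAM as the weights (about 14 GB for 7B parameters in FP16), so memory rather than raw compute often caps how many users a GPU can serve.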

Despite support from Microsoft's cloud, global GPU supply-chain bottlenecks constrained OpenAI's capacity to meet explosive demand, so those "at capacity" messages were very real.

He singles out batch size as a key lever when scaling: batches need to be large enough to use GPUs effectively, but not so large that they overload them.
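One standard way to reason about this trade-off is a roofline estimate (a textbook model, not necessarily the method OpenAI uses). During text generation, each decoding step streams all model weights from GPU memory once, no matter how many requests are batched, while compute grows with the batch. The sketch assumes a hypothetical 7B-parameter FP16 model on a made-up GPU with 300 TFLOP/s of compute and 2 TB/s of memory bandwidth, and ignores KV-cache reads and other overheads.

```python
def decode_step_time(batch_size, n_params, peak_flops, mem_bw, bytes_per_param=2):
    """Roofline estimate (seconds) for one decoding step: one new token per sequence.

    Ignores KV-cache reads, communication, and kernel overheads.
    """
    # All weights are streamed from GPU memory once per step, regardless of batch size
    memory_time = n_params * bytes_per_param / mem_bw
    # About 2 FLOPs (one multiply, one add) per parameter per generated token
    compute_time = 2 * n_params * batch_size / peak_flops
    # The slower of the two bounds the step
    return max(memory_time, compute_time)


def balanced_batch_size(peak_flops, mem_bw, bytes_per_param=2):
    """Batch size at which compute time catches up with weight-loading time."""
    return peak_flops * bytes_per_param / (2 * mem_bw)


# Hypothetical numbers: 7B-parameter FP16 model on a GPU with
# 300 TFLOP/s of compute and 2 TB/s of memory bandwidth
N_PARAMS, PEAK, BW = 7e9, 300e12, 2e12

print(balanced_batch_size(PEAK, BW))  # 150.0
for b in (1, 16, 150, 600):
    step = decode_step_time(b, N_PARAMS, PEAK, BW)
    print(f"batch={b:4d}  step={step * 1e3:6.2f} ms  throughput={b / step:9.0f} tok/s")
```

Well below the balanced batch size, the GPU mostly waits on memory and its compute sits idle (underloading); well above it, throughput plateaus while every step, and therefore every user's latency, gets slower (overloading). Larger batches also need more KV-cache memory.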

Evan then stresses the importance of choosing the right metrics to measure server load accurately, and walks through the bottlenecks that can arise during scaling, from memory bandwidth limitations to network constraints.

Why does this matter?

The challenges above demonstrate how hardware limitations can motivate software innovation through efficient workloads. With any luck, such solutions could someday make large language models more accessible. For now, surging AI apps continue vying for limited chips.

Source

What Else Is Happening❗

🎵 Adobe launches new GenAI music tool

Adobe introduces Project Music GenAI Control, allowing users to create music from text or reference melodies with customizable tempo, intensity, and structure. While still in development, this tool has the potential to democratize music creation for everyone. (Link)

🎥 Morph makes filmmaking easier with Stability AI

Morph Studio, a new AI platform, lets you create films simply by describing desired scenes in text prompts. It also enables combining these AI-generated clips into complete movies. Powered by Stability AI, this revolutionary tool could enable anyone to become a filmmaker. (Link)

💻 Hugging Face, Nvidia, and ServiceNow release StarCoder 2 for code generation

Hugging Face, Nvidia, and ServiceNow have launched StarCoder 2, a family of open-source code generation models available in three GPU-optimized sizes (3B, 7B, and 15B parameters). With improved performance and less restrictive licensing, it promises efficient code completion and summarization. (Link)

📅 Meta set to launch Llama 3 in July

Meta reportedly plans to launch Llama 3 in July to compete with OpenAI's GPT-4. It promises increased responsiveness, better context handling, and roughly double the size of its predecessor. With additional training on tone and safety, Llama 3 aims to give more nuanced responses. (Link)

🤖 Apple subtly reveals its AI plans

Apple CEO Tim Cook reveals plans to disclose Apple's generative AI efforts soon, highlighting opportunities to transform user productivity and problem-solving. This likely indicates exciting new iPhone and device features centered on efficiency. (Link)

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you tomorrow. 😊