AI Weekly Rundown (September 2 to September 8)

News from Meta, Stability AI, Amazon, Intel and other AI giants.

Hello, Engineering Leaders and AI Enthusiasts,

Another eventful week in the AI realm, with lots of big news from major enterprises.

In today’s edition:

✅ Meta AI's New Dataset Understands 122 Languages

✅ Stability AI’s 1st Japanese Vision-Language Model

✅ Transformers as Support Vector Machines

✅ Amazon’s AI-powered palm recognition breakthrough

✅ Intel is going after the AI opportunity in multiple ways

✅ Introducing Refact Code LLM, for real-time code completion and chat

✅ Google DeepMind’s new AI benchmark on bioinformatics code

✅ CityDreamer - New Gen AI model creates unlimited 3D cities

✅ Scientists train a neural network to identify PC users’ fatigue

✅ Introducing Falcon 180B, the largest and most powerful open LLM

✅ Apple is spending millions of dollars a day to train AI

✅ Microsoft and Paige to build the largest image-based AI model to fight cancer

Let’s go!

Meta AI's New Dataset Understands 122 Languages

Meta AI announced Belebele, a multilingual reading comprehension dataset covering 122 language variants. It allows text models to be evaluated in high-, medium-, and low-resource languages, expanding the language coverage of natural language understanding benchmarks.

The Belebele dataset consists of questions about short passages from the Flores-200 dataset, each with four multiple-choice answers. The questions were designed to test different levels of general language comprehension. Because the same questions appear in every language, the dataset enables direct comparison of model performance across all languages; it was used to evaluate both multilingual masked language models and large language models. The results show that much smaller multilingual masked language models, pretrained on balanced multilingual data, still understand far more languages than larger English-centric LLMs.
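Scoring on a benchmark like this reduces to per-language accuracy on four-way multiple choice, which is what makes cross-language comparison straightforward. Below is a minimal sketch, assuming a hypothetical record format of (language code, predicted option, correct option); the helper name `per_language_accuracy`, the Flores-200-style language codes, and the toy predictions are illustrative only, not Meta's evaluation code.

```python
from collections import defaultdict

# Hypothetical record format: (language_code, predicted_choice, correct_choice),
# with choices numbered 1-4 because each question has four candidate answers.
def per_language_accuracy(records):
    """Return {language: accuracy} over four-way multiple-choice predictions."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for lang, predicted, gold in records:
        total[lang] += 1
        if predicted == gold:
            correct[lang] += 1
    return {lang: correct[lang] / total[lang] for lang in total}


CHANCE_LEVEL = 1 / 4  # accuracy of random guessing among four options

if __name__ == "__main__":
    # Toy predictions invented for illustration; these are not real results.
    toy = [
        ("eng_Latn", 2, 2), ("eng_Latn", 1, 1), ("eng_Latn", 4, 3), ("eng_Latn", 3, 3),
        ("zul_Latn", 1, 2), ("zul_Latn", 2, 2),
    ]
    for lang, acc in sorted(per_language_accuracy(toy).items()):
        print(f"{lang}: {acc:.2f} (chance = {CHANCE_LEVEL:.2f})")
```

Since every language uses the same four-option format, random guessing sits at 25%, a useful floor when reading low-resource results.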

Stability AI’s 1st Japanese Vision-Language Model

Stability AI has released Japanese InstructBLIP Alpha, a vision-language model that generates textual descriptions for input images and answers questions about them. It is built upon the Japanese StableLM Instruct Alpha 7B and leverages the InstructBLIP architecture.

(Figure. Output: “Two persons sitting on a bench looking at Mt.Fuji”)

The model can accurately recognize Japan-specific objects and can also take text input, such as questions about an image. It is available on the Hugging Face Hub for inference and additional training, for research purposes only. Potential applications include search engine functionality, scene description, and textual descriptions of images for blind and visually impaired people.

Transformers as Support Vector Machines

This paper establishes a formal equivalence between the optimization geometry of self-attention in transformers and a hard-margin Support Vector Machine (SVM) problem that separates optimal input tokens from non-optimal ones. It shows that optimizing the attention layer, parameterized by key and query weights (K, Q) with vanishing regularization, converges in direction to an SVM solution that minimizes the nuclear norm of the combined parameter W = KQᵀ.

The study also proves the convergence of gradient descent under suitable conditions and introduces a more general SVM equivalence for nonlinear prediction heads. These findings suggest that transformers can be interpreted as a hierarchy of SVMs that separate and select optimal tokens.
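For readers who want the shape of the problem, here is our reading of the attention SVM written out in LaTeX. The notation is our own assumption: for the i-th of n training sequences, x_{it} are the input tokens, z_i is the query token, and opt_i indexes the token the attention should select. The second line states the "converges in direction" claim for the (K, Q) parameterization; swapping the nuclear norm for the Frobenius norm gives the variant associated with training W directly.

```latex
\begin{aligned}
W^{\mathrm{svm}}_{\star} &\in \arg\min_{W}\ \|W\|_{\star}
\quad \text{s.t.} \quad
\bigl(x_{i,\mathrm{opt}_i} - x_{it}\bigr)^{\top} W\, z_i \ge 1
\quad \forall\, t \neq \mathrm{opt}_i,\ i \in [n], \\
\frac{W(t)}{\|W(t)\|_F} &\to \frac{W^{\mathrm{svm}}_{\star}}{\|W^{\mathrm{svm}}_{\star}\|_F},
\qquad W(t) = K(t)\,Q(t)^{\top}.
\end{aligned}
```

Each constraint asks the selected token to outscore every other token in the same sequence by a margin of at least 1, which is exactly the hard-margin SVM structure.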

Amazon’s AI-powered palm recognition breakthrough

Amazon One is a fast, convenient, and contactless device that lets customers use the palm of their hand for everyday activities like paying at a store, presenting a loyalty card, verifying their age, or entering a venue. No phone, no wallet.

Amazon One does this by combining generative AI, machine learning, cutting-edge biometrics, and optical engineering.

Currently, Amazon One is being rolled out to more than 500 Whole Foods Market stores and dozens of third-party locations, including travel retailers, sports and entertainment venues, convenience stores, and grocers. It can also detect and reject fake hands, and it has already been used over 3 million times with 99.9999% accuracy.

Intel is going after the AI opportunity in multiple ways

Intel is aggressively pursuing opportunities in the AI space by expanding beyond data center-based AI accelerators. CEO Pat Gelsinger believes that AI will move closer to end users due to economic, physical, and privacy considerations. The company is incorporating AI into various products, including server CPUs like Sapphire Rapids, which come with built-in AI accelerators for inference tasks.

Furthermore, Intel is set to launch Meteor Lake PC CPUs with dedicated AI hardware to accelerate AI workloads directly on user devices. This approach builds on Intel's dominant position in the CPU market, which makes it attractive for software providers to support its AI hardware.

📢 Invite friends and get rewards 🤑🎁

Enjoying AI updates? Refer friends and get perks and special access to The AI Edge.

Get 400+ AI Tools and 500+ Prompts for 1 referral.

Get a free shoutout for 3 referrals.

Get The Ultimate Gen AI Handbook for 5 referrals.

When you use the referral link above or the “Share” button on any post, you'll get credit for any new subscribers. Simply send the link to friends in a text or email, or share it on social media.

Introducing Refact Code LLM, for real-time code completion and chat

Refact LLM 1.6B is primarily built for real-time code completion (infill) in multiple programming languages, and it also works as a chat model. It achieves state-of-the-art performance among code LLMs, coming close to StarCoder on HumanEval while being 10x smaller. It also beats other code models, as shown below. First, a tl;dr:

1.6B parameters

20 programming languages

4,096-token context

code completion and chat capabilities

pre-trained on permissively licensed code and available for commercial use

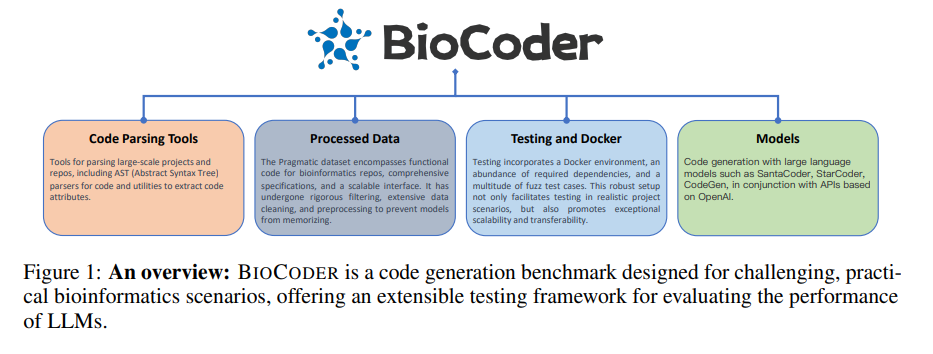
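"Infill" means fill-in-the-middle (FIM) completion: the editor sends the code before and after the cursor, and the model generates what belongs in between. Below is a minimal sketch of how such a prompt can be assembled, assuming StarCoder-style prefix-suffix-middle sentinel tokens; the exact token strings and the `build_fim_prompt` helper are hypothetical, so check the model card for the format Refact LLM actually expects.

```python
# Hypothetical sentinel tokens in a StarCoder-style "prefix-suffix-middle" layout;
# verify the exact strings against the Refact model card before use.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def build_fim_prompt(code: str, cursor: int) -> str:
    """Split the file at the cursor and order it so the model generates the middle."""
    prefix, suffix = code[:cursor], code[cursor:]
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


if __name__ == "__main__":
    source = "def add(a, b):\n    \n\nprint(add(2, 3))\n"
    cursor = source.index("    \n") + 4  # cursor sits in the empty function body
    print(build_fim_prompt(source, cursor))
```

Putting the suffix before the middle marker lets a left-to-right model "see" the code after the cursor before it starts generating, which is what makes infill possible with an ordinary causal LM.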

Google DeepMind’s new AI benchmark on bioinformatics code

Google DeepMind and Yale University researchers have introduced BioCoder, a benchmark for testing how well AI models generate bioinformatics-specific code. BioCoder includes 2,269 coding problems based on functions and methods from bioinformatics GitHub repositories.

In tests with several code generators, including InCoder, CodeGen, SantaCoder, and ChatGPT, OpenAI's GPT-3.5 Turbo (the model behind ChatGPT) performed exceptionally well on the benchmark. The team plans to test other open models, such as Meta's Llama 2, in the future.

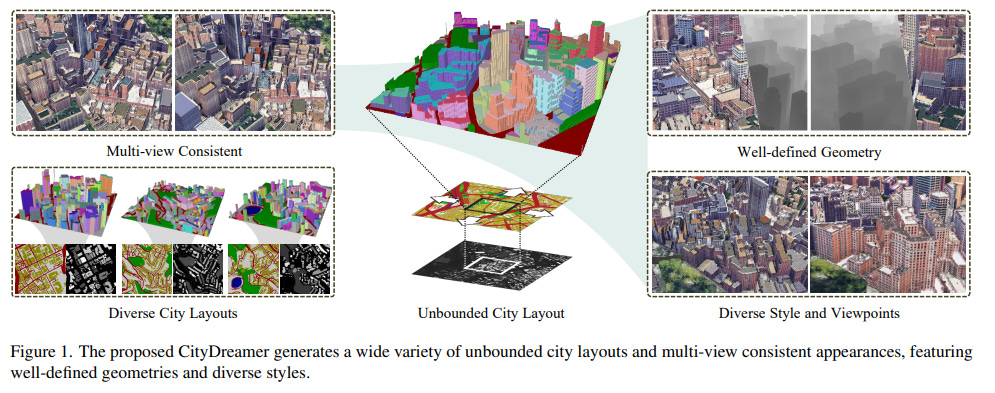
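Code-generation benchmarks of this kind are typically scored by running each generated function against the problem's tests and reporting pass@k. The sketch below implements the widely used unbiased pass@k estimator introduced with OpenAI's Codex/HumanEval work; we assume, rather than confirm from the announcement, that it matches BioCoder's scoring, and the (n, c) input format of `benchmark_score` is hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k: n samples generated for one problem, c of them pass its tests.

    Computes 1 - C(n - c, k) / C(n, k), the probability that at least one of
    k samples drawn without replacement from the n is correct.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k draw must contain a passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results, k: int) -> float:
    """Average pass@k over problems; `results` is a list of (n, c) pairs (hypothetical format)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    toy = [(10, 3), (10, 0), (10, 10)]  # invented numbers, not BioCoder results
    print(f"pass@1 = {benchmark_score(toy, 1):.3f}")
```

Using the combinatorial estimator instead of simply drawing k samples keeps the score low-variance when more than k samples per problem are available.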

CityDreamer - New Gen AI model creates unlimited 3D cities

CityDreamer is a generative AI model that can create unlimited 3D cities by separating the generation of buildings from other background objects. This allows for better handling of the diverse appearance of buildings in urban environments.

The model uses two datasets, OSM (OpenStreetMap) and GoogleEarth, to enhance the realism of the generated cities. These datasets provide realistic city layouts and appearances and can easily be scaled to other cities worldwide.

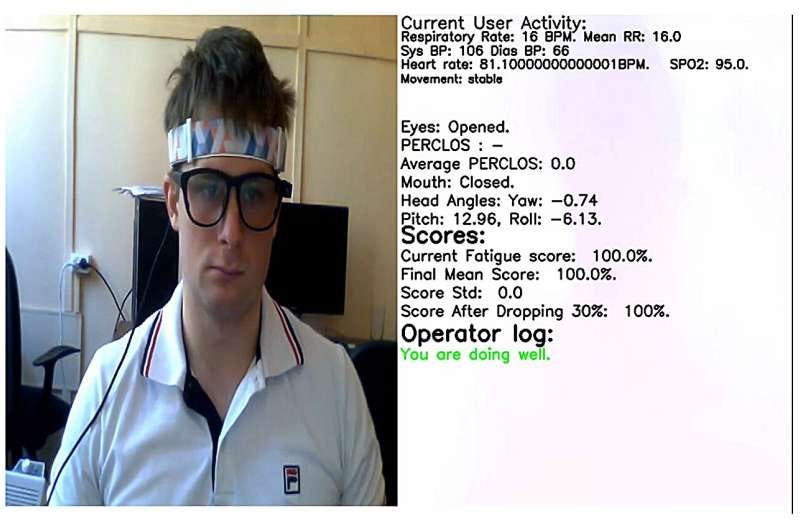

Scientists train a neural network to identify PC users’ fatigue

Scientists from St. Petersburg University and other organizations have created a database of eye movement strategies of PC users in different states of fatigue. They plan to use this data to train neural network models that can accurately track the functional state of operators, ensuring safety in various industries. The database includes a comprehensive set of indicators collected through sensors such as video cameras, eye trackers, heart rate monitors, and electroencephalographs.

An example of human fatigue analysis using video recording.

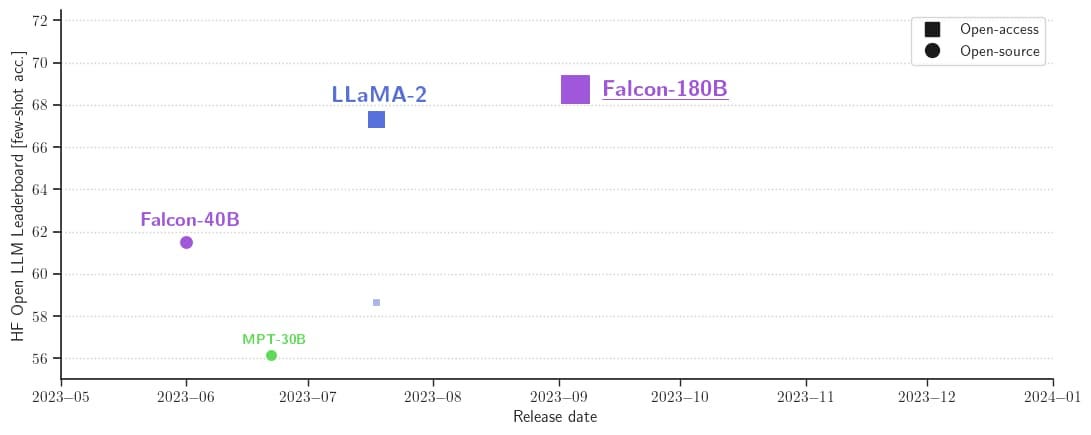

Introducing Falcon 180B, the largest and most powerful open LLM

The UAE’s Technology Innovation Institute (TII) has released Falcon 180B, a new state of the art for open models. With 180 billion parameters, trained on a massive 3.5 trillion tokens from TII's RefinedWeb dataset, it is the largest openly available language model. It currently tops the Hugging Face Open LLM Leaderboard for pre-trained models and is available for both research and commercial use.

The model performs exceptionally well on tasks such as reasoning, coding, proficiency, and knowledge tests, even beating competitors like Meta's LLaMA 2. Among closed-source models, it ranks just behind OpenAI's GPT-4 and performs on par with Google's PaLM 2 Large, which powers Bard, despite being half its size.
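The headline numbers (180 billion parameters, 3.5 trillion tokens) support some quick back-of-the-envelope arithmetic. The sketch below assumes the common C ≈ 6·N·D rule of thumb for dense-transformer training compute and 2 bytes per parameter (bf16) for weight storage; both are standard approximations, not figures reported by TII.

```python
PARAMS = 180e9   # 180 billion parameters (from the announcement)
TOKENS = 3.5e12  # 3.5 trillion training tokens (from the announcement)


def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb dense-transformer training compute: C ≈ 6 * N * D."""
    return 6 * params * tokens


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone (no activations, KV cache, or optimizer state)."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    print(f"tokens per parameter: {TOKENS / PARAMS:.1f}")
    print(f"approx. training compute: {training_flops(PARAMS, TOKENS):.2e} FLOPs")
    print(f"bf16 weights: {weight_memory_gb(PARAMS, 2):.0f} GB")
```

This works out to roughly 19 tokens per parameter, about 3.8 × 10^24 training FLOPs, and around 360 GB just to hold the weights in bf16, which is why serving a model of this size needs multiple GPUs.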

Apple is spending millions of dollars a day to train AI

Reportedly, Apple has been expanding its budget for building AI to millions of dollars a day. It has a unit of around 16 members, including several former Google engineers, working on conversational AI. It is working on multiple AI models to serve a variety of purposes.

Apple wants to make Siri your ultimate digital assistant, able to carry out multi-step tasks from voice commands alone, without you lifting a finger.

It is developing an image generation model and is researching multimodal AI, which can recognize and produce images or video as well as text.

A chatbot is in the works that would interact with customers who use AppleCare.

Microsoft and Paige to build the largest image-based AI model to fight cancer

Paige, a technology disruptor in healthcare, has joined forces with Microsoft to build the world’s largest image-based AI models for digital pathology and oncology.

Paige developed the first Large Foundation Model using over one billion images from half a million pathology slides across multiple cancer types. Now, it is developing a new AI model with Microsoft that is orders of magnitude larger than any other image-based AI model existing today, configured with billions of parameters.

Paige will utilize Microsoft’s advanced supercomputing infrastructure to train the technology at scale and ultimately deploy it to hospitals and laboratories across the globe using Azure.

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’ specifically designed for Engineering Leaders and AI Enthusiasts.

Thanks for reading, and see you on Monday. 😊