AI Weekly Rundown (March 9 to March 15)

Major AI announcements from Meta, OpenAI, DeepMind, Apple, Cohere, and more.

Hello Engineering Leaders and AI Enthusiasts!

Another eventful week in the AI realm. Lots of big news from huge enterprises.

In today’s edition:

🖼️ Huawei's PixArt-Σ paints prompts to perfection

🧠 Meta cracks the code to improve LLM reasoning

📈 Yi Models exceed benchmarks with refined data

🚀 Cohere introduces production-scale AI for enterprises

🎯 RFM-1 redefines robotics with human-like reasoning

🎧 Spotify introduces audiobook recommendations

👨‍💻 Devin: The first AI software engineer redefines coding

🗣️ Deepgram’s Aura empowers AI agents with authentic voices

🖥️ Meta introduces two 24K GPU clusters to train Llama 3

🎮 DeepMind's SIMA: The AI agent that's a Jack of all games

⚡ Claude 3 Haiku: Anthropic's lightning-fast AI solution for enterprises

🤖 ChatGPT gets a body with "Figure 01"

🛠️ Apple’s new recipe to build performant multimodal models

💥 Cerebras’ chip for enabling 10x larger models than GPT-4

💼 Apple buys startup DarwinAI ahead of a big push into GenAI in 2024

Let’s go!

Huawei's PixArt-Σ paints prompts to perfection

Researchers from Huawei's Noah's Ark Lab introduced PixArt-Σ, a text-to-image model that can create 4K resolution images with impressive accuracy in following prompts. Despite having significantly fewer parameters than models like SDXL, PixArt-Σ outperforms them in image quality and prompt matching.

The model uses a "weak-to-strong" training strategy and efficient token compression to reduce computational requirements. It also relies on carefully curated training data with high-resolution images and accurate descriptions. The researchers claim that PixArt-Σ can keep up with commercial alternatives such as Adobe Firefly 2, Google Imagen 2, OpenAI DALL-E 3, and Midjourney v6.

Meta explores improving LLM reasoning

Meta researchers investigated using reinforcement learning (RL) to improve the reasoning abilities of large language models (LLMs). They compared algorithms like Proximal Policy Optimization (PPO) and Expert Iteration (EI) and found that the simple EI method was particularly effective, enabling models to outperform fine-tuned models by nearly 10% after several training iterations.

However, the study also revealed that the tested RL methods have limitations in further improving LLMs' logical capabilities. The researchers suggest that stronger exploration techniques, such as Tree of Thoughts, XOT, or combining LLMs with evolutionary algorithms, are important for achieving greater progress in reasoning performance.
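To make the Expert Iteration loop concrete, here is a toy sketch. It is not the setup from Meta's study: a small probability table over candidate answers stands in for the LLM, an exact-match check stands in for the reward, and a simple mixing update (the hypothetical `lr` parameter) stands in for fine-tuning. Each round samples several answers, keeps only the correct ones, and nudges the policy toward the kept samples.

```python
import random

def expert_iteration(policy, gold, rounds=5, k=16, lr=0.5, seed=0):
    """Toy Expert Iteration: sample, filter by correctness, refit on survivors.

    policy: {question: {answer: probability}}  (hypothetical stand-in for an LLM)
    gold:   {question: correct_answer}         (hypothetical reward signal)
    """
    rng = random.Random(seed)
    policy = {q: dict(dist) for q, dist in policy.items()}  # work on a copy
    for _ in range(rounds):
        for q, dist in policy.items():
            answers = list(dist)
            weights = [dist[a] for a in answers]
            # 1. Explore: draw k candidate answers from the current policy.
            samples = rng.choices(answers, weights=weights, k=k)
            # 2. Filter: keep only samples the checker marks as correct.
            kept = [a for a in samples if a == gold[q]]
            if not kept:
                continue  # nothing correct was sampled, so nothing to learn from
            # 3. Refit: move the policy toward the distribution of kept samples.
            for a in answers:
                target = kept.count(a) / len(kept)
                dist[a] = (1 - lr) * dist[a] + lr * target
    return policy

start = {"2+2": {"3": 0.4, "4": 0.2, "5": 0.4}}
gold = {"2+2": "4"}
trained = expert_iteration(start, gold)
print(trained["2+2"])
```

The `continue` branch shows why exploration matters: if the policy never samples a correct answer, the loop has nothing to learn from.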

Yi models exceed benchmarks with refined data

01.AI has introduced the Yi model family, a series of language and multimodal models that showcase impressive multidimensional abilities. Based on 6B and 34B pretrained language models, the Yi models have been extended to include chat models, 200K long context models, depth-upscaled models, and vision-language models.

The performance of the Yi models can be attributed to the high-quality data resulting from 01.AI's data-engineering efforts. By constructing a massive 3.1 trillion token dataset of English and Chinese corpora and meticulously polishing a small-scale instruction dataset, 01.AI has created a solid foundation for its models. The company believes that scaling up model parameters using thoroughly optimized data will lead to even more powerful models.

Cohere introduces production-scale AI for enterprises

Cohere, an AI company, has introduced Command-R, a new large language model (LLM) designed to address real-world challenges such as inefficient workflows, data analysis limitations, and slow response times.

Command-R focuses on two key areas: Retrieval Augmented Generation (RAG) and Tool Use. RAG allows the model to access and process information from private databases, improving the accuracy of its responses. Tool Use allows Command-R to interact with external software tools and APIs, automating complex tasks.

Command-R offers several features beneficial for businesses, including:

Multilingual capabilities: Supports 10 major languages

Cost-effectiveness: Offers a longer context window and reduced pricing compared to previous models

Wider accessibility: Available through Cohere's API, major cloud providers, and free weights for research on HuggingFace

Overall, it empowers businesses to leverage AI for improved decision-making, increased productivity, and enhanced customer experiences.
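For readers new to RAG, the retrieve-then-generate pattern can be sketched in a few lines. This is an illustration only and does not use Cohere's API: the word-overlap scorer, the function names, and the sample documents are all hypothetical, and a production system would use embeddings and a real model call in place of the final prompt string.

```python
import re

def tokenize(text):
    """Lowercase a string and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, top_k=2):
    """Rank documents by how many words they share with the query (toy scorer)."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(query, documents, top_k=2):
    """Put the retrieved passages in front of the question for the LLM to use."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

# Hypothetical private knowledge base
docs = [
    "Our office is closed on public holidays.",
    "Refunds are processed within 5 business days.",
    "Shipping to Canada takes 7 to 10 days.",
]
print(build_prompt("How long do refunds take?", docs, top_k=1))
```

Grounding the prompt in retrieved passages is what lets the model answer from private data it was never trained on.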

RFM-1 redefines robotics with human-like reasoning

Covariant has introduced RFM-1, a Robotics Foundation Model that gives robots ChatGPT-like understanding and reasoning capabilities.

TL;DR:

RFM-1 is an 8 billion parameter transformer trained on text, images, videos, robot actions, and sensor readings from Covariant's fleet of high-performing robotic systems deployed in real-world environments.

Much as people intuitively understand how objects move, RFM-1 can predict future outcomes from initial images and robot actions.

RFM-1 leverages NLP to enable intuitive interfaces for programming robot behavior. Operators can instruct robots using plain English, lowering barriers to customizing AI behavior for specific needs.

RFM-1 can also communicate issues and suggest solutions to operators.

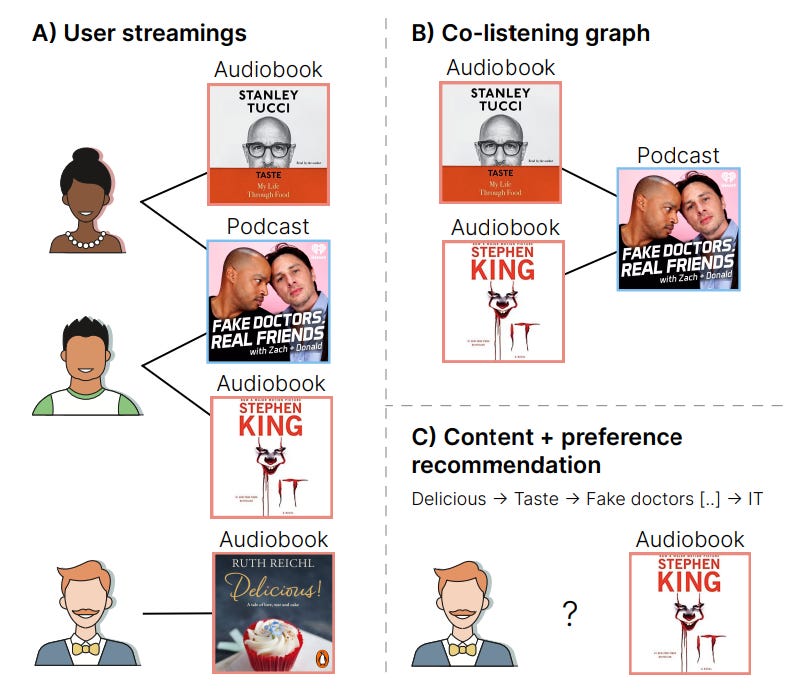

Spotify now recommends audiobooks (with AI)

Spotify has introduced a novel recommendation system called 2T-HGNN to provide personalized audiobook recommendations to its users. The system addresses the challenges of introducing a new content type (audiobooks) into an existing platform, such as data sparsity and the need for scalability.

2T-HGNN leverages a technique called "Heterogeneous Graph Neural Networks" (HGNNs) to uncover connections between different content types. Additionally, a "Two Tower" (2T) model helps ensure that recommendations are made quickly and efficiently for millions of users.

Interestingly, the system also uses podcast consumption data and weak interaction signals to uncover user preferences and predict future audiobook engagement.
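The "Two Tower" idea is easy to see in miniature. The sketch below is not Spotify's implementation and leaves out the graph neural network entirely. The one-layer towers, their weights, the feature layout, and the audiobook names are all hypothetical. What it shows is the serving pattern: item embeddings are computed once offline, so each request only needs one pass through the user tower plus cheap dot products.

```python
def linear_tower(weights, features):
    """A one-layer 'tower': maps a feature vector to an embedding."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Hypothetical weights; a real system would learn these during training.
USER_W = [[1.0, 0.0, 0.5],
          [0.0, 1.0, 0.5]]
ITEM_W = [[1.0, 0.0],
          [0.0, 1.0]]

# Hypothetical item features: [thriller, self_help]
AUDIOBOOKS = {"thriller_book": [1.0, 0.0], "habit_book": [0.0, 1.0]}

# Item tower runs offline, once per audiobook.
ITEM_EMB = {name: linear_tower(ITEM_W, f) for name, f in AUDIOBOOKS.items()}

def recommend(user_features, top_k=1):
    """Online step: embed the user, then rank items by dot-product score."""
    u = linear_tower(USER_W, user_features)
    ranked = sorted(ITEM_EMB, key=lambda n: dot(u, ITEM_EMB[n]), reverse=True)
    return ranked[:top_k]

# Hypothetical user features:
# [crime podcast hours, productivity podcast hours, general activity]
print(recommend([3.0, 0.5, 1.0]))
```

Here the user features are built from podcast listening, mirroring how the system leans on existing signals when audiobook data is sparse.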

Devin: The first AI software engineer redefines coding

US-based startup Cognition AI has unveiled Devin, which it bills as the world’s first AI software engineer. Devin is an autonomous agent that solves engineering tasks using its own shell, code editor, and web browser, handling planning, coding, debugging, and deployment on its own.

When evaluated on the SWE-Bench benchmark, which asks an AI to resolve GitHub issues found in real-world open-source projects, Devin correctly resolves 13.86% of the issues unassisted, far exceeding the previous state-of-the-art (SOTA) model performance of 1.96% unassisted and 4.80% assisted. It has also successfully passed practical engineering interviews with leading AI companies and even completed real Upwork jobs.

Deepgram’s Aura empowers AI agents with authentic voices

Deepgram, a top voice recognition startup, just released Aura, its new real-time text-to-speech (TTS) model. Deepgram describes it as the first TTS model built for responsive, conversational AI agents and applications. Companies can use these agents for customer service in call centers and other customer-facing roles.

Aura includes a dozen natural, human-like voices with lower latency than any comparable voice AI alternative and is already being used in production by several customers. It works hand in hand with Deepgram's Nova-2 speech-to-text API, which is known for its top-notch accuracy and speed in transcribing audio streams.

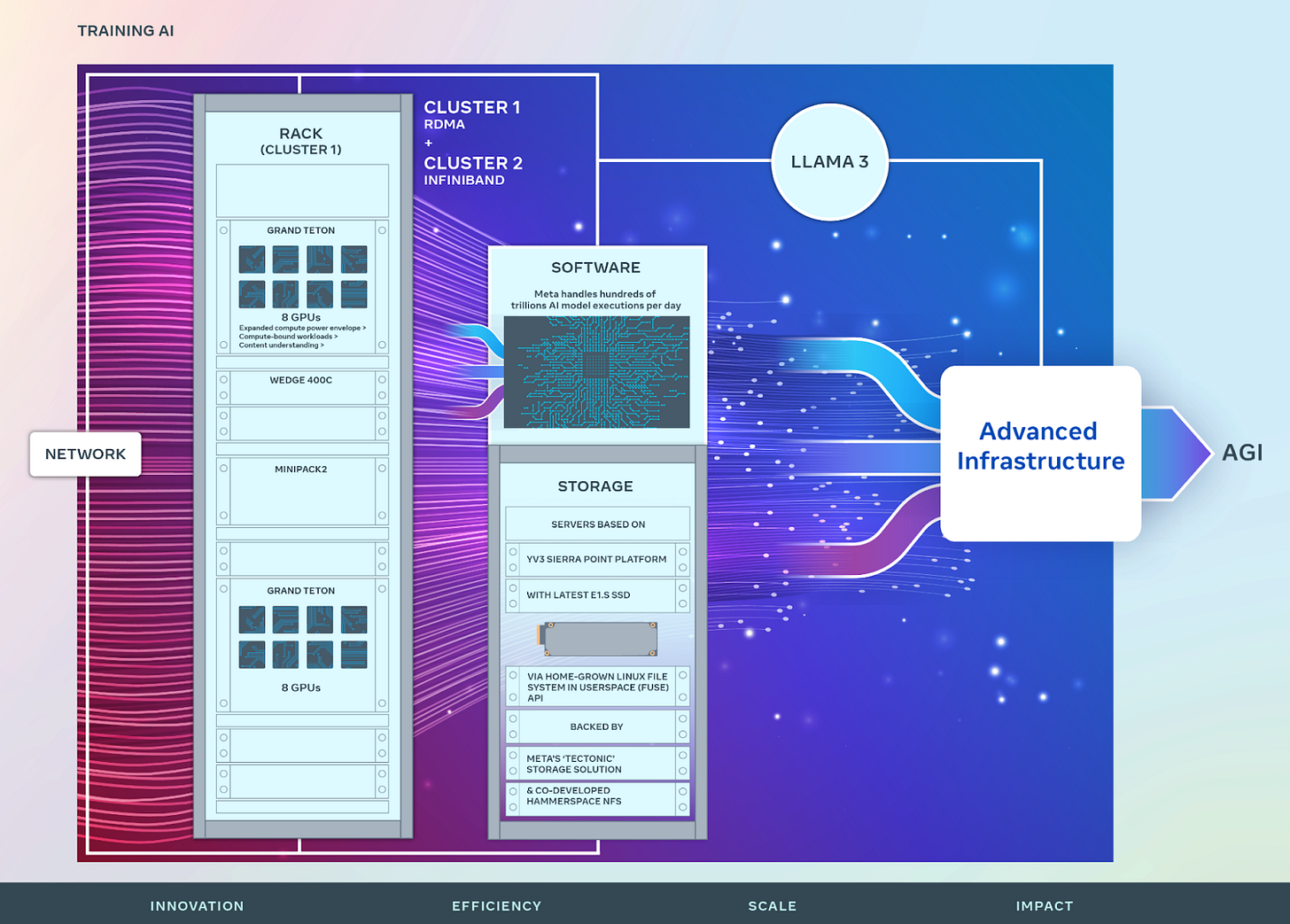

Meta introduces two 24K GPU clusters to train Llama 3

Meta has introduced two 24K GPU clusters. Built on top of Grand Teton, OpenRack, and PyTorch, these are designed to support various AI workloads, including the training of Llama 3.

By the end of 2024, Meta plans to expand its infrastructure to include 350,000 NVIDIA H100 GPUs as part of a portfolio with compute power equivalent to nearly 600,000 H100s. The clusters are built with a focus on researcher and developer experience. The build-out supports Meta’s long-term vision of open and responsibly developed artificial general intelligence (AGI).
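A quick back-of-the-envelope check puts these figures in proportion. It rests on two rounding assumptions: "24K" is read as exactly 24,000 GPUs per cluster, and "nearly 600,000" as 600,000. The gap between the H100 count and the total is presumably covered by other accelerators.

```python
planned_h100s = 350_000      # H100 GPUs planned by end of 2024
target_equivalent = 600_000  # "nearly 600,000" H100-equivalents (rounded, assumption)
gpus_per_cluster = 24_000    # "24K" read as 24,000 (assumption)

# Share of the compute target covered by actual H100s.
h100_share = planned_h100s / target_equivalent

# H100-equivalents that would need to come from elsewhere.
remaining_equivalent = target_equivalent - planned_h100s

# How much of the planned H100 fleet the two new clusters represent.
two_clusters = 2 * gpus_per_cluster
cluster_share = two_clusters / planned_h100s

print(f"H100s cover {h100_share:.1%} of the target")
print(f"Remaining: {remaining_equivalent:,} H100-equivalents")
print(f"Two clusters: {two_clusters:,} GPUs ({cluster_share:.1%} of planned H100s)")
```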

DeepMind's SIMA: The AI agent that's a Jack of all games

DeepMind has introduced SIMA (Scalable Instructable Multiworld Agent), a generalist AI agent that can understand and follow natural language instructions to complete tasks across video game environments. Trained in collaboration with eight game studios on nine different games, SIMA marks a significant milestone in game-playing AI by showing the ability to generalize learned skills to new gaming worlds without requiring access to game code or APIs.

(SIMA comprises pre-trained vision models and a main model that includes a memory and outputs keyboard and mouse actions.)

SIMA was evaluated on 600 basic skills, including navigation, object interaction, and menu use. In tests, SIMA agents trained on multiple games significantly outperformed specialized agents trained on individual games. Notably, an agent trained on all but one game performed nearly as well on the unseen game as an agent specifically trained on it, showcasing SIMA's remarkable ability to generalize to new environments.

Claude 3 Haiku: Anthropic's lightning-fast AI solution for enterprises

Anthropic has released Claude 3 Haiku, its fastest and most affordable AI model. With impressive vision capabilities and strong performance on industry benchmarks, Haiku is designed to tackle a wide range of enterprise applications. The model's speed (21K tokens per second for prompts under 32K tokens) and cost-effective pricing make it an attractive choice for businesses needing to analyze large datasets and generate timely outputs.

In addition to its speed and affordability, Claude 3 Haiku prioritizes enterprise-grade security and robustness. The model is now available through Anthropic's API or on claude.ai for Claude Pro subscribers.
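To get a feel for the quoted throughput, here is a rough estimate of prompt-processing time. It assumes the 21K tokens-per-second rate holds constant for any prompt under 32K tokens and ignores network latency and output generation. The function name is hypothetical.

```python
def prompt_seconds(prompt_tokens, tokens_per_second=21_000, max_prompt=32_000):
    """Estimate seconds to process a prompt at the quoted rate (constant-rate assumption)."""
    if not 0 <= prompt_tokens < max_prompt:
        raise ValueError("the quoted rate only applies to prompts under 32K tokens")
    return prompt_tokens / tokens_per_second

for tokens in (2_000, 10_500, 21_000, 31_000):
    print(f"{tokens:>6} tokens: about {prompt_seconds(tokens):.2f} s")
```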

OpenAI-powered "Figure 01" can chat, perceive, and complete tasks

Figure, in collaboration with OpenAI, has developed a groundbreaking robot called "Figure 01" that can engage in full conversations and execute tasks based on verbal requests, even those that are ambiguous or context-dependent. This is made possible by connecting the robot to a multimodal AI model trained by OpenAI, which integrates language and vision.

The AI model processes the robot's entire conversation history, including images, enabling it to generate appropriate verbal responses and select the most suitable learned behaviors to carry out given commands. The robot's actions are controlled by visuomotor transformers that convert visual input into precise physical movements.

Apple’s new recipe to master multimodal AI

Apple's MM1 shows state-of-the-art (SOTA) language and vision capabilities. It was trained on a filtered dataset of 500 million text-image pairs from the web, including 10% text-only documents to improve language understanding.

The research team experimented with different configurations during training. They discovered that using an external pre-trained high-resolution image encoder improved visual recognition. Combining different image, text, and caption data ratios led to the best performance. Synthetic caption data also enhanced few-shot learning abilities.

The experiments confirm that a blend of image-caption, interleaved image-text, and text-only data is crucial for achieving SOTA few-shot results across multiple benchmarks.
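A data blend like this is typically implemented as weighted sampling over sources. The sketch below is an illustration, not Apple's pipeline. The 10% text-only share comes from the summary above, while the even 45/45 split of the remainder and the source names are assumptions made for the example.

```python
import random
from collections import Counter

# Mixing weights: 10% text-only as stated above; the 45/45 split is an assumption.
MIX = {
    "image_caption": 0.45,
    "interleaved_image_text": 0.45,
    "text_only": 0.10,
}

def sample_sources(n, mix=MIX, seed=0):
    """Pick which data source each of n training examples is drawn from."""
    rng = random.Random(seed)
    names = list(mix)
    return rng.choices(names, weights=[mix[s] for s in names], k=n)

counts = Counter(sample_sources(10_000))
for name in MIX:
    print(f"{name}: {counts[name] / 10_000:.1%}")
```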

Cerebras WSE-3: AI chip enabling 10x larger models than GPT-4

Cerebras Systems has unveiled its latest wafer-scale AI chip, the WSE-3. It boasts an incredible 4 trillion transistors, making it one of the most powerful AI chips on the market. The third-generation wafer-scale AI mega chip is twice as powerful as its predecessor while being power efficient. The chip's transistor density has increased by over 50 percent thanks to the latest manufacturing technology.

One of the most remarkable features of WSE-3 is its ability to enable AI models that are 10x larger than GPT-4 and Gemini models.

Apple acquires Canadian AI startup DarwinAI

DarwinAI has developed AI technology for visually inspecting components during manufacturing and serves customers in a range of industries. One of its core technologies, however, is making AI systems smaller and faster, which could help Apple as it focuses on running AI on devices rather than entirely in the cloud.

This under-the-radar acquisition comes ahead of Apple's big AI push in 2024. CEO Tim Cook has promised that Apple will “break new ground” in AI this year, and an announcement is expected as soon as the company’s Worldwide Developers Conference in June.

That's all for now!

Subscribe to The AI Edge and gain exclusive access to content enjoyed by professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other esteemed organizations.

Thanks for reading, and see you on Monday. 😊