AI Weekly Rundown (March 2 to March 8)

Major AI announcements from Google, Anthropic, Microsoft, Inflection, and more.

Hello Engineering Leaders and AI Enthusiasts!

Another eventful week in the AI realm. Lots of big news from huge enterprises.

In today’s edition:👀 Google’s ScreenAI can ‘see’ graphics like humans do

🐛 How AI ‘worms’ pose security threats in connected systems

🧠 New benchmarking method challenges LLMs' reasoning abilities

🏆 Anthropic’s Claude 3 Beats OpenAI’s GPT-4

🖼️ TripsoSR: 3D object generation from a single image in <1s

🛡️ Cloudflare's Firewall for AI protects LLMs from abuses🐋 Microsoft's Orca AI beats 10x bigger models in math

🎨 GPT-4V wins at turning designs into code

🎥 Haiper by DeepMind alums joins the AI video race

🗣️ Microsoft's NaturalSpeech makes AI sound human

🔍 Google’s search update targets AI-generated spam

🤖 Google's RT-Sketch teaches robots with doodles

🚀 Inflection-2.5: GPT4-like performance with 40% less compute

📱 Google’s new tool enables LLMs to run fully on-device

💡 GaLore: For memory-efficient pre-training & fine-tuning of LLMs

Let’s go!

Google’s ScreenAI can ‘see’ graphics like humans do

Google Research has introduced ScreenAI, a Vision-Language Model that can perform question-answering on digital graphical content like infographics, illustrations, and maps while also annotating, summarizing, and navigating UIs. The model combines computer vision (PaLI architecture) with text representations of images to handle these multimodal tasks.

Despite having just 4.6 billion parameters, ScreenAI achieves new state-of-the-art results on UI- and infographics-based tasks and new best-in-class performance on others, compared to models of similar size.

How AI ‘worms’ pose security threats in connected systems

Security researchers have created an AI "worm" called Morris II to showcase vulnerabilities in AI ecosystems where different AI agents are linked together to complete tasks autonomously.

The researchers tested the worm in a simulated email system using ChatGPT, Gemini, and other popular AI tools. The worm can exploit these AI systems to steal confidential data from emails or forward spam/propaganda without human approval. It works by injecting adversarial prompts that make the AI systems behave maliciously.

The research highlights risks if AI agents are given too much unchecked freedom to operate.

New benchmarking method challenges LLMs’ reasoning abilities

Researchers at Consequent AI have identified a "reasoning gap" in LLMs like GPT-3.5 and GPT-4. They introduced a new benchmarking approach called "functional variants," which tests a model's ability to reason instead of just memorize. This involves translating reasoning tasks like math problems into code that can generate unique questions requiring the same logic to solve.

When evaluating several state-of-the-art models, the researchers found a significant gap between performance on known problems from benchmarks versus new problems the models had to reason through. The gap was 58-80%, indicating the models do not truly understand complex problems but likely just store training examples.

Anthropic’s Claude 3 beats OpenAI’s GPT-4

Anthropic has launched Claude 3, a new family of models that has set new industry benchmarks across a wide range of cognitive tasks. The family comprises three state-of-the-art models in ascending order of cognitive ability: Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus. Each model provides an increasing level of performance, and you can choose the one according to your intelligence, speed, and cost requirements.

Opus and Sonnet are now available via claude.ai and the Claude API in 159 countries, and Haiku will join that list soon.

Claude 3 also displays solid visual processing capabilities and can process a wide range of visual formats, including photos, charts, graphs, and technical diagrams. Compared to Claude 2.1, Claude 3 exhibits 2x accuracy and precision for responses and correct answers.

TripsoSR: 3D object generation from a single image in <1s

Stability AI has introduced a new AI model named TripsoSR in partnership with Trip AI. The model enables high-quality 3D object generation from a single in less than a second. It runs under low inference budgets (even without a GPU) and is accessible to many users.

When tested on an Nvidia A100, it generates draft-quality 3D outputs (textured meshes) in around 0.5 seconds, outperforming other open image-to-3D models such as OpenLRM.

Cloudflare's Firewall for AI protects LLMs from abuses

Cloudflare has released a Firewall for AI, a protection layer you can deploy in front of LLMs to identify abuses before they reach the models. While the traditional web and API vulnerabilities also apply to the LLM world, Firewall for AI is an advanced-level Web Application Firewall (WAF) designed explicitly for LLM protection and placed in front of applications to detect vulnerabilities and provide visibility to model owners.

It is deployed like a traditional WAF, where every API request with an LLM prompt is scanned for patterns and signatures of possible attacks.

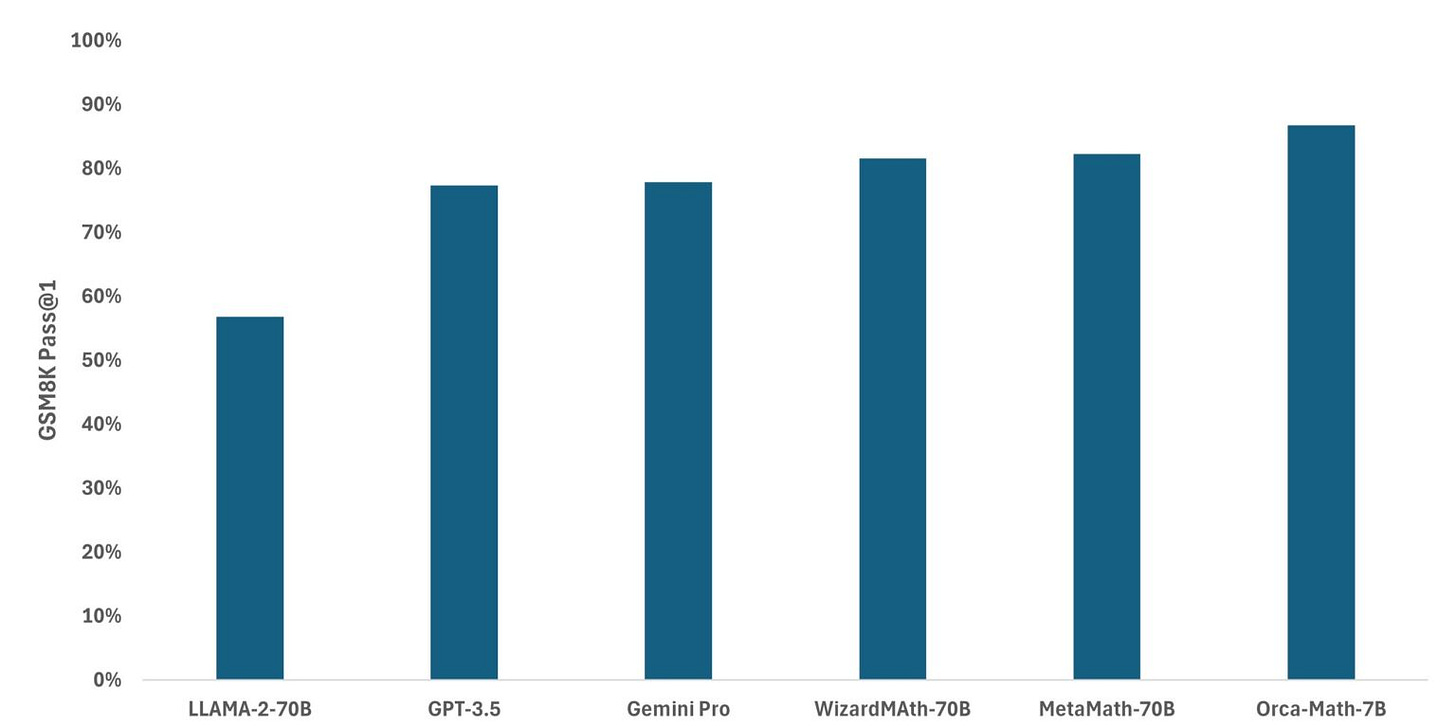

Microsoft's Orca AI beats 10x bigger models in math

Microsoft's Orca team has developed Orca-Math, an AI model that excels at solving math word problems despite its compact size of just 7 billion parameters. It outperforms models 10x larger on the GSM8K benchmark, achieving 86.81% accuracy without relying on external tools or tricks. The model's success is attributed to training on a high-quality synthetic dataset of 200k math problems created using multi-agent flows and an iterative learning process involving AI teacher and student agents.

GPT-4V wins at turning designs into code

GenAI can enable a new paradigm of front-end development where LLMs directly convert visual designs into code implementation. New research formalizes this as “Design2Code” task and conducts comprehensive benchmarking. It also:

Introduces Design2Code benchmark consisting of diverse real-world webpages as test examples

Develops comprehensive automatic metrics that complement human evaluations

Proposes new multimodal prompting methods that improve over direct prompting baselines.

Finetunes open-source Design2Code-18B model that matches the performance of Gemini Pro Vision on both human and automatic evaluation

It also finds that 49% of the GPT-4V-generations webpages were good enough to replace the original references, while 64% were even better designed than the original references.

DeepMind alums' Haiper joins the AI video race

DeepMind alums Yishu Miao and Ziyu Wang have launched Haiper, a video generation tool powered by their own AI model, and offers a free website where users can generate short videos using text prompts.

The startup has raised $19.2 million in funding and focuses on improving its AI model to deliver high-quality, realistic videos. They aim to build a core video generation model that can be offered to developers and address challenges like the "uncanny valley" problem in AI-generated human figures.

Enjoying the weekly updates?

Refer your pals to subscribe to our newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Microsoft's NaturalSpeech makes AI sound human

Microsoft and its partners have created NaturalSpeech 3, a new Text-to-Speech system that makes computer-generated voices sound more human. Powered by FACodec architecture and factorized diffusion models, NaturalSpeech 3 breaks down speech into different parts, like content, tone, and sound quality, to create a natural-sounding speech that fits specific prompts, even for voices it hasn't heard before.

NaturalSpeech 3 works better than other voice tech in terms of quality, similarity, tone, and clarity. It keeps getting better as it learns from more data. This research is a big step towards a future where talking to computers is as easy as chatting with friends.

Google’s search update targets AI-generated spam

Google has announced significant changes to its search ranking algorithms to reduce low-quality and AI-generated spam content in search results. The March update targets three main spam practices: mass distribution of unhelpful content, abusing site reputation to host low-quality content, and repurposing expired domains with poor content.

While Google is not devaluing all AI-generated content, it aims to judge content primarily on its usefulness to users. Most of the algorithm changes are effective immediately, though sites abusing their reputation have a 60-day grace period to change their practices.

Google's RT-Sketch teaches robots with doodles

Google has introduced RT-Sketch, a new approach to teaching robots tasks using simple sketches. Users can quickly draw a picture of what they want the robot to do, like rearranging objects on a table. It focuses on the essential parts of the sketch, ignoring distracting details.

Test results show that RT-Sketch performs comparably to image or language-conditioned agents in simple settings with written instructions on straightforward tasks. However, it did better when instructions were confusing or there were distracting objects present. It can also interpret and act upon sketches with varying levels of detail.

Inflection 2.5: A new era of personal AI is here!

Inflection.ai, the company behind the personal AI app Pi, has introduced Inflection-2.5, an upgraded LLM that competes with the likes of GPT-4 and Gemini. The upgrade offers enhanced capabilities and improved performance, combining raw intelligence with the company's signature personality and empathetic fine-tuning.

This upgrade has made significant progress in coding and mathematics. Inflection-2.5 can empower Pi users with a more intelligent and empathetic AI experience.

Google announces LLMs on device with MediaPipe

Google’s new experimental release, the MediaPipe LLM Inference API, allows LLMs to run fully on-device across platforms. This is a significant development, considering LLMs' memory and computing demands are over 100x larger than traditional on-device models.

It is designed to streamline on-device LLM integration for web developers and supports Web, Android, and iOS platforms. It offers several key features and optimizations that enable on-device AI, such as new operations, quantization, caching, and weight sharing. Developers can now run LLMs on devices like laptops and phones using the MediaPipe LLM Inference API.

GaLore: A new method for memory-efficient LLM training

Researchers have developed a new technique called Gradient Low-Rank Projection (GaLore) to significantly reduce memory usage while training LLMs. Tests have shown that GaLore achieves results similar to full-rank training while reducing optimizer state memory usage by up to 65.5% when pre-training large models like LLaMA.

It also allows pre-training a 7 billion parameter model from scratch on a single 24GB consumer GPU without extra techniques. GaLore is optimizer-independent and can be used with other techniques like 8-bit optimizers to save additional memory.

That's all for now!

Subscribe to The AI Edge and gain exclusive access to content enjoyed by professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other esteemed organizations.

Thanks for reading, and see you on Monday. 😊