AI Weekly Rundown (June 3 to June 9)

Google, Meta, Apple, Salesforce, Argilla, Tafi, and more making significant AI strides.

Hello, Engineering Leaders and AI enthusiasts,

Another week with small but impactful AI developments. Here are the highlights from last week.

In today’s edition:

🖼️Google simplifies text-to-image AI

🧠Thought Cloning - AI agents learning to think

💡ReWOO achieves 5x token efficiency

🧩Meta's AI segmentation game changer

📈Argilla Feedback promises to boost performance of LLMs

🆕Apple enters the AI race with new features

📷Google’s AI gives Visual Captions to meetings

🤖GGML for AI training at the edge

🚀Tafi launches text to 3D character AI engine

🔍MeZO redefining LM training - Less memory, better results

🎯Google Bard goes geek

🖥️CodeTF transforming code intelligence

⚡️Google DeepMind AI breaks records

🌍Google introduces SQuId in 42 languages

🔁Meta plans to put AI everywhere on its platforms

Let’s go!

Google simplifies text-to-image AI

Diffusion models let you create amazing images given the right prompt (often detailed). But some things are hard to express in text, like where objects should go or how big they should be. How can we get this kind of control?

Google Research and UC Berkeley introduced self-guidance, a zero-shot approach that allows for direct control of the shape, position, and appearance of objects in generated images.

It leverages the rich internal representations learned by pre-trained text-to-image diffusion models – namely, intermediate activations and attention – to steer attributes of entities and interactions between them. Moreover, the method can also be used for editing real images.
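To make "steering with attention" a little more concrete, here is a minimal sketch, not the authors' implementation. It assumes an object's attention map is available as a small 2D grid, reads off the object's position as the map's centre of mass, and scores how far that is from where we want the object. The names `centroid` and `position_loss` are hypothetical; in a real pipeline a term like this would be differentiated and used to nudge the sampling process.

```python
def centroid(attn):
    """Centre of mass of a 2D attention map as (row, col), each scaled to [0, 1]."""
    h, w = len(attn), len(attn[0])
    total = sum(sum(row) for row in attn)
    r = sum(i * v for i, row in enumerate(attn) for v in row) / total
    c = sum(j * v for row in attn for j, v in enumerate(row)) / total
    return r / (h - 1), c / (w - 1)


def position_loss(attn, target):
    """L1 distance between where the object is attended and where we want it."""
    r, c = centroid(attn)
    return abs(r - target[0]) + abs(c - target[1])


# Toy 3x3 attention map: all attention sits in the bottom-right cell.
attn = [
    [0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0],
]

loss_if_wanted_top_left = position_loss(attn, (0.0, 0.0))
loss_if_wanted_bottom_right = position_loss(attn, (1.0, 1.0))
```

The loss is zero when the object already sits at the target and grows as it drifts away, which is the kind of signal a guidance term needs.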

Thought Cloning - AI agents learning to think

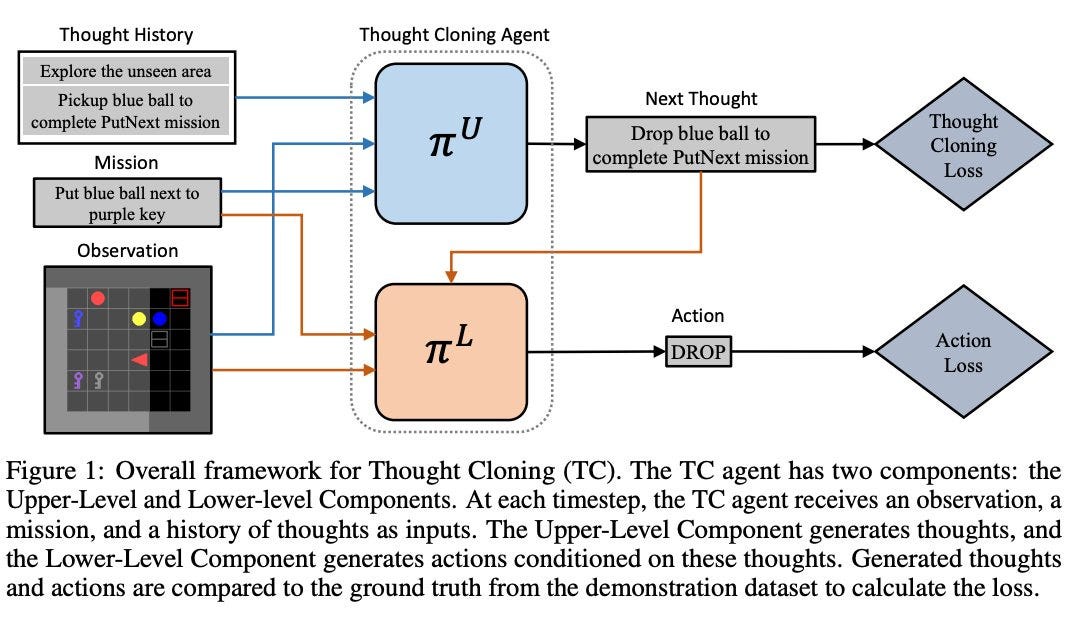

Researchers propose a new approach called Thought Cloning to enhance the cognitive abilities of AI agents in reinforcement learning. Instead of just copying what humans do, it tries to understand the thoughts behind those actions. The researchers report improved performance and adaptability, particularly in unfamiliar situations.

Thought Cloning also benefits AI safety, interpretability, and debugging, allowing for easier identification and resolution of issues while creating more powerful and safer AI agents.

ReWOO achieves 5x token efficiency

Researchers have proposed ReWOO (Reasoning WithOut Observation), a modular paradigm to reduce token consumption. The idea behind ReWOO is to separate the reasoning process of the LLM from external observations, which would help reduce the token consumption significantly. It also minimizes the computational load associated with repeated prompts.

ReWOO achieves 5x token efficiency and 4% accuracy improvement on HotpotQA, a multi-step reasoning benchmark.
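The separation of reasoning from observations can be sketched as three stages: a planner that writes the full plan up front with placeholders, a worker that fills the placeholders by calling tools, and a solver that reads everything once. This is an illustrative toy under stated assumptions, not the paper's code: `planner` and `solver` stand in for LLM calls, and the `lookup` tool and `FACTS` table are hypothetical.

```python
import re


def planner(question):
    # Stand-in for ONE LLM call that writes the whole plan in advance,
    # using #E1, #E2, ... as placeholders for evidence not yet observed.
    return [
        ("#E1", "lookup", "capital of France"),
        ("#E2", "lookup", "population of #E1"),
    ]


# Hypothetical toy tool backed by a dict instead of a real search engine.
FACTS = {
    "capital of France": "Paris",
    "population of Paris": "about 2.1 million",
}
TOOLS = {"lookup": lambda query: FACTS.get(query, "unknown")}


def worker(plan):
    # Runs the tools without calling the LLM again between steps.
    evidence = {}
    for slot, tool, arg in plan:
        # Substitute evidence gathered by earlier steps into this step's input.
        arg = re.sub(r"#E\d+", lambda m: evidence[m.group(0)], arg)
        evidence[slot] = TOOLS[tool](arg)
    return evidence


def solver(question, plan, evidence):
    # Stand-in for a second LLM call that sees the plan and all evidence at once.
    return evidence[plan[-1][0]]


question = "What is the population of the capital of France?"
plan = planner(question)
evidence = worker(plan)
answer = solver(question, plan, evidence)
```

Because the model is not re-prompted with the growing history after every tool call, the prompt is paid for roughly twice rather than once per step, which is where the token savings come from.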

Meta's AI segmentation game changer

Researchers from ETH Zurich and HKUST have developed HQ-SAM (High-Quality Segment Anything Model), a new model that improves the segmentation capabilities of Meta’s existing SAM. SAM struggles to segment complex objects accurately, despite being trained with 1.1 billion masks. HQ-SAM is trained on a dataset of 44,000 fine-grained masks from various sources, achieving impressive results on nine segmentation datasets across different tasks.

HQ-SAM retains SAM's prompt design, efficiency, and zero-shot generalizability while requiring minimal additional parameters and computation. Training HQ-SAM on the provided dataset takes only 4 hours on 8 GPUs.

Argilla Feedback promises to boost performance of LLMs

Argilla Feedback is an open-source platform designed to collect human feedback and improve the performance and safety of LLMs at the enterprise level. It’s a critical solution for fine-tuning and Reinforcement Learning from Human Feedback (RLHF).

It simplifies the collection of human and machine feedback, making the refinement and evaluation of LLMs more efficient. The image below illustrates the different stages of training and fine-tuning LLMs, emphasizing where human feedback is incorporated and the expected outcomes at each stage.

Apple enters the AI race with new features

Apple announced a host of updates at WWDC 2023. Yet the word “AI” was not used even once, despite today’s pervasive, hype-filled atmosphere around AI. The phrase “machine learning” was used a couple of times (and AI is nothing but machine learning). Still, here are a few announcements Apple made that use AI as the underlying technology.

Apple Vision Pro, a revolutionary spatial computer that seamlessly blends digital content with the physical world. It uses advanced ML techniques.

Upgraded Autocorrect in iOS 17 that is powered by a transformer language model for improved prediction capabilities.

Improved Dictation in iOS 17 that leverages a new speech recognition model to make it even more accurate.

Live Voicemail that turns voicemail audio into text on the fly, which is powered by a neural engine.

Personalized Volume, which uses ML to understand environmental conditions and listening preferences over time to automatically fine-tune the media experience.

Journal, a new app for users to reflect and practice gratitude, uses on-device ML for personalized suggestions to inspire entries.

Google’s AI gives Visual Captions to meetings

What if AI could show context-relevant visuals in online meetings? Google Research introduced a system for real-time visual augmentation of verbal communication called Visual Captions. It uses verbal cues to augment synchronous video communication with interactive visuals on-the-fly.

Researchers fine-tuned an LLM to proactively suggest relevant visuals in open-vocabulary conversations using a dataset curated for this purpose. The system is also robust against typical mistakes that often appear in real-time speech-to-text transcription (as seen above). Plus, Visual Captions is open-sourced.

GGML for AI training at the edge

GGML is a C library that provides a set of machine learning tools and defines low-level ML primitives, such as tensor types. It introduces a binary format designed for distributing large language models (LLMs). This format allows LLMs to be efficiently shared and utilized on various hardware devices.

GGML leverages a technique called "quantization," which enables large language models to run effectively on consumer-grade hardware. It has also been applied to Meta’s LLaMA for fast, local inference and to OpenAI's Whisper.
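For readers new to quantization, here is a simplified sketch of the general idea: store one floating-point scale per block of weights plus a small integer per weight. It assumes a symmetric 4-bit scheme and is not GGML's actual file layout or kernel code; `quantize_block` and `dequantize_block` are hypothetical helper names.

```python
def quantize_block(block, bits=4):
    """Map a block of floats to small signed integers plus one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit symmetric quantization
    amax = max(abs(x) for x in block)
    scale = amax / qmax if amax else 1.0
    # Round each weight to the nearest representable step and clamp to range.
    q = [max(-qmax, min(qmax, round(x / scale))) for x in block]
    return scale, q


def dequantize_block(scale, q):
    """Recover approximate floats from the stored integers and scale."""
    return [scale * v for v in q]


weights = [0.7, -0.3, 0.1, 0.0]
scale, q = quantize_block(weights)
restored = dequantize_block(scale, q)
```

Each weight now needs only a few bits instead of 16 or 32, at the cost of a rounding error of at most half a step per weight. That trade is what lets large models fit in consumer-grade memory.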

Tafi launches text to 3D character AI engine

Tafi announced a groundbreaking text-to-3D character engine that will transform how artists and developers create high-quality 3D characters. This innovative technology makes it easier and faster than ever before to bring ideas to life by converting text input into 3D characters.

It will help:

Create top-notch 3D characters quickly and effortlessly using plain text.

Generate billions of unique variations of 3D characters.

Easily export the character directly into Blender, Unreal, or Unity.

In the upcoming update, Tafi will enhance its compatibility with popular game engines and 3D software applications, such as adding support for NVIDIA's Omniverse.

MeZO redefining LM training - Less memory, better results

MeZO, a memory-efficient zeroth-order optimizer, adapts the classical zeroth-order SGD method to operate in place, thereby fine-tuning language models with the same memory footprint as inference.

With a single A100 80GB GPU, MeZO can train a 30-billion parameter OPT.

Achieves comparable performance to fine-tuning with backpropagation across multiple tasks, with up to 12x memory reduction.

Can effectively optimize non-differentiable objectives (e.g., maximizing accuracy or F1).

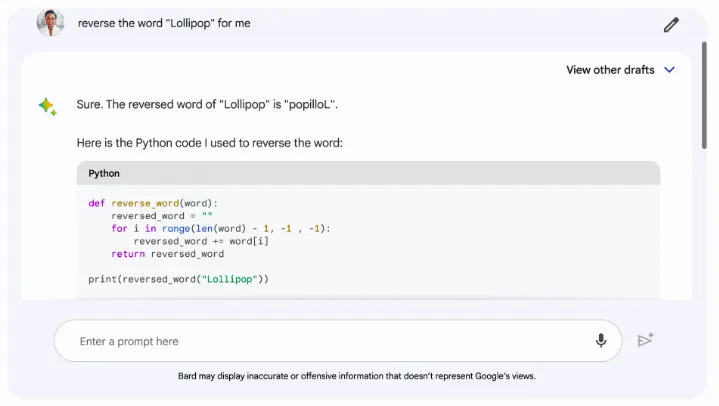
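The in-place trick is easier to see in code. Below is a minimal sketch of a zeroth-order SGD step on a toy quadratic loss, assuming the random direction is regenerated from a seed each time it is needed, so it never has to be stored. It is an illustration of the idea rather than the authors' implementation; `perturb`, `mezo_step`, and the toy `loss` are hypothetical names.

```python
import random


def perturb(theta, seed, scale):
    # Regenerate the same random direction z from the seed and add scale * z
    # to the parameters in place, so z itself is never kept in memory.
    rng = random.Random(seed)
    for i in range(len(theta)):
        theta[i] += scale * rng.gauss(0.0, 1.0)


def mezo_step(theta, loss_fn, lr=0.05, eps=1e-3, seed=0):
    perturb(theta, seed, +eps)          # theta + eps * z
    loss_plus = loss_fn(theta)
    perturb(theta, seed, -2 * eps)      # theta - eps * z
    loss_minus = loss_fn(theta)
    perturb(theta, seed, +eps)          # back to the original theta
    # Two forward passes give an estimate of the gradient projected onto z.
    projected_grad = (loss_plus - loss_minus) / (2 * eps)
    perturb(theta, seed, -lr * projected_grad)  # theta -= lr * g * z


def loss(theta):
    # Toy objective with its minimum at theta = [3, 3].
    return sum((t - 3.0) ** 2 for t in theta)


theta = [0.0, 0.0]
start_loss = loss(theta)
for step in range(500):
    mezo_step(theta, loss, seed=step)
end_loss = loss(theta)
```

Only forward evaluations of the loss are used and no gradients or activations are stored, which is why the memory footprint matches inference. It is also why the objective does not need to be differentiable.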

Google Bard goes geek

Google launched two improvements for Bard. First, Bard can now respond more accurately to mathematical tasks, coding questions, and string manipulation prompts due to a new technique called “implicit code execution”.

In this update, Google combined the capabilities of LLMs (System 1) and traditional code (System 2) to help improve the accuracy of Bard’s responses.

Second, Bard has a new export action to Google Sheets. So when Bard generates a table in its response — like if you ask it to “create a table for volunteer sign-ups for my animal shelter” — you can now export it right to Sheets.
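As a rough picture of what implicit code execution means, the toy below routes a computational prompt to ordinary code instead of letting a language model guess the answer. Everything here is a hypothetical stand-in: in the real system the model recognizes such prompts and writes the code itself, whereas this sketch hard-codes a single pattern, and `llm_guess` is only a placeholder.

```python
import re


def llm_guess(prompt):
    # Placeholder for a free-text answer produced by a language model.
    return "(model-generated text)"


def answer(prompt):
    # If the prompt looks like a string-manipulation task, compute the result
    # with code, which is exact, instead of relying on next-token prediction.
    match = re.fullmatch(r"reverse the word (\w+)", prompt.strip(), flags=re.IGNORECASE)
    if match:
        return match.group(1)[::-1]
    return llm_guess(prompt)


reversed_word = answer("Reverse the word Lollipop")
fallback = answer("Write a haiku about spring")
```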

CodeTF transforming code intelligence

Salesforce AI Research introduces CodeTF, an open-source library that utilizes Transformer-based models to enhance code intelligence. It simplifies developing and deploying robust models for software engineering tasks by offering a modular and extensible framework. It aims to facilitate easy integration of SOTA CodeLLMs into real-world applications.

CodeTF provides access to pre-trained Code Language Models, supports popular code benchmarks, and includes standardized interfaces for efficient training and serving. With language-specific parsers and utility functions, it enables the extraction of code attributes. By bridging the gap between generative AI and software engineering, CodeTF serves as a comprehensive solution for developers, researchers, and practitioners.

Google’s DeepMind AI breaks records with a 70% faster sorting algorithm

Google DeepMind has introduced AlphaDev, an AI system that uses reinforcement learning to uncover improved computer science algorithms. The sorting routines AlphaDev discovered for C++ are up to 70% faster than the current best for short sequences, a notable gain in computational efficiency.

AlphaDev discovered faster algorithms by taking a different approach than traditional methods, focusing on the computer's assembly instructions rather than refining existing algorithms. It ventured into unexplored territory, going beyond the usual path to achieve its breakthroughs.
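To give a feel for the kind of routine involved, here is a fixed-size sort for three values built from compare-and-swap steps. It is a textbook sorting network written in Python for readability, not the instruction sequence AlphaDev found; the helper names are hypothetical. Small routines like this sit at the bottom of larger sort functions, so removing even one low-level instruction from them can matter.

```python
from itertools import permutations


def compare_exchange(values, i, j):
    # Put the smaller of the two values at index i and the larger at index j.
    if values[i] > values[j]:
        values[i], values[j] = values[j], values[i]


def sort3(a, b, c):
    # A fixed sequence of three compare-exchange steps sorts any three values.
    values = [a, b, c]
    compare_exchange(values, 0, 1)
    compare_exchange(values, 1, 2)
    compare_exchange(values, 0, 1)
    return tuple(values)


# Check the network against every ordering of three distinct values.
all_sorted = all(sort3(*p) == (1, 2, 3) for p in permutations([1, 2, 3]))
```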

Google introduces SQuId (Speech Quality Identification) in 42 languages

Google introduces SQuId (Speech Quality Identification), a regression model with 600M parameters. It is trained on over a million quality ratings across 42 languages and tested in 65 languages.

SQuId complements human ratings, providing a more efficient and standardized way to evaluate many languages, and is the largest published effort of this type to date.

Meta plans to put AI everywhere on its platforms

Meta has announced plans to integrate generative AI into its platforms, including Facebook, Instagram, WhatsApp, and Messenger. The company shared a sneak peek of AI tools it was building, including ChatGPT-like chatbots planned for Messenger and WhatsApp that could converse using different personas. It will also leverage its image generation model to let users modify images and create stickers via text prompts.

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’ specifically designed for Engineering Leaders and AI enthusiasts.

Thanks for reading, and see you Monday.