AI Weekly Rundown (June 29 to July 5)

Major AI announcements from Google, Apple, xAI, Meta, ElevenLabs, and more.

Hello Engineering Leaders and AI Enthusiasts!

Another eventful week in the AI realm. Lots of big news from huge enterprises.

In today’s edition:

🚀 Google announces advancements in Vertex AI models

📊 LMSYS's new Multimodal Arena compares top VLMs

👓 Apple's Vision Pro gets an AI upgrade

🧠 JARVIS-inspired Grok 2 aims to answer any user query

🍏 Apple unveils a public demo of its ‘4M’ AI model

🛒 Amazon hires Adept’s top executives to build an AGI team

⚡ Meta’s 3D Gen creates 3D assets at lightning speed

🔍 Perplexity AI upgrades Pro Search with more advanced problem-solving

🔒 The first Gen AI framework that keeps your prompts always encrypted

🎯 New AI system decodes brain activity with near perfection

🗣️ ElevenLabs has exciting AI voice updates

🤖 A French AI startup launches ‘real-time’ AI voice assistant

Let’s go!

Google announces advancements in Vertex AI models

Google has rolled out significant improvements to its Vertex AI platform, including the general availability of Gemini 1.5 Flash with a massive 1 million-token context window. Also, Gemini 1.5 Pro now offers an industry-leading 2 million-token context capability. Google is introducing context caching for these Gemini models, slashing input costs by 75%.

Moreover, Google launched Imagen 3 in preview and added third-party models like Anthropic's Claude 3.5 Sonnet on Vertex AI.

They've also made Grounding with Google Search generally available and announced a new service for grounding AI agents with specialized third-party data. Plus, they've expanded data residency guarantees to 23 countries, addressing growing data sovereignty concerns.
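For a rough sense of what the 75% context-caching discount mentioned above could mean for a bill, here is a minimal Python sketch. The per-token price, the token counts, and the function name are all hypothetical, and the sketch ignores any cache storage or other fees, so treat it as back-of-the-envelope arithmetic rather than Google's actual pricing formula.

```python
def estimate_input_cost(total_tokens, cached_tokens, price_per_million, cache_discount=0.75):
    """Estimate the input cost (in dollars) of one request.

    Assumptions (not from Google's pricing docs):
    - price_per_million is a hypothetical list price per 1M input tokens
    - cached tokens are billed at (1 - cache_discount) of that price
    - cache storage and any other fees are ignored
    """
    if not 0 <= cached_tokens <= total_tokens:
        raise ValueError("cached_tokens must be between 0 and total_tokens")

    uncached_tokens = total_tokens - cached_tokens
    cached_price = price_per_million * (1 - cache_discount)
    cost = uncached_tokens * price_per_million + cached_tokens * cached_price
    return cost / 1_000_000


if __name__ == "__main__":
    # Hypothetical request: 1M-token prompt, 900K of it reused from cache,
    # at a made-up price of $1.00 per million input tokens.
    without_cache = estimate_input_cost(1_000_000, 0, 1.00)
    with_cache = estimate_input_cost(1_000_000, 900_000, 1.00)
    print(f"without caching: ${without_cache:.3f}")
    print(f"with caching:    ${with_cache:.3f}")
```

The point of the sketch is that the overall saving depends on how much of each prompt is actually reused: the 75% discount applies only to the cached portion, so a request that is 90% cached saves less than 75% in total.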

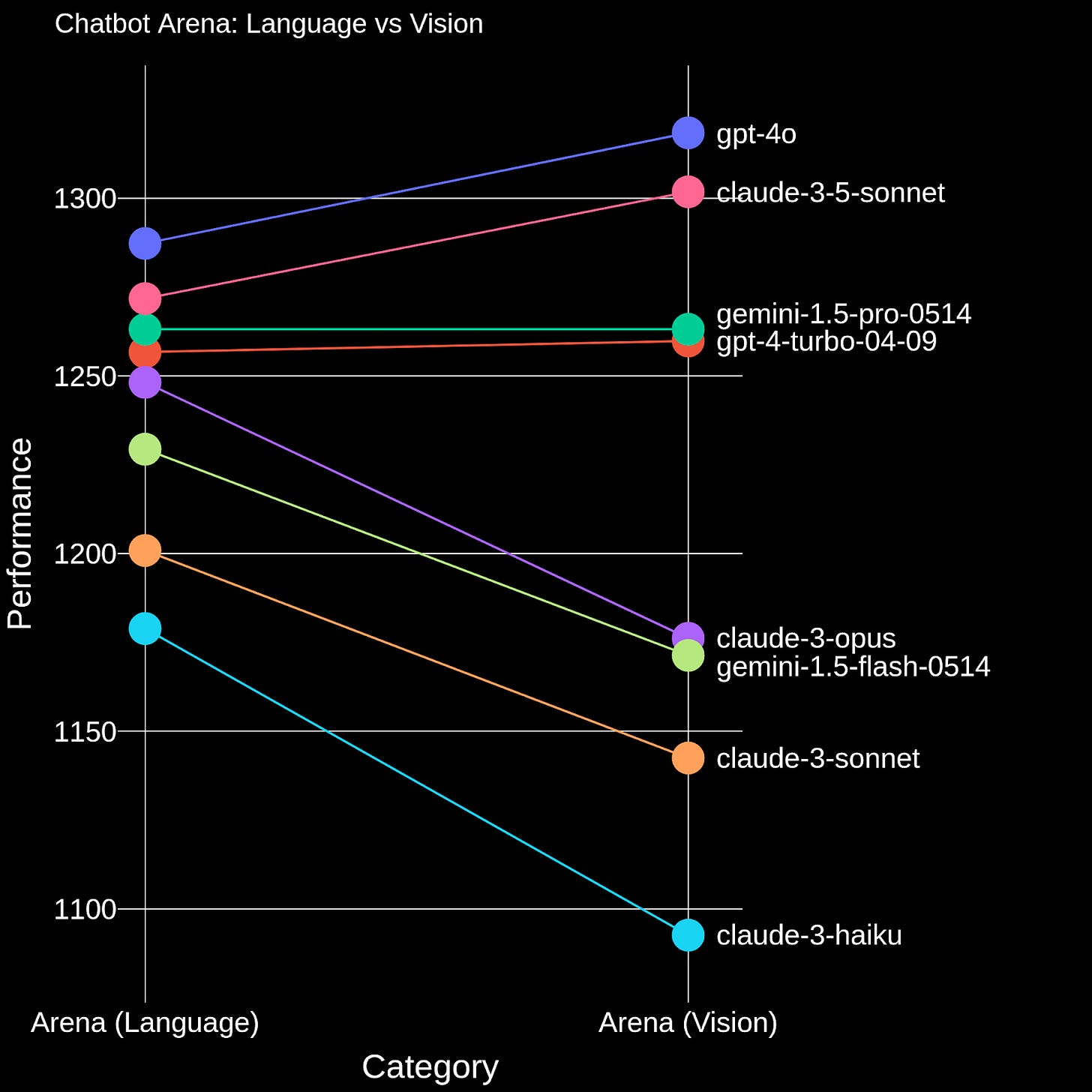

LMSYS's new Multimodal Arena compares top AI models' visual processing abilities

LMSYS Org added image recognition to Chatbot Arena to compare vision language models (VLMs), collecting over 17,000 user preferences in just two weeks. OpenAI's GPT-4o and Anthropic's Claude 3.5 Sonnet outperformed other models in image recognition. Also, the open-source LLaVA-v1.6-34B performed comparably to some proprietary models.

These AI models tackle diverse tasks, from deciphering memes to solving math problems with visual aids. However, the examples provided show that even top models can stumble when interpreting complex visual information or handling nuanced queries.

Apple's Vision Pro gets an AI upgrade

Apple is reportedly working to bring its Apple Intelligence features to the Vision Pro headset, though not this year. Meanwhile, Apple is tweaking its in-store Vision Pro demos, allowing potential buyers to view personal media and try a more comfortable headband. Apple's main challenge is adapting its AI features to a mixed-reality environment.

The demo changes are intended to boost sales of the pricey headset. Apple is also exploring the possibility of monetizing AI features through subscription services like "Apple Intelligence+."

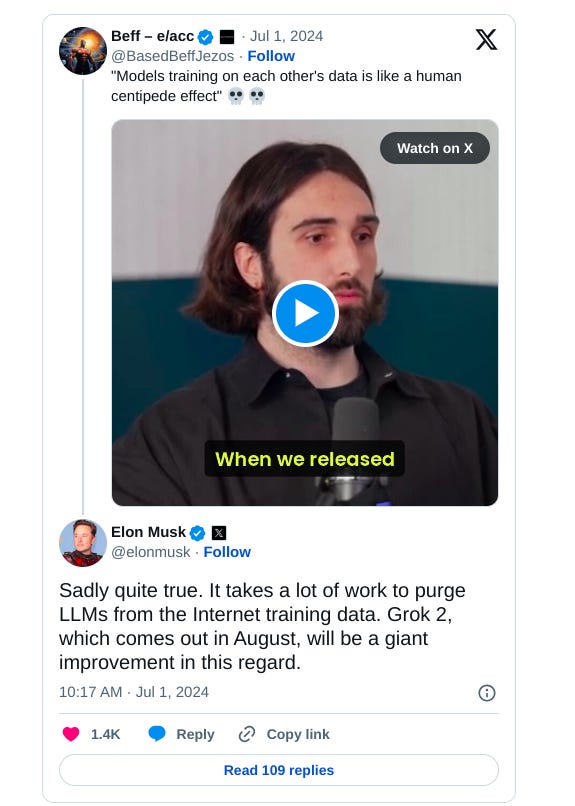

JARVIS-inspired Grok 2 aims to answer any user query

Elon Musk has announced release timelines for two new AI assistants from xAI. The first, Grok 2, will launch in August. Musk says Grok 2 draws inspiration from both JARVIS in Iron Man and The Hitchhiker's Guide to the Galaxy, and that it aims to answer virtually any user query. This ambitious goal is fueled by xAI's focus on "purging" LLM datasets used for training.

Musk also revealed that an even more powerful version, Grok 3, is planned for release by the end of the year. Grok 3 will leverage the processing power of 100,000 Nvidia H100 GPUs, potentially pushing the boundaries of AI performance even further.

Apple unveils a public demo of its ‘4M’ AI model

Apple and the Swiss Federal Institute of Technology Lausanne (EPFL) have released a public demo of the ‘4M’ AI model on Hugging Face. The 4M (Massively Multimodal Masked Modeling) model can process and generate content across multiple modalities, such as creating images from text, detecting objects, and manipulating 3D scenes using natural language inputs.

While companies like Microsoft and Google have been making headlines with their AI partnerships and offerings, Apple has been steadily advancing its AI capabilities. The public demo of the 4M model suggests that Apple is now positioning itself as a significant player in the AI industry.

Amazon hires Adept’s top executives to build an AGI team

Amazon is hiring the co-founders of the AI startup Adept, including its CEO, along with several other key employees. CEO David Luan will join Amazon's AGI autonomy group, which is led by Rohit Prasad. Prasad is spearheading a unified push to accelerate Amazon's AI progress across divisions like Alexa and AWS.

Amazon is consolidating its AI projects to develop a more advanced LLM to compete with OpenAI and Google's top offerings. This unified approach leverages the company's collective resources to accelerate progress in AI capabilities.

Meta’s 3D Gen creates 3D assets at lightning speed

Meta has introduced Meta 3D Gen, a new state-of-the-art, fast pipeline for text-to-3D asset generation. It offers 3D asset creation with high prompt fidelity and high-quality 3D shapes and textures in less than a minute.

According to Meta, the process is 3 to 10 times faster than existing solutions. The research paper adds that professional 3D artists preferred 3D Gen's output a majority of the time over industry alternatives, particularly for complex prompts, and reports speedups ranging from 3× to 60×.

A significant feature of 3D Gen is its support for physically based rendering (PBR), which is necessary for relighting 3D assets in real-world applications.

Perplexity upgrades Pro Search

Perplexity AI has improved Pro Search to tackle more complex queries, perform advanced math and programming computations, and deliver even more thoroughly researched answers. Everyone can use Pro Search five times every four hours for free, and Pro subscribers have unlimited access.

Perplexity suggests the upgraded Pro Search “can pinpoint case laws for attorneys, summarize trend analysis for marketers, and debug code for developers—and that’s just the start”. It can empower all professions to make more informed decisions.

The first Gen AI framework that keeps your prompts always encrypted

Edgeless Systems introduced Continuum AI, the first generative AI framework that keeps prompts encrypted at all times with confidential computing by combining confidential VMs with NVIDIA H100 GPUs and secure sandboxing.

The Continuum technology has two main security goals: protecting user data and protecting AI model weights against the infrastructure, the service provider, and others. Edgeless Systems is also collaborating with NVIDIA to help businesses across sectors confidently integrate AI into their operations.

New AI system decodes brain activity with near perfection

Researchers have developed an AI system that can create remarkably accurate reconstructions of what someone is looking at based on recordings of their brain activity.

In previous studies, the team recorded brain activity using a functional MRI (fMRI) scanner and implanted electrode arrays. They have now reanalyzed the data from these studies using an improved AI system that can learn which parts of the brain it should pay the most attention to.

As a result, some of the reconstructed images were remarkably close to the images that the macaque monkey in the study saw.

ElevenLabs has exciting AI voice updates

ElevenLabs has partnered with estates of iconic Hollywood stars to bring their voices to the Reader App. Judy Garland, James Dean, Burt Reynolds, and Sir Laurence Olivier are now part of the library of voices on the Reader App.

It has also introduced Voice Isolator. This tool removes unwanted background noise and extracts crystal-clear dialogue from any audio to make your next podcast, interview, or film sound like it was recorded in the studio. It will be available via API in the coming weeks.

French AI startup launches ‘real-time’ AI voice assistant

A French AI startup, Kyutai, has launched a new ‘real-time’ AI voice assistant named Moshi. It can listen and speak simultaneously, and it can express 70 different emotions and speaking styles, ranging from whispers to accented speech.

Kyutai claims Moshi is the first real-time voice AI assistant, with a latency of 160ms. You can try it via Hugging Face. It will be open-sourced for research in the coming weeks.

That's all for now!

Subscribe to The AI Edge and gain exclusive access to content enjoyed by professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other esteemed organizations.

Thanks for reading, and see you on Monday. 😊