AI Weekly Rundown (June 24 to June 30)

News from Microsoft, Google, Baidu, Unity, Salesforce, Databricks, Meta, and more.

Hello, Engineering Leaders and AI Enthusiasts,

Another eventful week in the AI realm. Lots of big news from huge enterprises.

In today’s edition:

⚡️ Microsoft ZeRO++: Unmatched efficiency for LLM training

📦 RepoFusion: Training code models to understand your repository

🏃♂️ MotionGPT: A versatile text-to-motion AI

🎨 DragDiffusion: Giving Diffusion models interactive point-based image editing

🧠 Google’s new pgvector support powers AI applications

📊 Verge polled 2k people about using AI

🏆 Baidu’s Ernie 3.5 beat ChatGPT on multiple metrics

💡 Unity's game-changing AI products for game development

🤖 Google DeepMind's upcoming chatbot to rival ChatGPT

🚀 Salesforce’s XGen can replace Meta’s LLaMA

🔥 Databricks launches LakehouseIQ and Lakehouse AI tools

🎯 Gen AI is now a bankable skill backed by industry titans

🖼️ AI converts brain EEG signals to HQ images

🔍 Meta discloses AI behind Facebook and Instagram recommendations

🌟 OpenFlamingo V2 launched 5 newly trained multimodal models

Let’s go!

Microsoft ZeRO++: Unmatched efficiency for LLM training

Training large models requires considerable memory and computing resources across hundreds or thousands of GPU devices. Efficiently leveraging these resources requires a complex system of optimizations to:

1) Partition the models into pieces that fit into the memory of individual devices

2) Efficiently parallelize computing across these devices

But training on many GPUs results in a small per-GPU batch size, which requires frequent communication. And training on low-end clusters, where cross-node network bandwidth is limited, results in high communication latency.

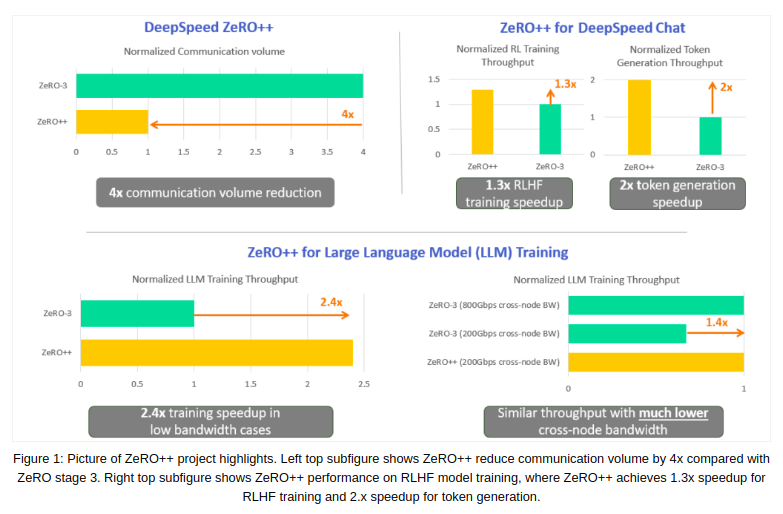

To address these issues, Microsoft Research has introduced three communication volume reduction techniques, collectively called ZeRO++.

It reduces total communication volume by 4x compared with ZeRO without impacting model quality, enabling better throughput even at scale.
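To see where a 4x figure can come from, here is a rough back-of-the-envelope sketch. The per-step volumes below are assumptions drawn from the public description of ZeRO and ZeRO++ (quantized weights, a secondary weight copy inside each node, and quantized gradients), not from this newsletter, and they are expressed in units of M, the model size.

```python
from fractions import Fraction

# Cross-node communication volume per training step, in units of M
# (the model size). All figures here are assumptions for illustration.
ZERO = {
    "forward all-gather of weights": Fraction(1),
    "backward all-gather of weights": Fraction(1),
    "gradient reduce-scatter": Fraction(1),
}

ZERO_PP = {
    # Assumed: weights quantized from 16-bit to 8-bit before the all-gather.
    "forward all-gather of weights": Fraction(1, 2),
    # Assumed: backward weights come from a secondary copy inside each node.
    "backward all-gather of weights": Fraction(0),
    # Assumed: gradients quantized from 16-bit to 4-bit for communication.
    "gradient reduce-scatter": Fraction(1, 4),
}


def total_volume(plan):
    """Sum the per-collective volumes of a communication plan."""
    return sum(plan.values(), Fraction(0))


reduction = total_volume(ZERO) / total_volume(ZERO_PP)
print(f"ZeRO: {total_volume(ZERO)}M, ZeRO++: {total_volume(ZERO_PP)}M, reduction: {reduction}x")
```

Under these assumptions the total drops from 3M to 0.75M per step, which matches the 4x reduction quoted above.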

RepoFusion: Training code models to understand your repository

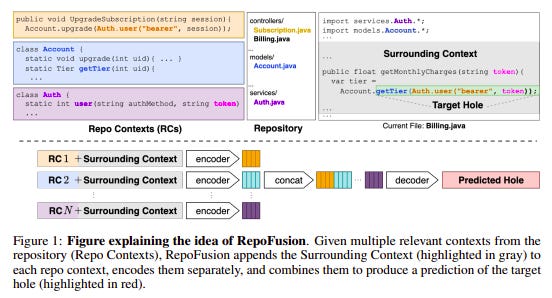

LLMs for code have gained significant popularity, especially with integration into code assistants like GitHub Copilot. However, they often struggle to generalize effectively in unforeseen or unpredictable situations, resulting in undesirable predictions. Instances of such scenarios include code that uses private APIs or proprietary software, work-in-progress code, and any other context that the model has not seen during training.

To address these limitations, one possible approach is enhancing their predictions by incorporating the wider context available in the repository. This research proposes RepoFusion, a framework to train models to incorporate relevant repository context. Models trained with repository context significantly outperform several larger models despite being smaller in size.

MotionGPT: A versatile text-to-motion AI

New research has proposed MotionGPT, a unified, versatile, and user-friendly motion-language model for multiple motion-relevant tasks. It is a generative pre-trained model that treats human motion as a foreign language and brings natural language models into motion-relevant generation.

It is driven by the insight that fusing motion and language data into a single vocabulary makes the relationship between motion and language more apparent. This enhances the performance of motion-related tasks, even with larger-scale data and models.

MotionGPT achieves competitive performance across diverse tasks, including text-to-motion, motion-to-text, motion prediction, and motion in-between, and its code and data are available.
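The single-vocabulary idea can be pictured with a toy sketch. Everything here is hypothetical: the tiny text vocabulary, the `<motion_k>` token names, and the assumption that a separate motion tokenizer has already turned motion snippets into discrete codebook indices. It is not the authors' implementation.

```python
# Toy text vocabulary (hypothetical; a real model has tens of thousands of tokens).
text_vocab = ["<pad>", "a", "person", "walks", "forward", "jumps"]

# Assume a motion tokenizer has already mapped short motion snippets to
# discrete codebook indices 0..K-1. K = 4 is purely illustrative.
NUM_MOTION_CODES = 4
motion_vocab = [f"<motion_{k}>" for k in range(NUM_MOTION_CODES)]

# One shared vocabulary: motion tokens are appended after the text tokens.
unified_vocab = text_vocab + motion_vocab
token_to_id = {token: index for index, token in enumerate(unified_vocab)}


def encode(tokens):
    """Map a mixed list of text and motion tokens to integer ids."""
    return [token_to_id[token] for token in tokens]


# A mixed sequence: a caption followed by the motion tokens it describes.
sequence = ["a", "person", "walks", "forward", "<motion_2>", "<motion_0>", "<motion_3>"]
ids = encode(sequence)
print(ids)
```

Because both kinds of token share one id space, a single language model can read or generate either, which is what lets one model cover text-to-motion and motion-to-text.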

DragDiffusion: Giving Diffusion models interactive point-based image editing

Recently, DragGAN has enabled interactive point-based image editing, i.e., “drag” editing. Although it achieves impressive results with pixel-level precision, its applicability is limited by the inherent capacity of the pre-trained generative adversarial network (GAN) models it is built on.

To remedy this, DragDiffusion extends such an editing framework to diffusion models. Plus, it achieves precise spatial control by optimizing the diffusion latent.

Google’s new pgvector support powers AI-enabled applications

Google Cloud has announced support for storing and querying vectors in Cloud SQL for PostgreSQL and AlloyDB for PostgreSQL. It allows users to store and index vector embeddings generated by LLMs using the pgvector PostgreSQL extension. It enables efficient searching for similar items using exact and approximate nearest-neighbor search algorithms.

This is a step-by-step tutorial on how to add genAI features to your own applications with just a few lines of code using pgvector, LangChain, and LLMs on Google Cloud.
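As a rough illustration of the exact nearest-neighbor search mentioned above, here is a minimal pure-Python sketch that ranks stored embeddings by Euclidean (L2) distance, the metric pgvector exposes through its `<->` operator. The item names and 3-dimensional vectors are made up for the example, and the sketch does not talk to a database.

```python
import math


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def exact_nearest(query, items, k=2):
    """Return the names of the k items closest to `query`, scanning every item."""
    ranked = sorted(items, key=lambda name: l2_distance(query, items[name]))
    return ranked[:k]


# Hypothetical 3-dimensional embeddings; real LLM embeddings are much larger.
items = {
    "red toy car": [0.9, 0.1, 0.0],
    "blue toy truck": [0.8, 0.2, 0.1],
    "wooden puzzle": [0.1, 0.9, 0.2],
}
query = [1.0, 0.0, 0.0]

nearest = exact_nearest(query, items)
print(nearest)
```

Exact search compares the query against every row, so its cost grows with table size. Approximate search uses an index to skip most comparisons, trading a little recall for speed.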

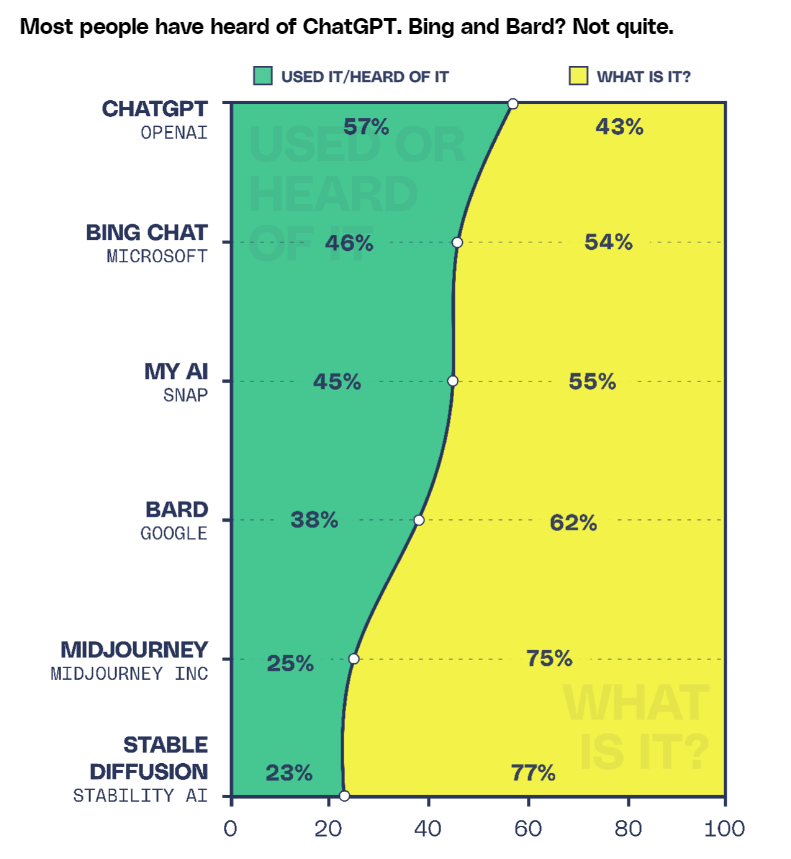

Verge polled 2k people about using AI

The potential impact of AI on the world is uncertain. While some envision opportunities to remove constraints, automate tasks, and revolutionize learning, others worry about its potential to spread misinformation, displace jobs, and pose safety risks if not properly managed.

To find out what people think about AI and what they want from it, The Verge, together with Vox Media's Insights and Research team and research consultancy The Circus, surveyed over 2,000 US adults.

The results reveal a mix of uncertainty, fear, and optimism surrounding this emerging technology. Many respondents have limited experience with AI and express concerns about its potential but also hold high expectations for its future benefits.

How is AI being used?

How do people feel about AI’s impact on society?

Baidu’s Ernie 3.5 beats ChatGPT on multiple metrics

Baidu said its latest version of the Ernie AI model, Ernie 3.5, surpassed ChatGPT in comprehensive ability scores and outperformed GPT-4 in several Chinese capabilities. The model comes with better training and inference efficiency, which positions it for faster and cheaper iterations in the future. Plus, it will support external plugins.

The statement from Baidu, China’s first big tech company to debut an AI product to rival ChatGPT, came in response to a test conducted by China Science Daily (a state newspaper). The datasets used in the test serve as benchmarks for evaluating the performance of AI models.

Unity's game-changing AI products for game development

Unity AI announced 3 game-changing AI products:

Unity Muse: Text-to-3D-application inside games.

Unity Sentis: It lets you embed any AI model into your game/application.

AI marketplace: Developers can tap into a selection of AI solutions to build games.

Plus, they curated a collection of solutions for AI-driven game development and gameplay enhancements.

Google DeepMind's upcoming chatbot to rival ChatGPT

DeepMind, the Google-owned research lab, claims its next large language model will rival OpenAI’s. It is using techniques from AlphaGo to make a ChatGPT-rivaling chatbot called Gemini. AlphaGo is DeepMind’s AI system that was the first to defeat a professional human player at the board game Go.

Gemini will also leverage innovations in reinforcement learning to accomplish tasks with which today’s language models struggle. At a high level, Gemini combines some of the strengths of AlphaGo-type systems with the language capabilities of large models to give the system new capabilities such as planning, problem-solving, and analysis.

Salesforce’s XGen can replace Meta’s LLaMA

Salesforce introduced XGen-7B, a new 7B LLM trained with sequence lengths of up to 8K on 1.5 trillion tokens. It is open-sourced under Apache License 2.0.

On standard NLP benchmarks, it achieves comparable or better results than leading open-source LLMs of similar size: MPT, Falcon, LLaMA, RedPajama, and OpenLLaMA.

It achieves equally strong results on both text (e.g., MMLU, QA) and code (HumanEval) tasks.

The targeted evaluation on long sequence modeling benchmarks shows the benefits of 8K-seq models over 2K- and 4K-seq models.

XGen has the same architecture as Meta’s LLaMA models except for a different tokenizer. However, LLaMA 7B is trained on one trillion tokens compared to XGen-7B’s 1.5T tokens.

Databricks launches LakehouseIQ and Lakehouse AI tools

Databricks launched LakehouseIQ, a generative AI tool democratizing access to data insights. It allows anyone in an organization to search, understand and query internal corporate data by simply asking questions in plain English. No Python, SQL or data querying skills needed.

It also announced new Lakehouse AI innovations aimed at making it easier for its customers to build and govern their own LLMs on the lakehouse.

This move follows Databricks’ $1.3 billion acquisition of MosaicML and comes at a time when Snowflake, its main competitor, continues to make its own generative AI push.

Gen AI is now a bankable skill backed by industry titans

Microsoft has announced the launch of its new AI Skills Initiative aimed at addressing the technical skills gap in the global workforce. The initiative includes a grant challenge, free online courses, and a teacher training toolkit. The company has also introduced the first Professional Certificate on Generative AI as part of the initiative.

Additionally, Microsoft will provide grants to nonprofit organizations, social enterprises, and academic institutions that use GenAI for social and economic benefits.

AI converts brain EEG signals to HQ images

New research has proposed DreamDiffusion, a novel method for generating high-quality images directly from brain electroencephalogram (EEG) signals, without the need to translate thoughts into text. It leverages powerful pre-trained text-to-image diffusion models to generate realistic images from EEG signals alone, which are a non-invasive and easily obtainable source of brain activity.

Much recent AI research has attempted to reconstruct visual information from fMRI signals and has demonstrated that high-quality results can be reconstructed from brain activity. However, these approaches are still far from convenient or efficient. DreamDiffusion addresses these issues.

Meta disclosed AI behind Facebook and Instagram recommendations

Meta is sharing 22 system cards that explain how AI-powered recommender systems work across Facebook and Instagram. These cards contain information and actionable insights everyone can use to understand and customize their specific AI-powered experiences in Meta’s products.

Moreover, Meta shared only its ten most important prediction models rather than everything in the system, since diving into too much technical detail can sometimes obfuscate transparency.

OpenFlamingo V2 launched 5 newly trained multimodal models

The OpenFlamingo V2 release includes 5 new models at the 3B, 4B, and 9B scales, based on Mosaic's MPT-1B and MPT-7B and Together.xyz's RedPajama-3B. These models are built on open-source models with less restrictive licenses than LLaMA.

OpenFlamingo models achieve over 80% of the performance of their Flamingo counterparts when looking at results from 7 evaluation datasets. Moreover, OpenFlamingo-3B and OpenFlamingo-9B achieve over 60% of the best fine-tuned performance by using only 32 in-context examples.

To further enhance the capabilities of OpenFlamingo, the training and evaluation code has undergone significant improvements. The evaluation suite now includes new datasets like TextVQA, VizWiz, HatefulMemes, and Flickr30k, expanding the scope of evaluation possibilities.

OpenFlamingo models can handle mixed sequences of images and text to generate text outputs. This enables the models to handle tasks like captioning, visual question answering, and image classification using relevant examples.
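A mixed image-and-text sequence of this kind can be sketched as a prompt builder. The `<image>` and `<|endofchunk|>` markers follow the project's public examples as best we recall, so treat them as assumptions. The helper name `build_few_shot_prompt` is hypothetical, and the images themselves would be passed to the model separately.

```python
# Special markers assumed from the project's public examples.
IMAGE_TOKEN = "<image>"
END_OF_CHUNK = "<|endofchunk|>"


def build_few_shot_prompt(example_captions, query_prefix):
    """Interleave one image placeholder per example caption, then leave
    the final caption open so the model can complete it."""
    shots = "".join(
        f"{IMAGE_TOKEN}{caption}{END_OF_CHUNK}" for caption in example_captions
    )
    return f"{shots}{IMAGE_TOKEN}{query_prefix}"


prompt = build_few_shot_prompt(
    ["An image of two cats.", "An image of a bathroom sink."],
    "An image of",
)
print(prompt)
```

Each `<image>` placeholder lines up with one image in the order the images are supplied. Here, two captioned examples steer the model toward captioning the third image.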

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’ specifically designed for Engineering Leaders and AI enthusiasts.

Thanks for reading, and see you on Monday! 😊