AI Weekly Rundown (June 17 to June 23)

News from Meta, Google, Microsoft, Dropbox, Stable Diffusion and more...

Hello, Engineering Leaders and AI Enthusiasts,

Another week with small but impactful AI developments. Here are the highlights from last week.

In today’s edition:

✅ Meta’s all-in-one generative speech AI model - Voicebox

✅ OpenLLaMA: An open reproduction of Meta’s LLaMA

✅ GPT Engineer: One prompt generates the entire codebase

✅ Leverage OpenAI models for your data with Microsoft's new feature

✅ Google DeepMind’s RoboCat operates multiple robots

✅ vLLM: Easy, fast, and cheap LLM serving

✅ Dropbox Introduced Dash & $50M AI Initiative

✅ AI Workbook helps you build powerful apps

✅ Google’s AudioPaLM can speak and listen

✅ SDXL 0.9, the most advanced development in Stable Diffusion

✅ MosaicML's MPT-30B beats GPT-3

Let’s go!

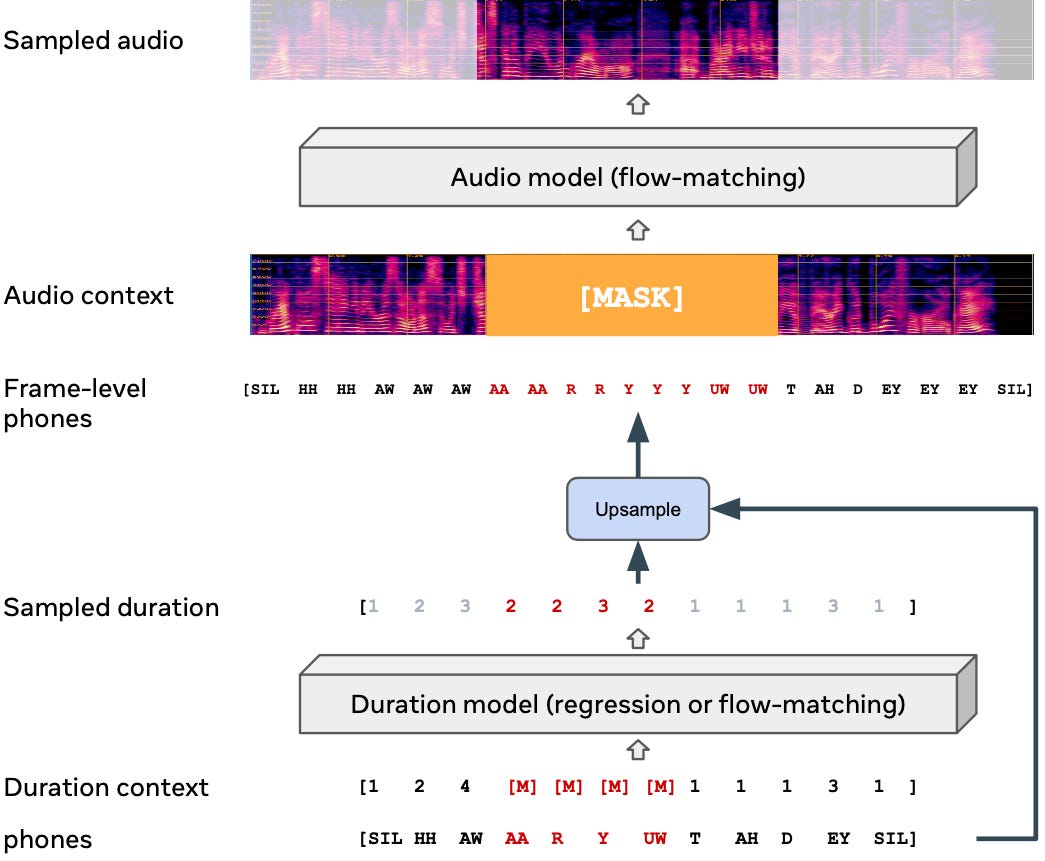

Meta’s all-in-one generative speech AI model

Meta introduces Voicebox, which it describes as the first generative AI model that can generalize, with state-of-the-art performance, to speech-generation tasks it was not specifically trained on. It can perform:

- Text-to-speech synthesis in 6 languages

- Noise removal

- Content editing

- Cross-lingual style transfer

- Diverse sample generation

One of the main limitations of existing speech synthesizers is that they can only be trained on data that has been prepared expressly for that task. Voicebox is built upon Flow Matching, Meta’s latest advancement in non-autoregressive generative models, which can learn a highly non-deterministic mapping between text and speech.

Voicebox can take an input audio sample just two seconds long, match the sample’s audio style, and apply it to text-to-speech generation.

OpenLLaMA: An open reproduction of Meta’s LLaMA

OpenLLaMA is a permissively licensed open-source reproduction of Meta AI's LLaMA, trained on the RedPajama dataset. The release includes three models (3B, 7B, and 13B), all trained on 1T tokens. The authors provide PyTorch and JAX weights for the pre-trained OpenLLaMA models, along with evaluation results and a comparison to the original LLaMA models.

GPT Engineer: One prompt generates the entire codebase

GPT Engineer can generate an entire codebase from a text prompt. You specify what you want it to build, it asks clarifying questions, and then it builds it. It:

- Is open source

- Generates a technical spec

- Writes all necessary code

- Lets you finish a coding project in minutes

- Makes it easy to add your own reasoning steps, modify, and experiment

Leverage OpenAI models for your data with Microsoft's new feature

Microsoft announced the public preview of Azure OpenAI Service on your data. This feature lets you run OpenAI models, including ChatGPT and GPT-4, against your own data. According to Microsoft, it supports data interaction and analysis with improved accuracy, speed, and insights.

The key use cases include:

1. Simplify document intake, and gain quick access to legal and financial data for better decision-making.

2. Harvest valuable customer insights, monetize data access, and gain deep industry and competitor insights.

3. Transform operations, improve customer experiences, and gain a competitive edge.

Google DeepMind’s RoboCat operates multiple robots

Google DeepMind has created RoboCat, an AI model that can control and operate multiple robots. It can learn new tasks on various robotic arms from as few as 100 demonstrations, and it improves its skills using self-generated training data.

According to DeepMind, RoboCat learns more quickly than other advanced models because it draws on a wide range of datasets. This is a significant development for robotics research because it reduces the reliance on human supervision during training.

Here’s RoboCat’s training cycle, boosted by its ability to generate additional training data autonomously.

vLLM: Easy, fast, and cheap LLM serving

The performance of LLM serving is bottlenecked by memory. vLLM addresses this with PagedAttention, a novel attention algorithm that brings the classic operating-system ideas of virtual memory and paging to LLM serving. This makes vLLM a high-throughput, memory-efficient inference and serving engine for LLMs.

vLLM outperforms HuggingFace Transformers by up to 24x (without requiring any model architecture changes) and Text Generation Inference (TGI) by up to 3.5x, in terms of throughput.
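
To make the paging idea above more concrete, here is a minimal sketch of a block table that maps each sequence's tokens onto fixed-size cache blocks allocated on demand. It is a toy illustration only: the class `PagedKVCache` and its methods are hypothetical names invented for this example, not vLLM's actual implementation or API, and no attention computation is performed.

```python
from typing import Dict, List, Tuple


class PagedKVCache:
    """Toy block-table allocator. Illustrates paging only; not vLLM's real code."""

    def __init__(self, num_blocks: int, block_size: int):
        self.block_size = block_size
        # Pool of free physical block ids.
        self.free_blocks: List[int] = list(range(num_blocks))
        # Sequence id -> list of physical blocks (the "page table").
        self.block_tables: Dict[str, List[int]] = {}
        # Sequence id -> number of tokens stored so far.
        self.lengths: Dict[str, int] = {}

    def append_token(self, seq_id: str) -> Tuple[int, int]:
        """Reserve a slot for one more token; return (physical_block, offset)."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length % self.block_size == 0:
            # Existing blocks are full (or none exist yet): map a new block.
            if not self.free_blocks:
                raise MemoryError("no free KV-cache blocks")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1
        return table[length // self.block_size], length % self.block_size

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


# Usage: two sequences share one pool without reserving max-length buffers.
cache = PagedKVCache(num_blocks=4, block_size=2)
for _ in range(3):
    cache.append_token("a")  # 3 tokens need 2 blocks of size 2
cache.append_token("b")      # 1 token needs 1 block
```

Because blocks are handed out only when a sequence actually grows into them, memory is not wasted on space a sequence never uses, which is the intuition behind the throughput gains quoted above.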

Dropbox Introduced Dash & $50M AI Initiative

Dropbox has launched two new AI-powered tools, Dropbox Dash and Dropbox AI, to enhance productivity and improve knowledge work. These tools leverage recent advancements in AI and ML to provide personalized assistance, answer questions, offer insights on content, and facilitate efficient content search within the Dropbox platform.

Meet Dropbox Dash: The AI-powered universal search tool for work

A quick look at Dropbox AI

AI Workbook helps you build powerful apps

AI Workbook is a notebook interface for using LLMs, image models, and audio models all in one place. It helps you build powerful applications and workflows in a single environment. It lets you:

- Combine text, image, & audio models in one place.

- Customize AI models using your data.

- Share your work & collaborate.

Google’s AudioPaLM can speak and listen

Google Research presents AudioPaLM, a large language model that can both understand and generate speech. It fuses PaLM-2 and AudioLM into a unified multimodal architecture that can perform tasks such as speech recognition and speech-to-speech translation.

It not only translates but also retains the speaker's identity and tone of voice. It also benefits from the large amounts of text data used to pretrain PaLM-2, which improves its performance on speech-related tasks.

The model significantly outperforms existing systems on speech translation tasks and can perform zero-shot speech-to-text translation for many languages whose input/target combinations were not seen in training. It also demonstrates features of audio language models, such as transferring a voice across languages from a short spoken prompt.

SDXL 0.9, the most advanced development in Stable Diffusion

Stability AI announces SDXL 0.9, the most advanced development in the Stable Diffusion text-to-image suite of models. SDXL 0.9 produces massively improved image and composition detail over its predecessor, the Stable Diffusion XL beta. Here’s an example of a prompt tested on both SDXL beta (left) and 0.9.

The key driver of this advancement in composition for SDXL 0.9 is its significant increase in parameter count over the beta version. SDXL 0.9 is run on two CLIP models, including one of the largest OpenCLIP models trained to date. This beefs up 0.9’s processing power and ability to create realistic imagery with greater depth and a higher resolution of 1024x1024.

MosaicML's MPT-30B beats GPT-3

MPT-30B is a decoder-style transformer that MosaicML pre-trained on 1T tokens of English text and code. MosaicML reports that it is significantly more powerful than MPT-7B and outperforms the original GPT-3 despite having far fewer parameters.

It is part of the Mosaic Pretrained Transformer (MPT) family of models, which uses a modified transformer architecture optimized for efficient training and inference.

MPT-30B possesses special features that set it apart from other LLMs, which include:

- An 8k token context window, which can be further extended via finetuning

- Support for context-length extrapolation via ALiBi

- Efficient inference + training via FlashAttention
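
For readers curious about the ALiBi item above, here is a minimal sketch of how ALiBi biases attention scores, based on the general description in the ALiBi paper rather than on MosaicML's code. The function names `alibi_slopes` and `alibi_bias` are hypothetical, and the sketch assumes a power-of-two number of attention heads.

```python
from typing import List


def alibi_slopes(num_heads: int) -> List[float]:
    """Per-head slopes 2^(-8/n), 2^(-16/n), ... for a power-of-two head count n."""
    if num_heads < 1 or num_heads & (num_heads - 1):
        raise ValueError("this sketch only handles power-of-two head counts")
    return [2.0 ** (-8.0 * (h + 1) / num_heads) for h in range(num_heads)]


def alibi_bias(seq_len: int, slope: float) -> List[List[float]]:
    """Causal bias matrix added to attention scores before softmax.

    Entry [i][j] is -slope * (i - j) for keys at or before the query (j <= i),
    and -inf for future positions, which masks them out.
    """
    return [
        [-slope * (i - j) if j <= i else float("-inf") for j in range(seq_len)]
        for i in range(seq_len)
    ]


# Usage: one bias matrix per head, each with its own slope.
slopes = alibi_slopes(8)
biases = [alibi_bias(4, m) for m in slopes]
```

The penalty depends only on the distance between query and key, not on absolute position, which is why a model trained this way can be run on sequences longer than those seen in training.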

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’, designed specifically for Engineering Leaders and AI enthusiasts.

Thanks for reading, and see you on Monday! 😊