AI Weekly Rundown (July 22 to July 28)

Major updates from the biggest AI players! News from Stability AI, Meta, Google, OpenAI, Microsoft, and more.

Hello, Engineering Leaders and AI Enthusiasts,

It was another eventful week in AI, with lots of big news from major enterprises.

In today’s edition:

✅ Stability AI introduces 2 LLMs close to ChatGPT

✅ ChatGPT is coming to Android!

✅ Meta collabs with Qualcomm to enable on-device AI apps using Llama 2

✅ Worldcoin by OpenAI’s CEO will confirm your humanity

✅ RunwayML's new AI update brings images to life; no prompts

✅ FABRIC personalizes diffusion models with iterative feedback

✅ AI predicts code coverage faster and cheaper

✅ Introducing 3D-LLMs: Infusing 3D worlds into LLMs

✅ Alibaba Cloud brings Meta’s Llama to its clients

✅ Microsoft, Google, OpenAI, Anthropic Unite for Safe AI Progress

✅ Stability AI released SDXL 1.0, featured on Amazon Bedrock

✅ AWS prioritizing AI: 2 major updates!

✅ Stack Overflow launches AI tools for developers

✅ NVIDIA, ServiceNow, and Accenture Partner to Boost GenAI Adoption

✅ Tracking objects in high quality without any tricks

Let’s go!

Stability AI introduces 2 LLMs close to ChatGPT

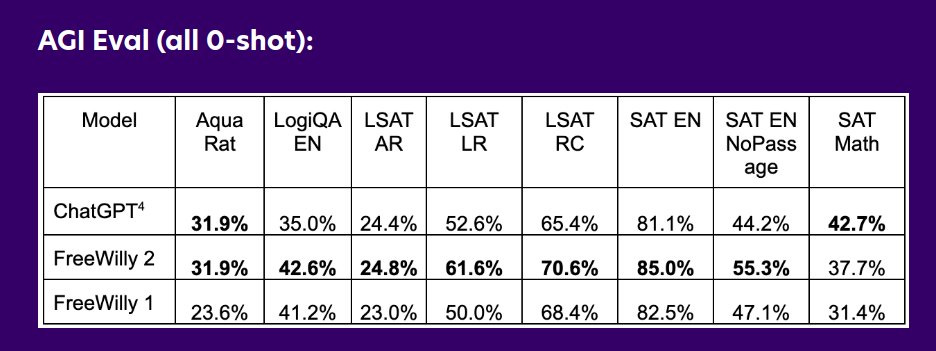

Stability AI and its CarperAI lab unveiled FreeWilly1 and its successor, FreeWilly2, two powerful new open-access large language models. Both models show strong reasoning capabilities across diverse benchmarks. FreeWilly1 is built on the original LLaMA 65B foundation model and fine-tuned with supervised fine-tuning (SFT) on a new synthetically generated dataset in the standard Alpaca format. Similarly, FreeWilly2 builds on the LLaMA 2 70B foundation model and performs competitively with GPT-3.5 on some tasks.
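For readers unfamiliar with the "Alpaca format": each training record has an `instruction`, an optional `input`, and an `output`, and is rendered into a fixed prompt template before SFT. The sketch below uses the widely circulated Stanford Alpaca templates; that Stability AI used this exact wording for FreeWilly1 is an assumption, and the helper name `to_sft_example` is hypothetical.

```python
from typing import Dict

# Stanford Alpaca-style templates (assumed; the exact wording used for
# FreeWilly1 is not stated in the announcement).
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:"
)


def to_sft_example(record: Dict[str, str]) -> Dict[str, str]:
    """Hypothetical helper: render one Alpaca-style record into a
    prompt/completion pair for supervised fine-tuning."""
    # Use the longer template only when the record carries extra context.
    template = PROMPT_WITH_INPUT if record.get("input") else PROMPT_NO_INPUT
    # str.format ignores unused keyword arguments, so one call fits both templates.
    prompt = template.format(instruction=record["instruction"],
                             input=record.get("input", ""))
    return {"prompt": prompt, "completion": record["output"]}


example = to_sft_example({
    "instruction": "Name the larger number.",
    "input": "17, 42",
    "output": "42",
})
print(example["prompt"])
```

During SFT, the loss is typically computed only on the completion tokens, so the model learns to answer rather than to repeat the template.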

For internal evaluation, they used EleutherAI's lm-eval-harness with AGIEval integrated. Both models are research experiments, released under a non-commercial license to foster open research.

ChatGPT is coming to Android!

OpenAI has announced ChatGPT for Android! The company said the app will roll out to users next week, but it can already be pre-ordered on the Google Play Store.

The company promises users access to its latest improvements. According to the app's Play Store page, the app is free and syncs chat history across devices.

Meta collabs with Qualcomm to enable on-device AI apps using Llama 2

Meta and Qualcomm Technologies, Inc. are working to optimize Meta’s Llama 2 to run directly on devices, without relying solely on cloud services. Running generative AI models like Llama 2 on smartphones, PCs, VR/AR headsets, and vehicles lets developers save on cloud costs and give users more private, reliable, and personalized experiences.

Qualcomm Technologies plans to make Llama 2-based AI implementations available on Snapdragon-powered devices starting in 2024.

Worldcoin by OpenAI’s CEO will confirm your humanity

OpenAI’s Sam Altman has launched a new crypto project called Worldcoin. It consists of a privacy-preserving digital identity (World ID) and, where laws allow, a digital currency (WLD) received simply for being human.

You receive a World ID after visiting an Orb, a biometric device that verifies that you are a unique human by scanning your eyes. Altman suggests this kind of verification is necessary because of the growing threat posed by AI.

RunwayML's new AI update brings images to life; no prompts

RunwayML's new picture-to-motion update for Gen-2 generates video using only an image as input; no text prompt is needed. It is available now in the browser and is coming soon to iOS.

FABRIC personalizes diffusion models with iterative feedback

Meet FABRIC, a training-free method that uses iterative feedback to improve the results of Stable Diffusion models. Instead of spending hours finding the right prompt, just click 👍/👎 to tell the model exactly what you want.

This approach works out of the box with a wide range of popular diffusion models. It exploits the self-attention layers present in the most widely used architectures to condition the diffusion process on a set of feedback images, pushing generation toward liked (👍) images and away from disliked (👎) ones.
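To make "conditioning self-attention on feedback images" concrete, here is a toy, single-query sketch of the general idea: the layer's keys and values are extended with tokens taken from a liked reference image, and a scalar `ref_weight` scales how much attention mass those extra tokens receive. The function names and the weighting scheme are assumptions for illustration; FABRIC's actual implementation (which layers it hooks, how reference features are extracted during denoising, and how 👎 images are used) is not reproduced here.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def weighted_attention(query: Vector, keys: List[Vector], values: List[Vector],
                       weights: List[float]) -> List[float]:
    """Scaled dot-product attention for one query, where each key's softmax
    mass is multiplied by a non-negative weight before normalizing."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    top = max(scores)  # subtract the max score for numerical stability
    mass = [w * math.exp(s - top) for s, w in zip(scores, weights)]
    total = sum(mass)
    dim = len(values[0])
    return [sum(m * v[i] for m, v in zip(mass, values)) / total for i in range(dim)]


def feedback_self_attention(query: Vector,
                            self_keys: List[Vector], self_values: List[Vector],
                            ref_keys: List[Vector], ref_values: List[Vector],
                            ref_weight: float) -> List[float]:
    """Hypothetical helper: the query attends to its own image's tokens plus
    tokens from a liked feedback image; ref_weight = 0 disables feedback."""
    keys = list(self_keys) + list(ref_keys)
    values = list(self_values) + list(ref_values)
    weights = [1.0] * len(self_keys) + [ref_weight] * len(ref_keys)
    return weighted_attention(query, keys, values, weights)


q = [1.0, 0.0]
self_k = [[1.0, 0.0], [0.0, 1.0]]
self_v = [[1.0, 0.0], [0.0, 1.0]]
ref_k = [[1.0, 0.0]]   # a reference token that matches the query well
ref_v = [[0.0, 5.0]]   # ...and carries a distinctive "liked" feature

baseline = feedback_self_attention(q, self_k, self_v, ref_k, ref_v, ref_weight=0.0)
pulled = feedback_self_attention(q, self_k, self_v, ref_k, ref_v, ref_weight=2.0)
print(baseline, pulled)  # the second output moves toward the reference value
```

Multiplying a key's softmax mass by `w` is the same as adding `log(w)` to its attention logit, so the weight acts as a tunable "how much should feedback count" knob without retraining anything, which is what makes this family of methods training-free.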

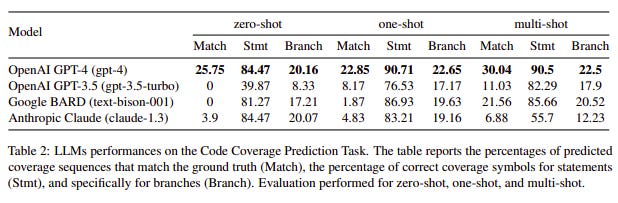

AI predicts code coverage faster and cheaper

Microsoft Research has proposed a novel benchmark task called Code Coverage Prediction. The task asks a model to predict code coverage, i.e., which lines of code (or what percentage of lines) are executed for a given test case and input. It therefore also helps assess how well LLMs understand code execution.
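For context on what the models are asked to predict, the sketch below computes the ground-truth answer the expensive way: it actually runs a function and records which of its lines execute, using Python's `sys.settrace` hook, then reports line coverage as a percentage. This is a generic illustration, not Microsoft's benchmark tooling; the helper names and the example function `classify` are hypothetical.

```python
import dis
import sys
from types import FrameType
from typing import Any, Callable, Optional, Set


def executed_lines(func: Callable[..., Any], *args: Any, **kwargs: Any) -> Set[int]:
    """Run func(*args, **kwargs) and return the lines of func that executed,
    numbered relative to its `def` line (which is line 0 and never counted)."""
    code = func.__code__
    first = code.co_firstlineno
    hits: Set[int] = set()

    def tracer(frame: FrameType, event: str, arg: Any) -> Optional[Callable]:
        if frame.f_code is not code:
            return None  # do not trace frames of other functions
        if event == "line" and frame.f_lineno != first:
            hits.add(frame.f_lineno - first)
        return tracer  # keep receiving "line" events for this frame

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args, **kwargs)
    finally:
        sys.settrace(previous)  # restore any debugger/coverage tracer
    return hits


def executable_lines(func: Callable[..., Any]) -> Set[int]:
    """Lines of func that start bytecode, relative to its `def` line."""
    first = func.__code__.co_firstlineno
    return {line - first for _, line in dis.findlinestarts(func.__code__)
            if line is not None and line != first}


def line_coverage(func: Callable[..., Any], *args: Any, **kwargs: Any) -> float:
    """Percentage of func's executable lines hit by a single call."""
    total = executable_lines(func)
    hit = executed_lines(func, *args, **kwargs) & total
    return 100.0 * len(hit) / len(total)


def classify(n: int) -> str:
    if n < 0:
        return "negative"
    if n == 0:
        return "zero"
    return "positive"


print(executed_lines(classify, 5))  # {1, 3, 5}
print(line_coverage(classify, 5))   # 60.0
```

Obtaining this label requires building and running the code; the benchmark's premise is that a model which could predict the same set of lines from source text alone would make coverage cheap where execution is costly or impossible.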

The researchers evaluated four prominent LLMs (GPT-4, GPT-3.5, Bard, and Claude) on this task to gauge how well they understand code execution. The results indicate that LLMs still have a long way to go before they deeply understand code execution.

This approach can be valuable in scenarios such as:

Expensive build and execution in large software projects

Limited code availability

Live coverage or live unit testing

Introducing 3D-LLMs: Infusing 3D worlds into LLMs

As powerful as LLMs and Vision-Language Models (VLMs) can be, they are not grounded in the 3D physical world. The 3D world involves richer concepts such as spatial relationships, affordances, physics, layout, etc.

New research has proposed injecting the 3D world into large language models, introducing a whole new family of 3D-based LLMs. Specifically, 3D-LLMs can take 3D point clouds and their features as input and generate responses.

They can perform a diverse set of 3D-related tasks, including captioning, dense captioning, 3D question answering, task decomposition, 3D grounding, 3D-assisted dialog, navigation, and so on.

Alibaba Cloud brings Meta’s Llama to its clients

Alibaba’s cloud computing division said it has become the first Chinese enterprise to support Meta’s open-source AI model Llama, allowing Chinese business users to build programs on top of the model.

Alibaba has also launched China's first training and deployment solution for the entire Llama 2 series, inviting all developers to create customized large models on Alibaba Cloud.

Microsoft, Google, OpenAI, Anthropic Unite for Safe AI Progress

Anthropic, Google, Microsoft, and OpenAI have jointly announced the establishment of the Frontier Model Forum, a new industry body to ensure the safe and responsible development of frontier AI systems.

The Forum aims to identify best practices for development and deployment, collaborate with various stakeholders, and support the development of applications that address societal challenges. It will leverage the expertise of its member companies to benefit the entire AI ecosystem by advancing technical evaluations, developing benchmarks, and creating a public library of solutions.

Stability AI released SDXL 1.0, featured on Amazon Bedrock

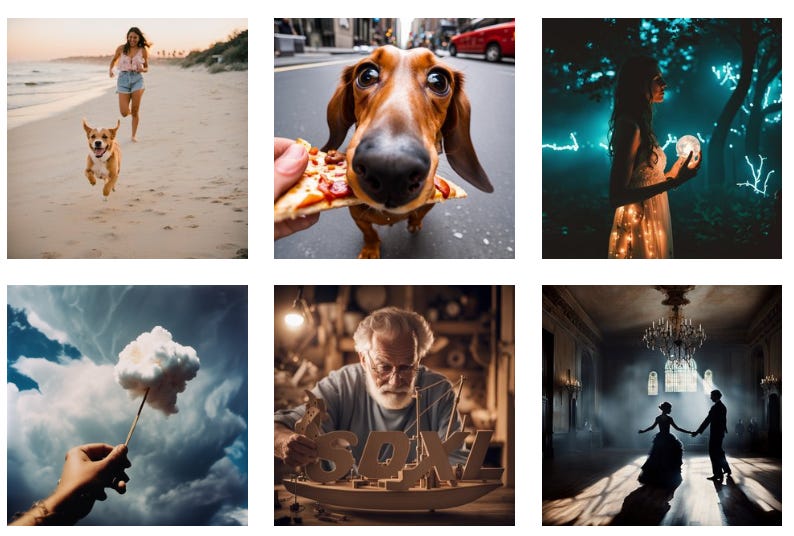

Stability AI has announced the release of Stable Diffusion XL (SDXL) 1.0, its advanced text-to-image model. The model will be featured on Amazon Bedrock, which provides access to foundation models from leading AI startups. SDXL 1.0 generates vibrant, accurate images with improved colors, contrast, lighting, and shadows. It is available through Stability AI's API, its GitHub page, and its consumer applications.

The model is also accessible on Amazon SageMaker JumpStart. A new fine-tuning beta in the Stability API lets users specialize generation on specific subjects. SDXL 1.0 has one of the largest parameter counts of any open-access image model and has already been widely used by ClipDrop users and Stability AI's Discord community.

(Images created using Stable Diffusion XL 1.0, featured on Amazon Bedrock)

AWS prioritizing AI: 2 major updates!

Here are two important AI developments from AWS.

The first is HealthScribe, a new healthcare-focused service that uses generative AI to transcribe and analyze conversations between clinicians and patients. It can create transcripts, extract details, and generate summaries that can be entered into electronic health record systems. The platform's ML models can convert the transcripts into patient notes, which can then be analyzed for insights.

HealthScribe also offers NLP capabilities to extract medical terms from conversations, and its generative AI capabilities are powered by Bedrock. The platform currently supports only general medicine and orthopedics.

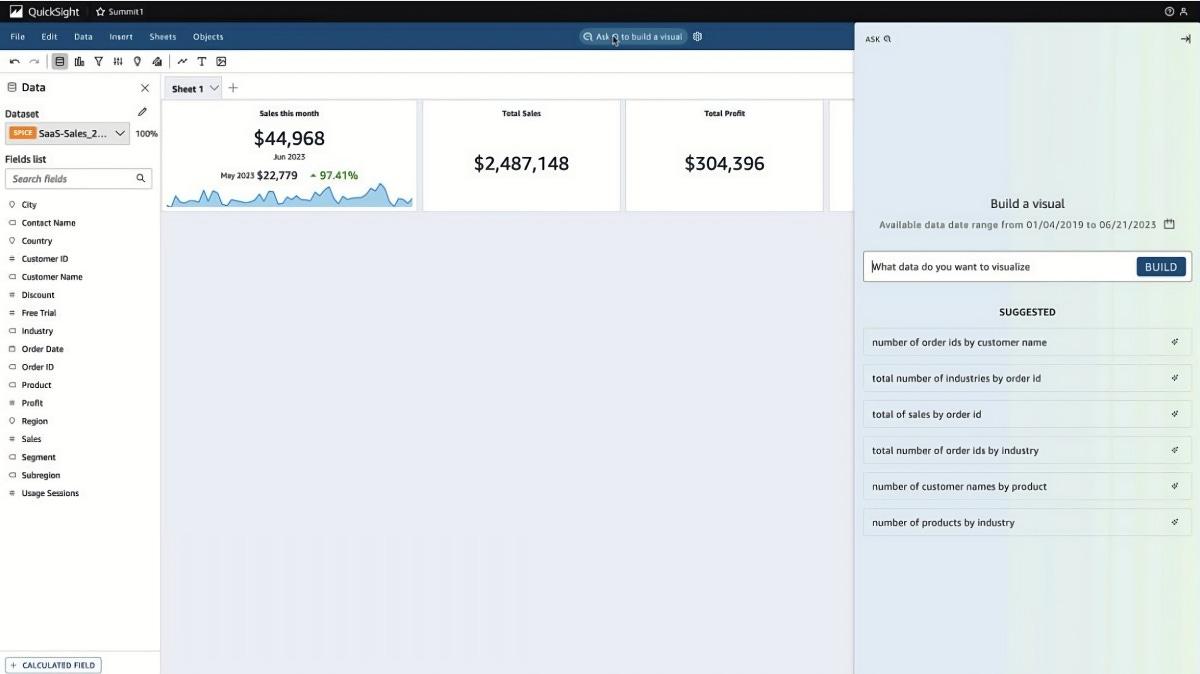

The second is a set of new AI features in Amazon QuickSight.

Users can generate visuals and then fine-tune and format them using natural-language instructions, and they can create calculations without knowing any specific syntax. The new features include an "Ask Q" option that lets users describe the data they want to visualize, a "Build for me" option for editing elements of dashboards and reports, and the ability to create "Stories" that combine visuals and text-based analyses.

Stack Overflow launches AI tools for developers

Stack Overflow has announced that it is integrating generative AI into its public platform and into Stack Overflow for Teams, under the OverflowAI umbrella. The new features include semantic search, enhanced search for Teams, enterprise knowledge ingestion, Slack integration, a Visual Studio Code extension, and AI community discussions.

The aim is to provide instant, trustworthy, and accurate solutions to developers' problems while keeping the developer community at the center and ensuring trust and attribution. Stack Overflow will leverage its vast knowledge base of over 58 million questions and answers to provide personalized results and fill the gaps that AI cannot address.

NVIDIA, ServiceNow, and Accenture Partner to Boost GenAI Adoption

ServiceNow, NVIDIA, and Accenture have launched AI Lighthouse, a program to accelerate the development and adoption of enterprise Gen AI capabilities. The program will allow customers to collaborate in designing custom generative AI large language models and applications to advance their businesses.

The AI Lighthouse program will focus on reducing manual work for customer service professionals, promoting self-service options, generating content automatically, and boosting developer productivity. ServiceNow has already launched generative AI capabilities and engaged with various industries to test them, and the AI Lighthouse program will build on this progress with select customers.

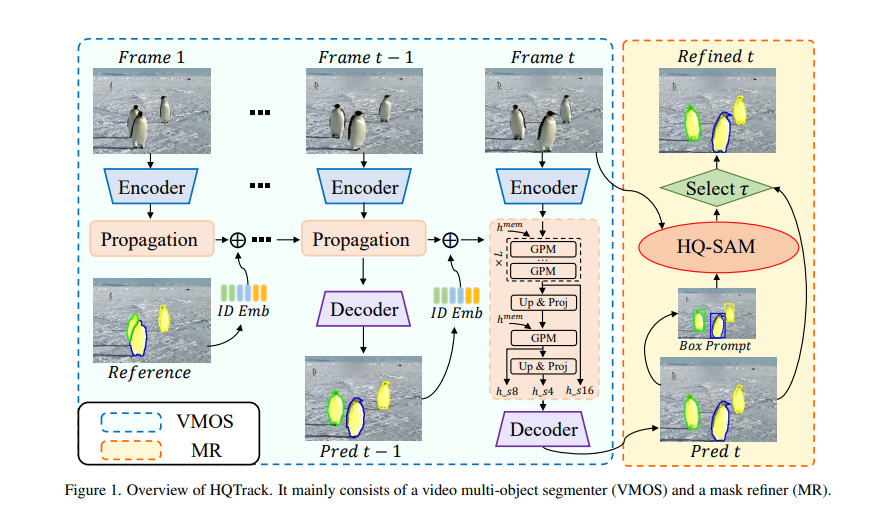

Tracking objects in high quality without any tricks

HQTrack is a framework for high-quality tracking of objects in videos. It combines a video multi-object segmenter (VMOS) with a mask refiner (MR) to improve tracking accuracy. The VMOS propagates object masks from the initial frame to the current frame, but these masks may not be accurate enough on their own.

To address this, a pre-trained MR model refines the tracking masks. HQTrack took 2nd place in the Visual Object Tracking and Segmentation challenge without using any tricks or model ensembles.
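The two-stage design (propagate, then refine) can be sketched as a small pipeline with pluggable stages. This is a structural sketch only: `propagate` stands in for the VMOS and `refine` for the pre-trained MR, both as hypothetical callables, and the choice to keep unrefined masks in the propagation memory is our assumption rather than a detail confirmed by the HQTrack description.

```python
from typing import Callable, List, Tuple, TypeVar

Frame = TypeVar("Frame")
Mask = TypeVar("Mask")


def track_and_refine(
    frames: List[Frame],
    first_mask: Mask,
    propagate: Callable[[List[Tuple[Frame, Mask]], Frame], Mask],
    refine: Callable[[Frame, Mask], Mask],
) -> List[Mask]:
    """Stage 1 (VMOS stand-in): carry the first-frame mask forward, using the
    (frame, mask) pairs seen so far as memory.
    Stage 2 (MR stand-in): refine each propagated mask independently."""
    memory: List[Tuple[Frame, Mask]] = [(frames[0], first_mask)]
    coarse: List[Mask] = [first_mask]
    for frame in frames[1:]:
        mask = propagate(memory, frame)
        memory.append((frame, mask))  # assumption: memory keeps coarse masks
        coarse.append(mask)
    # The first-frame mask is given, so only the propagated masks are refined.
    return [first_mask] + [refine(f, m) for f, m in zip(frames[1:], coarse[1:])]


# Toy stand-ins: a "frame" is the set of pixels the object truly occupies,
# and a "mask" is a set of pixel indices.
frames = [{0, 1, 2}, {1, 2, 3}, {2, 3, 4}]
sloppy_propagate = lambda memory, frame: {p + d for p in memory[-1][1] for d in (1, 2)}
snap_to_object = lambda frame, mask: mask & frame

result = track_and_refine(frames, {0, 1, 2}, sloppy_propagate, snap_to_object)
print(result)
```

In the toy run the propagator deliberately over-grows each mask, and the refiner trims it back to the object, mirroring the division of labor described above: the segmenter handles temporal association, while the refiner handles boundary quality.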

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’, designed specifically for Engineering Leaders and AI enthusiasts.

Thanks for reading, and see you on Monday! 😊