AI Weekly Rundown (July 1 to July 7)

News from Google, ChatGPT, Salesforce, Microsoft, SAM-PT, OpenChat, and more.

Hello, Engineering Leaders and AI Enthusiasts,

Another eventful week in the AI realm. Lots of big news from huge enterprises.

In today’s edition:

✅ ChatGPT builds robots: New research

✅ Magic123 creates HQ 3D meshes from unposed images

✅ Any-to-any generation: Next stage in AI evolution

✅ OpenChat beats 100% of ChatGPT-3.5

✅ AI designs CPU in <5 hours

✅ SAM-PT: Video object segmentation with zero-shot tracking

✅ Google’s AI models to train on public data

✅ LEDITS: Image editing with next-level AI capabilities

✅ OpenAI makes GPT-4 API and Code Interpreter available

✅ Salesforce’s CodeGen2.5, a small but mighty code LLM

✅ InternLM: A model tailored for practical scenarios

✅ Microsoft’s LongNet scales transformers to 1B tokens

✅ OpenAI’s Superalignment - The next big goal!

✅ AI can now detect and prevent wildfires

ChatGPT builds robots: New research

Microsoft Research presents an experimental study using OpenAI’s ChatGPT for robotics applications. It outlines a strategy that combines design principles for prompt engineering and the creation of a high-level function library that allows ChatGPT to adapt to different robotics tasks, simulators, and form factors.
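To make the "high-level function library" idea concrete, here is a minimal sketch of how such a library might be described to the model inside a prompt. The function names, descriptions, and prompt wording are hypothetical illustrations and are not taken from the Microsoft study or from PromptCraft.

```python
# Hypothetical high-level function library. These names are illustrative
# assumptions, not the functions used in the study.
ROBOT_API = {
    "get_position(object_name)": "return the (x, y, z) position of a named object",
    "fly_to(x, y, z)": "fly the drone to the given coordinates",
    "take_photo()": "capture an image from the onboard camera",
}


def build_prompt(task: str, api: dict[str, str]) -> str:
    """Assemble a prompt that restricts the model to the listed functions."""
    lines = [
        "You control a robot. Use only the functions listed below.",
        "Available functions:",
    ]
    for signature, description in api.items():
        lines.append(f"- {signature}: {description}")
    lines.append("Reply with Python code only.")
    lines.append(f"Task: {task}")
    return "\n".join(lines)


prompt = build_prompt("Photograph the red chair.", ROBOT_API)
print(prompt)
```

Swapping in a different dictionary is all it would take to target another simulator or form factor, which is roughly the kind of adaptability the study describes.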

The study encompasses a range of tasks within the robotics domain, from basic logical, geometrical, and mathematical reasoning to complex domains such as aerial navigation, manipulation, and embodied agents.

Microsoft also released PromptCraft, an open-source platform where anyone can share examples of good prompting schemes for robotics applications.

Magic123 creates HQ 3D meshes from unposed images

New research from Snap Inc. (and others) presents Magic123, a novel image-to-3D pipeline that uses a two-stage, coarse-to-fine optimization process to produce high-quality, high-resolution 3D geometry and textures. It generates photo-realistic 3D objects from a single unposed image.

The core idea is to use 2D and 3D priors simultaneously to generate faithful 3D content from any given image. Magic123 achieves state-of-the-art results in both real-world and synthetic scenarios.

Any-to-any generation: Next stage in AI evolution

Microsoft presents CoDi, a novel generative model capable of processing and simultaneously generating content across multiple modalities. It employs a composable generation strategy that builds a shared multimodal space by bridging alignment in the diffusion process. This enables the synchronized generation of intertwined modalities, such as temporally aligned video and audio.

One of CoDi’s most significant innovations is its ability to handle many-to-many generation strategies, simultaneously generating any mixture of output modalities. CoDi is also capable of single-to-single modality generation and multi-conditioning generation.

OpenChat beats 100% of ChatGPT-3.5

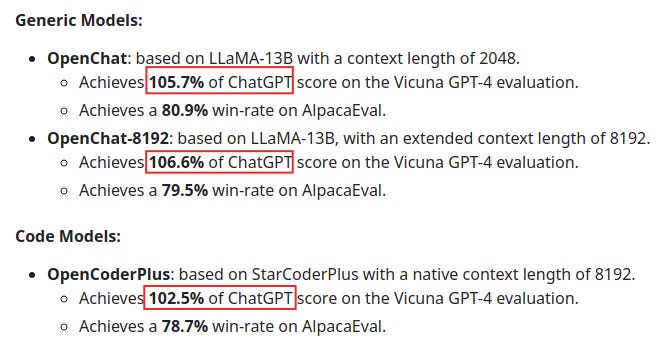

OpenChat is a collection of open-source language models trained on a diverse, high-quality dataset of multi-round conversations. The models were fine-tuned on roughly 6K GPT-4 conversations filtered from about 90K ShareGPT conversations. They are designed to achieve high performance with limited data.

The model comes in three versions: the basic OpenChat model, OpenChat-8192, and OpenCoderPlus.

AI designs CPU in <5 hours

A team of Chinese researchers published a paper describing how they used AI to design a fully functional CPU based on the RISC-V architecture, which is as fast as an Intel i486SX. They called it a “foundational step towards building self-evolving machines.” The AI model completed the design cycle in under 5 hours, shortening it by a factor of about 1,000.

SAM-PT: Video object segmentation with zero-shot tracking

Researchers introduced SAM-PT, an advanced method that expands the capabilities of the Segment Anything Model (SAM) to track and segment objects in dynamic videos. SAM-PT utilizes interactive prompts, such as points, to generate masks and achieves exceptional zero-shot performance in popular video object segmentation benchmarks, including DAVIS, YouTube-VOS, and MOSE. It takes a unique approach by leveraging robust and sparse point selection and propagation techniques.

To enhance the tracking accuracy, SAM-PT incorporates K-Medoids clustering for point initialization and a point re-initialization strategy.
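As a rough illustration of K-Medoids point initialization, the sketch below picks representative points from a set of 2D coordinates, such as pixels inside an object mask. It is a simplified, standard-library version and not SAM-PT's actual implementation. The function name, the deterministic "first k points" starting choice, and the toy coordinates are all assumptions made for the example, and the input points are assumed to be distinct.

```python
import math


def k_medoids(points, k, iterations=20):
    """Return k medoids (actual members of `points`) via alternating updates."""
    # Assumption for simplicity: start from the first k points.
    medoids = list(points[:k])
    for _ in range(iterations):
        # Assign every point to its nearest current medoid.
        clusters = {m: [] for m in medoids}
        for p in points:
            nearest = min(medoids, key=lambda m: math.dist(p, m))
            clusters[nearest].append(p)
        # In each cluster, keep the member with the smallest total distance
        # to all other members of that cluster.
        new_medoids = [
            min(members, key=lambda c: sum(math.dist(c, q) for q in members))
            for members in clusters.values()
        ]
        if set(new_medoids) == set(medoids):
            break  # Medoids stopped changing.
        medoids = new_medoids
    return medoids


# Toy "mask" made of two well-separated groups of pixel coordinates.
mask_points = [(0, 0), (0, 1), (1, 0), (1, 1),
               (10, 10), (10, 11), (11, 10), (11, 11)]
query_points = k_medoids(mask_points, k=2)
print(query_points)
```

Because medoids are always real members of the input set, every selected point is guaranteed to lie on the object, which is what makes them usable as point prompts.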

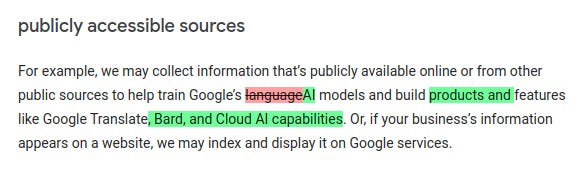

Google’s AI models to train on public data

Google has updated its privacy policy to state that it can use publicly available data to help train and create its AI models. The new wording appears in the policy as of July 1.

This suggests that Google is leaning heavily into its AI bid. Plus, harnessing humanity’s collective knowledge could redefine how AI learns and comprehends information.

LEDITS: Image editing with next-level AI capabilities

Hugging Face research has introduced LEDITS, a lightweight combined approach for real-image editing that pairs the Edit Friendly DDPM inversion technique with Semantic Guidance. It thereby extends Semantic Guidance to real-image editing while harnessing the editing capabilities of DDPM inversion.

OpenAI makes GPT-4 API and Code Interpreter available

The GPT-4 API is now available to all paying OpenAI API customers. The GPT-3.5 Turbo, DALL·E, and Whisper APIs are also now generally available, and OpenAI has announced a deprecation plan for some of the older models, which will be retired starting at the beginning of 2024.

Moreover, OpenAI’s Code Interpreter will be available to all ChatGPT Plus users over the next week. It lets ChatGPT run code, optionally with access to files you've uploaded. You can also ask ChatGPT to analyze data, create charts, edit files, perform math, etc.

Salesforce’s CodeGen2.5, a small but mighty code LLM

Salesforce’s CodeGen family of models allows users to “translate” natural language, such as English, into programming languages, such as Python. The family now has a new member: CodeGen2.5, a small but mighty LLM for code. Here’s a tl;dr:

Its smaller size means faster sampling, resulting in a speed improvement of 2x compared to CodeGen2. The small model easily allows for personalized assistants with local deployments.

InternLM: A model tailored for practical scenarios

InternLM has open-sourced a 7B parameter base model and a chat model tailored for practical scenarios. The model:

1) Leverages trillions of high-quality tokens for training to establish a powerful knowledge base;

2) Supports an 8k context window length, enabling longer input sequences and stronger reasoning capabilities;

3) Provides a versatile toolset for users to flexibly build their own workflows.

It is a 7B version of a 104B model that achieves SoTA performance in multiple aspects, including knowledge understanding, reading comprehension, mathematics, and coding. InternLM-7B outperforms LLaMA, Alpaca, and Vicuna on comprehensive exams, including MMLU, HumanEval, MATH, and more.

Microsoft’s LongNet scales transformers to 1B tokens

Microsoft Research’s recently introduced LongNet lets language models scale their context window to over 1 billion tokens without sacrificing performance on shorter sequences.

LongNet achieves this through dilated attention, which expands the model's attentive field exponentially as the distance between tokens grows.

This breakthrough offers significant advantages:

1) It maintains linear computational complexity and a logarithmic token dependency;

2) It can be used as a distributed trainer for extremely long sequences;

3) Its dilated attention can seamlessly replace standard attention in existing Transformer models.
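The sparsification pattern behind dilated attention can be sketched in a few lines. The example below only computes which positions would be kept inside each segment. It does not compute attention itself, and it is not LongNet's implementation. The function name and the specific (segment length, dilation) pairs are assumptions chosen for illustration.

```python
def dilated_segments(seq_len: int, segment_len: int, dilation: int) -> list[list[int]]:
    """Split positions 0..seq_len-1 into segments and keep every `dilation`-th one."""
    segments = []
    for start in range(0, seq_len, segment_len):
        end = min(start + segment_len, seq_len)
        segments.append(list(range(start, end, dilation)))
    return segments


# Illustrative (segment_len, dilation) pairs that grow geometrically together.
configs = [(4, 1), (8, 2), (16, 4)]
for segment_len, dilation in configs:
    kept = dilated_segments(16, segment_len, dilation)
    print(f"segment_len={segment_len} dilation={dilation} kept={kept}")
```

In this toy setup each segment keeps the same number of positions at every level, even though the segments themselves span more of the sequence. That is the intuition for how coverage can widen with distance while the work per segment stays fixed.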

OpenAI’s Superalignment - The next big goal!

OpenAI has launched Superalignment, a project dedicated to addressing the challenge of aligning artificial superintelligence with human intent. Over the next four years, 20% of OpenAI's computing power will be allocated to this endeavor. The project aims to develop scientific and technical breakthroughs by creating an AI-assisted automated alignment researcher.

This researcher will evaluate AI systems, automate searches for problematic behavior, and test alignment pipelines. Superalignment will comprise a team of leading machine learning researchers and engineers open to collaborating with talented individuals interested in solving the issue of aligning superintelligence.

AI can now detect and prevent wildfires

Cal Fire, the California Department of Forestry and Fire Protection, is using AI to help detect wildfires more effectively. Advanced cameras equipped with autonomous smoke detection capabilities are replacing the reliance on human eyes to spot potential fire outbreaks.

Detecting wildfires is challenging because they often start in remote areas with limited human presence and spread unpredictably depending on environmental factors. Addressing these challenges takes innovative solutions and increased vigilance so that wildfires can be identified and responded to in a timely manner.

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’ specifically designed for Engineering Leaders and AI enthusiasts.

Thanks for reading, and see you on Monday! 😊
