AI Weekly Rundown (January 20 to January 25)

Major AI announcements from Stability AI, Meta, ElevenLabs, TikTok, Google, Elon Musk, NVIDIA, and more.

Hello Engineering Leaders and AI Enthusiasts!

Another eventful week in the AI realm. Lots of big news from huge enterprises.

In today’s edition:

🚀 Stability AI introduces Stable LM 2 1.6B

🌑 Nightshade, the data poisoning tool, is now available in v1

🏆 AlphaCodium: A code generation tool that beats human competitors

🤖 Meta’s novel AI advances creative 3D applications

💰 ElevenLabs announces new AI products + Raised $80M

📐 TikTok's Depth Anything sets new standards for Depth Estimation

🆕 Google Chrome and Ads are getting new AI features

🎥 Google Research presents Lumiere for SoTA video generation

🔍 Binoculars can detect over 90% of ChatGPT-generated text

📖 Meta introduces guide on ‘Prompt Engineering with Llama 2’

🎬 NVIDIA’s AI RTX Video HDR transforms video to HDR quality

🤖 Google introduces a model for orchestrating robotic agents

Let’s go!

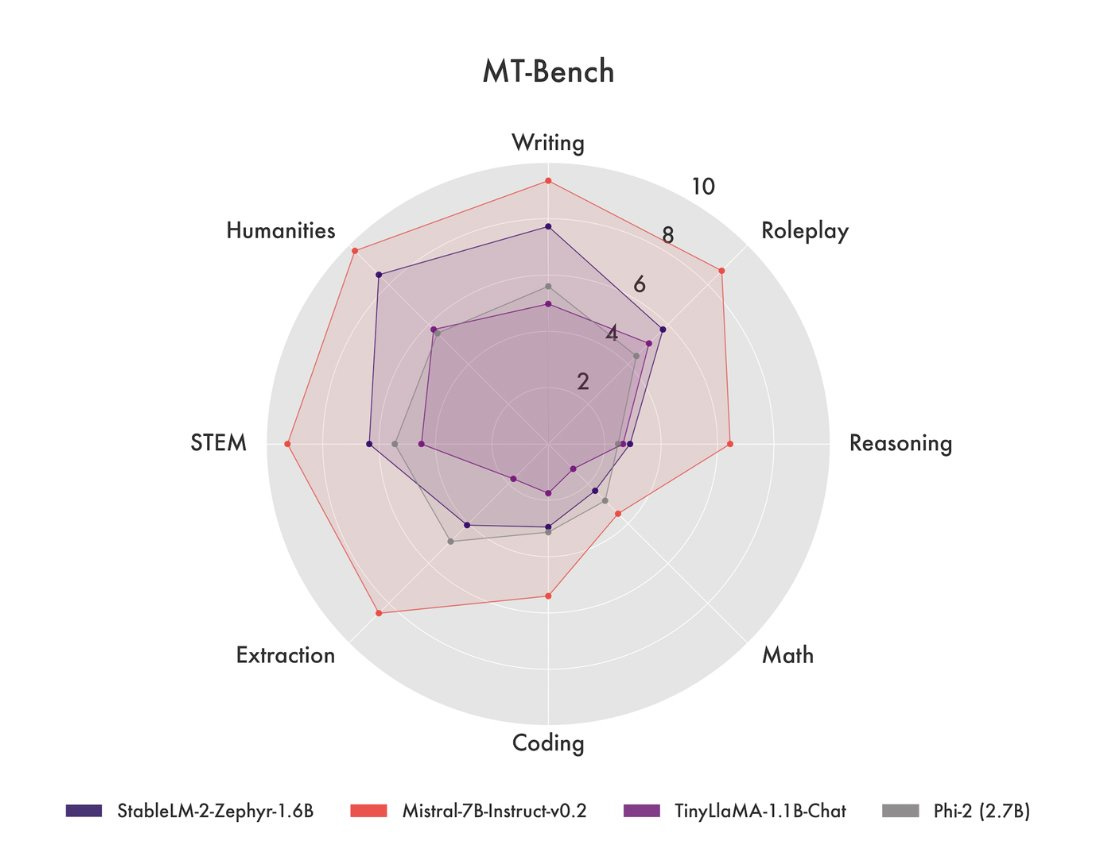

Stability AI introduces Stable LM 2 1.6B

Stability AI released Stable LM 2 1.6B, a state-of-the-art 1.6 billion parameter small language model trained on multilingual data in English, Spanish, German, Italian, French, Portuguese, and Dutch. It leverages recent algorithmic advancements in language modeling to strike a favorable balance between speed and performance, enabling fast experimentation and iteration with moderate resources.

According to Stability AI, the model outperforms other small language models with under 2 billion parameters on most benchmarks, including Microsoft’s Phi-2 (2.7B), TinyLlama 1.1B, and Falcon 1B. It is even able to surpass some larger models, including Stability AI’s own earlier Stable LM 3B model.

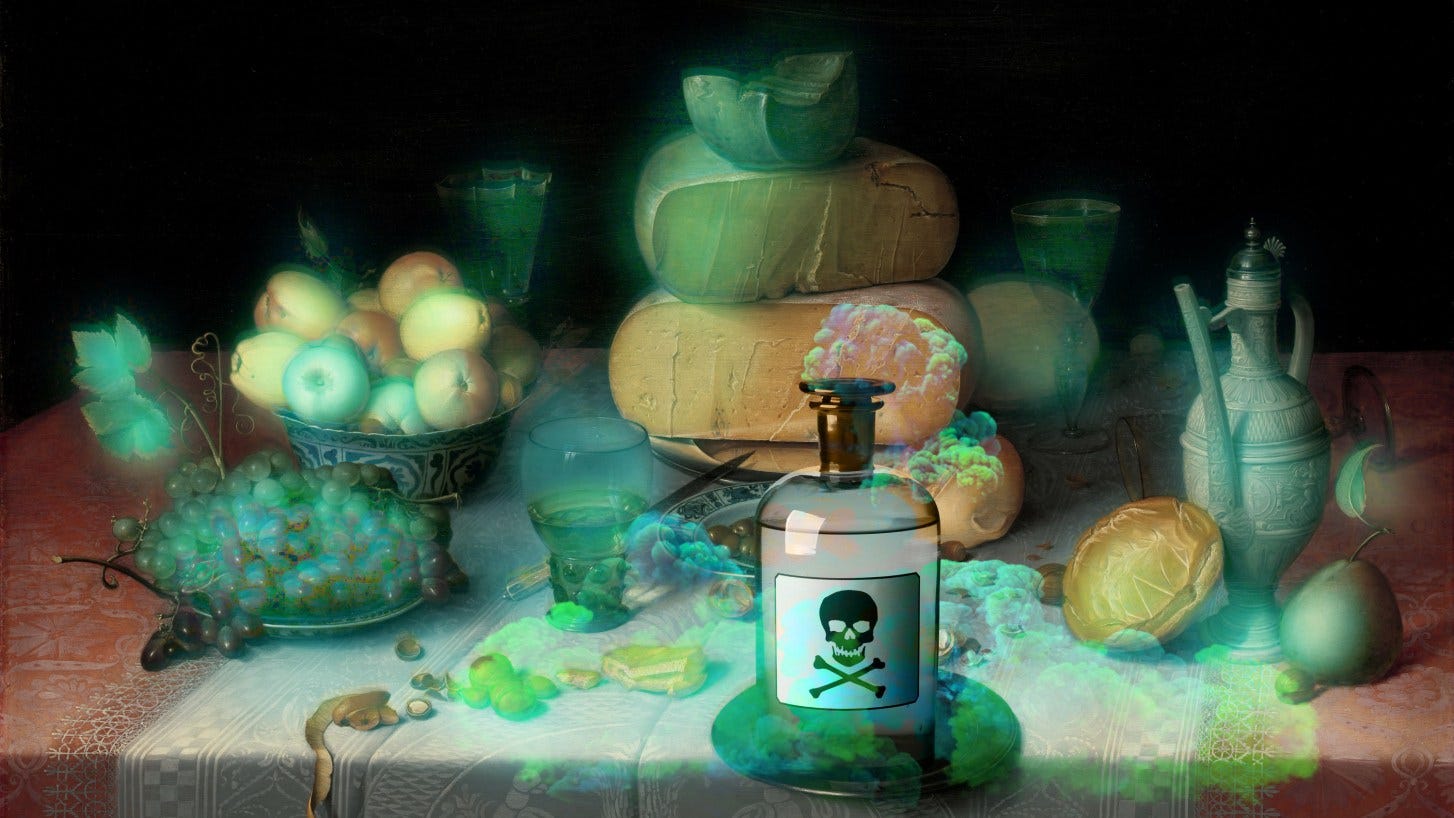

Nightshade, the data poisoning tool, is now available in v1

The University of Chicago’s Glaze Project has released Nightshade v1.0, which enables artists to sabotage generative AI models that ingest their work for training.

Glaze, the project's earlier tool, embeds imperceptible pixel changes in original images so that AI systems perceive a false style. For example, it can make a hand-drawn image look like a 3D rendering to a model.

Nightshade goes one step further: it is designed to use manipulated pixels to damage the model by confusing it. For example, the AI model might see a car instead of a train. Fewer than 100 of these "poisoned" images could be enough to corrupt an image AI model, the developers suspect.

AlphaCodium: A code generation tool that beats human competitors

AlphaCodium is a test-based, multi-stage, code-oriented iterative flow that improves the performance of LLMs on code problems. It was tested on a challenging code generation dataset called CodeContests, which includes competitive programming problems from platforms such as Codeforces. The proposed flow consistently and significantly improves results.

On the validation set, for example, GPT-4 accuracy (pass@5) increased from 19% with a single well-designed direct prompt to 44% with the AlphaCodium flow. It also beats DeepMind's AlphaCode and their new AlphaCode2 without needing to fine-tune a model.
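For readers unfamiliar with the pass@k metric, here is a minimal sketch of one widely used way to estimate it: generate n candidate solutions per problem, count the c that pass all tests, and compute the chance that at least one of k randomly drawn candidates is correct. The function name and the sample numbers are illustrative assumptions; the newsletter does not say exactly how the AlphaCodium authors computed their pass@5 figures.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem.

    n: total candidate solutions generated (assumed sample size)
    c: how many of those candidates passed every test
    k: how many candidates we are allowed to submit
    """
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    # Probability that all k drawn candidates are wrong is
    # C(n - c, k) / C(n, k); pass@k is the complement.
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # Hypothetical problem: 10 candidates generated, 1 is correct.
    print(pass_at_k(n=10, c=1, k=5))  # 0.5
```

A benchmark score is then the average of this value over all problems in the set.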

AlphaCodium is open source and works with any leading code generation model.

Meta’s novel AI advances creative 3D applications

Meta's paper introduces a new shape representation called Mosaic-SDF (M-SDF) for 3D generative models. M-SDF approximates a shape's Signed Distance Function (SDF) using a set of local grids spread near the shape's boundary.

This representation is:

Fast to compute

Parameter efficient

Compatible with Transformer-based architectures
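To make the SDF idea concrete, here is a small sketch that evaluates the signed distance function of a sphere on one small grid placed at a point on its surface. It is only an illustration of "local grids near the boundary": the sphere, the grid size, the helper names, and the sign convention (negative inside, positive outside) are all assumptions, and it does not reproduce how M-SDF actually places or fits its grids.

```python
import math


def sphere_sdf(point, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance to a sphere: negative inside, positive outside."""
    return math.dist(point, center) - radius


def local_grid(center, half_width, resolution):
    """Return resolution**3 sample points in a small cube around center.

    resolution must be at least 2 so the grid spans the whole cube.
    """
    step = 2 * half_width / (resolution - 1)
    axis = [-half_width + i * step for i in range(resolution)]
    return [
        (center[0] + dx, center[1] + dy, center[2] + dz)
        for dx in axis
        for dy in axis
        for dz in axis
    ]


# One hypothetical local grid centered on a surface point of the unit sphere.
boundary_point = (1.0, 0.0, 0.0)
samples = local_grid(boundary_point, half_width=0.1, resolution=3)
values = [sphere_sdf(p) for p in samples]

if __name__ == "__main__":
    # Values change sign across the grid because it straddles the surface.
    print(len(values), min(values) < 0 < max(values))
```

Storing values only in small grids like this one, rather than in one dense grid over the whole volume, is what keeps such a representation compact.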

The authors demonstrate M-SDF by training a 3D generative flow model, covering class-conditioned generation with the 3D Warehouse dataset and text-to-3D generation using caption-shape pairs.

Meta shared this update on Twitter.

ElevenLabs announces new AI products + Raised $80M

ElevenLabs has raised $80 million in a Series B funding round co-led by Andreessen Horowitz, Nat Friedman, and Daniel Gross. The funding will strengthen the company's position as a leader in voice AI research and product development.

ElevenLabs has also announced the release of new AI products, including a Dubbing Studio, a Voice Library marketplace, and a Mobile Reader App.

TikTok's Depth Anything sets new standards for Depth Estimation

This work introduces Depth Anything, a practical solution for robust monocular depth estimation. The approach focuses on scaling up the dataset by collecting and annotating large-scale unlabeled data. Two strategies are employed to improve the model's performance: creating a more challenging optimization target through data augmentation and using auxiliary supervision to incorporate semantic priors.

The model is evaluated on multiple datasets and demonstrates impressive generalization ability. Fine-tuning with metric depth information from NYUv2 and KITTI also leads to state-of-the-art results. The improved depth model also enhances the performance of the depth-conditioned ControlNet.

Google Chrome and Ads are getting new AI features

Google Chrome is getting three new experimental generative AI features:

Smartly organize your tabs: With Tab Organizer, Chrome will automatically suggest and create tab groups based on your open tabs.

Create your own themes with AI: You’ll be able to quickly generate custom themes based on a subject, mood, visual style, and color that you choose, with no need to become an AI prompt expert!

Get help drafting things on the web: A new feature will help you write with more confidence on the web, whether you want to leave a well-written review for a restaurant, craft a friendly RSVP for a party, or make a formal inquiry about an apartment rental.

In addition, Gemini will now power the conversational experience within the Google Ads platform. With this new update, it will be easier for advertisers to quickly build and scale Search ad campaigns.

Google Research presents Lumiere for SoTA video generation

Lumiere is a text-to-video (T2V) diffusion model designed for synthesizing videos that portray realistic, diverse, and coherent motion, a pivotal challenge in video synthesis. It demonstrates state-of-the-art T2V generation results, and its design readily supports a wide range of content creation tasks and video editing applications.

The approach introduces a new T2V diffusion framework that generates the full temporal duration of the video at once. This is achieved by using a Space-Time U-Net (STUNet) architecture that learns to downsample the signal in both space and time, and performs the majority of its computation in a compact space-time representation.
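As a rough illustration of what "downsampling in both space and time" means, the sketch below shrinks a tiny video by averaging blocks of neighboring frames and pixels together. This is not the STUNet architecture: Lumiere learns its downsampling, whereas this example uses fixed average pooling, and the function name and the nested-list video format are assumptions made for the example.

```python
def downsample_space_time(video, factor=2):
    """Average-pool a video over time, height, and width.

    video is a nested list indexed as video[t][y][x] holding float pixel
    values. Trailing frames, rows, or columns that do not fill a complete
    block are dropped.
    """
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    pooled = []
    for t in range(0, frames - frames % factor, factor):
        pooled_frame = []
        for y in range(0, height - height % factor, factor):
            pooled_row = []
            for x in range(0, width - width % factor, factor):
                # Collect one factor x factor x factor space-time block.
                block = [
                    video[t + dt][y + dy][x + dx]
                    for dt in range(factor)
                    for dy in range(factor)
                    for dx in range(factor)
                ]
                pooled_row.append(sum(block) / len(block))
            pooled_frame.append(pooled_row)
        pooled.append(pooled_frame)
    return pooled


if __name__ == "__main__":
    # A hypothetical 4-frame, 4x4-pixel video of constant brightness.
    video = [[[1.0] * 4 for _ in range(4)] for _ in range(4)]
    small = downsample_space_time(video)
    print(len(small), len(small[0]), len(small[0][0]))  # 2 2 2
```

With a factor of 2, each pooling step leaves one eighth as many values, which is why doing most of the computation in the compact representation is cheaper.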

Binoculars can detect over 90% of ChatGPT-generated text

Researchers have introduced a novel LLM detector that only requires simple calculations using a pair of pre-trained LLMs. The method, called Binoculars, achieves state-of-the-art accuracy without any training data.

It is capable of spotting machine text from a range of modern LLMs without any model-specific modifications. Researchers comprehensively evaluated Binoculars on a number of text sources and in varied situations. Over a wide range of document types, Binoculars detects over 90% of generated samples from ChatGPT (and other LLMs) at a false positive rate of 0.01%, despite not being trained on any ChatGPT data.
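The headline number pairs a detection rate with a false positive rate, and the two are linked through a decision threshold. The sketch below shows one common way to compute "detection rate at a fixed false positive rate" from detector scores. The scores are made up, the function name is hypothetical, and the convention that higher scores mean "more likely machine-written" is an assumption for the example; it does not implement the Binoculars score itself.

```python
def detection_rate_at_fpr(human_scores, machine_scores, max_fpr):
    """Return the share of machine texts flagged when the threshold is
    set so that at most max_fpr of human texts are flagged.

    Assumes a higher score means "more likely machine-generated".
    """
    ranked_human = sorted(human_scores, reverse=True)
    # Number of human texts we may wrongly flag under the FPR budget.
    allowed = min(int(max_fpr * len(ranked_human)), len(ranked_human) - 1)
    # Flag only scores strictly above this human score, so at most
    # `allowed` human texts end up flagged.
    threshold = ranked_human[allowed]
    flagged = sum(1 for score in machine_scores if score > threshold)
    return flagged / len(machine_scores)


if __name__ == "__main__":
    # Made-up scores for illustration only.
    human = [0.1, 0.2, 0.3, 0.4]
    machine = [0.05, 0.35, 0.5, 0.6]
    print(detection_rate_at_fpr(human, machine, max_fpr=0.0))   # 0.5
    print(detection_rate_at_fpr(human, machine, max_fpr=0.25))  # 0.75
```

A false positive rate of 0.01% corresponds to wrongly flagging about 1 in every 10,000 human-written documents, so measuring it reliably takes a large set of human text.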

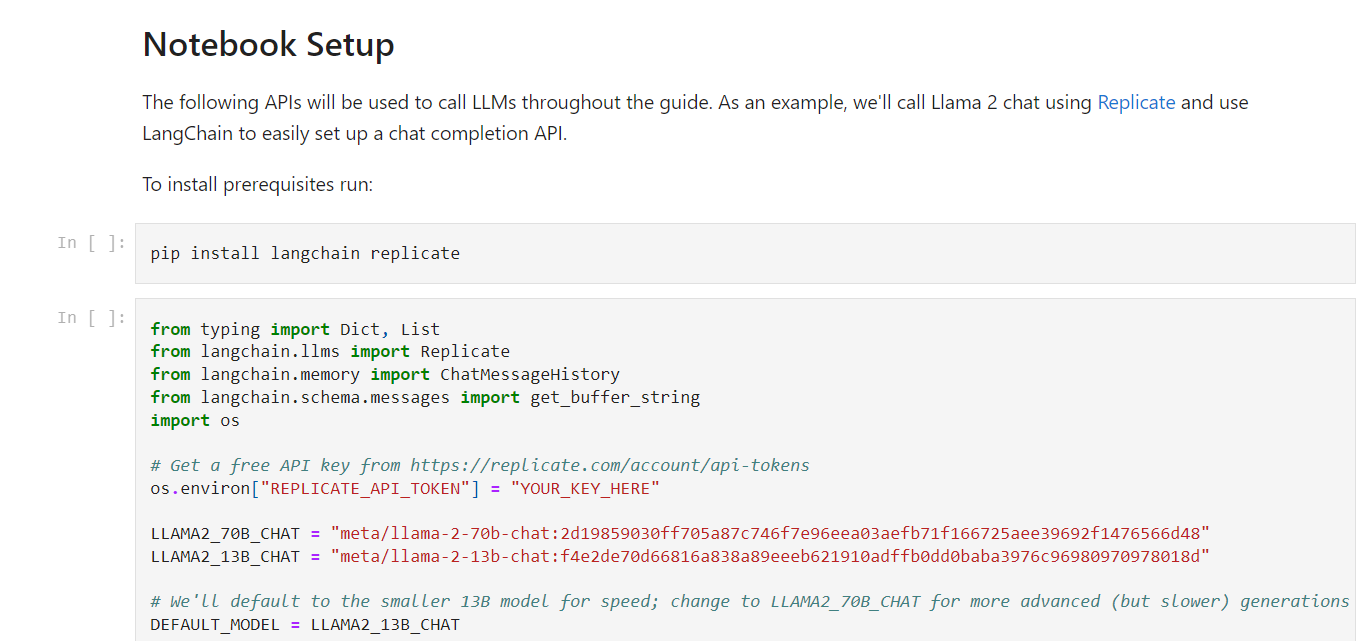

Meta releases guide on ‘Prompt Engineering with Llama 2’

Meta has introduced 'Prompt Engineering with Llama 2', an interactive guide created by research teams at Meta. It covers prompt engineering and best practices for developers, researchers, and enthusiasts who work with LLMs and want stronger outputs. It is a new resource for the Llama community.

Access the Jupyter Notebook in the llama-recipes repo ➡️ https://bit.ly/3vLzWRL

NVIDIA introduces AI RTX Video HDR, which transforms video to HDR quality

NVIDIA released RTX Video HDR, which uses AI to convert standard dynamic range (SDR) video to HDR quality. It works alongside RTX Video Super Resolution. The HDR feature requires an HDR10-compliant monitor.

RTX Video HDR is available in Chromium-based browsers, including Google Chrome and Microsoft Edge. To enable the feature, users must download and install the January Studio driver, enable Windows HDR capabilities, and enable HDR in the NVIDIA Control Panel under "RTX Video Enhancement."

Google introduces a model for orchestrating robotic agents

Google introduces AutoRT, a model for orchestrating large-scale robotic agents. It's a system that uses existing foundation models to deploy robots in new scenarios with minimal human supervision. AutoRT leverages vision-language models for scene understanding and grounding and LLMs for proposing instructions to a fleet of robots.

By tapping into the knowledge of foundation models, AutoRT can reason about autonomy and safety while scaling up data collection for robot learning. The system successfully collects diverse data from over 20 robots in multiple buildings, demonstrating its ability to align with human preferences.

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From ML to ChatGPT to generative AI and LLMs, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you on Monday. 😊