AI Weekly Rundown (January 26 to February 02)

Major AI announcements from OpenAI, Google, Meta, Amazon, Shopify, and more.

Hello Engineering Leaders and AI Enthusiasts!

Another eventful week in the AI realm. Lots of big news from huge enterprises.

In today’s edition:

🚀 OpenAI reveals new models, drops prices, and fixes ‘lazy’ GPT-4

🌌 Prophetic company wants AI to enter your dreams

📊 The recent advances in Multimodal LLM

🔝 Meta released Code Llama 70B, rivals GPT-4

🧠 Neuralink implants its brain chip in the first human

🌐 Alibaba announces Qwen-VL; beats GPT-4V and Gemini

🔍 Microsoft's annual report predicts AI's impact on jobs

✨ Amazon’s new AI helps enhance virtual try-on experiences

🤖 Cambridge’s braille-reading robot is 2x faster than humans

🛍️ Shopify boosts its commerce platform with AI enhancements

🚫 OpenAI explores how good GPT-4 is at creating bioweapons

💡 LLaVA-1.6: Improved reasoning, OCR, and world knowledge

🔥 Google bets big on AI with huge upgrades

🛒 Amazon launches an AI shopping assistant for mobile app

💼 Meta to deploy in-house chips to reduce dependence on NVIDIA

Let’s go!

OpenAI reveals new models, drops prices, and fixes ‘lazy’ GPT-4

OpenAI announced a new generation of embedding models, new GPT-4 Turbo and moderation models, new API usage management tools, and lower pricing on GPT-3.5 Turbo.

The new models include:

2 new embedding models

An updated GPT-4 Turbo preview model

An updated GPT-3.5 Turbo model

An updated text moderation model

Also:

New ways for developers to manage API keys and understand API usage

A ‘GPT mentions’ feature quietly added to ChatGPT (no official announcement yet), which lets users bring GPTs into a conversation by tagging them with an ‘@’

Prophetic wants AI to enter your dreams

Prophetic introduced Morpheus-1, which it describes as the world's first ‘multimodal generative ultrasonic transformer’. The AI device is designed to explore human consciousness by inducing and controlling lucid dreams. Morpheus-1 monitors sleep phases and gathers dream data to improve its AI model.

The device is set to be accessible to beta users in the spring of 2024.

You can sign up for their beta program here.

The recent advances in Multimodal LLM

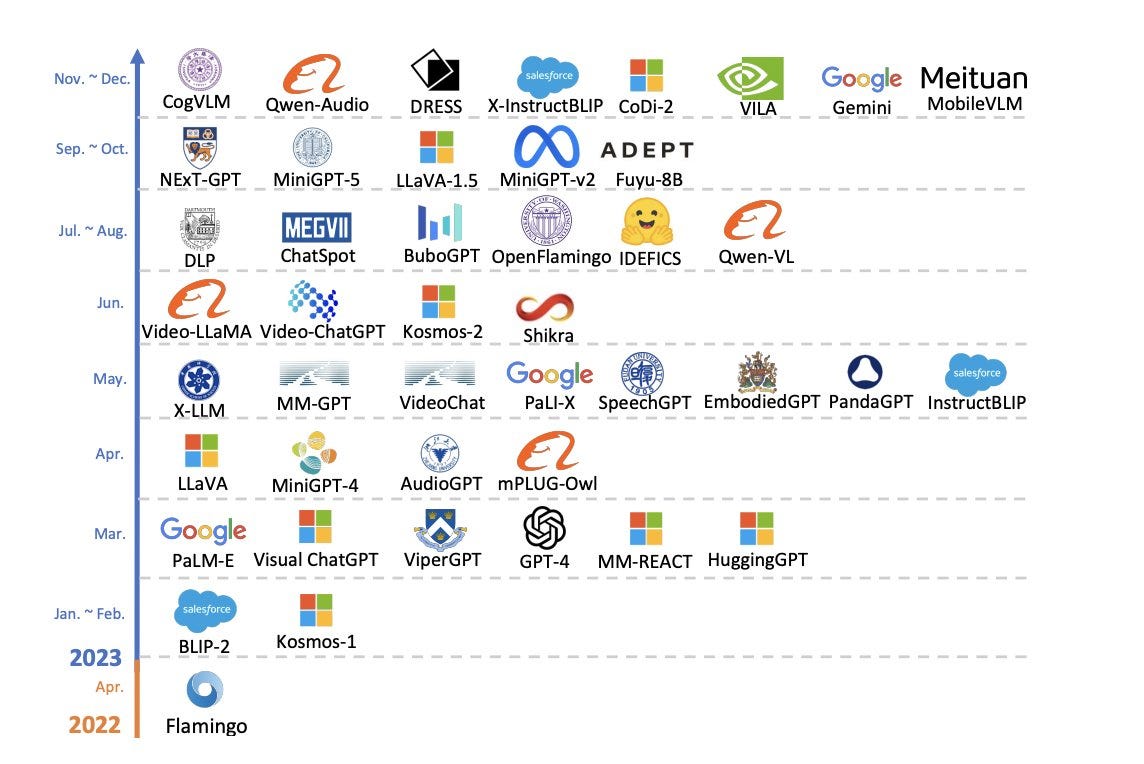

This paper ‘MM-LLMs’ discusses recent advancements in MultiModal LLMs which combine language understanding with multimodal inputs or outputs. The authors provide an overview of the design and training of MM-LLMs, introduce 26 existing models, and review their performance on various benchmarks.

(Above is the timeline of MM-LLMs)

They also share key training techniques to improve MM-LLMs and suggest future research directions.

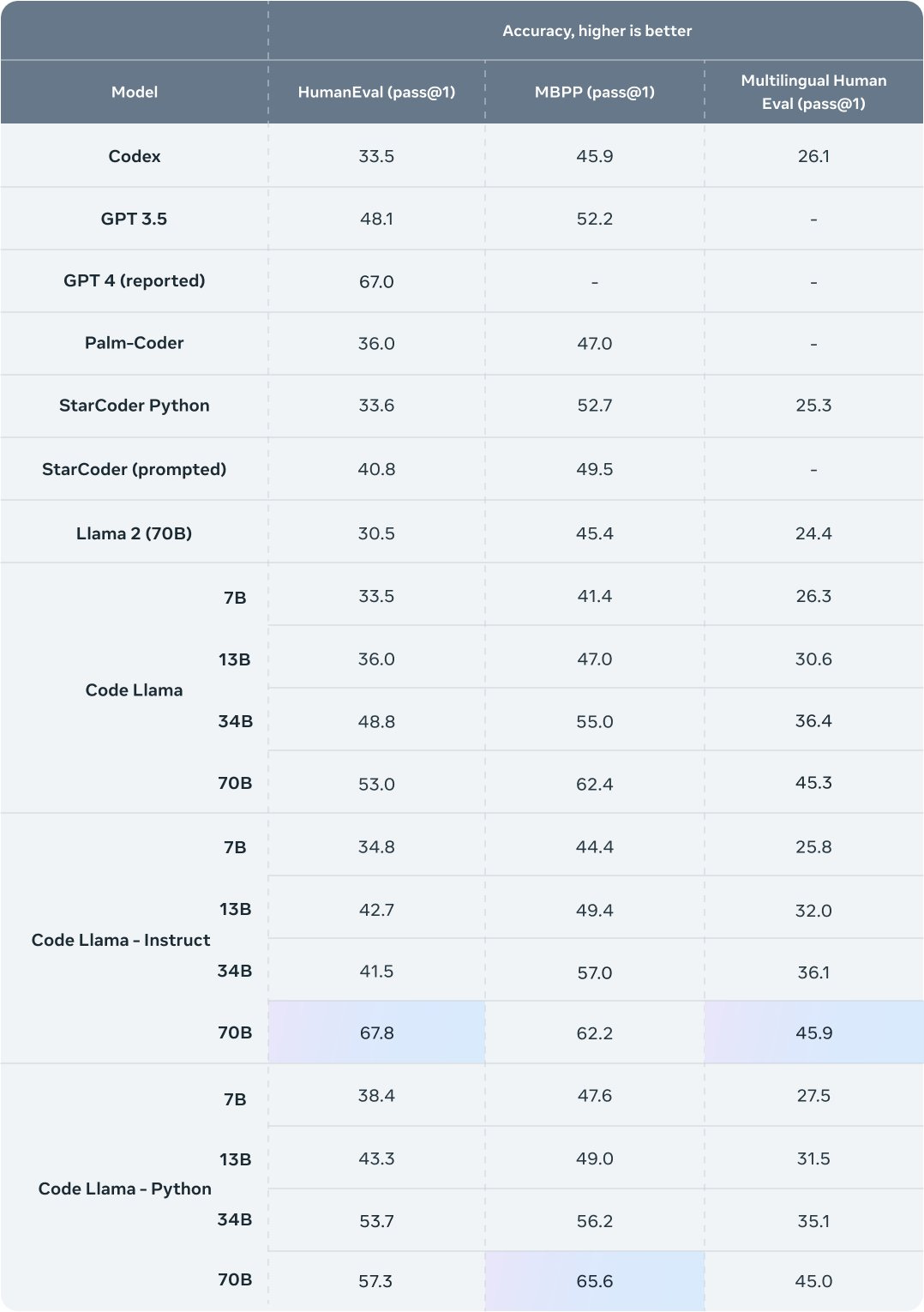

Meta released Code Llama 70B, rivals GPT-4

Meta released Code Llama 70B, a new, more performant version of its LLM for code generation. It is available under the same license as previous Code Llama models, in three variants:

CodeLlama-70B

CodeLlama-70B-Python

CodeLlama-70B-Instruct

CodeLlama-70B-Instruct achieves 67.8 on HumanEval, making it one of the highest-performing open models available today. CodeLlama-70B is the most performant base for fine-tuning code generation models.
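For readers wondering what a HumanEval score measures: results on this benchmark are usually reported as pass@k, the estimated probability that at least one of k generated solutions passes a problem's unit tests. The sketch below assumes the 67.8 figure is a pass@1 score expressed as a percentage (the announcement summary above does not say), and the per-problem sample counts are hypothetical values chosen only to illustrate the arithmetic.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that passed the tests, k: budget.
    Computes 1 - C(n - c, k) / C(n, k).
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over all problems, as a percentage."""
    per_problem = [pass_at_k(n, c, k) for n, c in results]
    return 100.0 * sum(per_problem) / len(per_problem)


# Hypothetical (n, c) pairs for four problems, 10 samples each.
hypothetical_results = [(10, 10), (10, 7), (10, 0), (10, 3)]
print(f"pass@1 = {benchmark_score(hypothetical_results, k=1):.1f}")
```

With k=1 the estimator reduces to the fraction of samples that pass, so the benchmark score is simply the average pass rate across problems.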

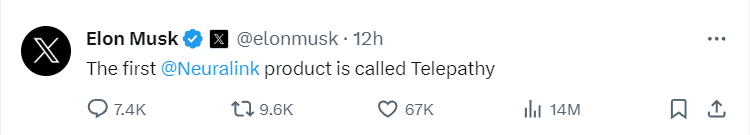

Neuralink implants its brain chip in the first human

In a first, Elon Musk’s brain-machine interface startup, Neuralink, has successfully implanted its brain chip in a human. In a post on X, Musk said "promising" brain activity had been detected after the procedure and the patient was "recovering well".

The company's goal is to connect human brains to computers to help tackle complex neurological conditions. It was given permission to test the chip on humans by the FDA in May 2023.

Alibaba announces Qwen-VL; beats GPT-4V and Gemini

Alibaba’s Qwen-VL series has undergone a significant upgrade with the launch of two enhanced versions, Qwen-VL-Plus and Qwen-VL-Max. The key technical advancements in these versions include:

Substantial boost in image-related reasoning capabilities;

Considerable enhancement in recognizing, extracting, and analyzing details within images and texts contained therein;

Support for high-definition images with resolutions above one million pixels and images of various aspect ratios.

Both models improve markedly on the open-source version of Qwen-VL. They perform on par with Gemini Ultra and GPT-4V in multiple text-image multimodal tasks, significantly surpassing the previous best results from open-source models.

Microsoft's annual report predicts AI's impact on jobs

Microsoft released its annual ‘Future of Work 2023’ report with a focus on AI. It highlights two major shifts in how work has been done over the past three years, driven by remote and hybrid work technologies and the advancement of Gen AI. This year's edition focuses on integrating LLMs into work and offers a unique perspective on areas that deserve attention.

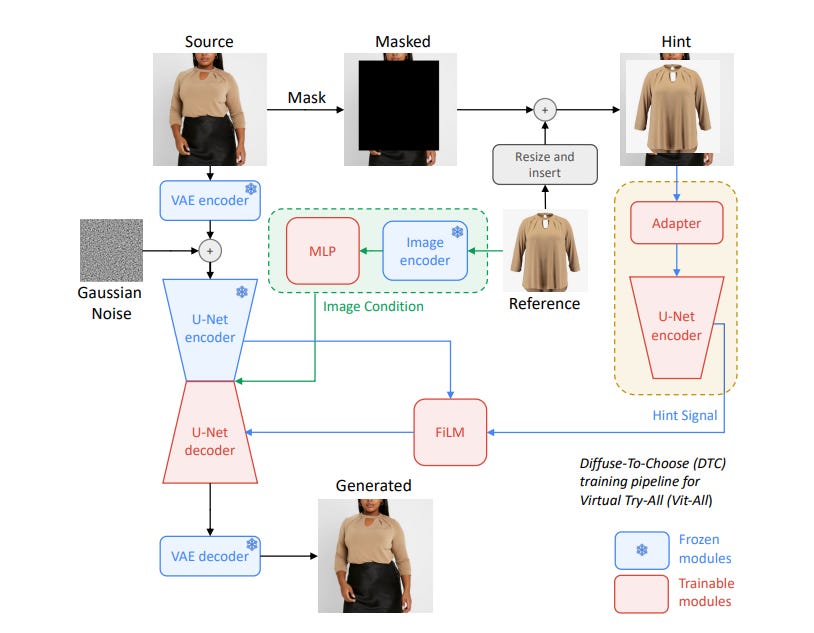

Amazon’s new AI helps enhance virtual try-on experiences

Amazon researchers have developed the “Diffuse to Choose” AI tool. It's a new image inpainting model that combines the strengths of diffusion models and personalization-driven models. It allows customers to virtually place products from online stores into their homes to visualize fit and appearance in real time.

Cambridge researchers develop braille-reading robots 2x faster than humans

Cambridge researchers developed a robotic sensor that reads braille 2x faster than humans. The sensor, which incorporates AI techniques, was able to read braille at 315 words per minute with 90% accuracy.

While the robot was not developed as an assistive technology, the high sensitivity required to read braille makes it ideal for testing the development of robot hands or prosthetics with comparable sensitivity to human fingertips. The researchers used ML algorithms to train the robotic sensor to quickly slide over lines of braille text and accurately recognize the letters.

Shopify boosts its commerce platform with AI enhancements

Shopify unveiled over 100 new updates to its commerce platform, with AI emerging as a key theme. The new AI-powered capabilities are aimed at helping merchants work smarter, sell more, and create better customer experiences.

The headline feature is Shopify Magic, which applies different AI models to assist merchants in various ways. This includes automatically generating product descriptions, FAQ pages, and other marketing copy.

On the marketing front, Shopify is infusing its Audiences ad targeting tool with more AI to optimize campaign performance. Its new semantic search capability better understands search intent using natural language processing.

OpenAI explores how good GPT-4 is at creating bioweapons

OpenAI is developing a blueprint for evaluating the risk that a large language model (LLM) could aid someone in creating a biological threat.

In an evaluation involving both biology experts and students, it found that GPT-4 provides at most a mild uplift in biological threat creation accuracy. While this uplift is not large enough to be conclusive, the finding is a starting point for continued research and community deliberation.

LLaVA-1.6: Improved reasoning, OCR, and world knowledge

LLaVA-1.6 releases with improved reasoning, OCR, and world knowledge. It even exceeds Gemini Pro on several benchmarks. Compared with LLaVA-1.5, LLaVA-1.6 has several improvements:

Increasing the input image resolution to 4x more pixels.

Better visual reasoning and OCR capability with an improved visual instruction tuning data mixture.

Better visual conversation for more scenarios, covering different applications.

Better world knowledge and logical reasoning.

Efficient deployment and inference with SGLang.

Along with performance improvements, LLaVA-1.6 maintains the minimalist design and data efficiency of LLaVA-1.5. The largest 34B variant finishes training in ~1 day with 32 A100s.
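To put the training figure in perspective, a quick back-of-the-envelope calculation converts "32 A100s for about a day" into total GPU-hours. This is only a rough sketch: it treats "~1 day" as exactly 24 hours, which is an assumption, and the function name is illustrative.

```python
def gpu_hours(num_gpus: int, wall_clock_hours: float) -> float:
    """Total accelerator time: GPUs running in parallel times elapsed hours."""
    return num_gpus * wall_clock_hours


# Figures from the text: 32 A100s, roughly one day (assumed to be 24 hours).
llava_34b_gpu_hours = gpu_hours(num_gpus=32, wall_clock_hours=24.0)
print(f"~{llava_34b_gpu_hours:.0f} A100-hours")  # ~768 A100-hours
```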

Google rolls out huge AI upgrades:

1. Launches an AI image generator - ImageFX

It allows users to create and edit images using a prompt-based UI. It offers an "expressive chips" feature, which provides keyword suggestions to experiment with different dimensions of image creation. Google claims to have implemented technical safeguards to prevent the tool from being used for abusive or inappropriate content.

Additionally, images generated using ImageFX will be tagged with a digital watermark called SynthID for identification purposes. Google is also expanding the use of Imagen 2, the image model, across its products and services.

2. Google has released two new AI tools for music creation: MusicFX and TextFX

MusicFX generates music based on user prompts but has limitations with stringed instruments and filters out copyrighted content.

TextFX, conversely, is a suite of modules designed to aid in the lyrics-writing process, drawing inspiration from rap artist Lupe Fiasco.

3. Google’s Bard is now Gemini Pro-powered globally, supporting 40+ languages

The chatbot gains stronger capabilities in understanding and summarizing content, reasoning, brainstorming, writing, and planning. Google has also extended its "Double check" feature, which evaluates whether search results are similar to what Bard generates, to more than 40 languages.

4. Google's Bard can now generate photos using its Imagen 2 text-to-image model

Bard's image generation feature is free, and Google has implemented safety measures to avoid generating explicit or offensive content.

5. Google Maps introduces a new AI feature to help users discover new places

The feature uses LLMs to analyze over 250M locations and contributions from over 300M Local Guides. Users can search for specific recommendations, and the AI will generate suggestions based on their preferences. It’s currently being rolled out in the US.

Amazon launches an AI shopping assistant for product recommendations

Amazon has launched an AI-powered shopping assistant called Rufus in its mobile app. Rufus is trained on Amazon's product catalog and information from the web, allowing customers to chat with it to get help with finding products, comparing them, and getting recommendations.

The AI assistant will initially be available in beta to select US customers, with plans to expand to more users in the coming weeks. Customers can type or speak their questions into the chat dialog box, and Rufus will provide answers based on its training.

Meta to deploy custom in-house chips to reduce dependence on costly NVIDIA

Meta plans to deploy a new version of its custom chip aimed at supporting its AI push in its data centers this year, according to an internal company document. The chip, a second generation of Meta's in-house silicon line, could help reduce the company's dependence on Nvidia chips and control the costs associated with running AI workloads. The chip will work in coordination with commercially available graphics processing units (GPUs).

That's all for now!

Subscribe to The AI Edge and gain exclusive access to content enjoyed by professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other esteemed organizations.

Thanks for reading, and see you on Monday. 😊