AI Weekly Rundown (February 17 to February 23)

Major AI announcements from NVIDIA, Apple, Google, Adobe, Meta, and more.

Hello Engineering Leaders and AI Enthusiasts!

Another eventful week in the AI realm. Lots of big news from huge enterprises.

In today’s edition:

🚀 NVIDIA's new dataset sharpens LLMs in math

🌟 Apple is working on AI updates for Spotlight and Xcode

🤖 Google open-sources Magika, its AI-powered file-type identifier

⏩ Groq’s new AI chip outperforms ChatGPT

📊 BABILong: The new benchmark to assess LLMs for long docs

👥 Stanford’s AI identifies sex from brain scans with 90% accuracy

📃 Adobe’s new AI assistant manages your docs

🎤 Meta released Aria recordings to fuel smart speech recognition

🔥 Penn's AI chip runs on light, not electricity

💡 Google releases its first open-source LLM

♾️ AnyGPT: A major step towards artificial general intelligence

💻 Google launches Gemini for Workspace

🖼️ Stable Diffusion 3: A multi-subject prompting text-to-image model

🔍 LongRoPE: Extending LLM context window beyond 2M tokens

📝 Google Chrome introduces "Help me write" AI feature

Let’s go!

NVIDIA's new dataset sharpens LLMs in math

NVIDIA has released OpenMathInstruct-1, an open-source math instruction tuning dataset with 1.8M problem-solution pairs. The dataset is high-quality and synthetically generated, 4x larger than previous ones, and built without GPT-4. It is constructed by synthesizing code-interpreter solutions for GSM8K and MATH, two popular math reasoning benchmarks, using the Mixtral model.

The best model, OpenMath-CodeLlama-70B, trained on a subset of OpenMathInstruct-1, achieves a score of 84.6% on GSM8K and 50.7% on MATH, which is competitive with the best GPT-distilled models.

Apple is working on AI updates to Spotlight and Xcode

Apple has expanded internal testing of new generative AI features for its Xcode programming software and plans to release them to third-party developers this year.

Furthermore, it is looking at potential uses for generative AI in consumer-facing products, like automatic playlist creation in Apple Music, slideshows in Keynote, or Spotlight search. AI chatbot-like search features for Spotlight could let iOS and macOS users make natural language requests, like with ChatGPT, to get weather reports or operate features deep within apps.

Google open-sources Magika, its AI-powered file-type identifier

Google has open-sourced Magika, its AI-powered file-type identification system, to help others accurately detect binary and textual file types. Magika employs a custom, highly optimized deep-learning model, enabling precise file identification within milliseconds, even when running on a CPU.

Magika, thanks to its AI model and large training dataset, is able to outperform other existing tools by about 20%. It has greater performance gains on textual files, including code files and configuration files that other tools can struggle with.

Internally, Magika is used at scale to help improve Google users’ safety by routing Gmail, Drive, and Safe Browsing files to the proper security and content policy scanners.

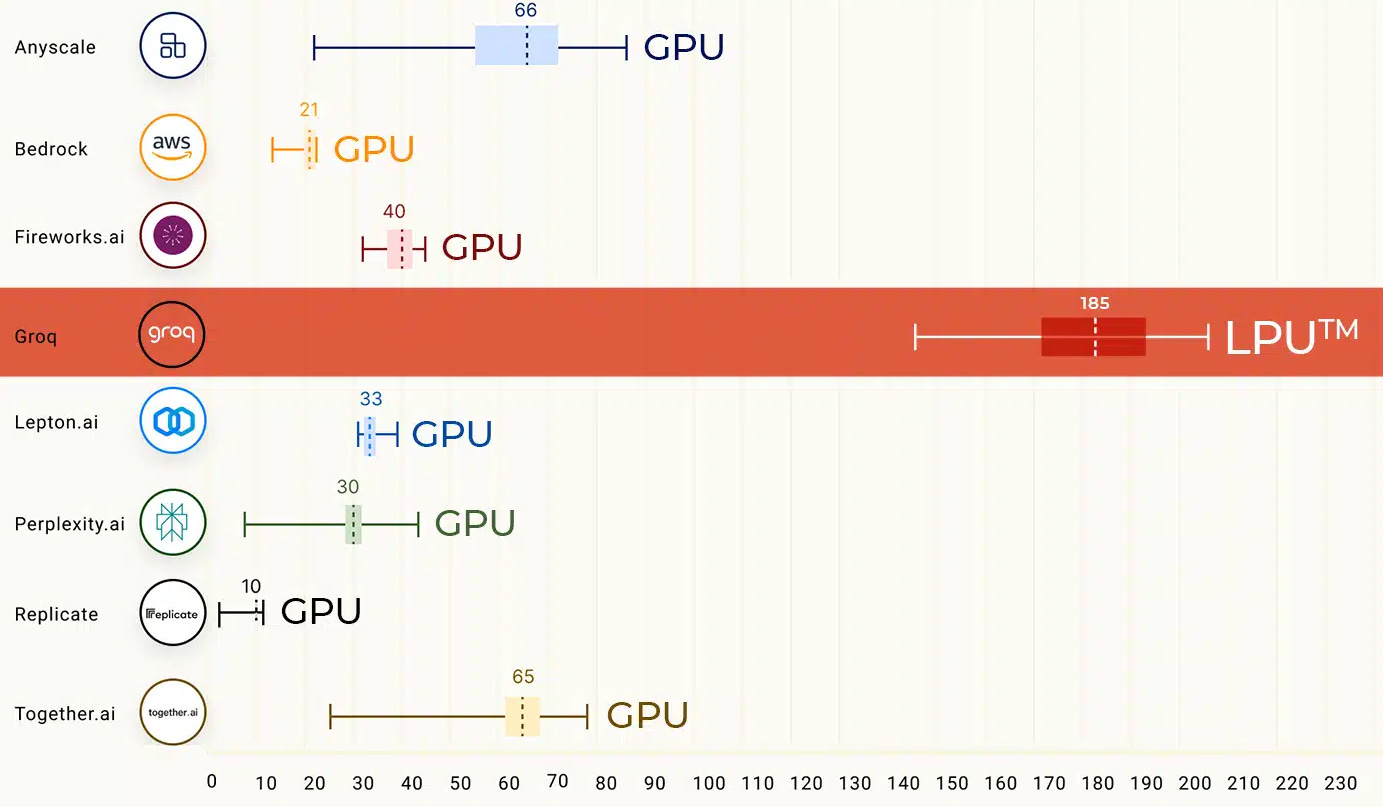

Groq’s new AI chip turbocharges LLMs, outperforms ChatGPT

Groq has developed specialized AI hardware, which it calls the first-ever Language Processing Unit (LPU), that aims to speed up AI models that normally run on GPUs. These LPUs can process up to 500 tokens/second, far more than Gemini Pro and ChatGPT-3.5, which process only between 30 and 50 tokens/second.

The company has named the LPU-based AI chip “GroqChip,” which uses a "tensor streaming architecture" that is less complex than traditional GPUs, enabling lower latency and higher throughput. This makes the chip a suitable candidate for real-time AI applications such as live-streaming sports or gaming.
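To make the throughput figures concrete, here is a quick back-of-the-envelope sketch. It assumes a hypothetical 1,000-token response and treats the quoted tokens/second numbers as steady generation rates; real latency also depends on factors not covered here.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a constant generation rate."""
    return num_tokens / tokens_per_second


# Hypothetical response length, chosen only for illustration.
RESPONSE_TOKENS = 1000

# Rates quoted in the article: up to 500 tokens/s on the LPU,
# between 30 and 50 tokens/s for the compared services.
lpu_time = generation_seconds(RESPONSE_TOKENS, 500)
fast_baseline_time = generation_seconds(RESPONSE_TOKENS, 50)
slow_baseline_time = generation_seconds(RESPONSE_TOKENS, 30)

print(f"LPU:      {lpu_time:.1f} s")
print(f"Baseline: {fast_baseline_time:.1f} s to {slow_baseline_time:.1f} s")
print(f"Speedup:  {fast_baseline_time / lpu_time:.0f}x to "
      f"{slow_baseline_time / lpu_time:.1f}x")
```

Under these assumptions, the same response takes about 2 seconds instead of 20 to 33 seconds, which is the kind of gap that matters for real-time applications.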

BABILong: The new benchmark to assess LLMs for long docs

A new research paper examines the limitations of current generative transformer models like GPT-4 when processing lengthy documents. It finds that GPT-4 and RAG depend heavily on the first 25% of the input, which leaves room for improvement. To address this, the authors propose adding recurrent memory augmentation to the transformer model.

The study introduces a new benchmark called BABILong (Benchmark for Artificial Intelligence for Long-context evaluation) and uses it to evaluate GPT-4, RAG, and RMT (Recurrent Memory Transformer). Results show that conventional methods are effective only for sequences of up to 10^4 elements, while GPT-2 fine-tuned with recurrent memory augmentations handles tasks involving up to 10^7 elements.

Stanford’s AI model identifies sex from brain scans with 90% accuracy

Stanford medical researchers have developed an AI model that determines the sex of individuals from brain scans with over 90% accuracy. The model focuses on dynamic MRI scans and identifies specific brain networks (such as the default mode, striatum, and limbic networks) as critical in distinguishing male from female brains.

Adobe’s new AI assistant manages your docs

Adobe launched an AI assistant feature in its Acrobat software to help users navigate documents. It summarizes content, answers questions, and generates formatted overviews. The chatbot aims to save time working with long files and complex information.

Additionally, Adobe created a dedicated 50-person AI research team called CAVA (Co-Creation for Audio, Video, & Animation) focused on advancing generative video, animation, and audio creation tools. The research group will explore integrating Adobe's existing creative tools with techniques like text-to-video generation.

Meta released Aria recordings to fuel smart speech recognition

Meta has released a multi-modal dataset of two-person conversations captured on Aria smart glasses. It contains audio across 7 microphones, video, motion sensors, and annotations. The glasses were worn by one participant while speaking spontaneously with another compensated contributor.

The dataset aims to advance research in areas like speech recognition, speaker ID, and translation for augmented reality interfaces. Its audio, visual, and motion signals together provide a rich capture of natural talking that could help train AI models. Such in-context glasses conversations can enable closed captioning and real-time language translation.

Penn's AI chip runs on light, not electricity

Penn engineers have developed a photonic chip that uses light waves for complex mathematics. It combines optical computing research by Professor Nader Engheta with nanoscale silicon photonics technology pioneered by Professor Firooz Aflatouni.

It allows accelerated AI computations with low power consumption and high performance. The design is ready for commercial production, including integration into graphics cards for AI development. Additional advantages include parallel processing without sensitive data storage.

Google releases its first open-source LLM

Google has open-sourced Gemma, a new family of state-of-the-art language models available in 2B and 7B parameter sizes. Despite being lightweight enough to run on laptops and desktops, Gemma models are built with the same technology used for Google’s massive proprietary Gemini models and achieve remarkable performance: the 7B Gemma model outperforms the 13B LLaMA model on many key natural language processing benchmarks.

In addition, Google released a Responsible Generative AI Toolkit to assist developers in building safe applications. This includes tools for robust safety classification, debugging model behavior, and implementing best practices for deployment based on Google’s experience. Gemma is available on Google Cloud, Kaggle, Colab, and a few other platforms with incentives like free credits to get started.

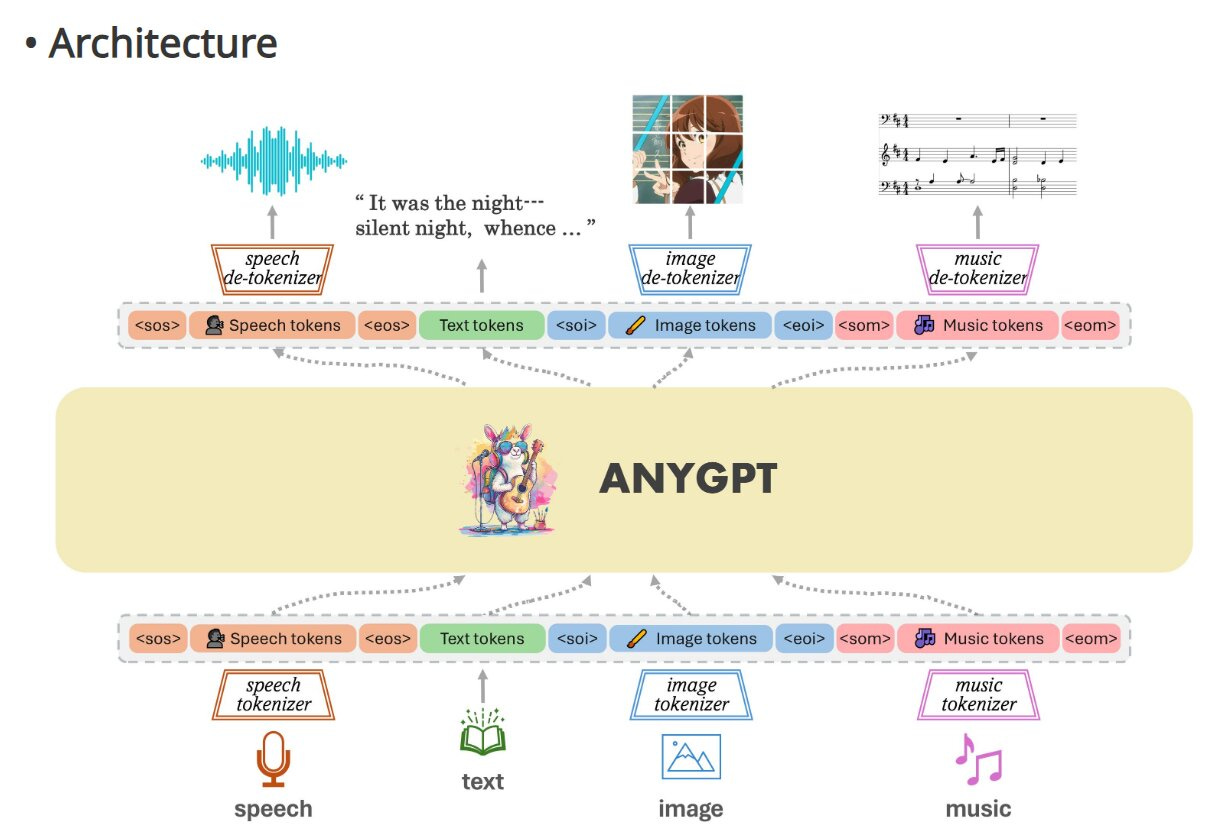

AnyGPT: A major step towards artificial general intelligence

Researchers in Shanghai have achieved a breakthrough in AI capabilities with AnyGPT, a new model that can understand and generate data in virtually any modality, including text, speech, images, and music. AnyGPT uses a discrete representation approach that allows a single underlying language model architecture to process multiple modalities as inputs and outputs.

Initial experiments show that AnyGPT achieves zero-shot performance comparable to specialized models across various modalities.

Google launches Gemini for Workspace to bring advanced AI to business users

Google has rebranded its Duet AI for Workspace offering as Gemini for Workspace. This brings the capabilities of Gemini, Google's most advanced AI model, into Workspace apps like Docs, Sheets, and Slides to help business users be more productive.

The new Gemini add-on comes in two tiers: a Business version for SMBs and an Enterprise version. Both provide AI-powered features like enhanced writing and data analysis, but Enterprise offers more advanced capabilities. This offering pits Google against Microsoft, which has a similar Copilot experience for commercial users.

Stable Diffusion 3 creates jaw-dropping images from text!

Stability AI announced Stable Diffusion 3 in an early preview. It is a text-to-image model with improved performance in multi-subject prompts, image quality, and spelling abilities.

It has generated significant excitement in the AI community due to its superior image and text generation capabilities. This next-generation image tool promises better text generation, strong prompt adherence, and resistance to prompt leaking, ensuring the generated images match the requested prompts.

LongRoPE: Extending LLM context window beyond 2 million tokens

Researchers at Microsoft have introduced LongRoPE, a groundbreaking method that extends the context window of pre-trained large language models (LLMs) to an impressive 2048k tokens.

Current extended context windows are limited to around 128k tokens due to high fine-tuning costs, scarcity of long texts, and catastrophic values introduced by new token positions. LongRoPE overcomes these challenges.

Experiments on LLaMA2 and Mistral across various tasks demonstrate the effectiveness of LongRoPE. The extended models retain the original architecture with minor positional embedding modifications and optimizations.
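To put the context-window figures above in perspective, here is a small arithmetic sketch. It assumes "k" means 1,024 tokens, which the text does not state; with k read as 1,000, the total would be 2,048,000 tokens, still above 2 million. The variable names are illustrative only.

```python
# Assumption: "k" is read as 1,024 tokens (not stated in the article).
TOKENS_PER_K = 1024

longrope_window = 2048 * TOKENS_PER_K  # the 2048k window reported for LongRoPE
prior_window = 128 * TOKENS_PER_K      # the ~128k limit cited for earlier methods

# How many times larger the extended window is than the earlier limit.
extension_factor = longrope_window // prior_window

print(f"LongRoPE window: {longrope_window:,} tokens")
print(f"Earlier limit:   {prior_window:,} tokens")
print(f"Extension:       {extension_factor}x")
```

Read this way, the 2048k window is a 16-fold increase over the roughly 128k limit the article cites for earlier approaches.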

Google Chrome introduces "Help me write" AI feature

Google has recently rolled out an experimental AI feature called "Help me write" for its Chrome browser. This feature, powered by Gemini, aims to assist users in writing or refining text based on webpage content. It focuses on providing writing suggestions for short-form content, such as filling in digital surveys and reviews and drafting descriptions for items being sold online.

The tool can understand the webpage's context and pull relevant information into its suggestions, such as highlighting critical features mentioned on a product page for item reviews. Users can right-click on an open text field on any website to access the feature on Google Chrome.

This feature is currently only available for English-speaking Chrome users in the US on Mac and Windows PCs.

That's all for now!

Subscribe to The AI Edge and gain exclusive access to content enjoyed by professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other esteemed organizations.

Thanks for reading, and see you on Monday. 😊