AI Weekly Rundown (December 02 to December 08)

Major AI announcements from Meta, Runway, Microsoft, Apple, Google and more.

Hello Engineering Leaders and AI Enthusiasts!

Another eventful week in the AI realm. Lots of big news from huge enterprises, with major updates from Meta and Microsoft.

In today’s edition:

🧠 Meta’s Audiobox advances controllability for AI audio

📁 Mozilla lets you turn LLMs into single-file executables

🚀 Alibaba’s Animate Anyone may be the next breakthrough in AI animation

🤝 Runway partners with Getty Images to build enterprise AI tools

⚛️ IBM introduces next-gen Quantum Processor & Quantum System Two

📱 Microsoft’s ‘Seeing AI App’ is now on Android with 18 languages

🎉 Microsoft Copilot celebrates the first year with significant new innovations

🔍 Bing’s new “Deep Search” finds deeper, relevant results for complex queries

🧠 DeepMind’s new way for AI to learn from humans in real-time

🚀 Google launches Gemini, its largest, most capable model yet

📱 Meta’s new image AI and core AI experiences across its app family

🛠️ Apple quietly releases a framework, MLX, to build foundation models

🌟 Stability AI reveals StableLM Zephyr 3B, 60% smaller yet accurate

🦙 Meta launches Purple Llama for Safe AI development

👤 Meta released an update to Codec Avatars with lifelike animated faces

Let’s go!

Meta’s Audiobox advances controllability for AI audio

Audiobox is Meta’s new foundation research model for audio generation. The successor to Voicebox, it unifies generation and editing for speech, sound effects (short, discrete sounds such as a dog bark, a car horn, or a crack of thunder), and soundscapes, and it accepts a variety of input mechanisms to maximize controllability.

Most notably, Audiobox lets you describe the sound or type of speech you want in a natural language prompt. You can also combine text prompts with voice inputs, which makes it easy to create custom audio for a wide range of use cases.

Mozilla lets you turn LLMs into single-file executables

LLMs for local use are usually distributed as a set of weights in a multi-gigabyte file. The weights cannot be used on their own, which makes them harder to distribute and run than other software. A given model may also have been changed and tweaked over time, so different versions can produce different results.

To help with that, Mozilla’s innovation group has released llamafile, an open-source method of turning a set of weights into a single binary that runs on six different OSs (macOS, Windows, Linux, FreeBSD, OpenBSD, and NetBSD) without needing to be installed. This makes it dramatically easier to distribute and run LLMs and ensures that a particular version of an LLM remains consistent and reproducible.

Alibaba’s Animate Anyone may be the next breakthrough in AI animation

Alibaba Group researchers have proposed a novel framework tailored for character animation: Animate Anyone: Consistent and Controllable Image-to-Video Synthesis for Character Animation.

Despite the robust generative capabilities of diffusion models, challenges persist in image-to-video synthesis (especially character animation), where keeping fine details consistent over time remains a formidable problem.

This framework leverages the power of diffusion models. To preserve the consistency of intricacies from reference images, it uses ReferenceNet to merge detail features via spatial attention. To ensure controllability and continuity, it introduces an efficient pose guide. It achieves SoTA results on benchmarks for fashion video and human dance synthesis.

Runway partners with Getty Images to build enterprise AI tools

Runway is partnering with Getty Images to develop AI tools for enterprise customers. This collaboration will result in a new video model that combines Runway's technology with Getty Images' licensed creative content library.

This model will allow companies to create high-quality, customized video content by fine-tuning the baseline model with their own proprietary datasets. It will be available for commercial use in the coming months. RunwayML currently has a waiting list.

IBM introduces next-gen Quantum Processor & Quantum System Two

IBM has unveiled its next-generation quantum processor, IBM Quantum Heron, which offers a five-fold improvement in error reduction compared to its predecessor.

IBM Quantum System Two, the company's first modular quantum computer, has begun operations with three IBM Heron processors.

IBM has extended its Quantum Development Roadmap to 2033, with a focus on improving gate operations to scale with quality towards advanced error-corrected systems.

Additionally, IBM announced Qiskit 1.0, the world's most widely used open-source quantum programming software, and showcased generative AI models designed to automate quantum code development and optimize quantum circuits.

Microsoft’s ‘Seeing AI App’ now on Android with 18 languages

Microsoft has launched the Seeing AI app on Android, offering new features and languages. The app, which narrates the world for blind and low-vision individuals, is now available in 18 languages, with plans to expand to 36 by 2024.

The Android version includes new generative AI features, such as richer descriptions of photos and the ability to chat with the app about documents. Seeing AI allows users to point their camera or take a photo to hear a description and offers various channels for specific information, such as text, documents, products, scenes, and more.

You can download Seeing AI for Android from the Play Store and for iOS from the App Store.

Microsoft Copilot celebrates the first year with significant new innovations

Celebrating the first year of Microsoft Copilot, Microsoft announced several new features that are beginning to roll out:

GPT-4 Turbo is coming soon to Copilot: It will be able to generate responses using GPT-4 Turbo, which lets it take in more “data” thanks to a 128K context window. This will allow Copilot to better understand queries and offer better responses.

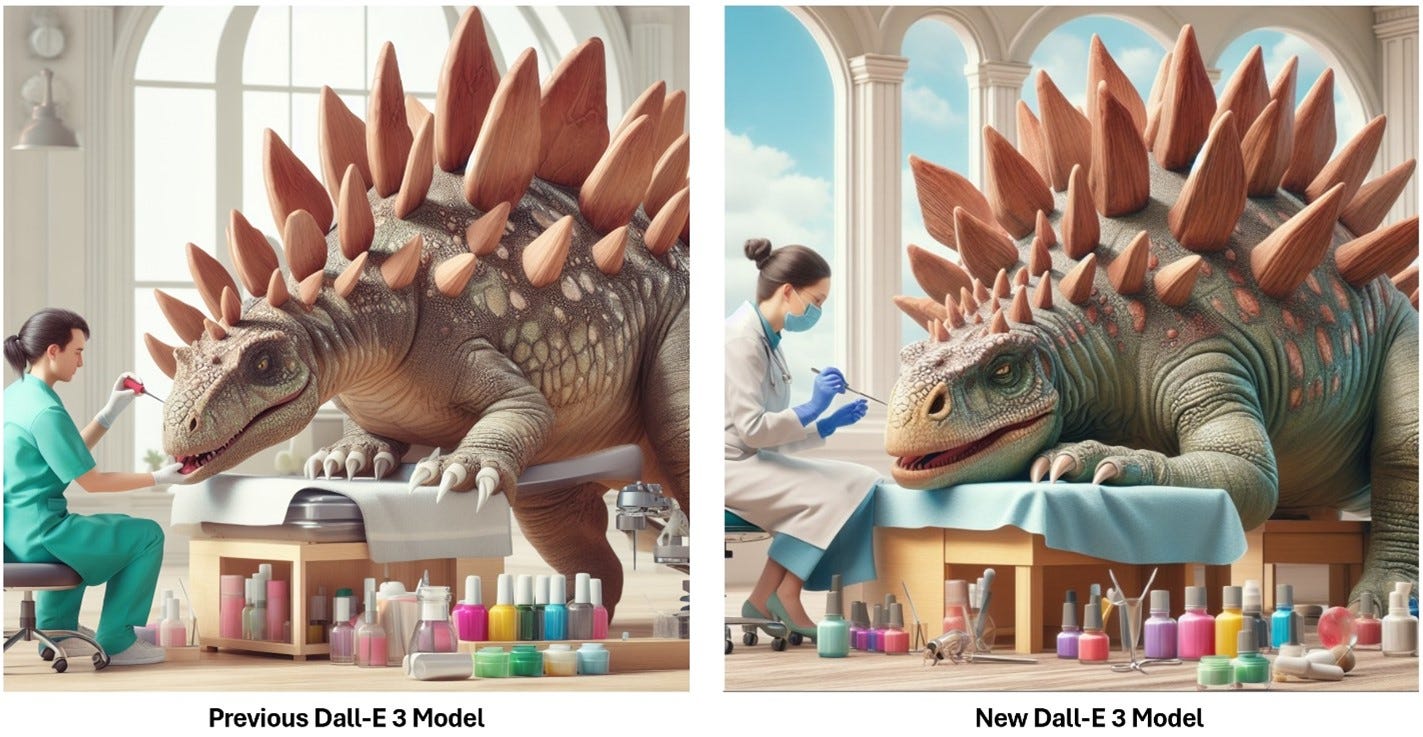

New DALL-E 3 Model: You can now use Copilot to create images that are even higher quality and more accurate to the prompt with an improved DALL-E 3 model.

Multi-Modal with Search Grounding: Copilot combines GPT-4 with vision, Bing image search, and web search data to deliver better image understanding for your queries. The results are pretty impressive.

Code Interpreter: A new capability that will enable you to perform complex tasks such as more accurate calculation, coding, data analysis, visualization, and math.

Video understanding and Q&A (Copilot in Edge): Summarize or ask questions about a video that you are watching in Edge.

Inline Compose with rewrite menu: With Copilot, Microsoft Edge users can easily write and rewrite text on most websites. Just select the text you want to change and ask Copilot to rewrite it for you.

Deep Search in Bing (more about it in the next section)

All features will be widely available soon.

Why does this matter?

Microsoft seems committed to bringing more innovation and advanced capabilities to Copilot. It is also capitalizing on its close partnership with OpenAI and making OpenAI’s advancements accessible with Copilot, paving the way for more inclusive and impactful AI utilization.

Bing’s new “Deep Search” finds deeper, relevant results for complex queries

Microsoft is introducing Deep Search in Bing to provide more relevant and comprehensive answers to the most complex search queries. It uses GPT-4 to expand a search query into a more comprehensive description of what an ideal set of results should include. This helps capture intent and expectations more accurately and clearly.

Bing then goes much deeper into the web, pulling back relevant results that often don't show up in typical search results. This takes more time than normal search, but Deep Search is not meant for every query or every user. It's designed for complex questions that require more than a simple answer.

Deep Search is an optional feature and not a replacement for Bing's existing web search, but an enhancement that offers the option for a deeper and richer exploration of the web.
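For the curious, here is a minimal Python sketch of the "expand the query, then rank against the expanded intent" idea described above. It is not Bing's implementation: the hard-coded `expand_query` table stands in for the GPT-4 expansion step, the word-overlap `score` is a toy ranking function, and the `Page` records and example URLs are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    text: str


def expand_query(query: str) -> list[str]:
    """Stand-in for the LLM step: return the query plus richer intent descriptions.

    Assumption: a real system would ask a language model for these; here they
    are hard-coded for a single illustrative query.
    """
    expansions = {
        "how do points systems work in japan": [
            "loyalty card programs in japan",
            "earning and redeeming points at japanese stores",
        ]
    }
    return [query] + expansions.get(query.lower(), [])


def score(page: Page, intents: list[str]) -> int:
    """Toy relevance score: count words shared between the page and each intent."""
    words = set(page.text.lower().split())
    return sum(len(words & set(intent.lower().split())) for intent in intents)


def deep_search(query: str, corpus: list[Page], top_k: int = 2) -> list[Page]:
    """Rank pages against the expanded intents rather than the raw query alone."""
    intents = expand_query(query)
    ranked = sorted(corpus, key=lambda page: score(page, intents), reverse=True)
    return ranked[:top_k]


# Hypothetical mini-corpus for illustration only.
corpus = [
    Page("a.example/loyalty",
         "guide to loyalty card programs and redeeming points at japanese stores"),
    Page("b.example/travel", "travel tips for visiting japan in spring"),
    Page("c.example/sports", "how points work in basketball scoring systems"),
]

results = deep_search("how do points systems work in japan", corpus)
for page in results:
    print(page.url)
```

In this toy corpus, ranking on the raw query alone would favor the basketball page because it shares the most literal words with the query. Once the expanded intents are included, the loyalty-program page moves to the top, which is the effect the expansion step is meant to have.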

DeepMind’s new way for AI to learn from humans in real-time

Google DeepMind has developed a new way for AI agents to learn from humans in a rich 3D physical simulation. This allows for robust real-time “cultural transmission” (a form of social learning) without needing large datasets.

The system uses deep reinforcement learning combined with memory, attention mechanisms, and automatic curriculum learning to achieve strong performance. Tests show that it can generalize across a wide task space, recall demos with high fidelity when the expert drops out, and closely match human trajectories with goals.

Google launches Gemini, its largest, most capable model yet

It looks like ChatGPT’s ultimate competitor is here. After much anticipation, Google has launched Gemini, its most capable and general model yet. Here’s everything you need to know:

Built from the ground up to be multimodal, it can generalize across, understand, operate on, and combine different types of information, including text, code, audio, image, and video.

Its first version, Gemini 1.0, comes in three sizes: Ultra for highly complex tasks, Pro for scaling across a wide range of tasks, and Nano as the most efficient model for on-device tasks.

Gemini Ultra’s performance exceeds current SoTA results on 30 of the 32 widely-used academic benchmarks used in LLM R&D.

With a score of 90.0%, Gemini Ultra is the first model to outperform human experts on MMLU.

It has next-gen capabilities, including sophisticated reasoning and advanced math and coding.

Gemini 1.0 is now rolling out across a range of Google products and platforms: Pro is in Bard (which should make Bard better and more usable), Nano is on Pixel, and Ultra will roll out early next year.

Meta’s new image AI and core AI experiences across its app family

Meta is rolling out a new, standalone generative AI experience on the web, Imagine with Meta, that creates images from natural language text prompts. It is powered by Meta’s Emu and creates 4 high-resolution images per prompt. It’s free to use (at least for now) for users in the U.S. Meta is also adding invisible watermarking to the images it generates.

Meta is also testing more than 20 new ways generative AI can improve your experiences across its family of apps, spanning search, social discovery, ads, business messaging, and more. For instance, it is adding new generative AI features to the messaging experience while also using the technology behind the scenes to power smart capabilities.

As another example, it is testing ways to easily create and share AI-generated images on Facebook.

Apple quietly releases a framework to build foundation models

Apple’s ML research team released MLX, a machine learning framework for building models that run efficiently on Apple Silicon, along with MLX Data, a companion data loading library. Both are available as open source through GitHub and PyPI.

MLX is intended to be easy for developers to use but has enough power to train AI models like Meta’s Llama and Stable Diffusion. A demo video shows a Llama v1 7B model implemented in MLX and running on an M2 Ultra.

Stability AI reveals StableLM Zephyr 3B, 60% smaller yet accurate

StableLM Zephyr 3B is a new addition to StableLM, a series of lightweight Large Language Models (LLMs). It is a 3 billion parameter model that is 60% smaller than 7B models, making it suitable for edge devices without high-end hardware. The model has been trained on various instruction datasets and optimized using the Direct Preference Optimization (DPO) algorithm.
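As a quick sanity check on the "60% smaller" figure, the arithmetic can be sketched in a few lines of Python. The parameter counts are the round numbers from the text. The memory estimate is our own assumption (weights only, 2 bytes per parameter as in 16-bit floats) and is not a figure from Stability AI.

```python
def relative_size_reduction(small_params: float, large_params: float) -> float:
    """Fraction by which the smaller model undercuts the larger one."""
    return 1.0 - small_params / large_params


def weight_memory_gb(params: float, bytes_per_param: int = 2) -> float:
    """Rough weights-only footprint in GB (assumption: 2 bytes per parameter)."""
    return params * bytes_per_param / 1e9


reduction = relative_size_reduction(3e9, 7e9)
print(f"Size reduction vs. a 7B model: {reduction:.1%}")
print(f"Approx. 16-bit weights: {weight_memory_gb(3e9):.0f} GB vs. {weight_memory_gb(7e9):.0f} GB")
```

The exact ratio is 4/7, or about 57%, so "60% smaller" is a rounded figure.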

The model generates contextually relevant, accurate text and reportedly surpasses larger models in similar use cases. StableLM Zephyr 3B can be used for a wide range of linguistic tasks, from Q&A-type tasks to content personalization, while maintaining its efficiency.

Meta launches Purple Llama for Safe AI development

Meta has announced the launch of Purple Llama, an umbrella project aimed at promoting the safe and responsible development of AI models. Purple Llama will provide tools and evaluations for cybersecurity and input/output safeguards. The project aims to address risks associated with generative AI models by taking a collaborative approach known as purple teaming, which combines offensive (red team) and defensive (blue team) strategies.

The cybersecurity tools will help reduce the frequency of insecure code suggestions and make it harder for AI models to generate malicious code. The input/output safeguards include an openly available foundational model called Llama Guard to filter potentially risky outputs.

This model has been trained on a mix of publicly available datasets to enable the detection of common types of potentially risky or violating content that may be relevant to a number of developer use cases. Meta is working with numerous partners to create an open ecosystem for responsible AI development.

Meta released an update to Codec Avatars with lifelike animated faces

Meta Research’s work presents Relightable Gaussian Codec Avatars, a method to create high-quality animated head avatars with realistic lighting and expressions. The avatars capture fine details like hair strands and pores using a 3D Gaussian geometry model. A novel relightable appearance model allows for real-time relighting with all-frequency reflections.

The avatars also have improved eye reflections and explicit gaze control. The method outperforms existing approaches without sacrificing real-time performance. The avatars can be rendered in real-time from any viewpoint in VR and support interactive point light control and relighting in natural illumination.

That's all for now!

Subscribe to The AI Edge and gain exclusive access to content enjoyed by professionals from Moody’s, Vonage, Voya, WEHI, Cox, INSEAD, and other esteemed organizations.

Thanks for reading, and see you on Monday. 😊