Hello, Engineering Leaders and AI Enthusiasts,

Another eventful week in the AI realm. Lots of big news from huge enterprises.

In today’s edition:

✅ OpenCopilot: AI sidekick for everyone

✅ Google teaches LLMs to personalize

✅ AI creates lifelike 3D experiences from your phone video

✅ YouTube and Universal Music Partner to Launch ‘AI Incubator’

✅ Snapchat’s Gen AI ‘Dreams’

✅ 40% of workers need to reskill, says IBM

✅ Meta’s SeamlessM4T: The first all-in-one, multilingual multimodal AI

✅ Hugging Face’s IDEFICS is like a multimodal ChatGPT

✅ OpenAI enables fine-tuning for GPT-3.5 Turbo

✅ Stability collabs with NVIDIA to 2x the AI Image gen speed

✅ Figma launched an AI assistant powered by ChatGPT

✅ CoDeF ensures smooth AI-powered video edits

✅ Meta’s coding version of Llama-2

✅ Google, Amazon, Nvidia, and others pour $235M into Hugging Face

✅ Hugging Face’s SafeCoder lets businesses own their own Code LLMs

Let’s go!

OpenCopilot: AI sidekick for everyone

OpenCopilot lets you build an AI copilot for your own product. Setup takes a few simple steps and less than 5 minutes.

It integrates with your underlying APIs and can execute API calls whenever needed. It uses LLMs to determine if the user's request requires calling an API endpoint. Then, it decides which endpoint to call and passes the appropriate payload based on the given API definition.
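The decide-then-call flow described above can be sketched in a few lines. This is a toy illustration, not OpenCopilot's code: the `Endpoint` class, the sample `API` list, and `toy_planner` are all hypothetical, and a simple word-overlap score stands in for the LLM that would make the decision in the real product.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Endpoint:
    method: str
    path: str
    description: str
    params: list[str]


# Hypothetical API definition for an imaginary shop backend.
API = [
    Endpoint("GET", "/orders", "list recent orders", []),
    Endpoint("POST", "/refunds", "refund an order", ["order_id"]),
]


def toy_planner(request: str, endpoints: list[Endpoint]) -> Optional[dict]:
    """Stand-in for the LLM: decide whether an API call is needed and which one.

    Scores each endpoint by the words its description shares with the request.
    Returns None when nothing matches, meaning "no API call needed".
    """
    words = set(re.findall(r"[a-z]+", request.lower()))
    best, best_score = None, 0
    for ep in endpoints:
        score = len(words & set(ep.description.split()))
        if score > best_score:
            best, best_score = ep, score
    if best is None:
        return None
    # Naive payload filling: map numbers in the request onto the declared params.
    numbers = re.findall(r"\d+", request)
    payload = dict(zip(best.params, numbers))
    return {"method": best.method, "path": best.path, "payload": payload}


plan = toy_planner("Please refund order 1234", API)
```

Here `plan` describes a `POST /refunds` call with `order_id` filled in from the request, while a request such as "hello there" yields `None` and would be answered conversationally instead.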

Google teaches LLMs to personalize

LLMs are already good at synthesizing text, but personalized text generation can unlock even more. New Google research proposes an approach to personalized text generation with LLMs, inspired by how writing is taught. It uses a multistage, multitask framework with five stages: retrieval, ranking, summarization, synthesis, and generation.

The researchers also introduce a multitask setting that further improves the model's generation ability, inspired by the observation that a student's reading proficiency and writing ability are often correlated. When evaluated on three public datasets, each covering a different representative domain, the approach showed significant improvements over various baselines.
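To make the five stages concrete, here is a rough sketch of how such a pipeline could be wired together. It is not the method from the Google paper: every function below is a hypothetical, word-overlap stand-in for what would be a learned component, and the final generation step is only represented by the prompt that would be handed to an LLM.

```python
import re
from collections import Counter


def tokens(text):
    return re.findall(r"[a-z']+", text.lower())


def retrieve(context, past_docs):
    """Retrieval: keep the author's past documents that share a word with the context."""
    ctx = set(tokens(context))
    return [d for d in past_docs if ctx & set(tokens(d))]


def rank(context, docs):
    """Ranking: order retrieved documents by word overlap with the context."""
    ctx = set(tokens(context))
    return sorted(docs, key=lambda d: len(ctx & set(tokens(d))), reverse=True)


def summarize(docs, max_docs=2):
    """Summarization: keep only the first sentence of the top-ranked documents."""
    return [d.split(".")[0].strip() + "." for d in docs[:max_docs]]


def synthesize(docs, top_n=3):
    """Synthesis: pull out the author's most frequent longer words as key terms."""
    counts = Counter(w for d in docs for w in tokens(d) if len(w) > 4)
    return [w for w, _ in counts.most_common(top_n)]


def build_prompt(context, past_docs):
    """Generation input: the prompt an LLM would receive in the final stage."""
    ranked = rank(context, retrieve(context, past_docs))
    return (
        f"Task: {context}\n"
        f"Author summary: {' '.join(summarize(ranked))}\n"
        f"Author key terms: {', '.join(synthesize(ranked))}\n"
    )


past = ["I love cooking pasta. It is fun.", "My hiking boots lasted years. Great boots."]
prompt = build_prompt("hiking boots review", past)
```

The point of the staging is that each step narrows the author's history before the model sees it, so the generation prompt carries only the most relevant personal context.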

AI creates lifelike 3D experiences from your phone video

Luma AI has introduced Flythroughs, an app that allows one-touch generation of photorealistic, cinematic 3D videos that look like professional drone captures. Record like you're showing the place to a friend and hit Generate, all on your iPhone. No need for drones, lidar, expensive real estate cameras, or a crew.

Flythroughs is built on Luma's breakthrough NeRF and 3D generative AI and a brand new path generation model that automatically creates smooth dramatic camera moves.

YouTube and Universal Music Partner to Launch ‘AI Incubator’

YouTube is partnering with Universal Music to launch an incubator focused on exploring the use of AI in music. The incubator will work with artists and musicians, including Anitta, ABBA's Björn Ulvaeus, and Max Richter, to gather insights on generative AI experiments and research. YouTube CEO Neal Mohan stated that the incubator will inform the company's approach as it collaborates with innovative artists, songwriters, and producers.

YouTube also plans to invest in AI-powered technology, including enhancing its copyright management tool, Content ID, to protect viewers and creators.

Snapchat’s Gen AI ‘Dreams’

Snapchat is set to introduce a new AI tool, “Dreams,” aimed at stepping up its creative AI capabilities. The feature will allow users to take or upload selfies and have the app generate new pictures of them in imaginative backgrounds. Like other AI photo apps, Snapchat will require clear selfies with no obstructions or other people for better results.

The company is also developing a feature called “Dreams with Friends,” where users can permit their friends to generate AI images with both of them included. References to purchasing Dream Packs suggest that this feature may be monetizable.

40% of workers need to reskill, says IBM

According to a new IBM study, 40% of workers will need to reskill in the next three years due to the implementation of artificial intelligence. The study found that while AI will undoubtedly bring changes to the workforce and businesses, it is not necessarily for the worse.

In fact, 87% of executives surveyed expect AI to augment roles rather than replace them. The study also highlights the importance of reskilling, with tech adopters who successfully adapt to technology-driven job changes experiencing an average 15% revenue growth rate premium. The report emphasizes that AI won't replace people, but people who use AI will replace those who don't.

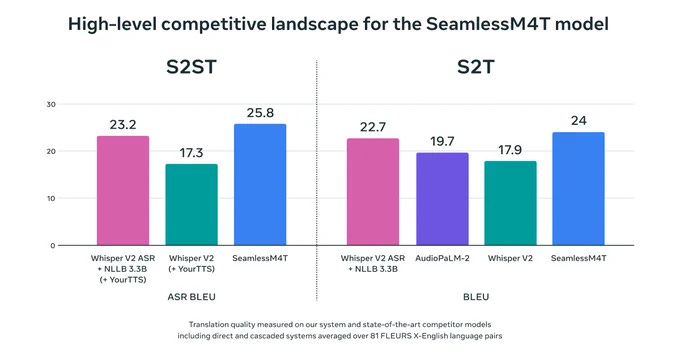

Meta’s SeamlessM4T: The first all-in-one, multilingual multimodal AI

Meta has introduced SeamlessM4T, the first all-in-one multilingual multimodal AI translation and transcription model. This single model can perform speech-to-text, speech-to-speech, text-to-speech, text-to-text translations, and more for up to 100 languages without relying on multiple separate models.

Compared to cascaded approaches, SeamlessM4T's single-system approach reduces errors and delays, which improves translation efficiency and quality and delivers state-of-the-art results.

Meta is also releasing its training dataset called SeamlessAlign and sharing the model publicly to allow researchers and developers to build on this technology.

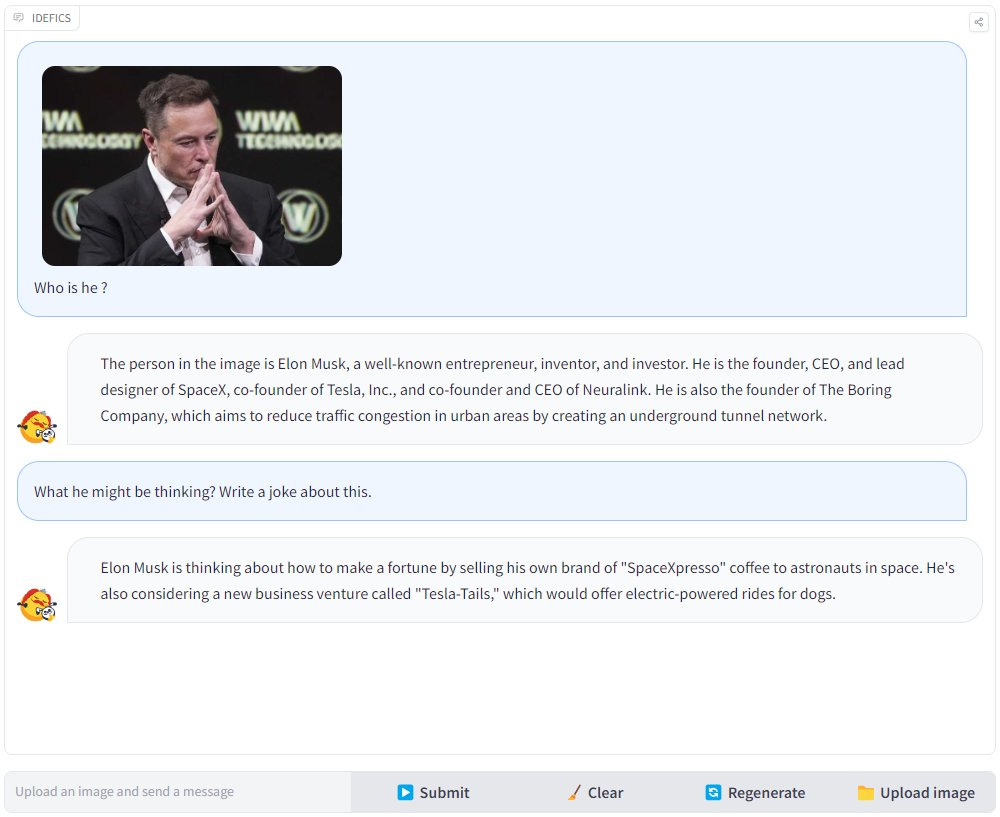

Hugging Face’s IDEFICS is like a multimodal ChatGPT

Imagine a model with a ChatGPT-like understanding of natural language; now add best-in-class image capabilities. Hugging Face has released IDEFICS (Image-aware Decoder Enhanced à la Flamingo with Interleaved Cross-attentionS), an open-access visual language model (VLM). It is based on Flamingo, a SoTA VLM initially developed by DeepMind, which has not been released publicly.

Similarly to GPT-4, the model accepts arbitrary sequences of image and text inputs and produces text outputs. IDEFICS is built solely on publicly available data and models (LLaMA v1 and OpenCLIP) and comes in two variants—the base version and the instructed version. Each variant is available at the 9 billion and 80 billion parameter sizes. We found a demo on X (🤭):

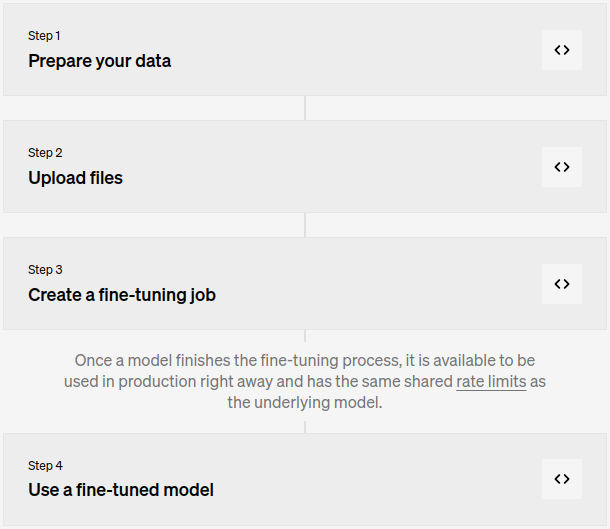

OpenAI enables fine-tuning for GPT-3.5 Turbo

OpenAI has launched fine-tuning for GPT-3.5 Turbo. Fine-tuning lets you train the model on your company's data and run it at scale. Early tests have shown a fine-tuned version of GPT-3.5 Turbo can match, or even outperform, base GPT-4-level capabilities on certain narrow tasks. Here are the fine-tuning steps:

OpenAI also said that none of the fine-tuning data, input or output, will be used to train models outside of the client company, and that fine-tuning support for GPT-4 will arrive later this fall.
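For a feel of what the data-preparation step involves, here is a minimal sketch that turns question-and-answer pairs into the chat-style JSONL used for GPT-3.5 Turbo fine-tuning (one JSON object per line, each with a `messages` list of system, user, and assistant turns). The example pairs, the `SYSTEM` prompt, and the helper names are made up for illustration. Uploading the file and starting the job happen through OpenAI's API and are not shown here.

```python
import json

# Hypothetical support Q&A pairs standing in for a company's own data.
examples = [
    ("What is your refund window?", "Refunds are available within 30 days of purchase."),
    ("Do you ship internationally?", "Yes, we ship to most countries worldwide."),
]

# Hypothetical system prompt shared by every training example.
SYSTEM = "You are a concise support assistant."


def to_chat_record(question, answer):
    """Wrap one Q&A pair as a single chat-format training example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs):
    """Serialize examples as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(to_chat_record(q, a)) for q, a in pairs)


jsonl_text = to_jsonl(examples)
```

Writing `jsonl_text` to a `.jsonl` file gives you the training file to upload. In practice you would want far more than two examples.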

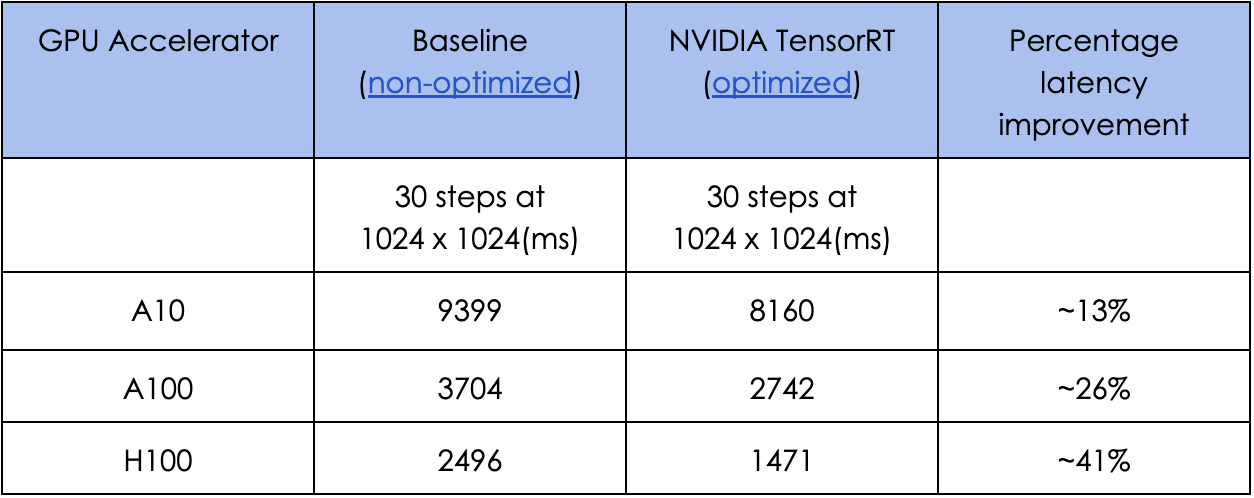

Stability collabs with NVIDIA to 2x the AI Image gen speed

Stability AI has partnered with NVIDIA to enhance the speed of their text-to-image generative AI product, Stable Diffusion XL. By integrating NVIDIA TensorRT, a performance optimization framework, they have significantly improved the speed and efficiency of SDXL.

The integration has doubled performance on NVIDIA H100 chips, generating HD images in just 1.47 seconds. The TensorRT-optimized model also delivers lower latency and higher throughput than the non-optimized model on A10, A100, and H100 GPU accelerators. This collaboration aims to increase both the speed and accessibility of SDXL.

Latency performance comparison:

Figma launched an AI assistant powered by ChatGPT

Figma launched Jambot, an AI assistant integrated into its whiteboard software, FigJam. Jambot helps with brainstorming, creating mind maps, providing quick answers, and content rewriting, powered by ChatGPT.

Users can boost productivity by using Jambot to initiate first drafts. Figma has been changing its design suite, including introducing Custom Color Palettes in FigJam and enhancing DevMode. The company plans to incorporate AI features into its platform, with recent acquisitions of Diagram and Clover Notes.

CoDeF ensures smooth AI-powered video edits

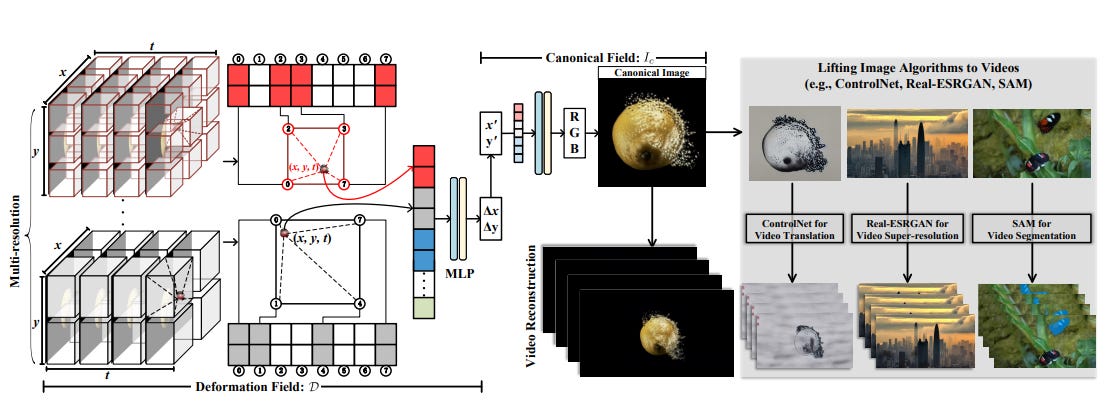

Researchers have recently introduced CoDeF, a new video representation that consists of a canonical content field and a temporal deformation field. These fields are optimized to reconstruct the target video and support lifting image algorithms for video processing.

CoDeF achieves superior cross-frame consistency and can track non-rigid objects. It can lift image-to-image translation to video-to-video translation and keypoint detection to keypoint tracking without training. Overall, CoDeF provides a novel approach to video processing that improves temporal consistency and enables various video editing tasks.
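The idea of "lifting" an image algorithm to video can be shown with a deliberately tiny toy. This is an assumption-heavy sketch, not CoDeF itself: the real method learns both fields from the video, whereas here the canonical content is a hand-written 1D strip of pixels and the deformation is a hypothetical per-frame integer shift.

```python
# Canonical content: one shared "image" (a 1D strip of pixel values).
canonical = [10, 20, 30, 40, 50, 60]

# Toy deformation field: frame t reads canonical[x + shifts[t]] for each pixel x.
shifts = [0, 1, 2]
WIDTH = 4  # pixels per rendered frame


def render(canonical_strip, frame_shifts, width):
    """Reconstruct every frame by looking pixels up in the canonical strip."""
    return [[canonical_strip[x + s] for x in range(width)] for s in frame_shifts]


def invert(pixel):
    """Any single-image algorithm; here a simple color inversion."""
    return 255 - pixel


video = render(canonical, shifts, WIDTH)

# Lift the image edit to video: edit the canonical strip once, then re-render.
edited_canonical = [invert(p) for p in canonical]
edited_video = render(edited_canonical, shifts, WIDTH)
```

Because every frame is rendered from the same edited canonical content, the edit stays consistent across frames by construction, which is the property the paper highlights.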

Meta’s coding version of Llama-2

Meta has released Code Llama, a state-of-the-art LLM capable of generating code and natural language about code from both code and natural language prompts. It is free for research and commercial use. It can also be used for code completion and debugging and supports many of the most popular languages today, including Python, C++, Java, PHP, TypeScript (JavaScript), C#, and Bash.

Code Llama is built on top of Llama 2 and is available in three models:

Code Llama, the foundational code model;

Code Llama - Python, specialized for Python;

and Code Llama - Instruct, fine-tuned for understanding natural language instructions.

It is being released in three sizes, with 7B, 13B, and 34B parameters. Meta’s benchmark testing showed that Code Llama performed better than open-source, code-specific LLMs and outperformed Llama 2. However, it seems there was no 70B variant released.

Google, Amazon, Nvidia, and others pour $235M into Hugging Face

The open-source AI model repository got massive investments from Google, Amazon, Nvidia, and Salesforce as demand for AI model access grows. Other investors included AMD, Intel, Qualcomm, and IBM, all of which have also invested significantly in generative AI foundation models or the processors that run them.

This latest financing brings Hugging Face’s valuation to $4.5 billion. The funds will be used to grow the team, currently at 170, and invest in more open-source AI and platform building.

Hugging Face’s SafeCoder lets businesses own their own Code LLMs

Hugging Face has launched SafeCoder, a code assistant solution built for the enterprise. It is a complete commercial solution, including service, software, and support.

For enterprises, tuning Code LLMs on the company code base to create proprietary Code LLMs improves the reliability and relevance of completions, which brings another level of productivity boost. However, relying on closed-source Code LLMs to create internal code assistants exposes companies to compliance and security issues.

With SafeCoder, Hugging Face will help customers build their own Code LLMs, fine-tuned on their proprietary codebase, using state-of-the-art open models and libraries, without sharing their code with Hugging Face or any other third party.

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’ specifically designed for Engineering Leaders and AI enthusiasts.

Thanks for reading, and see you on Monday! 😊