Advancing AI Chips: Microsoft & NVIDIA

Microsoft's custom AI chips and other Ignite 2023 updates; Nvidia unveils the H200 chip.

Hello Engineering Leaders and AI Enthusiasts!

Welcome to the 148th edition of The AI Edge newsletter. This edition brings you all the updates from Microsoft Ignite 2023 with a big focus on AI.

And a huge shoutout to our incredible readers. We appreciate you😊

In today’s edition:

🚀 Microsoft’s Ignite 2023: Custom AI chips and 100 updates

🔥Nvidia unveils H200, its newest high-end AI chip

📚 Knowledge Nugget: AI Gravity (or LLM Gravity) by

Let’s go!

Microsoft’s Ignite 2023: Custom AI chips and 100 updates

Microsoft will make about 100 news announcements at Ignite 2023 that touch on multiple layers of an AI-forward strategy, from adoption to productivity to security. Here are some key announcements:

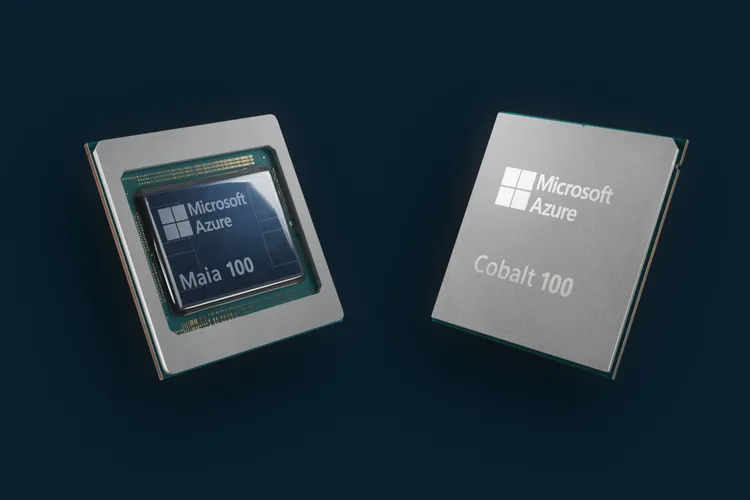

Two new Microsoft-designed chips: The Azure Maia 100 and Cobalt 100 are the first two custom silicon chips Microsoft has designed for its cloud infrastructure. Both will power its Azure data centers and prepare the company and its enterprise customers for a future full of AI. They are expected to arrive in 2024.

Extending the Microsoft Copilot experience with Copilot-related announcements and updates

100+ feature updates in Microsoft Fabric to reinforce the data and AI connection

Expanded choice and flexibility in generative AI models to offer developers the most comprehensive selection

Expanding the Copilot Copyright Commitment (CCC) to customers using Azure OpenAI Service

New experiences in Windows that empower employees, IT, and developers, unlock new ways of working, and make AI more accessible across any device

A host of new AI and productivity tools for developers, including Windows AI Studio

An NVIDIA AI foundry service running on Azure

New technologies across Microsoft’s suite of security solutions and expansion of Security Copilot

(You can get more on all the announcements’ details here)

Why does this matter?

Microsoft is rethinking its cloud infrastructure for the era of AI and optimizing every layer of that infrastructure. Its own chips could help lower the cost of AI for its customers and OpenAI. Depending on how fast Microsoft puts these chips into action, they could also help offset the demand for NVIDIA's AI chips and speed up the rollout of its new AI experiences.

(Source)

Nvidia unveils H200, its newest high-end AI chip

Nvidia on Monday unveiled the H200, a GPU designed for training and deploying the kinds of AI models that are powering the generative AI boom.

The new GPU is an upgrade from the H100, the chip OpenAI used to train GPT-4. Its key improvement is 141GB of next-generation HBM3e memory, which will help the chip perform “inference,” that is, using a large model after it’s trained to generate text, images, or predictions.

Nvidia said the H200 will generate output nearly twice as fast as the H100. That’s based on a test using Meta’s Llama 2 LLM.

Why does this matter?

While customers are still scrambling for its H100 chips, Nvidia has launched an upgrade. The launch may also be an attempt to steal the thunder of AMD, its biggest competitor. The main upgrade is increased memory capacity, which helps the chip generate results nearly 2x faster.

AMD’s chips are expected to eat into Nvidia’s dominant market share with 192 GB of memory, versus the 80 GB in Nvidia’s H100. With 141 GB of memory in the H200, Nvidia is now closing that gap.
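For a rough sense of why memory capacity matters for inference, here is a back-of-the-envelope sketch (not Nvidia’s or AMD’s methodology) that checks whether a model’s weights alone fit on a single GPU. It assumes 2 bytes per parameter at FP16 and a round 70 billion parameters for the largest Llama 2 model, and it ignores the KV cache, activations, and runtime overhead, all of which add to real memory needs. The helper names are hypothetical.

```python
# Back-of-the-envelope, weights-only estimate (assumption: ignores KV cache,
# activations, and framework overhead, which all add to real memory use).
BYTES_PER_PARAM = {"fp16": 2, "int8": 1}


def weights_gb(num_params: float, dtype: str = "fp16") -> float:
    """Approximate size of a model's weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_one_gpu(num_params: float, gpu_memory_gb: float, dtype: str = "fp16") -> bool:
    """True if the weights alone fit within one GPU's memory."""
    return weights_gb(num_params, dtype) <= gpu_memory_gb


# Memory capacities quoted in the text above, in GB.
GPUS = {"H100": 80, "H200": 141, "AMD (192 GB)": 192}

# Assumption: a round 70 billion parameters for the largest Llama 2 model.
LLAMA2_70B_PARAMS = 70e9

for name, memory_gb in GPUS.items():
    print(name, fits_on_one_gpu(LLAMA2_70B_PARAMS, memory_gb))
```

Under these assumptions, roughly 140 GB of FP16 weights exceed a single H100’s 80 GB but just fit within the H200’s 141 GB, leaving almost no headroom for the cache that inference also needs.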

Enjoying the daily updates?

Refer your pals to subscribe to our daily newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you'll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: AI Gravity (or LLM Gravity)

How to use superior AI to acquire market share from competitors?

Data gravity: the tendency of enterprises to aggregate their apps and workloads around their data ecosystem, which gives data vendors a lever to cross-sell and up-sell related workloads such as data catalogs, analytics, and AI.

Similarly, LLM gravity and its big brother AI gravity are the recent AI adoption “vectors” shaping how vendors compete in the generative AI market and how enterprise customers adopt AI.

Giving this fresh perspective,

answers the following questions in his article:

How AI gravity and LLM gravity are playing out in enterprise AI adoption

How enterprise customers feel about AI gravity, and what they are doing about it

How AI (LLM) gravity compares to data gravity, and how they might interact

By the end of the post, you will be able to:

Use AI gravity as a mental model to predict cloud providers’ AI roadmaps.

Draw on some ideas for enterprise customers to hedge against AI gravity.

Why does this matter?

Understanding AI gravity and its impact helps make informed business decisions regarding technology investments and market strategies in the AI space. It can also help identify potential market opportunities and innovation areas in AI.

What Else Is Happening❗

🏷️YouTube to roll out labels for ‘realistic’ AI-generated content.

YouTube will now require creators to add labels when they upload content that includes “manipulated or synthetic content that is realistic, including using AI tools.” Creators who fail to comply with the new requirements will be held accountable. The policy is meant to help protect viewers from misleading content. (Link)

💻Dell and Hugging Face partner to simplify LLM deployment.

The two companies will create a new Dell portal on the Hugging Face platform. This will include custom, dedicated containers, scripts, and technical documents for deploying open-source models on Hugging Face with Dell servers and data storage systems. (Link)

🤖Google DeepMind announces its most advanced music generation model.

In partnership with YouTube, Google DeepMind is announcing Lyria, its most advanced AI music generation model to date, along with two AI experiments designed to open a new playground for creativity: Dream Track and Music AI tools. (Link)

🤝Spotify to use Google's AI to tailor podcasts, audiobooks recommendations.

Spotify expanded its partnership with Google Cloud to use LLMs to help identify a user's listening patterns across podcasts and audiobooks in order to suggest tailor-made recommendations. It is also exploring the use of LLMs to provide a safer listening experience and identify potentially harmful content. (Link)

🩺In the world’s first AI doctor’s office, Forward CarePods blend AI with medical expertise.

CarePods are AI-powered and self-serve. As soon as you step in, a CarePod becomes your personalized gateway to a broad range of Health Apps, designed to treat the issues of today and prevent the issues of tomorrow. CarePods are being deployed in malls, gyms, and offices, with plans to more than double their footprint in 2024. (Link)

That's all for now!

If you are new to The AI Edge newsletter, subscribe to get daily AI updates and news directly sent to your inbox for free!

Thanks for reading, and see you tomorrow. 😊