AI Weekly Rundown (September 16 to September 22)

New AI features all around from OpenAI, Microsoft, and Google.

Hello, Engineering Leaders and AI Enthusiasts,

Another eventful week in the AI realm. Lots of big news from huge enterprises.

In today’s edition:

🌍 Google’s AI for hyper-personalized Maps

🧠 The Rise and Potential of LLM-Based Agents: A survey

🤖 AI makes it easy to personalize 3D-printable models

🔥 Google Bard’s best version yet

🤖 Intel’s ‘AI PC’ can run generative AI chatbots directly on laptops

🧬 DeepMind’s new AI can predict genetic diseases

🔥 OpenAI unveils DALL·E 3

🤖 Amazon brings Generative AI to Alexa and Fire TV

🧠 Google DeepMind says language modeling is compression

🤖 Microsoft’s Copilot puts AI into everything

📹 YouTube announces 3 new AI features for creators

🔬 Google’s innovative approach to train smaller language models

Let’s go

Google’s AI for hyper-personalized Maps

Google and DeepMind have built an AI algorithm to make route suggestions in Google Maps more personalized. It includes 360 million parameters and uses real driving data from Maps users to analyze what factors they consider when making route decisions. The AI calculations include information such as travel time, tolls, road conditions, and personal preferences.

The approach uses Inverse Reinforcement Learning (IRL), which learns from user behavior, and Receding Horizon Inverse Planning (RHIP), which uses different AI techniques for short- and long-distance travel. Tests show that RHIP improves the accuracy of suggested routes for two-wheelers by 16 to 24 percent and should get better at predicting which route they prefer over time.
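The idea of learning route preferences from observed behavior can be illustrated with a toy example. This is a minimal sketch, not Google's RHIP algorithm: it assumes a linear cost over three hypothetical features and a simple perceptron-style update, and every name and number in it is made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical feature names; the real model's inputs are not detailed here.
FEATURES = ("travel_minutes", "toll_cost", "rough_road_km")


@dataclass(frozen=True)
class Route:
    name: str
    travel_minutes: float
    toll_cost: float
    rough_road_km: float


def route_cost(route, weights):
    """Weighted sum of route features; a lower cost means a more preferred route."""
    return sum(weights[f] * getattr(route, f) for f in FEATURES)


def best_route(routes, weights):
    """Pick the route with the lowest cost under the current weights."""
    return min(routes, key=lambda r: route_cost(r, weights))


def update_weights(weights, chosen, predicted, lr=0.1):
    """Nudge weights so the route the user actually drove becomes cheaper
    relative to the route the model predicted (weights are kept non-negative)."""
    return {
        f: max(0.0, weights[f] + lr * (getattr(predicted, f) - getattr(chosen, f)))
        for f in FEATURES
    }


# Illustrative data: the user takes the slower back road to avoid tolls.
highway = Route("highway", travel_minutes=20, toll_cost=6, rough_road_km=0)
backroad = Route("backroad", travel_minutes=24, toll_cost=0, rough_road_km=1)

weights = {"travel_minutes": 1.0, "toll_cost": 0.5, "rough_road_km": 1.0}
predicted = best_route([highway, backroad], weights)
learned = update_weights(weights, chosen=backroad, predicted=predicted)
```

With the initial weights the sketch suggests the highway. After one update from the observed choice, the toll weight rises and `best_route([highway, backroad], learned)` returns the back road.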

The Rise and Potential of LLM-Based Agents: A survey

Probably the most comprehensive overview of LLM-based agents, this survey paper covers everything from how to construct AI agents to how to harness them for good. It starts by tracing the concept of agents from its philosophical origins to its development in AI and explains why LLMs are suitable foundations for AI agents. It also:

Presents a conceptual framework for LLM-based agents that can be tailored to suit different applications

Explores the extensive applications of LLM-based agents in three aspects: single-agent scenarios, multi-agent scenarios, and human-agent cooperation

Delves into agent societies, exploring the behavior and personality of LLM-based agents, the social phenomena that emerge when they form societies, and the insights they offer for human society

Discusses a range of key topics and open problems within the field

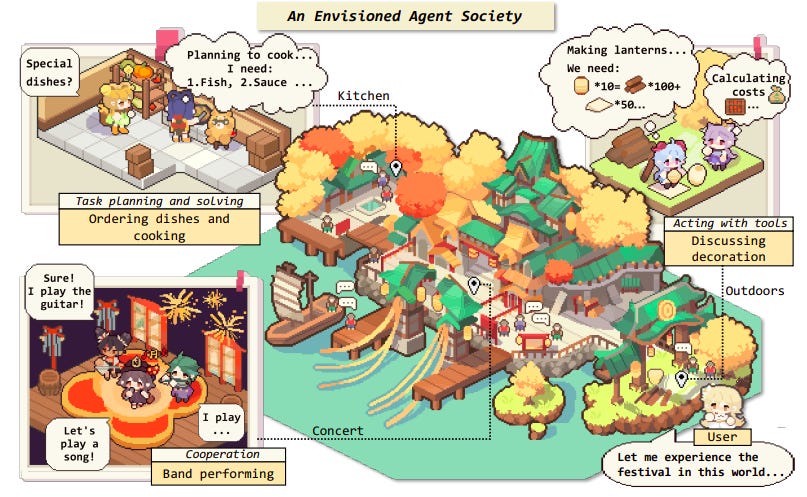

Here’s a scenario of an envisioned society composed of AI agents in which humans can also participate.

AI makes it easy to personalize 3D-printable models

MIT researchers have developed a generative AI-driven tool that enables the user to add custom design elements to 3D models without compromising the functionality of the fabricated objects. A designer could use this tool, called Style2Fab, to personalize 3D models of objects using only natural language prompts to describe their desired design. The user could then fabricate the objects with a 3D printer.

Google Bard’s best version yet

Google is rolling out Bard’s most capable model yet. Here are the new features:

Bard Extensions in English: With Extensions, Bard can find and show you relevant information from the Google tools you use every day (like Gmail, Docs, Drive, Google Maps, YouTube, and Google Flights and hotels), even when the information you need is across multiple apps and services.

Bard’s “Google it”: You can now double-check its answers more easily. When you click on the “G” icon, Bard will read the response and evaluate whether there is content across the web to substantiate it.

Shared conversations: When someone shares a Bard chat with you through a public link, you can continue the conversation, ask additional questions, or use it as a starting point for new ideas.

Expanded access to existing English language features: Features such as uploading images with Lens, getting Search images in responses, and modifying Bard’s responses are expanding to 40+ languages.

These features were possible because of new updates made to the PaLM 2 model.

Intel’s ‘AI PC’ can run generative AI chatbots directly on laptops

Intel’s new chip, due in December, will be able to run a generative AI chatbot on a laptop rather than having to tap into cloud data centers for computing power. This is made possible by new AI data-crunching features built into Intel's forthcoming "Meteor Lake" laptop chip and by new software tools the company is releasing.

Intel also demonstrated laptops that could generate a song in the style of Taylor Swift and answer questions in a conversational style, all while disconnected from the Internet. Moreover, Microsoft's Copilot AI assistant will be able to run on Intel-based PCs.

DeepMind’s new AI can predict genetic diseases

Google DeepMind’s new system, called AlphaMissense, can tell if the letters in the DNA will produce a correctly shaped protein. If not, the change is listed as potentially disease-causing.

Currently, genetic disease hunters have fairly limited knowledge of which areas of human DNA can lead to disease and have to search across billions of chemical building blocks that make up DNA. They have classified 0.1% of letter changes, or mutations, as either benign or disease-causing. DeepMind's new model pushed that percentage up to 89%.

OpenAI unveils DALL·E 3

OpenAI has unveiled its new text-to-image model, DALL·E 3, which can translate nuanced requests into extremely detailed and accurate images. Here’s all you need to know:

DALL·E 3 is built natively on ChatGPT, which lets you use ChatGPT to generate tailored, detailed prompts for DALL·E 3. If it’s not quite right, you can ask ChatGPT to make tweaks.

Even with the same prompt, DALL·E 3 delivers significant improvements over DALL·E 2, as shown below (Left: DALL·E 2 results, Right: DALL·E 3). The prompt: “An expressive oil painting of a basketball player dunking, depicted as an explosion of a nebula.”

OpenAI has taken steps to limit DALL·E 3’s ability to generate violent, adult, or hateful content.

DALL·E 3 is designed to decline requests that ask for an image in the style of a living artist. Creators can also opt their images out from training of OpenAI’s future image generation models.

DALL·E 3 is now in research preview. It will be available to ChatGPT Plus and Enterprise customers in October, and via the API and in Labs later this fall.

Amazon brings Generative AI to Alexa and Fire TV

At its annual devices event, Amazon announced a few AI updates:

It will soon use a new generative AI model to power improved experiences across its Echo family of devices. The new model is specifically optimized for voice and will take into account body language as well as a person’s eye contact and gestures for more powerful conversational experiences.

It also introduced generative AI updates for its Fire TV voice search, which promises to bring more conversational ways to interact with Alexa and discover new content based on specifics.

Google DeepMind says language modeling is compression

In recent years, the ML community has focused on training increasingly large and powerful self-supervised (language) models. Since these LLMs exhibit impressive predictive capabilities, they are well-positioned to be strong compressors.

This research by Google DeepMind and Meta evaluates the compression capabilities of LLMs. It investigates how and why compression and prediction are equivalent. It shows that foundation models, trained primarily on text, are general-purpose compressors due to their in-context learning abilities. For example, Chinchilla 70B achieves compression rates of 43.4% on ImageNet patches and 16.4% on LibriSpeech samples, beating domain-specific compressors like PNG (58.5%) and FLAC (30.3%), respectively. The rate here is compressed size as a share of raw size, so lower is better.
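To make the prediction-compression link concrete, here is a minimal sketch. It relies on a standard fact: an arithmetic coder driven by a predictive model needs roughly -log2 p bits for a symbol the model assigned probability p. The two toy byte-level models and all function names below are illustrative assumptions and have nothing to do with Chinchilla itself.

```python
import math
from collections import Counter


def ideal_code_length_bits(data: bytes, predict) -> float:
    """Sum of -log2 p(symbol | previous bytes): the size an ideal
    arithmetic coder would approach when driven by this model."""
    total = 0.0
    for i, symbol in enumerate(data):
        total += -math.log2(predict(data[:i], symbol))
    return total


def uniform_model(context: bytes, symbol: int) -> float:
    """Knows nothing: every byte value is equally likely."""
    return 1 / 256


def adaptive_model(context: bytes, symbol: int) -> float:
    """Learns in context: byte frequencies seen so far, with add-one smoothing."""
    counts = Counter(context)
    return (counts[symbol] + 1) / (len(context) + 256)


def compression_rate(data: bytes, predict) -> float:
    """Compressed size divided by raw size (lower is better)."""
    return ideal_code_length_bits(data, predict) / (8 * len(data))


sample = b"ab" * 200
uniform_rate = compression_rate(sample, uniform_model)
adaptive_rate = compression_rate(sample, adaptive_model)
```

The uniform model cannot compress at all, while the model that predicts better from context needs fewer bits for the same data. That is the sense in which a stronger predictor is a stronger compressor.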

Microsoft’s Copilot puts AI into everything

Microsoft has announced a new AI-powered feature, Microsoft Copilot. It’ll bring AI features into Windows 11, Microsoft 365, Edge, and Bing. Our first impression is that it’s Bing, but for Windows. You can use Copilot to rearrange windows, generate text, open apps on the web, edit pictures, and more.

Copilot can be accessed via an app or with a simple right-click and will be rolled out across Bing, Edge, and Microsoft 365 this fall, with the free Windows 11 update starting on September 26th.

YouTube announces 3 new AI features for creators

At a recent event, YouTube announced 3 AI-powered features for YouTube Shorts creators.

Dream Screen: It allows users to create image or video backgrounds using AI. All you need to do is type what you want to see in the background and AI will create it for you.

Creator Music: This was a previously available feature but got an AI revamp this time around. Creators can simply type in the kind and length of the music they need and AI will find the most relevant suggestions for their needs.

AI Insights for Creators: This is an inspiration tool that generates video ideas based on AI’s analysis of what audiences already watch and prefer.

Google’s innovative approach to train smaller language models

Large language models (LLMs) have enabled new capabilities in few-shot learning, but their massive size makes deployment challenging. To address this, researchers propose a new method called distilling step-by-step, which trains smaller task-specific models using less data while surpassing LLM performance.

The key idea is to extract rationales (intermediate reasoning steps) from an LLM using few-shot chain-of-thought prompting. These rationales are then used alongside labels to train smaller models in a multi-task framework, with tasks for label prediction and rationale generation. Experiments across NLI, QA, and math datasets show this approach reduces training data needs by 75-80% compared to standard fine-tuning.
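The multi-task setup described above can be sketched as follows. This is a minimal illustration under assumptions: the `[label]` and `[rationale]` task prefixes, the `rationale_weight` parameter, and all names are hypothetical choices for this example, and the rationale text stands in for output that would come from an LLM.

```python
from dataclasses import dataclass


@dataclass
class Example:
    question: str
    label: str
    rationale: str  # assumed to come from an LLM via chain-of-thought prompting


def build_multitask_pairs(examples):
    """Turn each example into two (input, target) pairs for the small model:
    one asks for the label, the other asks for the reasoning."""
    pairs = []
    for ex in examples:
        pairs.append((f"[label] {ex.question}", ex.label))
        pairs.append((f"[rationale] {ex.question}", ex.rationale))
    return pairs


def combined_loss(label_loss, rationale_loss, rationale_weight=1.0):
    """Multi-task objective: label loss plus a weighted rationale loss."""
    return label_loss + rationale_weight * rationale_loss


examples = [
    Example(
        question="Tom has 3 apples and buys 2 more. How many does he have?",
        label="5",
        rationale="He starts with 3 and adds 2, so 3 + 2 = 5.",
    )
]
pairs = build_multitask_pairs(examples)
```

Because the rationale is only a training target, the small model can be asked for just the label at inference time, so the extra supervision adds no cost at deployment.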

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’ specifically designed for Engineering Leaders and AI Enthusiasts.

Thanks for reading, and see you on Monday. 😊
