AI Weekly Rundown (October 28 to November 03)

Major AI announcements from Hugging Face, OpenAI, Microsoft, Apple, Google, and more.

Hello Engineering Leaders and AI Enthusiasts!

Another eventful week in the AI realm. Lots of big news from huge enterprises.

In today’s edition:

🤗 Hugging Face released Zephyr-7b-beta, an open-access GPT-3.5 alternative

🎥 Twelve Labs introduces an AI model that understands video

🚀 OpenAI rolled out huge ChatGPT updates

💡 Microsoft’s new AI advances video understanding with GPT-4V

🖊️ US President signed an executive order for AI safety

🎓 Microsoft’s new AI tool in collab with teachers

🤑 Quora’s AI chatbot launches monetization for creators

🗣️ ElevenLabs debuts enterprise AI speech solutions

🤝 Dell partners with Meta to use Llama 2

🍎 Apple’s new AI advancements: M3 chips and AI health coach

🔥 Stability AI’s new features to revolutionize business visuals

🤖 Google’s AI model makes high-resolution 24-hour weather forecasts

🔧 SAP announced SAP Build Code for AI-powered app development

📜 Cohere released Embed v3, its most advanced text embedding model

🧞♂️ Luma AI unveiled Genie, an all-new kind of generative 3D foundation model

Let’s go!

Hugging Face released Zephyr-7b-beta, an open-access GPT-3.5 alternative

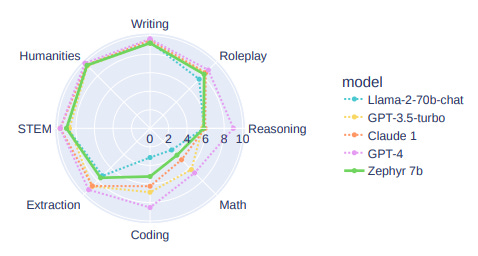

The latest Zephyr-7b-beta from Hugging Face’s H4 team tops all 7B models on chat evals, and even some models 10x its size. It is on par with ChatGPT on AlpacaEval and outperforms Llama2-Chat-70B on MT-Bench.

Zephyr 7B is a series of chat models based on:

Mistral 7B base model

The UltraChat dataset with 1.4M dialogues from ChatGPT

The UltraFeedback dataset with 64k prompts & completions judged by GPT-4

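Training happens in two stages: supervised fine-tuning of Mistral 7B on UltraChat, followed by Direct Preference Optimization (DPO) on UltraFeedback.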
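Zephyr's preference-alignment stage uses Direct Preference Optimization (DPO), which trains directly on chosen-vs-rejected completion pairs like those in UltraFeedback instead of fitting a separate reward model. The sketch below computes the standard per-pair DPO loss in plain Python, assuming you already have the summed log-probability of each completion under the model being trained and under a frozen reference (SFT) model. The function name and the `beta=0.1` default are illustrative assumptions, not taken from Hugging Face's training code.

```python
import math

def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-pair DPO loss: -log(sigmoid(margin)).

    Each *_logp argument is the summed log-probability of a full completion.
    'chosen' is the completion the judge preferred, 'rejected' the other one.
    beta=0.1 is an illustrative default, not a value from the Zephyr recipe.
    """
    # How much more the policy favors 'chosen' over 'rejected',
    # measured relative to the frozen reference model.
    margin = beta * ((policy_chosen_logp - ref_chosen_logp)
                     - (policy_rejected_logp - ref_rejected_logp))
    # Numerically stable softplus(-margin), which equals -log(sigmoid(margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Policy identical to the reference: margin is 0, so the loss is log(2).
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
# Policy favors the chosen answer more than the reference does: lower loss.
print(dpo_loss(-8.0, -20.0, -12.0, -15.0))
```

Minimizing this loss widens the margin, so the model learns to prefer the GPT-4-judged better completion more strongly than the reference model does.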

Twelve Labs introduces an AI model that understands video

Twelve Labs is announcing its latest video-language foundation model, Pegasus-1, along with a new suite of Video-to-Text APIs. The company adopts a “Video First” strategy, focusing its model, data, and systems solely on processing and understanding video data. Its approach rests on four core principles:

Efficient Long-form Video Processing

Multimodal Understanding

Video-native Embeddings

Deep Alignment between Video and Language Embeddings

According to Twelve Labs, Pegasus-1 shows large performance improvements over previous SoTA video-language models and other approaches to video summarization.

OpenAI has rolled out huge ChatGPT updates

You can now chat with PDFs and data files. With new beta features, ChatGPT Plus users can summarize PDFs, ask questions about them, or generate data visualizations from prompts.

You can now use features without manually switching. ChatGPT Plus users no longer have to select modes like Browse with Bing or DALL-E from the GPT-4 dropdown. Instead, ChatGPT will infer what they want from context.

Microsoft’s New AI Advances Video Understanding with GPT-4V

A paper by Microsoft Azure AI introduces “MM-VID”, a system that combines GPT-4V with specialized tools in vision, audio, and speech to enhance video understanding. MM-VID addresses challenges in analyzing long-form videos and complex tasks like understanding storylines spanning multiple episodes.

Experimental results show MM-VID's effectiveness across different video genres and lengths. It uses GPT-4V to transcribe multimodal elements into a detailed textual script, enabling advanced capabilities like audio description and character identification.

US President signed an executive order for AI safety

President Joe Biden has signed an executive order directing government agencies to develop safety guidelines for artificial intelligence. The order aims to create new standards for AI safety and security, protect privacy, advance equity and civil rights, support workers, promote innovation, and ensure responsible government use of the technology.

The order also addresses concerns such as the use of AI to engineer biological materials, content authentication, cybersecurity risks, and algorithmic discrimination. It calls for the sharing of safety test results by developers of large AI models and urges Congress to pass data privacy regulations. The order is seen as a step forward in providing standards for generative AI.

Microsoft’s new AI tool in collab with teachers

Microsoft Research has collaborated with teachers in India to develop an AI tool called Shiksha copilot, which aims to enhance teachers' abilities and empower students to learn more effectively. The tool uses generative AI to help teachers quickly create personalized learning experiences, design assignments, and create hands-on activities.

It also helps curate resources and provides a digital assistant centered around teachers' specific needs. The project is being piloted in public schools and has received positive feedback from teachers who have used it, saving them time and improving their teaching practices. The tool incorporates multimodal capabilities and supports multiple languages for a more inclusive educational experience.

Quora’s AI Chatbot Launches Monetization for Creators

Quora's AI chatbot platform, Poe, is now paying bot creators for their efforts, making it one of the first platforms to monetarily reward AI bot builders. Bot creators can generate income by leading users to subscribe to Poe or by setting a per-message fee.

The program is currently only available to U.S. users. Quora hopes this program will enable smaller companies and AI research groups to create bots and reach the public.

Read the announcement by Adam D'Angelo, Quora CEO.

ElevenLabs Debuts Enterprise AI Speech Solutions

ElevenLabs, a speech technology startup, has launched ElevenLabs Enterprise, a platform offering businesses advanced speech solutions with high-quality audio output and enterprise-grade security. The platform can automate audiobook creation, power interactive voice agents, streamline video production, and enable dynamic in-game voice generation. Enterprise customers get exclusive access to high-quality audio, fast rendering speeds, priority support, and early looks at new features.

ElevenLabs' technology is already used by 33% of S&P 500 companies. Its enterprise-grade security features, including end-to-end encryption and a full privacy mode, are designed to keep content confidential.

Dell Partners with Meta to Use Llama 2

Dell Technologies has partnered with Meta to bring the open-source Llama 2 AI model to enterprise users on-premises. Dell will add support for Llama 2 models to its Dell Validated Design for Generative AI hardware and to its generative AI solutions for on-premises deployments.

Dell will also guide its enterprise customers on deploying Llama 2 and help them build applications using open-source technology. Dell is also using Llama 2 internally, including for Retrieval Augmented Generation (RAG) over its knowledge base.
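To make the RAG idea concrete: the system first retrieves the knowledge-base passages most relevant to a question, then places them in the prompt so the model answers from that context. Below is a minimal, hypothetical sketch of that flow in plain Python. It uses a toy bag-of-words vector as a stand-in for a real embedding model, and the documents and helper names (`embed`, `retrieve`, `build_prompt`) are invented for illustration; it is not Dell's implementation.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augment the question with retrieved context before sending it to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Hypothetical knowledge-base snippets, for illustration only.
docs = [
    "Reset a forgotten BIOS password by contacting support.",
    "Update server firmware from the management console.",
    "Warranty claims require the service tag.",
]
print(build_prompt("How do I update firmware?", docs, k=1))
```

In a production setup, `embed` would be a learned embedding model and the sorted scan would be a vector-database query, but the retrieve-then-prompt order stays the same.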


Apple’s new AI advancements: M3 chips and AI health coach

Apple unveiled M3, M3 Pro, and M3 Max, which it calls the most advanced chips ever built for a personal computer. They feature an enhanced Neural Engine for accelerating ML models that is up to 60% faster than the one in the M1 family, speeding up AI/ML workflows while keeping data on device to preserve privacy. M3 Max, with up to 128GB of unified memory, lets AI developers work with even larger transformer models with billions of parameters.
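A back-of-the-envelope sketch of what 128GB of unified memory means for model size. It assumes weights only (no activations, KV cache, or OS and framework overhead) and treats 1GB as 10^9 bytes, so real headroom is noticeably smaller; the bytes-per-parameter values are the standard storage sizes of each numeric format.

```python
def max_params_billions(memory_gb: float, bytes_per_param: float) -> float:
    """Largest model (in billions of parameters) whose weights fit in memory.

    Weights only: ignores activations, KV cache, and OS/framework overhead.
    """
    total_bytes = memory_gb * 1e9
    return total_bytes / bytes_per_param / 1e9

# Storage cost per parameter for common numeric formats.
FORMATS = {"fp32": 4, "fp16/bf16": 2, "int8": 1, "4-bit": 0.5}

for name, size in FORMATS.items():
    print(f"{name:>9}: ~{max_params_billions(128, size):.0f}B parameters")
```

At fp16, the weights of a model up to roughly 64B parameters could in principle fit, and 4-bit quantization stretches that ceiling further.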


A new AI health coach is in the works. Apple is discussing using AI and data from user devices to craft individualized workout and eating plans for customers. Its next-gen Apple Watch is also expected to include new capabilities for detecting health conditions such as hypertension and sleep apnea.


Stability AI’s new features to revolutionize business visuals

Stability AI shared private previews of upcoming business offerings, including enterprise-grade APIs and new image enhancement capabilities.

Sky Replacer: a new tool that lets users replace the color and aesthetic of the sky in original photos to improve their overall look and feel (built with industries like real estate in mind).

Stable 3D Private Preview: Stable 3D is an automatic process for generating concept-quality textured 3D objects. It lets even non-experts generate a draft-quality 3D model in minutes by selecting an image or illustration or by writing a text prompt.

Stable FineTuning Private Preview: Stable FineTuning provides enterprises and developers the ability to fine-tune pictures, objects, and styles at record speed, all with the ease of a turnkey integration for their applications.

Google’s MetNet-3 makes high-resolution 24-hour weather forecasts

Developed by Google Research and Google DeepMind, MetNet-3 is the first AI weather model to learn from sparse observations and outperform the top operational systems up to 24 hours ahead at high resolutions.

Currently available in the contiguous United States and parts of Europe with a focus on 12-hour precipitation forecasts, MetNet-3 is helping bring accurate and reliable weather information to people in multiple countries and languages.

SAP supercharges development with new AI tool

SAP is introducing SAP Build Code, an application development solution incorporating gen AI to streamline coding, testing, and managing Java and JavaScript application life cycles. This new offering includes pre-built integrations, APIs, and connectors, as well as guided templates and best practices to accelerate development.

SAP Build Code enables collaboration between developers and business experts, allowing for faster innovation. With the power of generative AI, developers can rapidly build business applications using code generation from natural language descriptions. SAP Build Code is tailored for SAP development, seamlessly connecting applications, data, and processes across SAP and non-SAP assets.

Cohere’s advanced text embedding model

Cohere recently introduced Embed v3, its latest and most advanced embedding model. It offers top-notch performance in matching queries to document topics and in assessing content quality. Because Embed v3 can rank high-quality documents first, it is useful for noisy datasets.

The model also uses a compression-aware training method, which reduces the cost of running vector databases. Developers can use Embed v3 to improve search applications and retrieval for RAG systems, helping generative models overcome their limitations by connecting them to company data so they can produce comprehensive summaries. Cohere is releasing new English and multilingual Embed v3 versions, which it reports perform strongly on benchmarks.
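Cohere does not detail its compression-aware training here, but the storage side of the idea is easy to illustrate. One common way to shrink a vector database is scalar quantization: store each float32 embedding dimension as an int8, cutting storage by 4x. The sketch below is a generic, hypothetical example of that technique in plain Python (the helper names and vectors are made up), not Cohere's method.

```python
import math

def quantize_int8(vec: list[float]) -> tuple[list[int], float]:
    """Symmetric scalar quantization of a float vector into the range [-127, 127]."""
    scale = max(abs(x) for x in vec) / 127 or 1.0  # fall back to 1.0 for an all-zero vector
    codes = [max(-127, min(127, round(x / scale))) for x in vec]
    return codes, scale

def dequantize(codes: list[int], scale: float) -> list[float]:
    """Approximate reconstruction of the original floats."""
    return [c * scale for c in codes]

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Made-up 5-dimensional embeddings for a query and a document.
query = [0.12, -0.53, 0.88, 0.05, -0.31]
doc = [0.10, -0.40, 0.91, 0.02, -0.25]

q_codes, _ = quantize_int8(query)
d_codes, _ = quantize_int8(doc)

# The positive per-vector scale cancels in cosine similarity, so it can be
# computed directly on the int8 codes.
print(f"float32: {cosine(query, doc):.4f}  int8: {cosine(q_codes, d_codes):.4f}")
```

Each rounded value is off by at most half a quantization step, which is why similarity scores, and therefore rankings, usually change very little.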

Luma AI’s Genie converts text to 3D

Luma AI has developed an AI tool called Genie that allows users to create realistic 3D models from text prompts. Genie is powered by a deep neural network that has been trained on a large dataset of 3D shapes, textures, and scenes.

🌟 Create 3D things in seconds on Discord

🎨 Prototype in various styles

✨ Customize materials

🆓 Free during research preview

It can learn the relationships between words and 3D objects and generate novel shapes that are consistent with the input.

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’ specifically designed for engineering leaders and AI enthusiasts.

Thanks for reading, and see you on Monday. 😊